There are further ways to safeguard creators and artists, such as Content ID on YouTube.

YouTube’s commitment to developing AI responsibly

AI is creating a wealth of opportunities and enabling artists to express themselves in new and exciting ways. YouTube says it is dedicated to making sure that businesses and artists prosper in this changing environment. This means giving people the tools they need to make full use of AI’s creative potential while keeping control over how their identity, including their voice and face, is represented. To do this, YouTube is creating new likeness management technologies, which will protect them and open up new opportunities down the road.

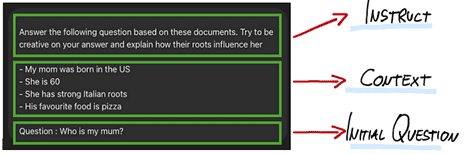

Tools YouTube is creating

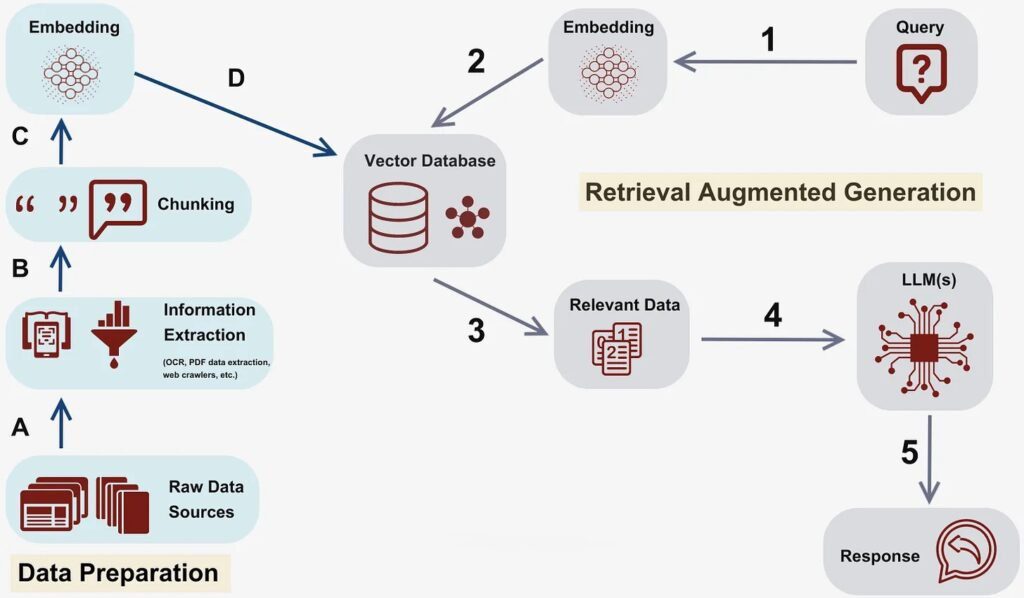

First, partners will be able to automatically detect and manage AI-generated video on YouTube that mimics their singing voices, thanks to new synthetic-singing identification technology created by Google within Content ID. YouTube is working with its partners to refine this technology, and a pilot program is scheduled for early next year.

Second, YouTube is working to build new technologies that will allow people in a range of fields, from artists and athletes to actors and creators, to detect and manage AI-generated content on YouTube that features their faces. This, together with its most recent privacy updates, will provide a powerful toolkit for controlling how AI is used to represent individuals on YouTube.

These two new capabilities build on YouTube’s history of creating technology-driven approaches to managing rights issues at scale. Since its launch in 2007, Content ID has given rightsholders on YouTube granular control over their entire catalogs, processing billions of claims annually and bringing in billions more in revenue for artists and creators through the reuse of their work. YouTube says it is determined to bring the same degree of protection and autonomy to the era of AI.

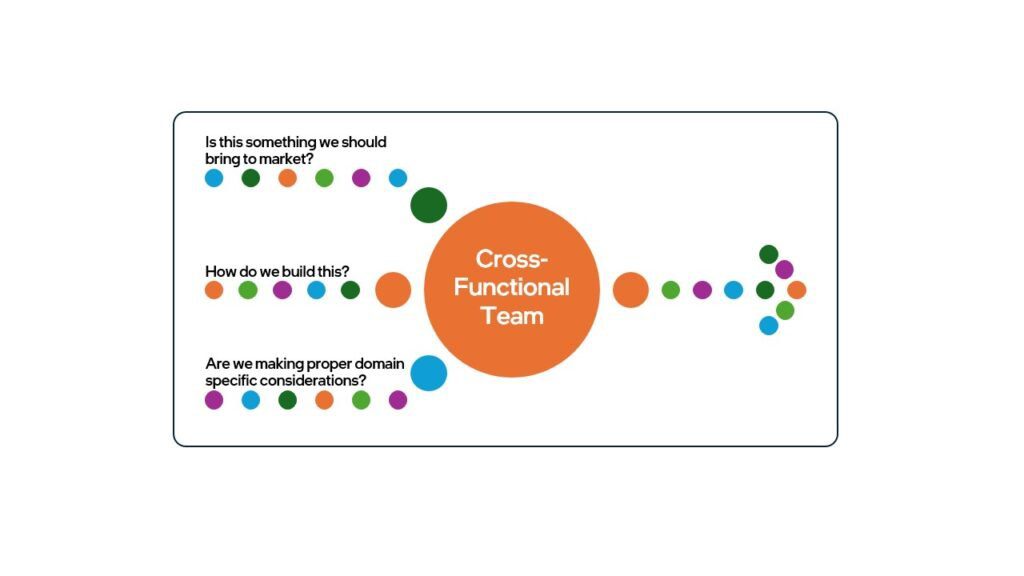

Preventing unwanted access to material and giving users more control

As it has done for many years, YouTube uses content uploaded to the platform to improve the experience for creators and viewers on both YouTube and Google, including through AI and machine learning tools. It does this in line with the terms that creators agree to. This includes supporting its Trust & Safety operations, improving its recommendation systems, and building new generative AI features like auto dubbing. Going forward, it remains committed to making sure that YouTube content is used responsibly across Google or YouTube in developing its AI-powered tools.

Regarding third parties, including those who would attempt to scrape YouTube content, YouTube has made it clear that accessing creator content without authorization violates its Terms of Service and undermines the value it provides to creators in return for their work. It will keep taking steps to make sure that third parties respect these terms, including continued investment in the systems that detect and prevent unauthorized access, up to and including blocking access for scrapers.

That said, YouTube recognizes that as the field of generative AI develops, creators may want more control over how they partner with third-party companies to develop AI tools. For this reason, it is developing new ways to give YouTube creators a say over how third parties may use their content on the platform. It expects to share more later this year.

Using Community Guidelines & AI Tools

The experimental Dream Screen for Shorts and other new generative AI tools on YouTube give creators new and exciting ways to express their creativity and engage with their audience. Creators remain in control of the creative process: they direct the tools’ output and choose what content to share.

Google’s commitment to building a responsible and safe community includes how it handles AI-generated content. AI-generated content has to follow the Community Guidelines, just like any other YouTube video. Ultimately, creators are responsible for making sure their published work complies with these guidelines, regardless of how it was made.

Safety features have been added to the AI tools to help creators stay within these guidelines and to prevent possible abuse. This means that prompts that break the rules or touch on sensitive topics may be blocked. Although the main goal is to inspire creativity, creators are urged to review AI-generated work carefully before posting it, just as they would in any other situation. Google recognizes that it may not always get it right, especially in the early days of new products, so it welcomes feedback from creators.

Fostering creativity in people

YouTube believes that as AI develops, it should enhance human creativity rather than replace it. It says it is determined to work with its partners to make sure their voices are heard in future developments, and it will keep building safeguards to address concerns and achieve shared goals. From the beginning, it has focused on giving businesses and artists the tools they need to build vibrant communities on YouTube, and it continues to prioritize an environment that encourages responsible innovation.