The recent success of large language models (LLMs) has encouraged the market to think more ambitiously about how AI could change a wide range of business operations. At the same time, consumers and regulators have become increasingly concerned about the security of their data and of the AI models themselves. To give consumers, businesses, and regulators confidence in the use of AI, we must implement AI Governance throughout the data lifecycle. But what does this look like in practice?

At their core, AI models are fairly simple: they take in data, learn patterns from it, and use those patterns to produce an output. The same is true of sophisticated LLMs such as ChatGPT and Google Bard. Because of this, before we can manage and regulate the deployment of AI models, we must first focus on governing the data they are trained on. This data governance requires us to understand the source, sensitivity, and lifecycle of all the data we use. It is the cornerstone of any AI Governance process and is essential for reducing a variety of business risks.

Risks of using sensitive data to train LLMs

LLMs can be trained on private data to serve company-specific use cases. For instance, a business might use ChatGPT to build a customized model trained on its CRM sales data. This model could then be deployed as a Slack chatbot to help sales teams with questions such as “How many opportunities has Product X won in the last year?” or “Update me on the opportunity for Product Z with Company Y.”

Such LLMs can easily be tailored to a wide range of customer service, HR, or marketing use cases. They may also come to supplement legal and medical advice, making LLMs a primary diagnostic tool for healthcare professionals. The problem is that these use cases require training LLMs on private, sensitive information, which is inherently risky. These risks include:

1. Privacy and re-identification risk

AI models learn from their training data, but what if that data is confidential or sensitive? Given enough data, individuals can be identified either directly (for example, by name or email address) or indirectly (by combining attributes such as postcode, birth year, and job title). So if we train an LLM on confidential information about a company’s customers, deploying that model could, in some circumstances, lead to the disclosure of sensitive data.
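To make indirect re-identification concrete, the sketch below computes the k-anonymity of a small table: the size of the smallest group of records that share the same combination of quasi-identifiers. A group of size 1 means that combination singles out one person even after names and emails are removed. The column names, sample records, and choice of quasi-identifiers are hypothetical, and k-anonymity is only one of several ways to measure re-identification risk.

```python
from collections import Counter

# Hypothetical customer records with direct identifiers (names, emails) already removed.
records = [
    {"postcode": "SW1A", "birth_year": 1985, "job": "engineer", "balance": 1200},
    {"postcode": "SW1A", "birth_year": 1985, "job": "engineer", "balance": 560},
    {"postcode": "EC2M", "birth_year": 1972, "job": "surgeon", "balance": 98000},
]

# Attributes that are harmless alone but identifying in combination (an assumption).
QUASI_IDENTIFIERS = ("postcode", "birth_year", "job")


def group_sizes(rows, quasi_ids):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_ids) for row in rows)


def k_anonymity(rows, quasi_ids):
    """Return k: the size of the smallest group of rows with identical quasi-identifiers."""
    return min(group_sizes(rows, quasi_ids).values())


def unique_combinations(rows, quasi_ids):
    """Return the quasi-identifier combinations that single out exactly one row."""
    return [combo for combo, n in group_sizes(rows, quasi_ids).items() if n == 1]


print(k_anonymity(records, QUASI_IDENTIFIERS))          # 1
print(unique_combinations(records, QUASI_IDENTIFIERS))  # [('EC2M', 1972, 'surgeon')]
```

Here the third customer is re-identifiable from postcode, birth year, and job alone, which is exactly the kind of record an LLM trained on this table could later help expose.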

2. In-model learning from user input

Many simpler AI models have two distinct phases: training and deployment, with training stopping once the model is deployed. LLMs are somewhat different: they take your conversation with them into account, learning from it and shaping their replies accordingly.

This makes managing model input data much harder, because we must now consider not only the initial training data but also the data sent every time the model is queried. What if a user gives the conversational model private information? Can we recognize that sensitivity so that we can stop the model from using it in other contexts?
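One way to act on that question is to screen each prompt before it reaches the model. The following is a minimal sketch that uses a few regular expressions to detect and redact common identifiers (email addresses, US Social Security numbers, and card-like numbers) and reports which kinds it found. The patterns and the `screen_prompt` helper are hypothetical and deliberately simple; a production system would rely on a dedicated PII-detection service with far broader coverage.

```python
import re

# Hypothetical patterns, applied in this order. Real PII detection needs far broader
# coverage (names, addresses, national IDs in many formats, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),      # US Social Security number format
    "CARD": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),  # 13-16 digits, optional separators
}


def screen_prompt(prompt: str) -> tuple[str, list[str]]:
    """Redact every pattern match and return (redacted_prompt, labels_found)."""
    found = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


redacted, labels = screen_prompt(
    "Customer jane.doe@example.com paid with card 4111 1111 1111 1111."
)
print(redacted)  # Customer [EMAIL] paid with card [CARD].
print(labels)    # ['EMAIL', 'CARD']
```

Only the redacted prompt would be forwarded to the LLM; the returned labels could be logged for audit or used to block the request outright.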

3. Access and security risk

A model is, to some extent, as sensitive as the data it was trained on. We have well-established mechanisms for controlling access to data: monitoring who is accessing which data and dynamically masking it depending on the context. Security for AI deployments, by contrast, is still maturing. Although a growing number of solutions exist in this area, we still cannot fully control the sensitivity of a model’s output based on the role of the person using it (for example, having the model recognize that a particular output may be sensitive and then reliably adjust that output depending on who is querying the LLM). As a result, these models are highly susceptible to leaking any private data used in their development.
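Dynamic masking for data typically works by checking the requester’s role against a policy before values are returned. The sketch below applies that idea to a model’s structured output, assuming (hypothetically) that the application already knows which fields in a response are sensitive. The policy, roles, and field names are illustrative; the harder problem the paragraph describes, recognizing sensitivity in free-form LLM text, is not solved here.

```python
# Hypothetical policy: the roles allowed to see each sensitive field in clear text.
# Fields not listed here are treated as non-sensitive.
POLICY = {
    "customer_email": {"account_manager", "privacy_officer"},
    "contract_value": {"account_manager", "sales_director"},
}
REDACTED = "[REDACTED]"


def apply_output_policy(response: dict[str, str], role: str) -> dict[str, str]:
    """Return a copy of a structured model response, masking fields the role may not see."""
    result = {}
    for field_name, value in response.items():
        allowed_roles = POLICY.get(field_name)
        if allowed_roles is None or role in allowed_roles:
            result[field_name] = value     # non-sensitive field, or authorized caller
        else:
            result[field_name] = REDACTED  # sensitive field, unauthorized caller
    return result


answer = {
    "opportunity": "Product Z / Company Y",
    "stage": "Negotiation",
    "contract_value": "250000",
    "customer_email": "buyer@company-y.example",
}
print(apply_output_policy(answer, "sales_rep"))
```

The same answer is returned in full to an account manager but with both sensitive fields masked for a sales representative, which is the per-role behavior that free-text LLM output currently makes hard to guarantee.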

4. Intellectual property risk

What happens if we train a model on all of Drake’s songs and the model then starts producing Drake knockoffs? Is the model infringing Drake’s rights? And can anyone prove that a model isn’t in some way copying their work?

Regulators are still working to understand this issue, but it could significantly affect any generative AI that learns from creative works protected by intellectual property. We expect it may lead to significant litigation in the future, which organizations will need to avoid by carefully respecting the intellectual property rights of all data used in training.

5. Consent and DSAR (data subject access request) risk

Consent is one of the central principles of modern data privacy legislation. Customers must give permission for their data to be used and must be able to have it deleted. This creates a particular problem for AI.

When confidential customer data is used to train an AI model, the model may later be used to expose that data. If a customer asks the company to stop using their data (a requirement of GDPR), the model would effectively need to be decommissioned and retrained without the revoked data.
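Because retraining is the remedy, it helps to build every training set from a consent-filtered snapshot and to record what went into it. The following is a minimal sketch, assuming a hypothetical in-memory consent register keyed by customer ID: it excludes records without current consent, returns a manifest of included and excluded customers, and uses those manifests to find which model versions must be retrained after a withdrawal. The record layout and function names are illustrative, not any product’s API.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingManifest:
    """Which customers' records went into (or were kept out of) one training run."""
    included: list[str] = field(default_factory=list)
    excluded: list[str] = field(default_factory=list)


def build_training_set(records: list[dict], consent: dict[str, bool]):
    """Keep only records whose customer currently consents, logging every decision."""
    manifest = TrainingManifest()
    rows = []
    for record in records:
        customer_id = record["customer_id"]
        if consent.get(customer_id, False):  # unknown consent is treated as no consent
            rows.append(record)
            manifest.included.append(customer_id)
        else:
            manifest.excluded.append(customer_id)
    return rows, manifest


def models_to_retrain(manifests: dict[str, TrainingManifest], customer_id: str) -> list[str]:
    """Return the model versions whose training data included this customer."""
    return [version for version, m in manifests.items() if customer_id in m.included]


records = [{"customer_id": "c1", "text": "note 1"}, {"customer_id": "c2", "text": "note 2"}]
consent = {"c1": True, "c2": True}
_, manifest_v1 = build_training_set(records, consent)

consent["c2"] = False  # customer c2 withdraws consent
rows_v2, manifest_v2 = build_training_set(records, consent)

print(models_to_retrain({"v1": manifest_v1, "v2": manifest_v2}, "c2"))  # ['v1']
```

Keeping a manifest per model version turns “which models used this customer’s data?” into a lookup rather than an investigation.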

For LLMs to be usable as enterprise software, their training data must be governed, so that businesses can trust the security of that data and have an audit trail of how the LLM consumed it.

Data management for LLMs

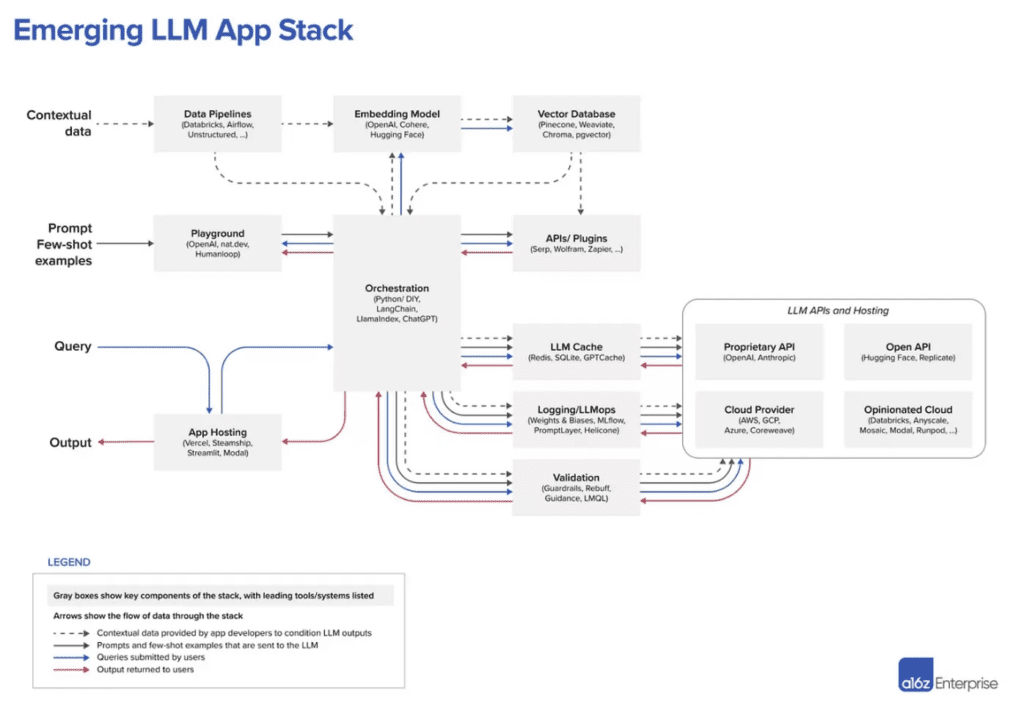

This essay by a16z (see figure below) is the clearest explanation of LLM architecture that I have seen. The “contextual data pipelines” section at the top left is well done; however, as someone who spends all my time on data governance and privacy, I notice that data governance is absent.

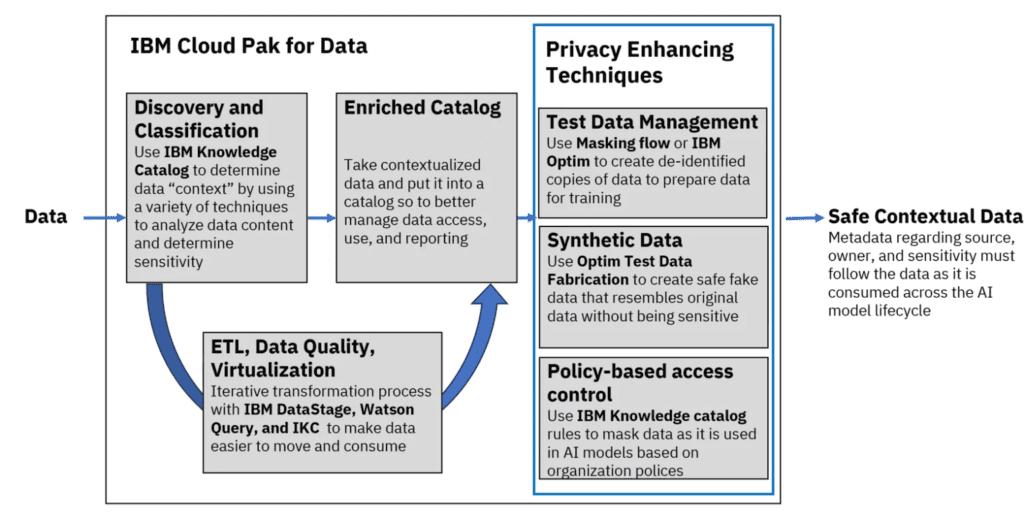

A data governance solution powered by IBM Knowledge Catalog offers a number of capabilities for improved data discovery, automated data quality, and data protection. With it, you can:

- Automatically discover data and add business context to ensure a consistent understanding across the organization

- Create an auditable data inventory by classifying data to enable self-service data discovery

- Identify sensitive data and proactively protect it to meet data privacy and regulatory requirements
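Tools such as IBM Knowledge Catalog automate this kind of discovery and classification. Purely to illustrate the idea, here is a toy rule-based classifier (not the IBM Knowledge Catalog API) that tags columns as sensitive by inspecting their names and sample values, and emits one inventory entry per column. The rules, tag names, and table name are hypothetical.

```python
import re

# Hypothetical rules: (tag, column-name pattern, sample-value pattern or None).
RULES = [
    ("EMAIL", re.compile(r"e-?mail", re.I), re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$")),
    ("PHONE", re.compile(r"phone|mobile", re.I), re.compile(r"^\+?[\d ()-]{7,}$")),
    ("PERSON_NAME", re.compile(r"(first|last|full)_?name", re.I), None),
]


def classify_column(name: str, samples: list[str]) -> list[str]:
    """Tag a column if its name, or every one of its sample values, matches a rule."""
    tags = []
    for tag, name_pattern, value_pattern in RULES:
        name_hit = bool(name_pattern.search(name))
        value_hit = (
            value_pattern is not None
            and len(samples) > 0
            and all(value_pattern.match(s) for s in samples)
        )
        if name_hit or value_hit:
            tags.append(tag)
    return tags


def build_inventory(table: str, columns: dict[str, list[str]]) -> list[dict]:
    """Produce one auditable inventory entry per column."""
    inventory = []
    for column, samples in columns.items():
        tags = classify_column(column, samples)
        inventory.append({"table": table, "column": column, "tags": tags, "sensitive": bool(tags)})
    return inventory


inventory = build_inventory("crm.contacts", {
    "contact": ["ann@example.com", "bo@example.org"],
    "full_name": ["Ann Lee", "Bo Chen"],
    "region": ["EMEA", "APAC"],
})
for entry in inventory:
    print(entry["column"], entry["tags"])
```

Note that the `contact` column is caught by its values even though its name gives nothing away, which is why discovery tools inspect content as well as metadata.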

The final, and frequently overlooked, stage in this process is the application of privacy-enhancing techniques. How can we remove sensitive information before giving data to AI? This can be broken down into three steps:

1. Determine which sensitive data elements need to be removed (hint: this is decided during data discovery and depends on the “context” of the data).

2. Remove the sensitive information while preserving the data’s usefulness (for example, by maintaining referential integrity and keeping statistical distributions broadly similar).

3. Record what was done in steps 1 and 2 so that this information accompanies the data as models consume it. This tracking is what makes the process auditable.
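The three steps above can be sketched together in a few lines. This is a minimal illustration, not any specific product’s method: it assumes step 1 has already flagged the sensitive columns, replaces each sensitive value with a keyed hash (HMAC-SHA256) so that the same customer ID maps to the same token in every table and joins still work, and appends an audit entry describing the transformation. The key handling, table and column names, and audit format are hypothetical, and preserving statistical distributions is not covered here.

```python
import hashlib
import hmac
from datetime import datetime, timezone

# Hypothetical key; in practice it would be fetched from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Deterministic keyed token: equal inputs give equal tokens, preserving joins."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def protect_table(table_name, rows, sensitive_columns, audit_log):
    """Step 2: tokenize sensitive columns. Step 3: record what was done."""
    protected = []
    for row in rows:
        new_row = dict(row)  # leave the source rows untouched
        for column in sensitive_columns:
            if new_row.get(column) is not None:
                new_row[column] = pseudonymize(str(new_row[column]))
        protected.append(new_row)
    audit_log.append({
        "table": table_name,
        "columns": sorted(sensitive_columns),
        "technique": "HMAC-SHA256 pseudonymization",
        "rows": len(rows),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    })
    return protected


audit = []
customers = [{"customer_id": "C-001", "name": "Ann Lee", "segment": "SMB"}]
opportunities = [{"customer_id": "C-001", "product": "Product Z", "stage": "Won"}]

safe_customers = protect_table("customers", customers, ["customer_id", "name"], audit)
safe_opps = protect_table("opportunities", opportunities, ["customer_id"], audit)

print(safe_customers[0]["customer_id"] == safe_opps[0]["customer_id"])  # True
```

A keyed hash is used instead of a plain hash so that someone without the key cannot rebuild the mapping by hashing candidate IDs themselves.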

Create a governed foundation for generative AI with IBM watsonx and data fabric

With IBM watsonx, IBM has moved quickly to give ‘AI builders’ access to the potential of generative AI. IBM watsonx.ai is an enterprise-ready studio that combines generative AI based on foundation models with traditional machine learning (ML). The platform also includes watsonx.data, a fit-for-purpose data store built on an open lakehouse architecture, which provides querying, governance, and open data formats for accessing and sharing data across the hybrid cloud.

A solid data foundation is essential for AI applications to succeed. With IBM Data Fabric, clients can build the right data infrastructure for AI by collecting, preparing, and organizing data so that it is readily accessible to AI developers using watsonx.ai and watsonx.data.

IBM offers a composable data fabric solution as part of an open and extensible data and AI platform that can be deployed on third-party clouds. It provides capabilities for entity resolution, data governance, data integration, data observability, data lineage, data quality, and data privacy management.

Get started with data governance for enterprise AI

AI models, and LLMs in particular, will be among the most transformative technologies of the coming decade. As new AI legislation sets out rules for how AI may be used, it is not enough to manage and govern the models themselves: it is equally crucial to manage and govern the data fed into them.
