When you hear “AI,” do you think of the Terminator or Data from “Star Trek: The Next Generation”? No example of artificial general intelligence exists today, but AI has produced a form of machine intelligence, trained on massive amounts of public, proprietary, and sensor data.

As we begin our AI on the Edge series, we’d like to define “AI,” a term that can encompass machine learning, neural networks, and deep learning. Don’t be embarrassed if you can’t say exactly what AI is: the question “What is AI, exactly?” calls for a more technical and nuanced answer than it might seem.

The beginnings of AI research

Knowing the history of AI makes answering “what is AI” easier

As capable electronic computers were built in the 1950s, AI began to move out of science fiction. Alan Turing explored the mathematical possibility of AI, proposing that machines, like humans, could use available information and reasoning to solve problems and make judgements.

In his 1950 paper “Computing Machinery and Intelligence,” he described what became known as the Turing Test for evaluating intelligent machines. If a machine can hold a text-based conversation with a human well enough that the human cannot reliably tell it apart from another person, the test suggests the machine is “thinking.” This simplified test made the idea of a “thinking machine” more concrete and realistic.

AI proof of concept

AI research was hampered by the limited and expensive computers of the 1950s, but work continued. Five years after Turing’s paper, Logic Theorist, arguably the first AI programme, provided a proof of concept. It was presented at the 1956 Dartmouth Summer Research Project on Artificial Intelligence, a landmark meeting that brought together prominent academics from diverse fields for an open-ended discussion of AI, a term coined by the host, John McCarthy, then a mathematics professor at Dartmouth.

As computers became faster, cheaper, and able to store more information, AI research flourished from 1957 through 1974. Machine learning algorithms improved, and researchers got better at knowing which algorithm to apply to a given problem. But mainstream applications remained scarce, and AI research funding dried up. The optimistic vision of 1960s and 1970s AI researchers such as Marvin Minsky seemed doomed.

AI advancements today

In the 1980s, advances in computing and data storage revived AI research, and new algorithms and investment fueled an AI renaissance. John Hopfield and David Rumelhart popularised “deep learning” techniques that let computers learn from experience.

Notable milestones followed. In 1997, IBM’s Deep Blue defeated world chess champion and grandmaster Garry Kasparov, the first time a reigning world chess champion lost a match to a computer. The same year, speech-recognition software from Dragon Systems became widely available. In 2005, Stanford’s robot vehicle won the DARPA Grand Challenge by driving autonomously for 131 miles along an unrehearsed desert trail. Two years later, a Carnegie Mellon University vehicle won the DARPA Urban Challenge by autonomously travelling 55 miles in an urban environment while avoiding traffic hazards and obeying traffic laws. In February 2011, IBM’s Watson question-answering system defeated the two most successful “Jeopardy!” champions in an exhibition match.

Though exciting, these public demonstrations were not mainstream AI solutions, although the DARPA competitions did spur ongoing autonomous vehicle development. What launched the explosion of AI applications was math accelerators such as GPUs, DSPs, FPGAs, and NPUs, which increased processing speeds by orders of magnitude over CPUs: where a CPU handles tens of threads at once, these accelerators can run hundreds or thousands simultaneously. AI researchers also gained access to massive training data from cloud services and public data sets.

In 2018, large language models (LLMs) trained on massive amounts of unlabeled data began to serve as foundation models for many different tasks. More recent models, such as OpenAI’s GPT-3 (2020) and DeepMind’s Gato (2022), advanced AI further. These generative AI models have broadened the range of problems AI can address: given an input prompt, generative AI can create new text, images, or other content. Earlier AI applications focused on tasks such as detecting defective parts on a production line, classifying faces in a video feed, and predicting the path of an autonomous vehicle.

How AI works and technological terminology

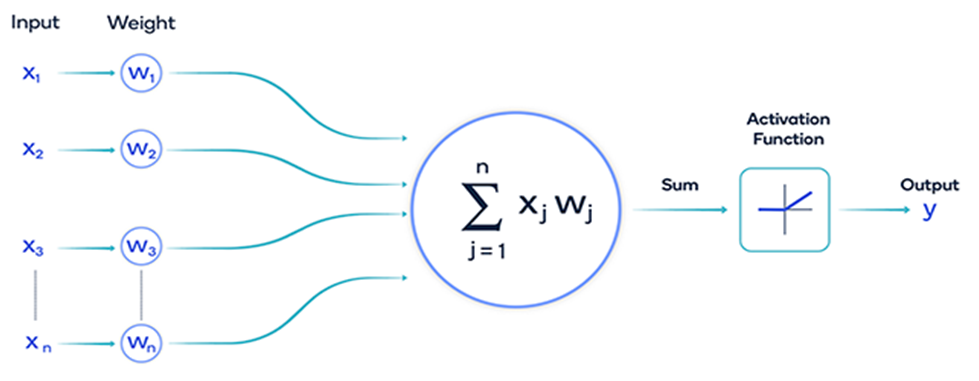

Modern AI uses artificial neurons modelled on those in animal and human brains. Layers of artificial neurons each process their input and feed the result to the next layer, forming a neural network. Each neuron multiplies each of its many inputs by a weight, sums the weighted inputs together with a bias, and passes the result to an activation function. An activation function such as ReLU (rectified linear unit) gives a deep learning model its nonlinearity. The outputs of the activation functions feed the next layer of the network. The collective weights and the biases of the summation functions are the model’s parameters.
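The neuron described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: the weights, bias, and inputs are made-up values, and ReLU is used because it is the activation function named above. The hypothetical helpers `neuron` and `dense_layer` show that a layer is simply many neurons reading the same inputs.

```python
def relu(x):
    """Rectified linear unit: passes positive values through, clamps negatives to 0."""
    return max(0.0, x)


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then ReLU."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # summation function
    return relu(z)  # activation function adds nonlinearity


def dense_layer(inputs, weight_rows, biases):
    """A layer is many neurons reading the same inputs; its outputs feed the next layer."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Illustrative (made-up) parameters: 3 inputs feeding a 2-neuron layer.
x = [1.0, 2.0, 3.0]
W = [[0.5, -0.25, 0.1],   # weights of neuron 0
     [-1.0, 0.5, -0.2]]   # weights of neuron 1
b = [0.2, 0.1]
print(dense_layer(x, W, b))
```

The second neuron’s weighted sum is negative, so ReLU outputs zero for it: that thresholding is the nonlinearity the paragraph refers to.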

Adding more interconnected neurons per layer, or more layers, can improve a neural network’s accuracy, but at the expense of performance, power consumption, and model size.
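To make the size trade-off concrete, the sketch below counts the parameters of a fully connected network (one weight per connection plus one bias per neuron) and estimates their storage at 4 bytes per 32-bit float. The layer sizes are hypothetical examples, and real models contain other layer types, so treat the numbers as illustrative.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases for a fully connected network.

    layer_sizes[0] is the input width; each later entry is the neuron count of a layer.
    Every neuron has one weight per input from the previous layer, plus one bias.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def fp32_bytes(layer_sizes):
    """Approximate storage for the parameters at 4 bytes each (32-bit floats)."""
    return 4 * dense_param_count(layer_sizes)


# Hypothetical networks with a 784-value input (e.g. a 28x28 image) and 10 outputs.
for sizes in ([784, 128, 10], [784, 512, 10], [784, 512, 512, 10]):
    print(sizes, dense_param_count(sizes), "params,", fp32_bytes(sizes), "bytes")
```

For these sizes, widening the hidden layer from 128 to 512 neurons roughly quadruples the parameter count, and adding a second 512-neuron layer grows it again: the accuracy-versus-size trade-off in miniature.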

Deep learning’s depth

In “deep learning,” “deep” refers to the many layers in the network. GPUs, NPUs, and other math accelerators increase computational capacity a thousand-fold or more, making backpropagation practical for multi-layer networks and cutting training times from months to days.
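Depth is repetition: the output of one layer becomes the input of the next. The sketch below chains several fully connected layers. The layer widths, the uniform random initialisation, and the seed are assumptions chosen for illustration, and the untrained network’s outputs carry no meaning until its parameters are learned.

```python
import random


def relu(v):
    """Apply ReLU element-wise to a list of values."""
    return [max(0.0, x) for x in v]


def dense(v, weights, biases):
    """Weighted sums for one fully connected layer (no activation)."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an assumed, simple initialisation)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Pass x through every layer in turn: ReLU on hidden layers, raw scores at the end."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return x


rng = random.Random(0)
sizes = [4, 8, 8, 8, 3]  # input width 4, three hidden layers of 8, 3 outputs
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
print(len(layers), "layers ->", forward([0.1, 0.2, 0.3, 0.4], layers))
```

Making the network deeper here means adding entries to `sizes`; each extra layer adds parameters and computation, which is why accelerators matter for training.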

The parameters of digital neurons are learned. Humans learn from life and through our senses; AI has no sensations or experiences of its own, so it learns from digital imprints of them, that is, data.

Neural networks are trained using examples. In supervised learning, each example pairs a known “input” with a known “output”; training on these pairs builds probability-weighted correlations in the digital neurons, which are stored in the neural network’s “model.”
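In supervised classification, a network’s raw output scores are commonly converted into probabilities with a softmax function and compared with the known label using cross-entropy loss. The sketch below assumes that common setup; the three-class scores are made-up values rather than output from a real model.

```python
import math


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Loss for one labelled example: small when the known class gets high probability."""
    return -math.log(probs[label])


# Made-up scores a network might output for one input, over three classes.
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)
print([round(p, 3) for p in probs])
print("loss if the known label is class 0:", cross_entropy(probs, 0))
print("loss if the known label is class 2:", cross_entropy(probs, 2))
```

The loss is lower when the known class already receives a high probability, which is the signal training uses to adjust the weights.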

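To train a neural network on an example, the network’s output (usually a prediction) is compared with the desired output. The network is then adjusted iteratively to minimise the difference between the prediction and the intended output until the target accuracy is reached. Backpropagation is the algorithm that makes this possible: it propagates the error backwards through the network’s layers to work out how much each weight and bias should change.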
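The sketch below shows this iterative minimisation in the smallest possible case: a single linear neuron fitted with gradient descent to hypothetical data drawn from y = 2x + 1. The gradient is derived by hand with the chain rule; in a multi-layer network, backpropagation applies the same chain rule layer by layer, and deep learning frameworks compute it automatically. The learning rate and epoch count are assumed values that converge for this data.

```python
def train_linear_neuron(examples, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by repeatedly nudging w and b to shrink the mean squared error."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, target in examples:
            prediction = w * x + b          # forward pass
            error = prediction - target     # difference from the desired output
            # Chain rule for loss = mean(error**2): d/dw = 2*error*x, d/db = 2*error
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        w -= lr * grad_w                    # step against the gradient
        b -= lr * grad_b
    return w, b


# Hypothetical training data generated from y = 2x + 1.
data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
w, b = train_linear_neuron(data)
print(round(w, 3), round(b, 3))
```

The learned weight and bias approach 2 and 1, the values that generated the data; a learning rate that is too large would instead make the updates overshoot and diverge.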

A neural network learns from new data and examples. To represent complex data, the model must be large, and training a large model takes many examples to improve its accuracy and capabilities.

Once trained, the neural network model can interpret new inputs and produce outputs. Using a trained model to analyse new data is called inference, and it is how AI models are put to work in real life.

Training typically uses 32-bit or 16-bit floating-point math, whereas models used for inference can often be scaled down to 8-bit or 4-bit integer precision to save memory and power and to improve performance, with little or no loss of accuracy. This process is called quantization: going from 32-bit to 8-bit values shrinks a model to 25% of its original size, a 75% reduction.
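A minimal sketch of one common scheme, symmetric per-tensor 8-bit quantization, is shown below. It assumes a single scale factor for all weights and made-up weight values; production toolchains often use per-channel scales, zero points, and calibration data, none of which is shown. The standard `struct` module is used only to measure the packed byte sizes.

```python
import struct


def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to int8 (one scale for all values)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate floats for comparison."""
    return [x * scale for x in q]


weights = [0.82, -1.37, 0.004, 0.5, -0.25, 1.1, -0.9, 0.03]  # made-up fp32 weights
q, scale = quantize_int8(weights)

fp32_size = len(struct.pack(f"{len(weights)}f", *weights))  # 4 bytes per value
int8_size = len(struct.pack(f"{len(q)}b", *q))              # 1 byte per value
max_err = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))

print(q)
print(f"{fp32_size} -> {int8_size} bytes ({int8_size / fp32_size:.0%} of original)")
print(f"largest rounding error: {max_err:.4f} (at most scale/2 = {scale / 2:.4f})")
```

Each int8 code takes one byte instead of four, so the packed weights occupy 25% of their original size, and the rounding error per weight is bounded by half the scale factor.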

Different neural network architectures offer different trade-offs in performance, efficiency, and capacity. Convolutional neural networks (CNNs), recurrent neural networks (RNNs), and long short-term memory networks (LSTMs) are commonly used for voice recognition, image recognition, autonomous driving, and other detection, classification, and prediction applications.
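The core operations behind two of these architectures can be sketched briefly. The hypothetical `conv1d` slides one small kernel along a signal, as a CNN layer does (deep learning libraries typically implement this without flipping the kernel, i.e. as cross-correlation), and the hypothetical `rnn` carries a hidden state from one step of a sequence to the next. The kernel and weights are made-up values. LSTMs, which add gates to control what the state remembers, are not shown.

```python
import math


def conv1d(signal, kernel):
    """'Valid' 1D convolution as used in CNNs (cross-correlation: kernel not flipped).

    The same small kernel slides along the input, so one set of weights detects a
    pattern wherever it appears.
    """
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def rnn(sequence, w_x, w_h, b):
    """A minimal single-unit recurrent layer: each step mixes the new input with the
    hidden state carried over from the previous step."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Edge-detector kernel (made-up values): responds where the signal changes.
print(conv1d([0, 0, 1, 1, 1, 0], [-1, 1]))
# Hypothetical weights: later states stay non-zero although later inputs are zero.
print(rnn([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```

The convolution reuses a handful of weights across the whole input, which suits images and audio; the recurrent state gives the network memory across a sequence, which suits speech and other time series.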

Diffusion models, a popular class of latent variable models, can denoise, inpaint, upscale (super-resolution), and generate images, and they did much to popularise generative AI. They are trained by gradually adding noise to many images and learning to reverse that process; to generate a new image, the model starts from random noise and removes it step by step, guided by a text prompt. OpenAI’s text-to-image model DALL-E 2 is one example. Stable Diffusion, often combined with ControlNet for finer control over the output, is another popular text-to-image approach. Because they connect text and images, such models are sometimes called language-vision models (LVMs).
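The forward (noising) half of a diffusion model can be sketched with the closed-form expression used in denoising diffusion probabilistic models (DDPM), x_t = sqrt(abar_t)·x_0 + sqrt(1 − abar_t)·noise. The linear noise schedule below follows the values from the DDPM paper, and the four-value “image” is a toy assumption. The learned reverse process, a network trained to predict and remove the noise, is what a real model adds and is not shown.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances; the defaults follow DDPM's linear schedule."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def alpha_bars(betas):
    """Cumulative product of (1 - beta): how much of the original signal survives at step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0, t, abar, rng):
    """Forward diffusion: jump straight to step t with
    x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise, noise ~ N(0, 1)."""
    a = abar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(42)
abar = alpha_bars(linear_beta_schedule())
image = [1.0, -1.0, 0.5, -0.5]  # a toy 4-"pixel" image scaled to [-1, 1]
for t in (0, 250, 999):
    print(t, round(abar[t], 5), [round(v, 2) for v in add_noise(image, t, abar, rng)])
```

By the last step almost none of the original signal survives, so the noisy sample is close to pure Gaussian noise; generation runs the learned reverse of this process, starting from such noise.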

Many LLMs, such as Llama 2, GPT-4, and BERT, are based on the Transformer neural network architecture introduced by Google researchers in 2017. These complex models are driving the next generation of generative AI, which creates new content. As AI research continues, architectures, algorithms, and methodologies will keep changing.
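At the heart of the Transformer is scaled dot-product attention, softmax(Q·K^T / sqrt(d_k))·V. The pure-Python sketch below computes it for three hypothetical tokens with made-up query, key, and value vectors. Real Transformers also learn the projections that produce Q, K, and V, run several attention heads in parallel, and apply masking, none of which is shown.

```python
import math


def softmax(xs):
    """Normalise a list of scores into probabilities."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each query row produces a weighted average of the value rows, weighted by how
    well that query matches each key.
    """
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


# Three hypothetical tokens with 2-dimensional queries, keys and values.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out, w = attention(Q, K, V)
for row in w:
    print([round(x, 3) for x in row])
```

Because every token attends to every other token through plain matrix multiplications, attention maps well onto the parallel math accelerators discussed earlier.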

The evolution toward real-time AI everywhere

Deep learning trained neural networks with more layers of neurons, letting them store more information and express more complex functions. Parallel computing and better methods reduced the computational overhead of training neural network models.

Running neural network training and inference on DSPs, FPGAs, GPUs, and NPUs made deep neural networks easier to implement. Another milestone was the vast amount of data that cloud services and public data sets made available for large-scale AI.

Complex AI models need data such as text, music, photos, and videos, and each of these data types can be used to train neural networks. This wealth of content makes neural networks smarter and more capable.

Bringing AI trained on such data beyond the cloud and onto edge devices requires condensing it into smaller models. This is where the democratisation of AI begins.
