The Features of Amazon ElastiCache Serverless

Amazon ElastiCache Serverless is a new serverless option that lets customers create a cache in under a minute and scales capacity based on application traffic patterns. It is compatible with two popular open-source caching solutions, Redis and Memcached.

ElastiCache Serverless can operate a cache for even the most demanding workloads without capacity planning or caching expertise. It continuously monitors your application’s memory, CPU, and network resource usage and scales immediately to match the access patterns of the workloads it serves. You can create a highly available cache with data automatically replicated across multiple Availability Zones and an availability SLA of up to 99.99 percent for all workloads, which saves time and money.

Customers wanted radical simplicity in deploying and operating a cache. ElastiCache Serverless offers a simple endpoint that abstracts the underlying cluster topology and cache infrastructure. Because your application no longer has to handle reconnects and node rediscovery, you can reduce application complexity and improve operations.

Amazon ElastiCache Serverless has no upfront costs, and you pay only for the resources you use: the cache data storage and the ElastiCache Processing Units (ECPUs) your applications consume.
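The two billing dimensions can be combined into a rough estimate. The sketch below is illustrative only: the `Rates` class, the `estimate_cost` function, and the numeric rates are hypothetical placeholders, not AWS prices, and real rates vary by Region.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical per-unit prices; look up the real ones for your Region."""
    per_gb_hour: float        # price of storing one GB of cache data for one hour
    per_million_ecpus: float  # price of one million ECPUs


def estimate_cost(avg_gb_stored: float, hours: float, ecpus_used: float, rates: Rates) -> float:
    """Add the storage charge (GB-hours) to the compute charge (ECPUs)."""
    storage_charge = avg_gb_stored * hours * rates.per_gb_hour
    compute_charge = ecpus_used / 1_000_000 * rates.per_million_ecpus
    return storage_charge + compute_charge


if __name__ == "__main__":
    # Placeholder rates, chosen only to make the arithmetic easy to follow.
    placeholder = Rates(per_gb_hour=0.5, per_million_ecpus=1.0)
    print(estimate_cost(avg_gb_stored=2, hours=10, ecpus_used=3_000_000, rates=placeholder))
```

With the placeholder rates, 2 GB held for 10 hours contributes 20 GB-hours of storage, and 3 million ECPUs contribute the compute portion.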

Start using Amazon ElastiCache Serverless

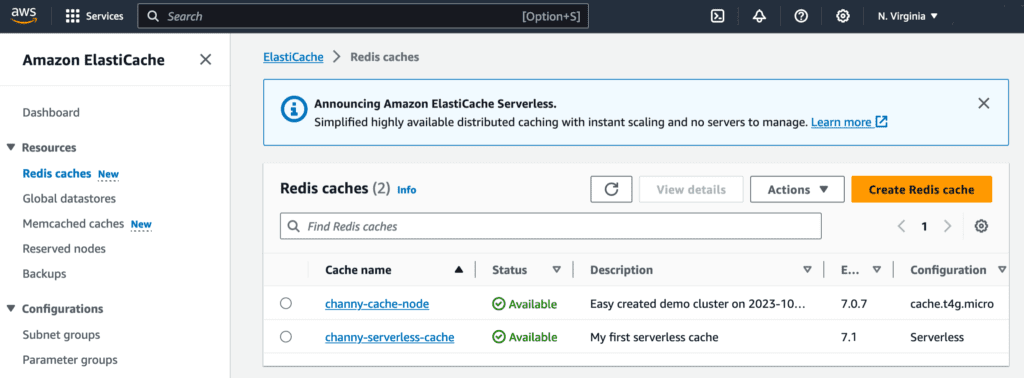

In the left navigation pane of the ElastiCache console, select Redis or Memcached caches to begin. ElastiCache Serverless supports Redis 7.1 and Memcached 1.6.

For example, in the case of Redis caches, choose Create Redis cache.

There are two deployment options: Serverless, or Design your own cache to create a node-based cache cluster. Choose Serverless and the New cache option, then enter a name.

With the default settings, the cache is created in your default VPC and Availability Zones, with a service-owned encryption key and your default security groups. Recommended best practices are configured automatically, and no other settings are required.

To customize the default settings, you can set your own security groups or enable automatic backups. You can also set maximum limits for compute and memory usage so that your cache does not grow beyond a certain size. When your cache reaches its memory limit, keys with a time to live (TTL) are evicted according to least recently used (LRU) logic. When your compute limit is reached, ElastiCache throttles requests, which leads to elevated request latencies.
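The eviction rule at the memory limit can be pictured with a toy model. This is a simplified sketch, not how ElastiCache is implemented: the `ToyVolatileLRU` class is hypothetical, an item count stands in for the memory limit, TTL expiry itself is not modeled, and raising an error when no TTL keys remain is an assumption of the toy.

```python
from collections import OrderedDict


class ToyVolatileLRU:
    """Toy cache: at the limit, only keys that carry a TTL may be evicted,
    starting with the least recently used one."""

    def __init__(self, max_items: int):
        self.max_items = max_items   # stands in for the memory limit
        self._data = OrderedDict()   # key -> (value, has_ttl), oldest use first

    def get(self, key):
        value, _ = self._data[key]
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key, value, has_ttl: bool = False):
        if key in self._data:
            self._data.pop(key)      # rewriting a key refreshes its position
        elif len(self._data) >= self.max_items:
            self._evict_one()
        self._data[key] = (value, has_ttl)

    def _evict_one(self):
        # Walk from least to most recently used and pick the first TTL key.
        victim = next((k for k, (_, ttl) in self._data.items() if ttl), None)
        if victim is None:
            raise MemoryError("limit reached and no TTL keys to evict (toy assumption)")
        del self._data[victim]

    def keys(self):
        return list(self._data)


if __name__ == "__main__":
    cache = ToyVolatileLRU(max_items=3)
    cache.set("a", 1, has_ttl=True)
    cache.set("b", 2)                # no TTL, never evicted in this model
    cache.set("c", 3, has_ttl=True)
    cache.get("a")                   # "a" becomes most recently used
    cache.set("d", 4)                # forces one eviction among the TTL keys
    print(cache.keys())
```

In the usage above, the key without a TTL survives even though it is the least recently used entry, because only TTL keys are eviction candidates.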

When you create a new serverless cache, you can see the details of its connectivity and data protection settings, including an endpoint and network environment.

You can now configure the ElastiCache Serverless endpoint in your application and connect using any Redis client that supports cluster mode, such as redis-cli.
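As a minimal sketch of connecting from application code, the example below uses the cluster client from the third-party redis-py package. The endpoint string is a placeholder, the helper names `connection_kwargs` and `connect` are hypothetical, and the default port is an assumption; copy the real endpoint and port from the cache details in the console. TLS is enabled because connections to the cache are encrypted.

```python
def connection_kwargs(endpoint: str, port: int = 6379) -> dict:
    """Build client settings for a TLS connection to a cluster-mode endpoint."""
    return {
        "host": endpoint,
        "port": port,              # assumed default; confirm in the console
        "ssl": True,               # connections to the cache use TLS
        "decode_responses": True,  # return str instead of bytes
    }


def connect(endpoint: str):
    """Open a cluster-mode connection (requires `pip install redis`)."""
    from redis.cluster import RedisCluster  # imported lazily: third-party package
    return RedisCluster(**connection_kwargs(endpoint))


if __name__ == "__main__":
    # Placeholder endpoint; running this requires network access to a real cache.
    client = connect("your-cache-endpoint.example.com")
    client.set("greeting", "hello", ex=60)  # key with a 60-second TTL
    print(client.get("greeting"))
```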

You can also manage the cache using the AWS CLI or AWS SDKs. For details, see Getting started with Amazon ElastiCache for Redis in the AWS documentation.

If you have an existing Redis cluster, you can migrate your data to ElastiCache Serverless by specifying either ElastiCache backups or the Amazon S3 location of a backup file in standard Redis rdb format when you create your cache.
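Creating a cache through an SDK, optionally with usage limits and a backup to restore from, might look like the following sketch using boto3. The helper names `build_create_request` and `create_cache` are hypothetical, and the request fields reflect the `create_serverless_cache` operation as best understood here; verify them against the current API reference before relying on them.

```python
def build_create_request(name, engine="redis", max_storage_gb=None,
                         max_ecpu_per_second=None, snapshot_arns=None) -> dict:
    """Assemble keyword arguments for the ElastiCache create_serverless_cache call."""
    request = {"ServerlessCacheName": name, "Engine": engine}

    limits = {}
    if max_storage_gb is not None:
        limits["DataStorage"] = {"Maximum": max_storage_gb, "Unit": "GB"}
    if max_ecpu_per_second is not None:
        limits["ECPUPerSecond"] = {"Maximum": max_ecpu_per_second}
    if limits:
        request["CacheUsageLimits"] = limits  # omitted entirely when no limit is set

    if snapshot_arns:
        # ARNs of the backups to seed the new cache with.
        request["SnapshotArnsToRestore"] = list(snapshot_arns)
    return request


def create_cache(**options):
    """Send the request (requires boto3 and configured AWS credentials)."""
    import boto3  # imported lazily: third-party package
    client = boto3.client("elasticache")
    return client.create_serverless_cache(**build_create_request(**options))


if __name__ == "__main__":
    # Prints the request only; call create_cache(...) to actually create a cache.
    print(build_create_request("demo-cache", max_storage_gb=10, max_ecpu_per_second=5000))
```

Separating the request builder from the network call keeps the limits logic easy to inspect without touching an AWS account.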

You can create and use a Memcached serverless cache in the same way as a Redis one.

ElastiCache Serverless for Memcached provides the same high availability and instant scaling, neither of which is natively available in the Memcached engine. You no longer have to write custom business logic, manage multiple caches, or use a third-party proxy layer to replicate high availability in Memcached. Instead, you get an availability SLA of up to 99.99 percent and data replication across multiple Availability Zones.

Scaling and performance

ElastiCache Serverless scales without application downtime or performance degradation by scaling up and scaling out simultaneously to meet capacity needs.

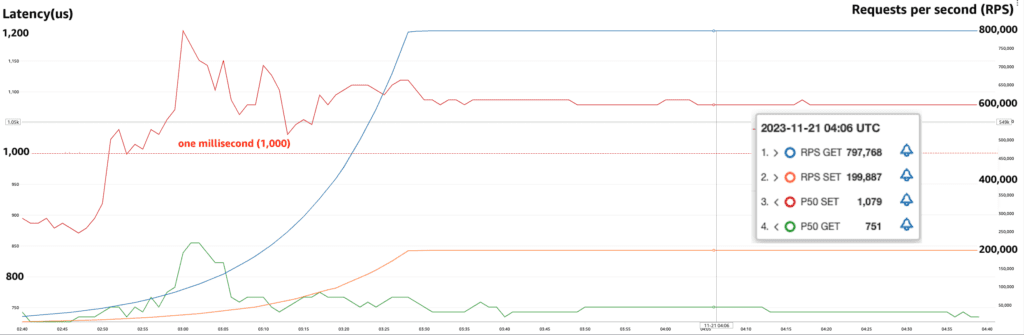

A simple scaling test illustrates ElastiCache Serverless’ performance. AWS started with a typical Redis workload with an 80/20 ratio of reads to writes and a key size of 512 bytes. The Redis client was configured to Read From Replica (RFR) using the READONLY Redis command for maximum read performance. The goal was to show how quickly ElastiCache Serverless can scale a workload without any impact on latency.

As shown in the graph above, the test doubled the requests per second (RPS) every 10 minutes until it reached the target rate of 1M RPS. Throughout the test, p50 GET latency remained around 751 microseconds and stayed below 860 microseconds at all times. Similarly, p50 SET latency remained around 1,050 microseconds and did not cross 1,200 microseconds, even during the rapid increase in throughput.
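The ramp used in such a test is a simple doubling schedule. The sketch below generates one; the starting rate of 125,000 RPS is purely an illustrative assumption, since the text does not state where the test began, and `doubling_schedule` is a hypothetical helper.

```python
def doubling_schedule(start_rps: int, target_rps: int, step_minutes: int = 10):
    """Return (minute, rps) pairs that double each step and cap at the target."""
    if start_rps <= 0:
        raise ValueError("start_rps must be positive")
    schedule = []
    minute, rps = 0, start_rps
    while rps < target_rps:
        schedule.append((minute, rps))
        minute += step_minutes
        rps *= 2
    schedule.append((minute, target_rps))  # final step is capped at the target
    return schedule


if __name__ == "__main__":
    # The starting rate is an assumption for illustration only.
    for minute, rps in doubling_schedule(start_rps=125_000, target_rps=1_000_000):
        print(f"t+{minute:>3} min: {rps:,} RPS")
```

Because the load doubles at every step, the number of steps grows only logarithmically with the target rate.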

Things to know

Upgrading engine version – ElastiCache Serverless transparently applies new features, bug fixes, and security updates to your cache, including new minor and patch engine versions. When a new major version is available, ElastiCache Serverless notifies you in the console and through an event in Amazon EventBridge. Major version upgrades are designed to cause no disruption to your application.

Performance and monitoring – ElastiCache Serverless publishes metrics to Amazon CloudWatch, including memory usage (BytesUsedForCache), compute usage (ElastiCacheProcessingUnits), and cache metrics (CacheMissRate, CacheHitRate, CacheHits, CacheMisses, and ThrottledRequests). It also publishes Amazon EventBridge events for significant occurrences, including cache creation, deletion, and limit updates. For the full list of supported metrics and events, see the documentation.
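When aggregating over a custom time window, a hit rate can be derived from the CacheHits and CacheMisses counters. The function below is a minimal sketch of that arithmetic; `cache_hit_rate` is a hypothetical helper, it returns a fraction between 0 and 1, and the published CacheHitRate metric may use a different scale.

```python
def cache_hit_rate(hits: int, misses: int) -> float:
    """Fraction of lookups served from the cache; 0.0 when there was no traffic."""
    total = hits + misses
    if total == 0:
        return 0.0
    return hits / total


if __name__ == "__main__":
    # Example counter sums for one window (made-up numbers).
    print(f"{cache_hit_rate(hits=900, misses=100):.1%}")
```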

Security and compliance – ElastiCache Serverless caches are accessible from within a VPC. You can access the data plane using AWS Identity and Access Management (IAM). By default, only the AWS account that created the ElastiCache Serverless cache can access it. ElastiCache Serverless encrypts all data at rest and in transit, and every connection is encrypted with Transport Layer Security (TLS). You can optionally restrict cache access by VPC, subnet, and IAM access, and choose the AWS KMS key used for encryption. ElastiCache Serverless is compliant with PCI-DSS, SOC, and ISO and is HIPAA eligible.

Now available

Amazon ElastiCache Serverless is now available in all commercial AWS Regions, including China. There are no upfront costs, and you pay only for the resources you use: cached data in GB-hours, ECPUs consumed, and snapshot storage in GB-months.
