This is the first in a series of posts explaining why administrators and architects should use Google Kubernetes Engine (GKE) to build batch processing systems.

Kubernetes has become a leading container orchestration platform for managing and delivering containerized applications, and it is accelerating innovation. It is not restricted to running microservices: the platform also offers a strong foundation for coordinating batch workloads, including data processing jobs, machine learning model training, scientific simulations, and other compute-intensive operations. GKE is a managed Kubernetes solution that abstracts the underlying infrastructure and shortens users’ time-to-value cost-effectively.

A batch platform processes batch workloads, defined as Kubernetes Jobs, in the order in which they are received. The platform may use a queue to apply your business logic.

Jobs and Pods are the two main Kubernetes resources in a batch platform. You manage Jobs through the Kubernetes Job API. A Job creates one or more Pods and keeps retrying them until a specified number of them terminate successfully. As Pods complete successfully, the Job tracks the completions. When the specified number of successful completions is reached, the Job is complete.
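To make the completion semantics concrete, here is a minimal sketch that builds a `batch/v1` Job manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The Job name, image, and command are hypothetical placeholders, and the `make_job` helper is purely illustrative.

```python
import json


def make_job(name: str, image: str, completions: int, parallelism: int,
             backoff_limit: int = 4) -> dict:
    """Build a batch/v1 Job manifest as a plain dict (illustrative helper)."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # The Job is complete once this many Pods have succeeded.
            "completions": completions,
            # At most this many Pods run at the same time.
            "parallelism": parallelism,
            # Number of retries before the Job is marked as failed.
            "backoffLimit": backoff_limit,
            "template": {
                "spec": {
                    # Job Pods must use Never or OnFailure.
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "worker",
                            "image": image,  # hypothetical image
                            "command": ["sh", "-c", "echo processing; sleep 5"],
                        }
                    ],
                }
            },
        },
    }


job = make_job("sample-batch-job", "busybox:1.36", completions=5, parallelism=2)
print(json.dumps(job, indent=2))
```

With these values, Kubernetes would run at most two Pods at a time and consider the Job finished after five successful Pod completions.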

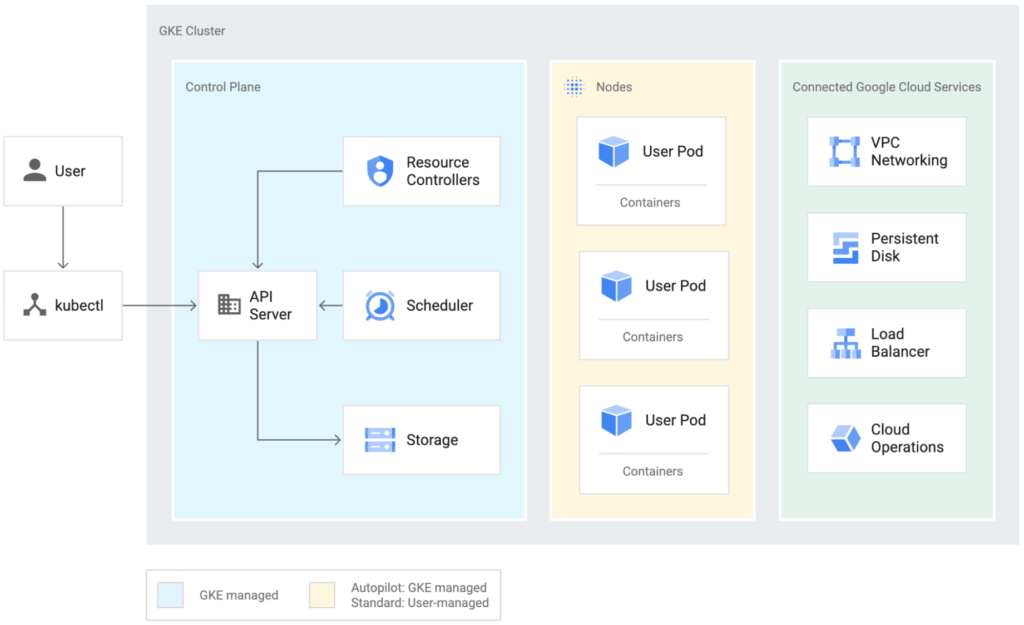

As a managed Kubernetes solution, GKE reduces the complexity of both workload orchestration and infrastructure. Let’s look at GKE’s features in more detail to see why it is an attractive place to run batch platforms.

Cluster architecture and available resources

A GKE environment consists of nodes, which are Compute Engine virtual machines (VMs), grouped together to form a cluster. In Autopilot mode, GKE automatically manages your cluster configuration, including your nodes, scaling, security, and other preconfigured settings, so that you can concentrate on your workload. Autopilot clusters are highly available by default.

Google Cloud continually adds new VM series and shapes that are optimized for different workloads.

Scalability

GKE supports the largest Kubernetes clusters that a managed provider can currently host. A cluster size of 15,000 nodes (compared with 5,000 nodes for open-source Kubernetes) is essential for many batch use cases.

Customers such as Bayer Crop Science, which processes around 15 billion genotypes each hour, take advantage of GKE’s scale. PGS has used GKE to build the equivalent of one of the biggest supercomputers in the world. To handle workloads with different requirements, GKE can create multiple node pools, each with its own VM series and machine type or shape.

GKE multi-tenancy

GKE cluster multi-tenancy is an alternative to managing many single-tenant clusters and their access control. In this model, a multi-tenant cluster is shared by multiple users and/or workloads, referred to as “tenants”. GKE supports tenant isolation through namespace separation, which keeps each tenant’s Kubernetes resources apart. Policies can then be used to limit resource usage, enforce tenant isolation, restrict API access, and constrain what containers are allowed to do.
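As a rough illustration of namespace separation combined with a resource policy, the sketch below generates a Namespace and a ResourceQuota for one tenant and wraps them in a `v1` List that can be printed as JSON. The tenant name and the quota values are assumptions chosen for the example, and `tenant_manifests` is a hypothetical helper, not part of any GKE tooling.

```python
import json


def tenant_manifests(tenant: str, cpu: str, memory: str, max_jobs: int) -> dict:
    """Return a v1 List holding a Namespace and a ResourceQuota for one tenant."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": tenant},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{tenant}-quota", "namespace": tenant},
        "spec": {
            "hard": {
                # Caps on the sum of resource requests in the namespace.
                "requests.cpu": cpu,
                "requests.memory": memory,
                # Cap on the number of Job objects in the namespace.
                "count/jobs.batch": str(max_jobs),
            }
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota]}


# Hypothetical tenant and limits, for illustration only.
team_a = tenant_manifests("team-a", cpu="16", memory="64Gi", max_jobs=50)
print(json.dumps(team_a, indent=2))
```

Access control, network policy, and container restrictions would be configured separately; this sketch only covers the resource-usage limit.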

“Fair-sharing” and Queueing

As an administrator, you can implement policies and configurations so that tenants submitting workloads share the underlying cluster resources fairly. It is up to you to decide what is fair in this context. To make sure the platform has room for every tenant’s workloads, you might set resource quota limits per tenant based on their minimum workload requirements. If resources are unavailable, you can also queue incoming jobs and process them in the order in which they were received.
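The idea of per-tenant quotas combined with first-in, first-out queueing can be sketched as a small simulation. This is a toy model written for this post's explanation, not how Kueue or Kubernetes implements admission; the tenant names, CPU quotas, and the `FifoAdmitter` class are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    tenant: str
    cpu: int


class FifoAdmitter:
    """Toy admission loop: per-tenant CPU quota, FIFO order within a tenant."""

    def __init__(self, quotas: dict):
        self.quotas = dict(quotas)
        self.used = {tenant: 0 for tenant in quotas}
        self.pending = deque()
        self.running = {}

    def submit(self, job: Job) -> None:
        self.pending.append(job)
        self._admit()

    def finish(self, name: str) -> None:
        job = self.running.pop(name)
        self.used[job.tenant] -= job.cpu
        self._admit()

    def _admit(self) -> None:
        # Walk the queue in arrival order. Once a tenant's job does not fit,
        # that tenant's later jobs also wait, which preserves its FIFO order.
        still_waiting = deque()
        blocked = set()
        while self.pending:
            job = self.pending.popleft()
            fits = self.used[job.tenant] + job.cpu <= self.quotas[job.tenant]
            if job.tenant not in blocked and fits:
                self.used[job.tenant] += job.cpu
                self.running[job.name] = job
            else:
                blocked.add(job.tenant)
                still_waiting.append(job)
        self.pending = still_waiting


admitter = FifoAdmitter({"team-a": 4, "team-b": 2})
admitter.submit(Job("a1", "team-a", cpu=3))  # admitted
admitter.submit(Job("a2", "team-a", cpu=2))  # waits: team-a would exceed 4 CPUs
admitter.submit(Job("a3", "team-a", cpu=1))  # waits behind a2
admitter.submit(Job("b1", "team-b", cpu=2))  # admitted: team-b has its own quota
admitter.finish("a1")                        # frees 3 CPUs, a2 then a3 are admitted
print(sorted(admitter.running), list(admitter.pending))
```

One tenant exhausting its quota does not hold up another tenant here, which is one possible reading of "fair"; a stricter global FIFO would be a different design choice.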

Kueue is a Kubernetes-native job queueing system for batch, high performance computing, machine learning, and similar applications on a Kubernetes cluster. To help share cluster resources fairly among tenants, Kueue manages quotas and how jobs consume them. Kueue decides when a job should wait, when a job should be admitted to start (that is, its Pods can be created), and when a job should be preempted (that is, its active Pods should be deleted). The diagram below shows the flow from a user submitting a job, to Kueue admitting it with the relevant nodeAffinity, to the other parts of Kubernetes activating to process the workload.

To help administrators configure batch processing behavior, Kueue provides concepts such as the ClusterQueue, a cluster-scoped object that governs a pool of resources such as CPU, memory, and hardware accelerators. ClusterQueues can be grouped into cohorts, and each ClusterQueue’s BorrowingLimit allows limited borrowing of unused quota among members of the same cohort.
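A sketch of two ClusterQueues in one cohort may help here. It assumes the `kueue.x-k8s.io/v1beta1` API version; the queue names, cohort name, flavor name, and quota numbers are made up for illustration, and the field layout should be checked against the Kueue release you actually run.

```python
import json


def cluster_queue(name: str, cohort: str, flavor: str,
                  cpu_quota: int, cpu_borrow: int) -> dict:
    """Build a Kueue ClusterQueue manifest (assumes the v1beta1 API)."""
    return {
        "apiVersion": "kueue.x-k8s.io/v1beta1",
        "kind": "ClusterQueue",
        "metadata": {"name": name},
        "spec": {
            # ClusterQueues with the same cohort can lend unused quota to each other.
            "cohort": cohort,
            # An empty selector lets any namespace point at this queue.
            "namespaceSelector": {},
            "resourceGroups": [
                {
                    "coveredResources": ["cpu"],
                    "flavors": [
                        {
                            "name": flavor,  # hypothetical ResourceFlavor name
                            "resources": [
                                {
                                    "name": "cpu",
                                    "nominalQuota": cpu_quota,
                                    # Upper bound on quota borrowed from the cohort.
                                    "borrowingLimit": cpu_borrow,
                                }
                            ],
                        }
                    ],
                }
            ],
        },
    }


queues = [
    cluster_queue("team-a-cq", "batch-cohort", "default-flavor", 10, 5),
    cluster_queue("team-b-cq", "batch-cohort", "default-flavor", 10, 5),
]
print(json.dumps(queues, indent=2))
```

Under these assumed numbers, each team is guaranteed 10 CPUs and may use up to 15 when the other team leaves quota idle.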

Kueue’s ResourceFlavor object represents a variation of a resource and lets you associate it with cluster nodes through labels and taints. For instance, your cluster may contain nodes with different CPU architectures (such as Arm or x86) or different accelerator brands and models (such as Nvidia T4, A100, and so on). These can be represented as ResourceFlavors and then referenced in ClusterQueues, with quotas, to control resource limits.
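The following sketch shows how two such flavors might be described, again assuming the `kueue.x-k8s.io/v1beta1` API. The flavor names are hypothetical, and the node labels and taint used here (`kubernetes.io/arch`, `cloud.google.com/gke-accelerator`, `nvidia.com/gpu`) are assumptions about how the nodes are labeled and tainted; check your own node pools before relying on them.

```python
import json
from typing import Optional


def resource_flavor(name: str, node_labels: dict,
                    node_taints: Optional[list] = None) -> dict:
    """Build a Kueue ResourceFlavor manifest (assumes the v1beta1 API)."""
    spec = {"nodeLabels": dict(node_labels)}
    if node_taints:
        spec["nodeTaints"] = list(node_taints)
    return {
        "apiVersion": "kueue.x-k8s.io/v1beta1",
        "kind": "ResourceFlavor",
        "metadata": {"name": name},
        "spec": spec,
    }


# Flavor for Arm nodes, matched through the well-known architecture label.
arm_flavor = resource_flavor("arm-cpu", {"kubernetes.io/arch": "arm64"})

# Flavor for nodes with Nvidia T4 GPUs (label value and taint are assumptions).
t4_flavor = resource_flavor(
    "nvidia-t4",
    {"cloud.google.com/gke-accelerator": "nvidia-tesla-t4"},
    node_taints=[{"key": "nvidia.com/gpu", "value": "present", "effect": "NoSchedule"}],
)

print(json.dumps([arm_flavor, t4_flavor], indent=2))
```

A ClusterQueue would then list `arm-cpu` or `nvidia-t4` under its flavors, each with its own quota.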

Dependable workloads with cost optimization

It is crucial to use the platform’s compute and storage resources as efficiently as possible. With Compute Engine Persistent Disks exposed through PersistentVolumes, your GKE batch workloads get durable storage, which helps you cut costs and right-size your compute instances to match your batch processing requirements without compromising performance.

Observability

GKE integrates with Google Cloud’s operations suite, so you control which logs and metrics, if any, are sent from your GKE cluster to Cloud Logging and Cloud Monitoring. For batch users, this means access to detailed, up-to-date logs from their workload Pods, as well as the flexibility to write their own workload-specific metrics using monitoring tools such as Prometheus. GKE also offers Managed Service for Prometheus, which provides hassle-free metrics collection backed by Google’s planet-scale Monarch time-series database. Managed collection is recommended because it removes the work of configuring and maintaining Prometheus servers. See batch platform monitoring for further information.
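With managed collection, scraping is typically configured through a PodMonitoring resource. The sketch below builds one as a Python dictionary, assuming the `monitoring.googleapis.com/v1` API; the resource name, namespace, `app` label, and port name are hypothetical and would need to match how your batch Pods expose metrics.

```python
import json


def pod_monitoring(name: str, namespace: str, app_label: str,
                   port: str = "metrics", interval: str = "30s") -> dict:
    """Build a PodMonitoring manifest for managed collection (illustrative)."""
    return {
        "apiVersion": "monitoring.googleapis.com/v1",
        "kind": "PodMonitoring",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Scrape Pods in this namespace that carry the given label.
            "selector": {"matchLabels": {"app": app_label}},
            # Named container port that serves Prometheus metrics.
            "endpoints": [{"port": port, "interval": interval}],
        },
    }


# Hypothetical names, for illustration only.
monitor = pod_monitoring("batch-worker-metrics", "team-a", "batch-worker")
print(json.dumps(monitor, indent=2))
```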

In summary

For containerized batch workloads, GKE offers a scalable, robust, secure, and cost-effective platform, with both a fully customizable Standard mode and a hands-off Autopilot mode, so it can be tailored to your organization’s needs. With its tight integration with Google Cloud services, access to all Compute Engine VM types and CPU and accelerator architectures, and Kueue’s Kubernetes-native job queueing features, GKE is ready to be the new home for your batch workloads. It lets you run massive computing platforms while simplifying scale, performance, and multi-tenancy and optimizing costs.