AI Inference with Google Cloud GPUs and TPUs

In the rapidly evolving field of artificial intelligence, there is a growing need for high-performance, low-cost AI inference (serving). This week, Google introduced two new open source software offerings to meet that need: JetStream and MaxDiffusion.

JetStream is a new inference engine for XLA devices, starting with Cloud TPUs. Designed specifically for large language models (LLMs), it delivers up to three times more inferences per dollar than earlier Cloud TPU inference engines, a major step forward in both performance and cost efficiency. JetStream supports JAX models through MaxText, Google’s highly scalable, high-performance reference implementation for LLMs that users can fork to accelerate their development, and PyTorch models through PyTorch/XLA.

MaxDiffusion is the MaxText equivalent for latent diffusion models: it simplifies training and serving diffusion models optimized for high performance on XLA devices, starting with Cloud TPUs.

Google is also sharing its latest MLPerf Inference v4.0 results, which demonstrate the performance and versatility of Google Cloud’s A3 virtual machines (VMs) powered by NVIDIA H100 GPUs.

JetStream: Cost-effective, high-performance LLM inference

LLMs are at the forefront of the AI revolution, powering a broad range of applications such as natural language understanding, text generation, and language translation. To lower LLM inference costs for its customers, Google developed JetStream, an inference engine that offers up to three times more inferences per dollar than earlier Cloud TPU inference engines.

JetStream includes advanced performance optimizations such as continuous batching, sliding window attention, and int8 quantization of weights, activations, and the key-value (KV) cache. It also supports your favourite framework, whether you use PyTorch or JAX. To further accelerate your LLM inference workflows, Google provides optimized MaxText and PyTorch/XLA implementations of popular open models such as Gemma and Llama for maximum cost efficiency and performance.
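Continuous batching, one of the optimizations listed above, is easiest to see in a toy model. The sketch below is not JetStream’s scheduler; it is a minimal, hypothetical Python simulation that assumes each request needs a known number of decode steps and ignores prefill and KV-cache memory limits. The names `continuous_batching` and `static_batching` are illustrative only. It compares refilling a slot as soon as its request finishes against static batching, where each batch runs until its longest request is done.

```python
from collections import deque


def continuous_batching(lengths, num_slots):
    """Toy scheduler (hypothetical): lengths[i] = tokens request i must generate.

    Returns (decode_steps, finish_order). A freed slot is refilled from the
    waiting queue at the start of the very next decode step.
    """
    if num_slots < 1 or any(n < 1 for n in lengths):
        raise ValueError("need at least one slot and one token per request")
    waiting = deque(range(len(lengths)))
    slots = [None] * num_slots      # request id occupying each slot, or None
    remaining = list(lengths)       # tokens still to generate per request
    steps, finished = 0, []
    while waiting or any(s is not None for s in slots):
        # Admit waiting requests into free slots without draining the batch.
        for i in range(num_slots):
            if slots[i] is None and waiting:
                slots[i] = waiting.popleft()
        # One decode step: every active request emits one token.
        steps += 1
        for i, rid in enumerate(slots):
            if rid is None:
                continue
            remaining[rid] -= 1
            if remaining[rid] == 0:
                finished.append(rid)
                slots[i] = None     # free the slot immediately
    return steps, finished


def static_batching(lengths, batch_size):
    """Baseline: each fixed batch runs until its longest request finishes."""
    return sum(
        max(lengths[start:start + batch_size])
        for start in range(0, len(lengths), batch_size)
    )


print(continuous_batching([8, 2, 2, 2, 2], 2))  # (8, [1, 2, 3, 0, 4])
print(static_batching([8, 2, 2, 2, 2], 2))      # 12
```

With one long request and four short ones sharing two slots, the toy continuous scheduler finishes in 8 decode steps versus 12 for static batching, because the short requests stream through the free slot instead of waiting for the long one.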

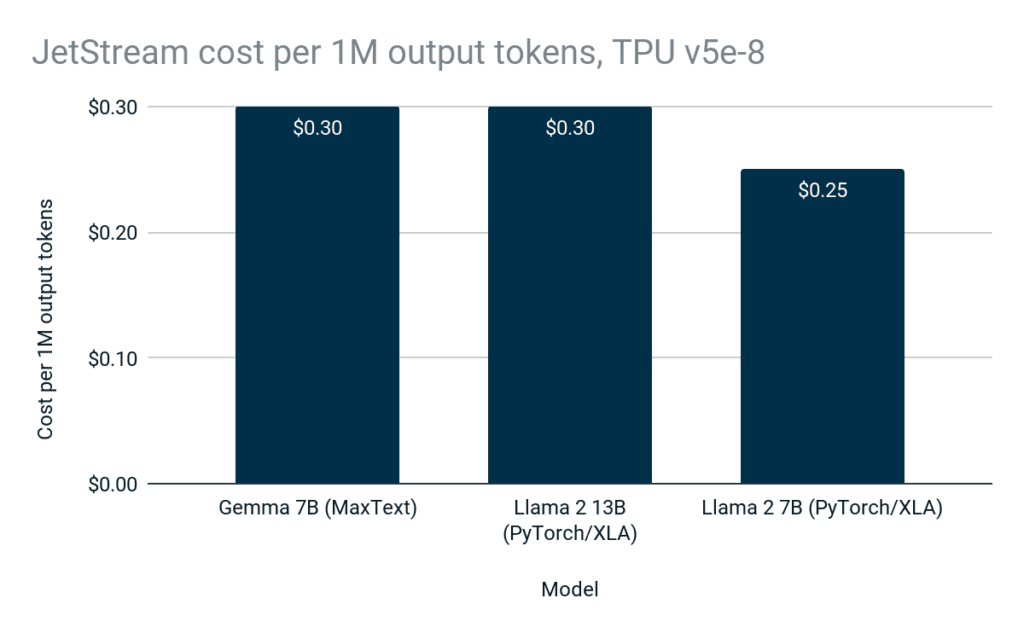
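To illustrate the int8 quantization mentioned above, here is a minimal sketch of symmetric, per-tensor absmax quantization in plain Python. This scheme is an assumption chosen for clarity; the source does not say which granularity or calibration JetStream uses, and production systems often use per-channel or per-block scales. `quantize_int8` and `dequantize_int8` are hypothetical helper names.

```python
def quantize_int8(values):
    """Symmetric per-tensor int8 quantization with an absmax scale (illustrative).

    Returns (codes in [-127, 127], float scale) so that value ~= code * scale.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest quantization step and clamp to the symmetric range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.0, 1.0, 0.003]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
print(codes)  # [50, -127, 0, 100, 0]
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

Storing each value in one byte instead of two (as with bfloat16) halves weight and KV-cache memory, at the cost of a rounding error of at most half a quantization step (`scale / 2`) per value.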

JetStream delivers up to 4783 tokens/second for open models such as Gemma in MaxText and Llama 2 in PyTorch/XLA on Cloud TPU v5e-8.

JetStream’s high performance and efficiency translate into lower inference costs for Google Cloud customers, making LLM inference more accessible and affordable.
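The link between throughput and inferences per dollar is simple arithmetic. The sketch below assumes a slice is billed per chip-hour and kept fully busy; `HYPOTHETICAL_PRICE_PER_CHIP_HOUR` is a placeholder, not a published Cloud TPU price, so substitute the current rate from the Cloud TPU pricing page before drawing conclusions. The function names are illustrative.

```python
SECONDS_PER_HOUR = 3600

# Placeholder only: NOT a published price. Replace with the current
# Cloud TPU v5e rate for your region and commitment type.
HYPOTHETICAL_PRICE_PER_CHIP_HOUR = 1.00


def tokens_per_dollar(tokens_per_second, num_chips, price_per_chip_hour):
    """Tokens generated per dollar, assuming the slice is kept fully busy."""
    hourly_cost = num_chips * price_per_chip_hour
    return tokens_per_second * SECONDS_PER_HOUR / hourly_cost


def cost_per_million_tokens(tokens_per_second, num_chips, price_per_chip_hour):
    """Dollars per one million generated tokens (reciprocal of tokens per dollar)."""
    return 1_000_000 / tokens_per_dollar(tokens_per_second, num_chips, price_per_chip_hour)


# 4783 tokens/s on a Cloud TPU v5e-8 slice (8 chips), as reported above.
cost = cost_per_million_tokens(4783, 8, HYPOTHETICAL_PRICE_PER_CHIP_HOUR)
print(f"~${cost:.2f} per million tokens at the placeholder price")
```

Because cost per token is the reciprocal of tokens per dollar, "three times more inferences per dollar" is equivalent to one third of the cost per generated token.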

Customers such as Osmos use JetStream to accelerate their LLM inference workloads:

“At Osmos, we’ve built an AI-powered data transformation engine that helps businesses scale their commercial partnerships by automating data processing. Mapping, evaluating, and converting the often messy, non-standard data we receive from customers and business partners into high-quality, usable data requires applying intelligence to each row of data. Doing this requires high-performance, scalable, and cost-effective AI infrastructure for inference, training, and fine-tuning.


That’s why we chose Cloud TPU v5e with MaxText, JAX, and JetStream for our end-to-end AI workflows. With Google Cloud, we quickly and easily deployed Google’s latest Gemma open model for inference on Cloud TPU v5e using JetStream, and fine-tuned it on billions of tokens using MaxText. Thanks to Google’s AI-optimized hardware and software stack, we see results in hours rather than days.”

By giving researchers and developers a robust, affordable, open source framework for LLM inference, Google is helping drive the next wave of AI applications. Whatever your level of experience with LLMs or AI, JetStream can help you accelerate your work and explore new avenues in natural language processing.

Experience the future of LLM inference with JetStream. To learn more and get started on your next LLM project, visit the JetStream GitHub repository. Google is committed to the long-term development and maintenance of JetStream on GitHub and to supporting it through Google Cloud Customer Care. Google also invites the community to collaborate and contribute improvements that advance the state of the art.

MaxDiffusion

Diffusion models are transforming computer vision much as LLMs transformed natural language processing. To lower the cost of deploying these models for its customers, Google developed MaxDiffusion, a collection of open source reference implementations of diffusion models. Written in JAX, these implementations are highly efficient, scalable, and customizable; think of MaxDiffusion as MaxText for computer vision.

MaxDiffusion provides high-performance implementations of the core building blocks of diffusion models, including high-throughput image data loading, convolutions, and cross attention. It is designed to be highly flexible and customizable. Whether you’re a developer looking to integrate state-of-the-art generative AI capabilities into your products or a researcher pushing the boundaries of image generation, MaxDiffusion gives you the foundation you need to succeed.
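Cross attention is how a text-conditioned diffusion model lets image latents "read" the prompt: each latent position (a query) takes a softmax-weighted average of text-token values, weighted by query-key similarity. The plain-Python sketch below shows scaled dot-product cross attention under simplifying assumptions: learned query, key, and value projections and multiple heads are omitted, and `cross_attention` is a hypothetical name, not MaxDiffusion’s API.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross attention, no learned projections.

    queries: image-latent vectors, shape (n_queries, d)
    keys:    text-token vectors,   shape (n_tokens, d)
    values:  text-token vectors,   shape (n_tokens, d_v)
    Returns one d_v-dimensional output vector per query.
    """
    d = len(queries[0])
    d_v = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this latent position to every text token.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the text-token values.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(d_v)])
    return outputs


latents = [[1.0, 0.0], [0.0, 1.0]]       # two latent positions
text_keys = [[4.0, 0.0], [0.0, 4.0]]     # two text tokens
text_values = [[1.0, 0.0], [0.0, 1.0]]
print(cross_attention(latents, text_keys, text_values))
```

In this example each latent position attends mostly to the text token whose key it matches, so its output leans toward that token’s value.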

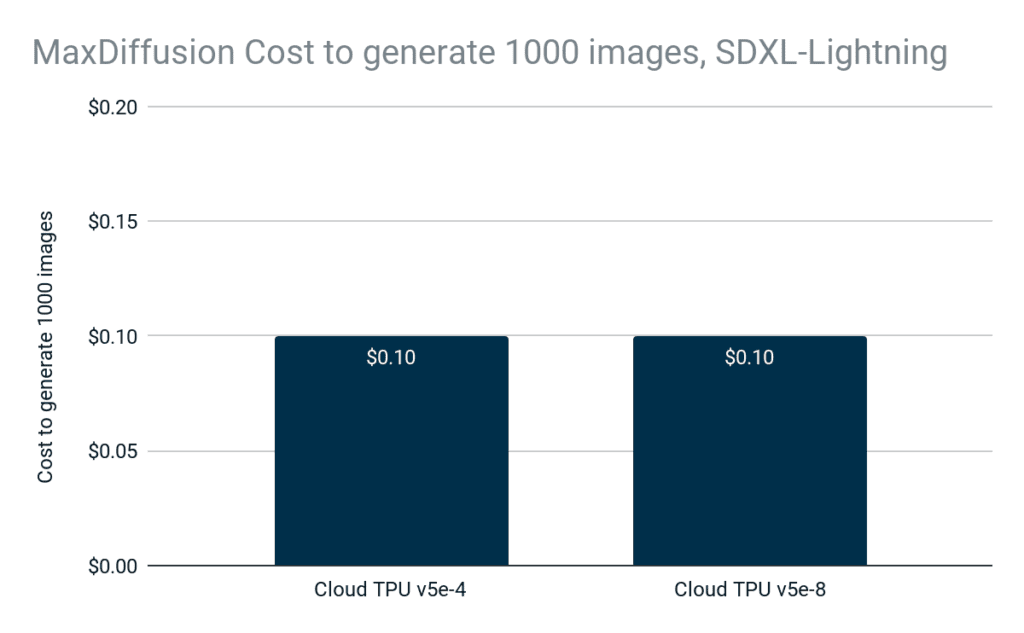

Taking full advantage of the high performance and scalability of Cloud TPUs, MaxDiffusion’s implementation of the new SDXL-Lightning model delivers 6 images/s on Cloud TPU v5e-4, and throughput scales linearly to 12 images/s on Cloud TPU v5e-8.

Like MaxText and JetStream, MaxDiffusion is also cost-efficient: generating 1,000 images on either Cloud TPU v5e-4 or Cloud TPU v5e-8 costs just $0.10.
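That both slice sizes cost the same follows from the linear scaling described above: doubling the chip count doubles both the hourly cost and the throughput, so chip-hours per image stay constant. The back-of-the-envelope sketch below assumes per-chip-hour billing at full utilization; the function names are hypothetical, and the implied price it prints is derived arithmetic, not a published rate.

```python
SECONDS_PER_HOUR = 3600


def chip_hours(num_images, images_per_second, num_chips):
    """Chip-hours consumed to generate num_images at a steady throughput."""
    hours = num_images / images_per_second / SECONDS_PER_HOUR
    return hours * num_chips


def cost_of_images(num_images, images_per_second, num_chips, price_per_chip_hour):
    """Total cost under simple per-chip-hour billing."""
    return chip_hours(num_images, images_per_second, num_chips) * price_per_chip_hour


def implied_price_per_chip_hour(total_cost, num_images, images_per_second, num_chips):
    """Invert cost_of_images: which chip-hour price yields total_cost?"""
    return total_cost / chip_hours(num_images, images_per_second, num_chips)


# Throughput figures from the text: v5e-4 -> 6 images/s, v5e-8 -> 12 images/s.
for name, chips, ips in [("v5e-4", 4, 6.0), ("v5e-8", 8, 12.0)]:
    print(name, f"{implied_price_per_chip_hour(0.10, 1000, ips, chips):.2f}")
```

Under this simple model, the quoted $0.10 per 1,000 images corresponds to about $0.54 per chip-hour on both slices.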

Customers such as Codeway use Google Cloud to make diffusion model inference at scale more cost-effective:

“At Codeway, we create popular apps and games used by more than 115 million users in 160 countries around the world. Our AI-powered app ‘Wonder’, for example, turns words into digital artworks, while ‘Facedance’ makes faces dance with a range of fun animations. Bringing AI to millions of users requires highly scalable and cost-efficient inference infrastructure. With Cloud TPU v5e, we were able to serve diffusion models 45% faster and handle 3.6 times more queries per hour than with competing inference systems. At our scale, this translates into significant infrastructure cost savings and lets us economically bring AI-powered products to even more users.”

MaxDiffusion provides a high-performance, scalable, and flexible foundation for image generation. Whether you’re just getting started with image generation or already experienced in computer vision, MaxDiffusion can support you along the way.

To learn more about MaxDiffusion and get started on your next creative project, visit the MaxDiffusion GitHub repository.

Strong MLPerf Inference v4.0 results for A3 virtual machines

In August 2023, Google announced the general availability of A3 virtual machines. Powered by eight NVIDIA H100 Tensor Core GPUs in a single VM, A3 VMs are designed for training and serving demanding workloads such as LLMs. A3 Mega, also powered by NVIDIA H100 GPUs and offering double the GPU-to-GPU networking bandwidth of A3, will become generally available next month.

For the MLPerf Inference v4.0 benchmark, Google submitted 20 results using A3 VMs across seven models, including the new Stable Diffusion XL and Llama 2 (70B) benchmarks:

- RetinaNet (Server and Offline)

- 3D U-Net: 99% and 99.9% accuracy (Offline)

- BERT: 99% and 99.9% accuracy (Server and Offline)

- DLRM v2: 99.9% accuracy (Server and Offline)

- GPT-J: 99% and 99.9% accuracy (Server and Offline)

- Stable Diffusion XL (Server and Offline)

- Llama 2: 99% and 99.9% accuracy (Server and Offline)

All results were within 0 to 5% of the peak performance demonstrated by NVIDIA’s own submissions. These results reflect the close collaboration between Google Cloud and NVIDIA to deliver workload-optimized, end-to-end solutions for generative AI and LLMs.

Powering the future of AI with Google Cloud TPUs and NVIDIA GPUs

Google’s innovations in AI inference, spanning software such as JetStream, MaxText, and MaxDiffusion as well as hardware advances in Google Cloud TPUs and NVIDIA GPUs, enable its customers to build and scale AI applications. With JetStream, developers can reach new levels of LLM inference performance and cost efficiency, opening up new possibilities for natural language processing applications. With MaxDiffusion, developers and researchers can explore the full potential of diffusion models to generate images faster. And Google’s strong MLPerf Inference v4.0 results on A3 VMs powered by NVIDIA H100 Tensor Core GPUs demonstrate the power and versatility of Cloud GPUs.