NVIDIA NIM Deployment

Generative AI adoption has grown dramatically. The 2022 launch of OpenAI’s ChatGPT drew over 100M users within months and set off a surge of development activity across practically every sector.

By 2023, developers had begun building proofs of concept (POCs) using APIs and open-source community models from Meta, Mistral, Stability, and other sources.

Heading into 2024, companies are shifting their focus to full-scale production deployments, which involve integrating AI models with existing corporate infrastructure as well as logging, monitoring, and security, among other things. This path to production is complex and time-consuming; it calls for specialized skills, platforms, and processes, particularly at scale.

What is NVIDIA NIM?

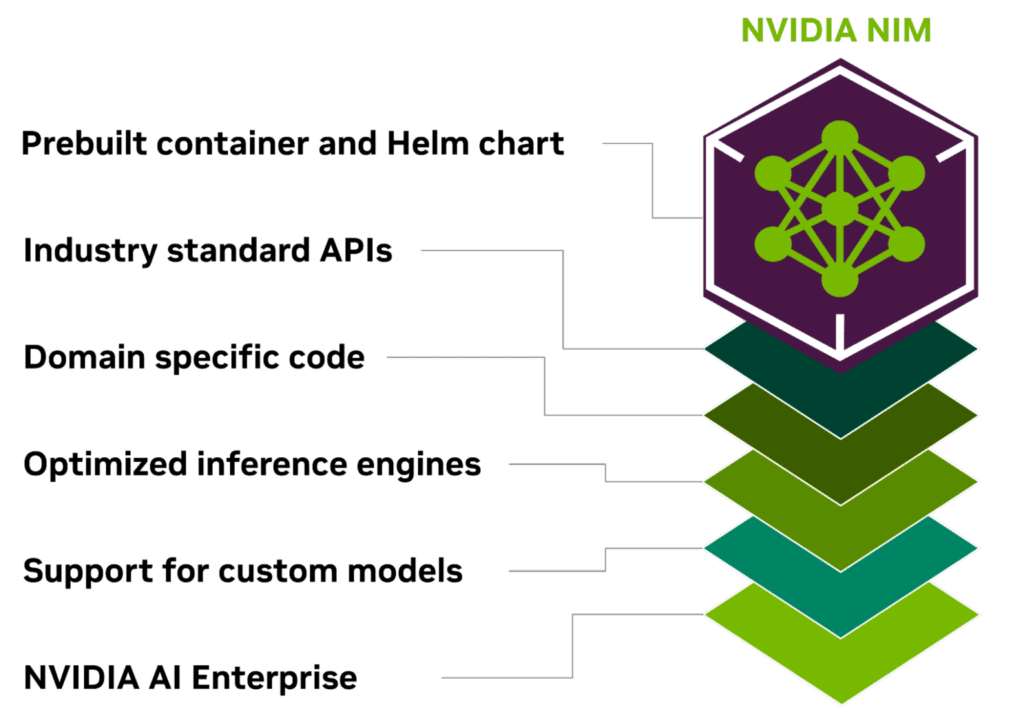

NVIDIA NIM is a containerized inference microservice that includes industry-standard APIs, domain-specific code, optimized inference engines, and an enterprise runtime.

NVIDIA NIM, part of NVIDIA AI Enterprise, offers a streamlined path for building AI-powered enterprise applications and deploying AI models in production.

NIM is a set of cloud-native microservices designed to shorten time-to-market and simplify the deployment of generative AI models on GPU-accelerated workstations, in the cloud, and in data centers. By abstracting away the complexity of AI model development and of packaging models for production behind industry-standard APIs, it expands the pool of developers who can work with these models.

NVIDIA NIM for optimized AI inference

NVIDIA NIM is designed to bridge the gap between the complex world of AI development and the operational needs of enterprise environments, enabling 10-100X more enterprise application developers to contribute to their organizations’ AI transformations.

The following are a few of the main advantages of NIM.

Deploy anywhere

NIM is built for portability and control, enabling model deployment across a range of infrastructures, from local workstations to the cloud to on-premises data centers. This includes NVIDIA DGX, NVIDIA DGX Cloud, NVIDIA-Certified Systems, and NVIDIA RTX workstations and PCs.

Prebuilt containers and Helm charts packaged with optimized models are rigorously validated and benchmarked across NVIDIA hardware platforms, cloud service providers, and Kubernetes distributions. This provides support across all NVIDIA-powered environments and lets enterprises deploy their generative AI applications anywhere while retaining full control over their applications and the data they process.

Develop with industry-standard APIs

Developers can access AI models through APIs that follow the industry standards for each domain, which simplifies building AI applications. These APIs are compatible with the ecosystem’s standard deployment processes, so developers can update their AI applications quickly, often with as few as three lines of code. This seamless integration and ease of use support rapid deployment and scaling of AI technologies within enterprise environments.
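
As a rough illustration of what calling a model through an industry-standard API can look like, the sketch below builds an OpenAI-style chat completion request using only the Python standard library. The base URL, the model identifier, and the assumption that the service exposes a `/chat/completions` route are placeholders for illustration, not details taken from this article; check the documentation of the specific microservice for its actual routes and model names.

```python
import json
import urllib.request

# Assumption: an inference container is reachable at this hypothetical local
# URL and serves an OpenAI-style chat completions route.
BASE_URL = "http://localhost:8000/v1"
MODEL = "example/llm-model"  # hypothetical model identifier


def build_chat_request(
    prompt: str, base_url: str = BASE_URL, model: str = MODEL
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply (needs a running server)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_chat_request("Summarize what an inference microservice does.")
    # Inspect the request without requiring a live endpoint.
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Because the request shape follows a widely used convention, pointing an existing client at a different deployment would mostly come down to changing `base_url` and `model`, which is the kind of small change the "three lines of code" claim suggests.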

Leverage domain-specific models

NVIDIA NIM also addresses the need for domain-specific solutions and optimized performance through several key features. It packages domain-specific NVIDIA CUDA libraries and specialized code tailored to domains such as language, speech, video processing, healthcare, and more. This approach helps keep applications accurate and relevant to their specific use case.

Run on optimized inference engines

NIM uses inference engines tuned for each model and hardware configuration to deliver the best possible latency and throughput on accelerated infrastructure. This improves the end-user experience while lowering the cost of running inference workloads as they scale. In addition to supporting optimized community models, NIM lets developers gain further accuracy and performance by aligning and fine-tuning models with proprietary data sources that never leave their data center.
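
To make the link between throughput and operating cost concrete, here is a small back-of-the-envelope sketch. The hourly GPU price and the tokens-per-second figures are made-up placeholders, not measurements of NIM or of any particular hardware; the point is only that, at a fixed hourly price, cost per token falls in proportion to throughput.

```python
def cost_per_million_tokens(gpu_cost_per_hour: float, tokens_per_second: float) -> float:
    """Serving cost per one million generated tokens at a given throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return gpu_cost_per_hour / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical figures chosen purely for illustration.
    gpu_cost_per_hour = 4.00      # dollars per GPU-hour (assumed)
    baseline_throughput = 1000.0  # tokens/second before tuning (assumed)
    tuned_throughput = 2500.0     # tokens/second after tuning (assumed)

    baseline = cost_per_million_tokens(gpu_cost_per_hour, baseline_throughput)
    tuned = cost_per_million_tokens(gpu_cost_per_hour, tuned_throughput)
    print(f"baseline: ${baseline:.2f} per 1M tokens")
    print(f"tuned:    ${tuned:.2f} per 1M tokens")
```

With these placeholder numbers, a 2.5x throughput gain cuts the cost per million tokens by the same factor, which is why engine tuning matters more as inference volume grows.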

Support for enterprise-grade AI

NIM, part of NVIDIA AI Enterprise, is built on an enterprise-grade base container that provides a solid foundation for enterprise AI software through feature branches, rigorous validation, enterprise support with service-level agreements, and regular security updates for CVEs. This support structure and set of optimization tools underscore NIM’s role as a key component for deploying scalable, efficient, and customized AI applications in production.

Accelerated AI models ready for deployment

NIM supports AI use cases across several domains with a large number of AI models, including community models, NVIDIA AI Foundation models, and custom models provided by NVIDIA partners. These include large language models (LLMs), vision language models (VLMs), and models for speech, images, video, 3D, drug discovery, medical imaging, and more.

Developers can test the latest generative AI models using NVIDIA-managed cloud APIs available from the NVIDIA API catalog. Alternatively, they can download NIM and self-host the models, deploying them quickly with Kubernetes on major cloud providers or on-premises to reduce development time, complexity, and cost.
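
One way to move between the hosted APIs and a self-hosted deployment without touching application logic is to resolve the endpoint from configuration. The sketch below is only an illustration of that idea: the environment variable names `NIM_BASE_URL` and `NVIDIA_API_KEY`, the hosted URL, and the bearer-token header are assumptions made for this example, not details confirmed by this article.

```python
from dataclasses import dataclass
from typing import Mapping

# Assumed hosted endpoint; verify against the API catalog documentation.
HOSTED_BASE_URL = "https://integrate.api.nvidia.com/v1"


@dataclass(frozen=True)
class EndpointConfig:
    base_url: str
    headers: dict


def resolve_endpoint(env: Mapping[str, str]) -> EndpointConfig:
    """Prefer a self-hosted URL when one is configured, else use the hosted API."""
    self_hosted_url = env.get("NIM_BASE_URL")  # hypothetical variable name
    if self_hosted_url:
        # Self-hosted deployment: assumed here to need no API key.
        return EndpointConfig(self_hosted_url.rstrip("/"), {})

    api_key = env.get("NVIDIA_API_KEY")  # hypothetical variable name
    if not api_key:
        raise ValueError("set NIM_BASE_URL or NVIDIA_API_KEY")
    # Hosted API: assumed to authenticate with a bearer token.
    return EndpointConfig(HOSTED_BASE_URL, {"Authorization": f"Bearer {api_key}"})


if __name__ == "__main__":
    # Demo with literal mappings instead of the real process environment.
    print(resolve_endpoint({"NIM_BASE_URL": "http://localhost:8000/v1/"}))
    print(resolve_endpoint({"NVIDIA_API_KEY": "placeholder-key"}))
```

Keeping this choice in configuration means a prototype built against the hosted APIs could later be pointed at an on-premises or cloud Kubernetes deployment by changing one setting.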

NIM microservices streamline AI model deployment by packaging algorithmic, system, and runtime optimizations and by exposing industry-standard APIs. This lets developers integrate NIM into their existing applications and infrastructure without extensive customization or specialized expertise.

Businesses can use NIM to optimize their AI infrastructure for maximum efficiency and cost-effectiveness without having to worry about the complexities of AI model development or containerization. On top of accelerated AI infrastructure, NIM improves performance and scalability while lowering hardware and operating costs.

For companies that want to tailor models to their enterprise applications, NVIDIA offers microservices for model customization across different domains. NVIDIA NeMo provides fine-tuning with proprietary data for LLMs, speech AI, and multimodal models. NVIDIA BioNeMo accelerates drug discovery with a growing collection of models for generative biology, chemistry, and molecular prediction. NVIDIA Picasso speeds up creative workflows with Edify models. Because these models are trained on licensed libraries from visual content providers, they can be used to deploy customized generative AI models for visual content creation.