GPUs for AI Inference

AI model architectures are advancing rapidly, driven by breakthroughs such as the Transformer and by the fast growth of high-quality training data. In generative AI, for instance, large language models (LLMs) have been growing by up to 10x per year. Businesses are integrating these models into their products and services and need to serve them safely and efficiently to customers around the world, which is fueling exponential growth in demand for AI-accelerated computing for both training and inference.

The latest MLPerf 3.1 Inference results show how Google’s system innovations help our customers keep pace with the ever-growing compute demands of the newest AI models. Compared with existing offerings, Google Cloud inference systems deliver 2x to 4x higher performance and more than 2x better cost-efficiency. At Google Cloud, we are proud to be the only cloud provider offering a broad range of high-performance, cost-effective, and scalable AI inference services powered by both NVIDIA GPUs and Google Cloud’s purpose-built Tensor Processing Units (TPUs).

AI inference using GPU acceleration on Google Cloud

Google Cloud and NVIDIA continue to work together to give our customers the most advanced GPU-accelerated inference platform. In addition to the A2 VM, powered by the NVIDIA A100 GPU, we recently launched the G2 VM, the first and only cloud offering powered by the NVIDIA L4 Tensor Core GPU. Our newest A3 VMs, powered by the H100 GPU, are in private preview and will be publicly available next month. Google Cloud is the only cloud provider to offer all three of these NVIDIA-powered AI accelerators.

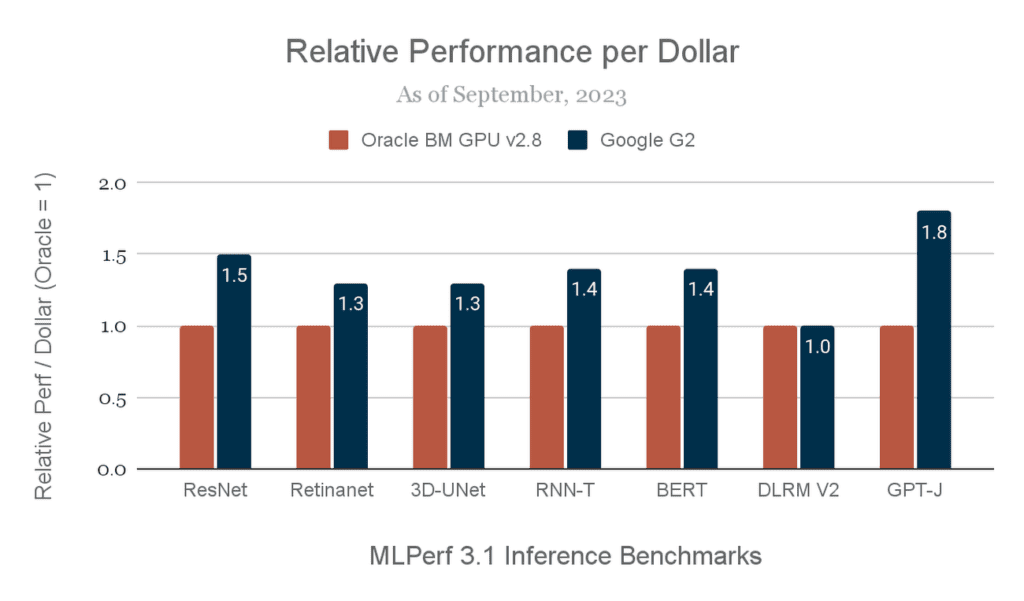

Thanks to the H100 GPU’s strong MLPerf 3.1 Inference Closed results, the A3 VM delivers 1.7x to 3.9x the performance of the A2 VM on demanding inference workloads. For customers who want to maximize inference cost-efficiency, the Google Cloud G2 VM powered by the L4 GPU is a strong choice. NVIDIA’s MLPerf 3.1 results for the L4 GPU underline this, showing up to 1.8x better performance per dollar than a comparable public cloud inference offering. Google Cloud customers such as Bending Spoons are using G2 VMs to roll out new AI-powered user experiences.

We derived the performance per dollar metrics used throughout this blog post from the MLPerf v3.1 Inference Closed results. All prices were accurate at the time of publication. Performance per dollar is not an official MLPerf metric, and the MLCommons Association has not verified it.
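As a rough illustration of how a performance per dollar figure of this kind can be computed, the sketch below divides throughput by hourly price and compares two systems. The function names and every number in it are hypothetical placeholders chosen for the example; they are not MLPerf results or Google Cloud prices, and the article does not publish its exact formula.

```python
def perf_per_dollar(queries_per_second: float, price_per_hour_usd: float) -> float:
    """Queries served per dollar: throughput (QPS) * 3600 s / hourly price."""
    if price_per_hour_usd <= 0:
        raise ValueError("price_per_hour_usd must be positive")
    return queries_per_second * 3600.0 / price_per_hour_usd


def relative_gain(candidate: float, baseline: float) -> float:
    """How many times better the candidate's perf/$ is than the baseline's."""
    return candidate / baseline


# Hypothetical placeholder inputs, NOT measured results or real prices.
baseline = perf_per_dollar(queries_per_second=100.0, price_per_hour_usd=5.0)
candidate = perf_per_dollar(queries_per_second=120.0, price_per_hour_usd=3.0)
gain = relative_gain(candidate, baseline)
print(f"relative performance per dollar: {gain:.2f}x")
```

Note that a system can improve on this metric either by raising throughput or by lowering price, so two systems with equal QPS can still differ widely in performance per dollar.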

Scalable, affordable AI inference using Cloud TPUs

The new Cloud TPU v5e, announced at Google Cloud Next, enables inference for a wide range of AI workloads, including the latest state-of-the-art LLMs and generative AI models. Cloud TPU v5e is designed to be efficient, scalable, and versatile, and delivers high-throughput, low-latency inference. Each TPU v5e chip provides up to 393 trillion int8 operations per second (TOPS), enabling fast predictions for even the most complex models.

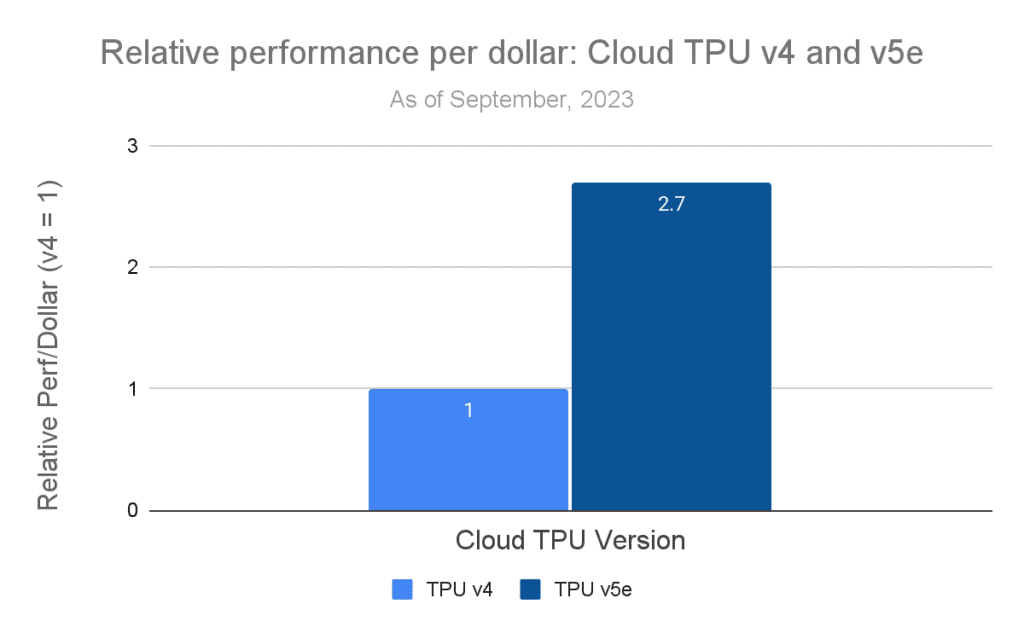

Thanks to our high-speed inter-chip interconnect (ICI), many TPUs can work closely together, which lets Cloud TPU v5e scale out to the largest models. For our MLPerf 3.1 inference results, the 6-billion-parameter GPT-J LLM benchmark ran on four TPU v5e chips. Compared to TPU v4, TPU v5e delivers 2.7x higher performance per dollar.

As with the G2 performance per dollar data, Google derived the TPU performance per dollar metrics from the MLPerf™ v3.1 Inference Closed results. All prices were accurate at the time of publication. Performance per dollar is not an official MLPerf™ metric, and the MLCommons Association has not verified it.

The 2.7x gain in performance per dollar is made possible by an optimized inference software stack that takes full advantage of the TPU v5e hardware, allowing it to match the QPS of the Cloud TPU v4 system on the GPT-J LLM benchmark. The inference stack uses SAX, a framework created by Google DeepMind for high-performance AI inference, and XLA, Google’s AI compiler. Key optimizations include:

- Transformer operator fusions and XLA optimizations.

- Post-training weight quantization using int8 precision.

- High-performance sharding on the 2×2 topology using GSPMD.

- Bucketized execution of batched prefix computation and decoding in SAX.

- SAX’s dynamic batching.
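Two of the optimizations listed above, int8 weight quantization and bucketized execution, can be sketched in a few lines. The code below is a minimal, framework-free illustration under stated assumptions: it uses symmetric per-tensor quantization and pads each request to the smallest fixed-length bucket that fits it. It is not SAX or XLA code, and all function names and bucket sizes are hypothetical.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of float weights to [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale maps the largest magnitude to 127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [q * scale for q in quantized]


def pick_bucket(length: int, buckets: Sequence[int]) -> int:
    """Return the smallest bucket size that can hold `length` tokens."""
    for bucket in sorted(buckets):
        if length <= bucket:
            return bucket
    raise ValueError(f"sequence of length {length} exceeds the largest bucket")


def pad_to_bucket(tokens: Sequence[int], buckets: Sequence[int],
                  pad_id: int = 0) -> List[int]:
    """Pad a token sequence up to its bucket size with `pad_id`."""
    bucket = pick_bucket(len(tokens), buckets)
    return list(tokens) + [pad_id] * (bucket - len(tokens))


# Hypothetical example values, chosen only for illustration.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)

padded = pad_to_bucket([11, 12, 13, 14, 15], buckets=(4, 8, 16))
print(quantized, padded)
```

Storing weights as int8 cuts their memory footprint to a quarter of float32 at the cost of a rounding error of at most half the scale per weight. Bucketing trades some padding for a small, fixed set of input shapes, which suits compilers that specialize programs to static shapes.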

Although these results showcase inference performance in four-chip configurations, TPU v5e scales seamlessly over our ICI from 1 chip to 256 chips, letting the platform flexibly support a range of inference and training requirements. A 256-chip TPU v5e pod in a 16×16 topology can serve models with up to 2 trillion parameters.
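A back-of-the-envelope calculation helps show why a 2-trillion-parameter model is plausible on 256 chips. The sketch below uses the chip count and the 393 int8 TOPS figure from this article; the 16 GB of HBM per chip is an assumption taken from outside the article, and the estimate covers weights only, ignoring activations and any key-value cache.

```python
CHIPS_PER_POD = 256            # from the article: 16x16 topology
INT8_TOPS_PER_CHIP = 393       # from the article
HBM_GB_PER_CHIP = 16           # ASSUMPTION: not stated in this article
PARAMS = 2_000_000_000_000     # 2 trillion parameters


def weights_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


pod_hbm_gb = CHIPS_PER_POD * HBM_GB_PER_CHIP
pod_int8_tops = CHIPS_PER_POD * INT8_TOPS_PER_CHIP

int8_weights_gb = weights_gb(PARAMS, bytes_per_param=1)
bf16_weights_gb = weights_gb(PARAMS, bytes_per_param=2)

print(f"pod HBM (assumed): {pod_hbm_gb} GB, peak int8: {pod_int8_tops} TOPS")
print(f"int8 weights: {int8_weights_gb:.0f} GB, fits: {int8_weights_gb < pod_hbm_gb}")
print(f"bf16 weights: {bf16_weights_gb:.0f} GB, fits: {bf16_weights_gb < pod_hbm_gb}")
```

Under these assumptions, int8 weights occupy roughly half of the pod's aggregate memory, while 16-bit weights leave almost no headroom, which is one reason quantization matters at this scale.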

With our newly released multi-slice training and multi-host inference software, Cloud TPU v5e can scale further to multiple 256-chip pods, letting customers use the same technology Google relies on internally to meet the demands of its largest and most complex model training and serving systems.

Increasing the pace of your AI innovation

With a wide selection of high-performance GPU- and TPU-powered AI inference options, Google Cloud is well positioned to help businesses accelerate their AI workloads at scale.

YouTube uses the TPU v5e platform to serve recommendations to billions of users on the YouTube homepage and WatchNext. TPU v5e serves up to 2.5x more queries for the same cost than the previous generation.

When executing inference on our production model, Cloud TPU v5e consistently provided up to 4x more performance per dollar than comparable solutions on the market.

The Google Cloud software stack is tuned for maximum performance and efficiency and takes full advantage of the TPU v5e hardware, which was purpose-built to accelerate the most advanced AI and ML models. This powerful and versatile combination of hardware and software dramatically shortened our time to solution. Instead of spending weeks hand-tuning custom kernels, we optimized our model within hours to meet and exceed our inference performance targets.

Using G2 VMs, we have been able to cut processing latency by up to 15 seconds per task. Google Cloud has also been instrumental in helping us scale smoothly up to 32,000 GPUs at peak times, such as when our Remini app shot to the top of the U.S. App Store, and back down to a daily average of 2,000 GPUs.
