Mistral AI

Mistral AI, a French AI startup, aims to push publicly available models to state-of-the-art performance. The company specializes in building fast and secure large language models (LLMs) for a range of applications, from chatbots to code generation.

Mistral AI Models on Amazon Bedrock

AWS is pleased to announce that two high-performing Mistral AI models, Mistral 7B and Mixtral 8x7B, will soon be available on Amazon Bedrock. Mistral AI will be the seventh foundation model provider on Amazon Bedrock, joining AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon. These two models give you more choice when selecting the LLM that best fits your use case as you build and scale generative AI applications with Amazon Bedrock.

Overview of Mistral AI Models

Here is a brief overview of the two Mistral AI models:

Mistral 7B

Mistral 7B, the first foundation model from Mistral AI, supports English text generation tasks and has natural coding capabilities. It is optimized for low latency, a low memory requirement, and high throughput for its size. This model supports a wide range of use cases, including text summarization, classification, text completion, and code completion.

Mixtral 8x7B

Mixtral 8x7B is a popular, high-quality sparse Mixture-of-Experts (MoE) model that is well suited to text completion, text classification, question answering, and code generation.

Choosing the right foundation model is key to building successful applications. Here are some highlights that show why Mistral AI models might be a good fit for your use case:

Cost-performance balance: Mistral AI's models strike a notable balance between cost and performance. The sparse MoE architecture used in Mixtral 8x7B makes it efficient, affordable, and scalable.

Fast inference: Mistral AI models are designed for low latency and fast inference. They also offer high throughput and a low memory requirement for their size. This matters most when you scale your use cases to production.

Transparency and customizability: Mistral AI models are transparent and customizable, which helps organizations meet strict regulatory requirements.

Accessibility: Mistral AI models are accessible to a broad range of users, which helps businesses of all sizes integrate generative AI features into their applications.

Publicly available Mistral AI models are coming soon to Amazon Bedrock.

Mixtral 8x7B

Mixtral 8x7B is a fast, versatile model suited to a wide range of use cases. It is multilingual, has natural coding abilities, and matches or outperforms Llama 2 70B on all benchmarks while being six times faster. It handles sequence lengths of up to 32k tokens. You can use it through Mistral AI's API or deploy it yourself, since its weights are released under the Apache 2.0 license.

Mixtral of Experts

A High-Quality Sparse Mixture of Experts

Mistral AI continues its mission to deliver the best open models to the developer community. Advancing AI requires new technical approaches that go beyond reusing well-known architectures and training paradigms. Above all, it requires letting the community benefit from original models to foster new inventions and applications.

Today, the team is pleased to release Mixtral 8x7B, a high-quality sparse mixture-of-experts model (SMoE) with open weights, licensed under Apache 2.0. Mixtral outperforms Llama 2 70B on most benchmarks with six times faster inference. It is the strongest open-weight model with a permissive license and the best model overall in terms of cost/performance trade-offs. In particular, it matches or outperforms GPT-3.5 on most standard benchmarks.

Mixtral has the following capabilities:

- It gracefully handles a context of 32k tokens.

- It can handle Spanish, German, Italian, French, and English.

- It shows strong performance in code generation.

- It can be fine-tuned into an instruction-following model that achieves a score of 8.3 on MT-Bench.

Expanding the use of sparse architectures in open models

Mixtral is a sparse mixture-of-experts network. It is a decoder-only model in which the feedforward block picks from a set of eight distinct groups of parameters. At every layer, for every token, a router network chooses two of these groups (the “experts”) to process the token and combines their outputs additively.
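The routing step described above can be sketched in plain Python. This is a toy illustration, not Mixtral's implementation: the vectors are tiny, the experts are stand-in functions, and `top2_moe_layer` is a hypothetical name. Turning the two selected router scores into weights with a softmax is an assumption of this sketch; the text above only says that the two experts' outputs are combined additively.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top2_moe_layer(
    token: Vector,
    router_weights: Sequence[Vector],  # hypothetical: one gating vector per expert
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    # Router: one score (logit) per expert, the dot product of token and gating vector.
    logits = [sum(t * w for t, w in zip(token, g)) for g in router_weights]
    # Keep only the k highest-scoring experts for this token.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Assumption: normalise the chosen experts' scores into weights with a softmax.
    gates = softmax([logits[i] for i in chosen])
    # Only the chosen experts run; their weighted outputs are added together.
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out


if __name__ == "__main__":
    # Eight toy "experts": expert i multiplies its input by (i + 1).
    experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(8)]
    # Router vectors chosen so that experts 7 and 6 score highest for this token.
    router = [[float(i), 0.0] for i in range(8)]
    print(top2_moe_layer([1.0, 0.0], router, experts))
```

However many experts exist, only the two highest-scoring ones are evaluated for a given token. That is the property that keeps per-token compute close to that of a much smaller dense model.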

This technique increases a model's parameter count while keeping cost and latency under control, because the model uses only a fraction of its total parameters for each token. Concretely, Mixtral has 46.7B total parameters but uses only 12.9B of them per token. It therefore processes input and generates output at the same speed and for the same cost as a 12.9B model.
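The two parameter counts can be related with a little arithmetic. Assume (this simplification is not stated in the source) that all eight experts are the same size E and that every non-expert weight, such as attention, embeddings, norms, and the router, is shared. Then total = shared + 8E and active = shared + 2E, and the two published figures determine both unknowns:

```python
def split_moe_params(total_b: float, active_b: float, n_experts: int = 8, k: int = 2):
    """Solve total = shared + n*E and active = shared + k*E for E and shared.

    Simplified model (assumption): equal-sized experts, all other weights shared.
    E is one expert's feedforward parameters summed over all layers, in billions.
    """
    per_expert = (total_b - active_b) / (n_experts - k)
    shared = active_b - k * per_expert
    return per_expert, shared


if __name__ == "__main__":
    per_expert, shared = split_moe_params(46.7, 12.9)
    print(f"per-expert params ~ {per_expert:.2f}B")  # ~ 5.63B
    print(f"shared params     ~ {shared:.2f}B")      # ~ 1.63B
    print(f"active fraction   ~ {12.9 / 46.7:.1%}")  # ~ 27.6%
```

Under this model, each token touches roughly 28% of the weights, which is where the cost and speed of a 12.9B model come from.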

Mixtral is pre-trained on data extracted from the open Web, with the experts and routers trained simultaneously.

Performance

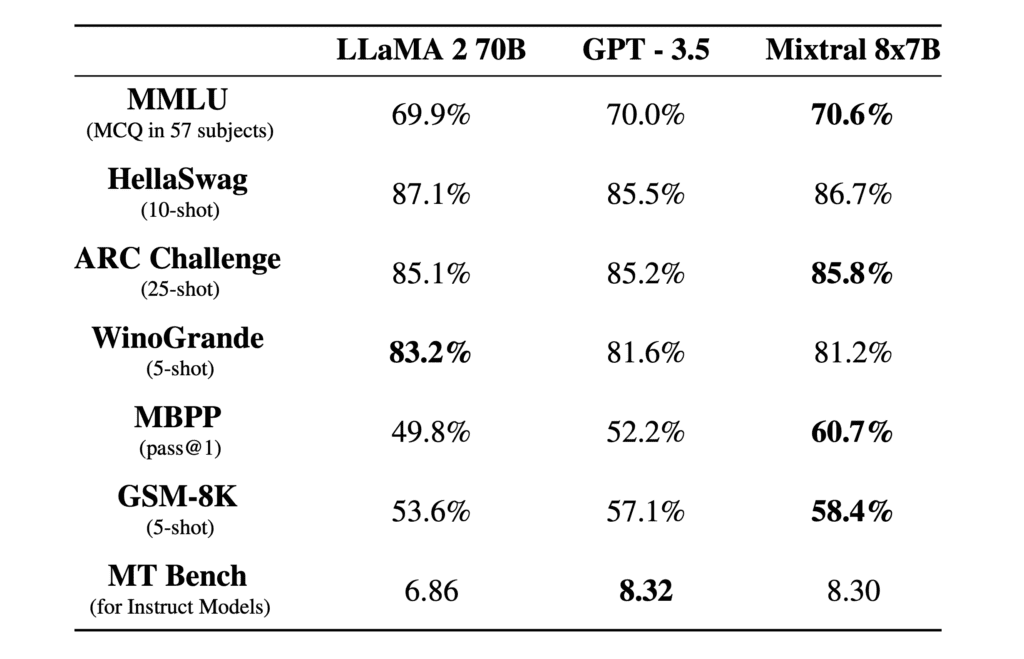

The figure above compares Mixtral with the Llama 2 family and the GPT-3.5 base model. Mixtral matches or outperforms both Llama 2 70B and GPT-3.5 on most benchmarks.

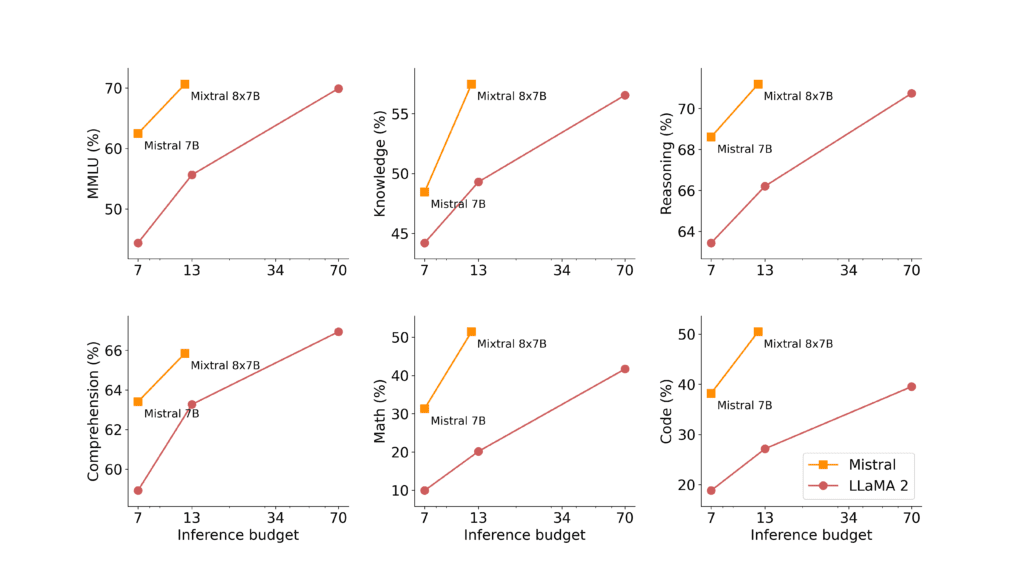

The following figure measures the trade-off between quality and inference budget. Compared with Llama 2 models, Mistral 7B and Mixtral 8x7B belong to a family of highly efficient models.

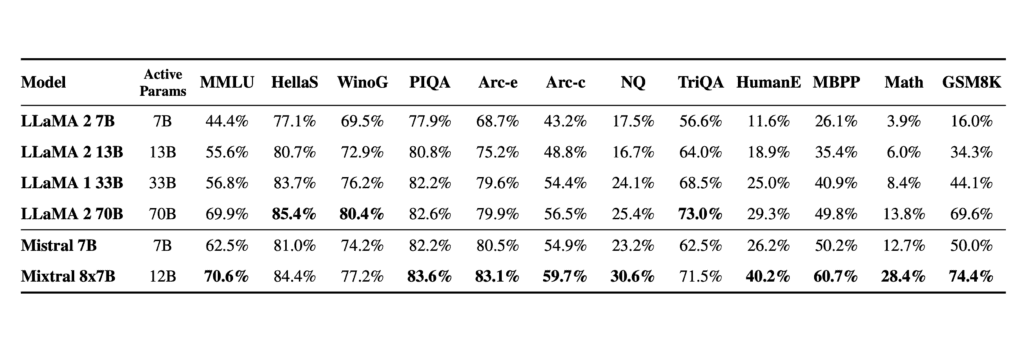

The detailed results for the above figure are provided in the table below.

Compared with Llama 2, Mixtral shows less bias on the BBQ benchmark. Overall, Mixtral displays more positive sentiment than Llama 2 on BOLD, with similar variances within each dimension.

Languages: Mixtral 8x7B masters French, German, Spanish, Italian, and English.

Instructed models

Alongside Mixtral 8x7B, Mistral AI is also releasing Mixtral 8x7B Instruct. This model has been optimized for careful instruction following through supervised fine-tuning and direct preference optimization (DPO). It reaches a score of 8.30 on MT-Bench, making it the best open-source model, with performance comparable to GPT-3.5.
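The paragraph above names direct preference optimization without describing it. As a reference point, here is a minimal sketch of the standard DPO objective (Rafailov et al., 2023) for a single preference pair: a preferred response y_w and a rejected response y_l to the same prompt. It is not Mistral AI's training code. The function name and the default `beta = 0.1` are illustrative assumptions.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative default, not a Mistral AI setting
) -> float:
    """DPO loss: -log sigmoid(beta * [(pi(y_w) - ref(y_w)) - (pi(y_l) - ref(y_l))]).

    Each argument is the summed log-probability of a full response under the
    model being tuned (policy) or the frozen reference model (e.g. the SFT model).
    """
    # Implicit reward margin: how much more the policy prefers y_w over y_l
    # than the reference model does, scaled by beta.
    margin = beta * (
        (policy_chosen_logp - ref_chosen_logp)
        - (policy_rejected_logp - ref_rejected_logp)
    )
    # -log(sigmoid(margin)) == softplus(-margin), computed in a numerically stable way.
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))
```

When the policy still matches the reference model, the margin is zero and the loss equals log 2. Raising the policy's probability of the preferred answer relative to the rejected one drives the loss toward zero, without requiring a separately trained reward model.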

For applications that require a high level of moderation, Mixtral can be prompted to refuse certain outputs. Appropriate preference tuning can also serve this purpose. Keep in mind that without such a prompt, the model will simply follow whatever instructions it is given.
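Prompt-based moderation can be sketched as prepending a guard instruction to the conversation. The helper `build_mixtral_prompt` and the wording of `GUARD_PROMPT` are hypothetical. The `[INST] ... [/INST]` layout follows the format published with Mixtral 8x7B Instruct, but the exact whitespace is an assumption, so prefer your tokenizer's or serving stack's chat template where one exists.

```python
from typing import List, Tuple

# Hypothetical moderation instruction, written for illustration only.
GUARD_PROMPT = (
    "Answer helpfully and truthfully. Refuse requests for harmful, hateful, "
    "or illegal content, and briefly explain why."
)


def build_mixtral_prompt(turns: List[Tuple[str, str]], next_user_msg: str,
                         guard: str = GUARD_PROMPT) -> str:
    """Build a Mixtral-Instruct-style prompt string with a moderation prefix.

    turns: earlier (user, assistant) exchanges, oldest first.
    """
    users = [u for u, _ in turns] + [next_user_msg]
    # This template has no separate system role, so the guard text is
    # prepended to the first user message only.
    users[0] = f"{guard}\n\n{users[0]}"
    parts = ["<s>"]
    for i, (_, assistant) in enumerate(turns):
        parts.append(f"[INST] {users[i]} [/INST] {assistant}</s>")
    # The final user turn is left open for the model to complete.
    parts.append(f"[INST] {users[-1]} [/INST]")
    return "".join(parts)
```

Because the guard text is injected once at the start of the conversation, it conditions every later turn without being repeated. Preference tuning, as noted above, is the alternative when you do not want to rely on the prompt.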

Deploy Mixtral with an open-source deployment stack

To let the community run Mixtral with a fully open-source stack, Mistral AI has submitted changes to the vLLM project that integrate Megablocks CUDA kernels for efficient inference.
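A running vLLM server exposes an OpenAI-compatible HTTP API. You can start one with, for example, `python -m vllm.entrypoints.openai.api_server --model mistralai/Mixtral-8x7B-Instruct-v0.1`. The sketch below queries such a server using only the Python standard library. The local URL, the port, and the sampling values are assumptions; adjust them to your deployment.

```python
import json
import urllib.request

# Assumptions: a vLLM OpenAI-compatible server is running locally on the
# default port and was started with this Hugging Face model ID.
BASE_URL = "http://localhost:8000"
MODEL_ID = "mistralai/Mixtral-8x7B-Instruct-v0.1"


def build_chat_request(prompt: str, max_tokens: int = 256,
                       temperature: float = 0.7) -> urllib.request.Request:
    """Build a POST request for the server's /v1/chat/completions endpoint."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the first reply's text (needs a live server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `chat("Explain a sparse mixture of experts in one sentence.")` returns the model's reply once the server is reachable. Building the request separately from sending it keeps the payload easy to inspect and test.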

SkyPilot lets you deploy vLLM endpoints on any cloud instance.

FAQs

When was Amazon Bedrock introduced?

Amazon Bedrock, one of several new tools for building generative AI applications on AWS, was first made available in preview in April 2023.