Hyperdimensional Computing

Hyperdimensional Computing (HDC) is a machine learning technique modelled on the high-dimensional, distributed way the brain represents information. HDC is built on hypervectors and, like other AI techniques, operates in two stages: training and inference.

HDC Hypervectors: Training and Inference

Hyperdimensional Computing represents data as hypervectors: very long vectors, typically with thousands of dimensions. The fundamental HDC operations (similarity, bundling, binding, and permutation) make it possible to compare hypervectors, combine them, and build an encoding scheme that maps input data into hypervectors.
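The four fundamental operations can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes bipolar hypervectors (components in {-1, +1}) of an assumed dimensionality D = 10,000, cosine similarity, element-wise multiplication for binding, element-wise majority for bundling, and a cyclic shift for permutation. All function names are hypothetical.

```python
import math
import random

D = 10_000  # dimensionality (an assumed, typical value)

def random_hv(seed=None, d=D):
    """A random bipolar hypervector with components in {-1, +1}."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(d)]

def similarity(a, b):
    """Cosine similarity: near 0 for unrelated vectors, 1 for identical ones."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def bind(a, b):
    """Binding: element-wise product; the result is dissimilar to both inputs."""
    return [x * y for x, y in zip(a, b)]

def bundle(*hvs):
    """Bundling: element-wise majority (sign of the sum; ties go to +1).
    The result stays similar to every input."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*hvs)]

def permute(a, k=1):
    """Permutation: cyclic shift right by k positions."""
    k %= len(a)
    return a[-k:] + a[:-k]
```

With `a, b, c = (random_hv(seed=s) for s in (0, 1, 2))`, two independent hypervectors have a similarity close to 0, a bundle of three stays similar (about 0.5) to each of its inputs, and binding is its own inverse, so `bind(bind(a, b), b)` recovers `a`.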

Training:

In training, input feature vectors are encoded into hypervectors and grouped into classes. The hypervectors within each class are then bundled into a single class hypervector that represents all of that class's elements. These class hypervectors form the basis of an HDC classification system.
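Training can be sketched as follows. The article does not say how feature vectors are encoded, so this sketch assumes a hypothetical random-projection encoder (each output dimension is the sign of a dot product with a fixed random ±1 row) and assumes labelled training data, as in the FPGA example later in this article. `make_encoder`, `train`, and the dimensionality D = 4,000 are illustrative choices, not taken from the source.

```python
import random

D = 4_000  # hypervector dimensionality (an assumed value)

def make_encoder(n_features, d=D, seed=0):
    """Hypothetical random-projection encoder: dimension j of the output is
    the sign of the dot product between x and a fixed random +/-1 row B[j]."""
    rng = random.Random(seed)
    B = [[rng.choice((-1, 1)) for _ in range(n_features)] for _ in range(d)]

    def encode(x):
        return [1 if sum(b * xi for b, xi in zip(row, x)) >= 0 else -1 for row in B]

    return encode

def train(samples, encode):
    """Bundle the encoded hypervectors of each class into one class hypervector.
    samples is an iterable of (feature_vector, label) pairs."""
    sums = {}
    for x, label in samples:
        hv = encode(x)
        acc = sums.setdefault(label, [0] * len(hv))
        for j, v in enumerate(hv):
            acc[j] += v
    # Bundling = element-wise sum, then the sign maps it back to a bipolar class hypervector.
    return {label: [1 if s >= 0 else -1 for s in acc] for label, acc in sums.items()}
```

Because the class hypervector is a bundle, it stays close to the encodings of that class's samples and far from those of other classes.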

Inference:

Once training has produced class hypervectors from a set of input feature vectors, each new feature vector is encoded into a hypervector in the same way. The new hypervector is compared with every class hypervector, and the data is assigned to the class it is most similar to. This makes for a fast, efficient, and reliable classification system.
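Inference therefore reduces to a nearest-match search over the class hypervectors. A minimal sketch, assuming cosine similarity as the similarity measure; `classify` and the `flip` helper (which simulates a noisy new sample of a known class) are hypothetical names.

```python
import math
import random

def cosine(a, b):
    """Cosine similarity between two hypervectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def classify(query_hv, class_hvs):
    """Return the label whose class hypervector is most similar to query_hv."""
    return max(class_hvs, key=lambda label: cosine(query_hv, class_hvs[label]))

def flip(hv, p, seed=None):
    """Simulate a new, noisy sample: flip each bipolar component with probability p."""
    rng = random.Random(seed)
    return [-v if rng.random() < p else v for v in hv]
```

Even with 30% of its components flipped, a query derived from one class hypervector is still matched to that class, because unrelated hypervectors have a similarity near 0.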

The inherent advantages of a classification system based on HDC are as follows:

Efficiency:

Compared with conventional techniques, hypervectors provide an efficient, compact representation of complex patterns, which makes processing and classification tasks more efficient.

Low Power:

Because HDC operations are simple and, for binary hypervectors, reduce to bitwise logic (XOR, AND, etc.), Hyperdimensional Computing can be implemented in hardware with very low power consumption. This is especially valuable for edge computing applications, wearable technology, and Internet of Things devices, where energy efficiency is critical.
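With binary hypervectors the core operations really are single bitwise operations. The sketch below is illustrative only: it stores an assumed D = 10,000-bit hypervector in one Python integer, binds with XOR, bundles three vectors with a bitwise majority built from AND and OR, and measures dissimilarity as Hamming distance (the popcount of an XOR). The function names are hypothetical.

```python
import random

D = 10_000  # dimensionality in bits (an assumed value)

def random_binary_hv(seed=None, d=D):
    """A random binary hypervector, stored as the bits of a d-bit Python integer."""
    return random.Random(seed).getrandbits(d)

def bind(a, b):
    """Binding of binary hypervectors: bitwise XOR (XOR is its own inverse)."""
    return a ^ b

def bundle3(a, b, c):
    """Bundling three binary hypervectors: bitwise majority built from AND and OR."""
    return (a & b) | (a & c) | (b & c)

def hamming(a, b):
    """Hamming distance: the number of set bits (popcount) in a XOR b."""
    return bin(a ^ b).count("1")
```

Two random binary hypervectors differ in about half their bits, while the majority of three differs from each of its inputs in only about a quarter of them.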

Extremely Parallel:

Hyperdimensional Computing’s distributed architecture permits parallel processing, much like the brain’s. For classification problems, this can greatly reduce computation time.

Quick Learning:

Unlike deep learning models, which often need large amounts of training data, Hyperdimensional Computing can perform one-shot or few-shot learning, in which the system learns from a very small number of samples. This makes HDC especially useful when data is limited or changes quickly.
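Few-shot learning follows from the bundling model: a class is simply a running sum of its examples' hypervectors, so one example is enough to define it, and a new class can be added without retraining the existing ones. A minimal sketch over already-encoded bipolar hypervectors; `FewShotHDC` and `noisy` are hypothetical names, and the 20% noise level used to simulate new samples is an arbitrary assumption.

```python
import math
import random

class FewShotHDC:
    """Few-shot HDC classifier over already-encoded hypervectors (hypothetical sketch).
    Each class is a running element-wise sum of its examples, so one example is
    enough to create a class, and learning a new class leaves the others untouched."""

    def __init__(self):
        self.sums = {}

    def learn(self, label, hv):
        acc = self.sums.setdefault(label, [0] * len(hv))
        for j, v in enumerate(hv):
            acc[j] += v

    def predict(self, hv):
        def cosine(a, b):
            dot = sum(x * y for x, y in zip(a, b))
            return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))
        return max(self.sums, key=lambda label: cosine(hv, self.sums[label]))

def noisy(hv, p, seed=None):
    """A new sample of the same class: each bipolar component flipped with probability p."""
    rng = random.Random(seed)
    return [-v if rng.random() < p else v for v in hv]
```

Learning a single noisy example per class is enough for later noisy samples of the same class to be recognised, and calling `learn` with a new label adds that class immediately.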

Robust and Reliable:

Thanks to its high dimensionality, Hyperdimensional Computing is inherently robust to errors and noise. Because information is spread across thousands of dimensions, small changes or distortions in the input data do not substantially alter the overall representation, so classification remains reliable even in noisy conditions.
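The robustness claim can be made concrete. If each component of a bipolar hypervector of dimension d is flipped independently with probability p, the expected cosine similarity to the original is 1 - 2p, whereas two unrelated random hypervectors have a similarity near 0 with a spread of about 1/√d. The sketch below checks this numerically with an assumed d = 10,000; the helper names are hypothetical.

```python
import random

def random_hv(d, seed=None):
    """A random bipolar hypervector of dimension d."""
    rng = random.Random(seed)
    return [rng.choice((-1, 1)) for _ in range(d)]

def flip_fraction(hv, p, seed=None):
    """Copy of a bipolar hypervector with each component flipped with probability p."""
    rng = random.Random(seed)
    return [-v if rng.random() < p else v for v in hv]

def similarity(a, b):
    """Cosine similarity of two bipolar vectors of length d: dot(a, b) / d."""
    return sum(x * y for x, y in zip(a, b)) / len(a)
```

Flipping 10% of the components still leaves a similarity of about 0.8 to the original, far above the near-zero similarity to any unrelated hypervector.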

Furthermore, manipulating hypervectors involves a large number of simple, repetitive operations, which makes HDC well suited to hardware acceleration.

Because Hyperdimensional Computing applications are efficient, low-power, highly parallel, and easy to implement in hardware, they are a natural fit for Altera FPGAs, which are also highly parallel and efficient.

Utilising Altera FPGAs and oneAPI

The Intel oneAPI Base Toolkit simplifies the development of high-performance, cross-architecture applications. It implements industry standards such as SYCL and works with FPGAs, CPUs, GPUs, and AI accelerators.

A key advantage of oneAPI is that it simplifies the development process. With oneAPI, developers can build SYCL/C++ applications that run on diverse architectures without learning multiple programming languages. Because code is written once and runs on multiple processors, this saves developers a great deal of time and effort. They can also deploy applications on the most economical and efficient platform without having to modify the code.

With the Intel oneAPI Base Toolkit, Hyperdimensional Computing applications written in SYCL/C++ can be implemented directly on Altera FPGAs.

To get started with oneAPI for FPGAs, see Boosting Productivity with High-Level Synthesis in Intel Quartus with oneAPI for information on tutorial examples, self-start learning videos, and reference designs.

Hyperdimensional Computing Image Classification Using Altera FPGAs

Image classification is one practical application of HDC. In this example, developed by Ian Peitzsch at the Centre for Space, High-Performance, and Resilient Computing (SHREC), an HDC image classification system was implemented on an Altera FPGA for both training and inference.

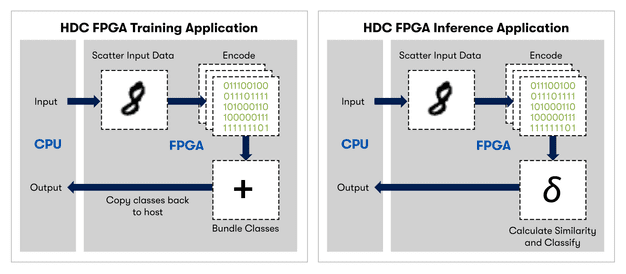

The data flow is similar in both scenarios, but the computations differ. The host streams feature vectors to the FPGA, where each vector is routed to the parallel encoding compute units: eight in the training flow and twenty-five in the inference flow.

Training:

- The FPGA receives a stream of feature vectors from the host.

- Each vector is sent to the parallel encoding compute units (CUs).

- The partial hypervectors output by the encoding CUs are bundled, and a label is produced.

- The bundled hypervector is sent to the class associated with that label.

- After all the training data has been processed, the class hypervectors are streamed to the host to be normalised.
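The accumulate-then-normalise structure of the training flow can be sketched in plain Python. This is a hypothetical sketch, not the SHREC implementation: `encode` stands in for the encoding CUs, each encoded sample is bundled (added element-wise) into the class selected by its label, and a separate host-side step scales each class hypervector to unit length. Unit L2 normalisation is an assumption, since the article does not say which normalisation is used.

```python
import math

def train_stream(stream, encode, n_classes, d):
    """Accumulate encoded samples into per-class hypervectors (hypothetical sketch).

    stream -- iterable of (feature_vector, label) pairs, label in range(n_classes)
    encode -- maps a feature vector to a length-d hypervector (list of floats)
    """
    classes = [[0.0] * d for _ in range(n_classes)]
    for x, label in stream:
        acc = classes[label]
        for j, v in enumerate(encode(x)):
            acc[j] += v  # bundling by element-wise addition
    return classes

def normalise(classes):
    """Host-side step: scale each class hypervector to unit L2 norm
    (all-zero vectors, from classes with no samples, are left as-is)."""
    out = []
    for hv in classes:
        n = math.sqrt(sum(v * v for v in hv))
        out.append([v / n for v in hv] if n > 0 else list(hv))
    return out
```

Keeping the per-sample work to an addition means the device only streams data through accumulators, while the one-off normalisation is left to the host.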

Inference:

- The FPGA receives a stream of feature vectors from the host.

- Each vector is sent to the parallel encoding CUs.

- The data is routed to the classification kernel, which forms a single hypervector.

- This hypervector is compared with every class hypervector, and the prediction is the class with the greatest similarity.

- The predictions are then streamed back to the host.
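The inference flow can be sketched as a generator that yields one prediction per incoming feature vector, mirroring the stream of predictions returned to the host. Again this is a hypothetical sketch (`infer_stream` and `encode` are illustrative names), and it assumes the class hypervectors have already been normalised to unit length.

```python
def infer_stream(stream, encode, unit_classes):
    """Yield one predicted class index per incoming feature vector (hypothetical sketch)."""
    for x in stream:
        hv = encode(x)
        # The classes are unit-norm, so the query's own norm scales every score equally:
        # the largest dot product therefore also has the largest cosine similarity.
        scores = [sum(h * c for h, c in zip(hv, cls)) for cls in unit_classes]
        yield max(range(len(scores)), key=scores.__getitem__)
```

Normalising the classes once means each prediction needs only dot products and a maximum, with no per-query division.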

Beyond the fact that Hyperdimensional Computing applications are often well suited to hardware implementation, intrinsic properties of both oneAPI and FPGAs make HDC classification algorithms a strong fit for Altera FPGAs:

- oneAPI supports the Unified Shared Memory (USM) feature of the SYCL language. Unlike C or C++ alternatives, SYCL USM lets the host and accelerator share the same memory, both in the code and in the final hardware. This reduces system latency, improving overall performance, and enables the simple, industry-standard technique of accessing data explicitly through a pointer, whether on the host or the accelerator.

- Encoding is the bottleneck. Because each dimension of a hypervector can be encoded independently, the parallel fabric of a programmable FPGA can run many compute units side by side, and using several compute units greatly reduces inference time.
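Encoding parallelises well because every output dimension is an independent computation. The sketch below splits the dimensions across a configurable number of workers, standing in for CUs, and concatenates their partial hypervectors in order. It assumes a random-projection encoder, which the article does not specify; the function names are hypothetical, and Python threads only illustrate the partitioning (CPython's global interpreter lock prevents a real speed-up for this pure-Python arithmetic).

```python
import random
from concurrent.futures import ThreadPoolExecutor

def make_projection(n_features, d, seed=0):
    """Hypothetical random-projection base matrix: one random row per output dimension."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(d)]

def encode_dims(B, x, start, stop):
    """Encode only dimensions [start, stop): each one is an independent dot product."""
    return [sum(b * xi for b, xi in zip(B[j], x)) for j in range(start, stop)]

def encode_parallel(B, x, n_cu=8):
    """Split the d output dimensions across n_cu workers (standing in for CUs)
    and concatenate their partial hypervectors in order."""
    d = len(B)
    bounds = [(k * d // n_cu, (k + 1) * d // n_cu) for k in range(n_cu)]
    with ThreadPoolExecutor(max_workers=n_cu) as pool:
        parts = list(pool.map(lambda se: encode_dims(B, x, *se), bounds))
    return [v for part in parts for v in part]
```

With `n_cu=8` or `n_cu=25` (the CU counts of the training and inference flows), the result is identical to encoding all dimensions serially; only the division of work changes.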

During both training and inference, the encoding stage was parallelised, and SYCL USM support on the Altera FPGA helped deliver a low-latency solution.

Altera FPGAs Perform Well at AI Inference

In a real-world evaluation¹, this Hyperdimensional Computing image classification algorithm was implemented on CPU, GPU, and FPGA resources:

- Intel Xeon Platinum 8256 CPU (Cascade Lake, 3.8 GHz, 4 cores).

- Eleventh-generation Intel UHD 630 GPU.

- Intel Stratix 10 GX FPGA.

An HDC classification model with 2,000-dimensional hypervectors of 32-bit floating-point values was used in all implementations. Using the NeuralHD retraining algorithm, all three implementations reached a similar accuracy of about 94-95%.
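A common form of HDC retraining refines the class hypervectors by passing over the training set again and correcting mistakes. The sketch below shows a perceptron-style rule of this kind; it is a simplified stand-in, not the NeuralHD algorithm, which adds further steps not shown here. On each misprediction, the sample's hypervector is added to the correct class and subtracted from the predicted one. `retrain_epoch`, `predict`, and the learning rate `lr` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity; defined as 0 when either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)

def predict(hv, classes):
    """Index of the class hypervector most similar to hv."""
    return max(range(len(classes)), key=lambda k: cosine(hv, classes[k]))

def retrain_epoch(samples, classes, lr=1.0):
    """One pass of perceptron-style HDC retraining; updates classes in place and
    returns the number of mispredictions seen during the pass."""
    errors = 0
    for hv, label in samples:
        pred = predict(hv, classes)
        if pred != label:
            errors += 1
            for j, v in enumerate(hv):
                classes[label][j] += lr * v  # pull the correct class towards the sample
                classes[pred][j] -= lr * v   # push the wrong class away from it
    return errors
```

Repeating `retrain_epoch` until it returns 0 (or a fixed number of passes) is the usual stopping rule for this kind of loop.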

The results highlight the advantages of using an FPGA for the inference phase.

For training, the key metric is the total time taken to train the model.

For inference, the key performance indicator is latency.

In training, the FPGA achieved an 18× speed-up and the Intel GPU a 60× speed-up over the CPU. However, the CPU and FPGA implementations reached about 97% accuracy, whereas the GPU implementation reached only about 94%.

Because of memory constraints in this training scenario, the FPGA could use only eight CUs in parallel during encoding. A larger FPGA could accommodate more parallel CUs and potentially shorten training time, but the evaluation was carried out on fixed resources in Intel DevCloud, where no larger FPGA was available.

Latency Benefits of FPGAs for AI

During inference, the FPGA has the lowest latency, a 3× speed-up over the CPU. The GPU proves to be overkill for inference: it takes significantly longer than both the FPGA and the CPU, a slow-down rather than a speed-up.

These findings again demonstrate the advantages of using an FPGA for AI inference. Combining strong performance and generally lower costs with license-free oneAPI development tools makes Altera FPGAs the best option for fast, efficient AI inference in data centre and edge applications. This matters because an AI algorithm is trained once but then deployed for inference at massive scale.