Google Kubernetes Engine

Ever since generative AI rose to prominence, organizations of all sizes, from startups to major corporations, have moved to harness it by integrating it into their platforms, solutions, and applications. Generative AI truly shines at producing new content by learning from existing content, but it is increasingly important that the content it produces is specific to a domain or subject area.

This blog post explains how to train models on Google Kubernetes Engine (GKE) using NVIDIA accelerated computing and the NVIDIA NeMo framework, illustrating how generative AI models can be customized to your use cases.

Constructing models for generative AI

When building generative AI models, high-quality data (referred to as the “dataset”) is essential. Data in a variety of formats, including text, code, images, and others, is processed, enhanced, and analyzed to improve the model’s output. This data is then fed into a model architecture suited to its modality for training: text for Transformers, for example, or images for GANs (Generative Adversarial Networks).

During training, the model adjusts its internal parameters so that its output aligns with the patterns and structures in the data. A decreasing loss on the training set and better predictions on a test set are indicators of the model’s learning progress. The model is deemed to have converged when its performance stops improving. After that, it might go through additional refinement such as reinforcement learning from human feedback (RLHF). Hyperparameters such as batch size or learning rate can also be tuned to speed up model learning. A framework that provides the necessary constructs and tooling can speed up the process of building and customizing a model, making adoption easier.
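To make the training loop concrete, here is a minimal sketch in plain Python. It fits a toy linear model with mini-batch gradient descent and stops once the validation loss stops improving. The function name `train`, the `patience` and `tol` parameters, and the toy data are illustrative assumptions, not part of NeMo or any other framework.

```python
import random


def train(xs, ys, learning_rate=0.05, batch_size=4,
          max_epochs=500, patience=5, tol=1e-6, seed=0):
    """Fit y ~ w*x + b with mini-batch gradient descent.

    Training stops early once the validation loss has failed to improve
    by more than `tol` for `patience` consecutive epochs (convergence).
    """
    rng = random.Random(seed)
    # Hold out every fourth example as a validation set.
    train_idx = [i for i in range(len(xs)) if i % 4 != 0]
    val_idx = [i for i in range(len(xs)) if i % 4 == 0]

    w, b = 0.0, 0.0                      # the model's internal parameters
    best_val, stale = float("inf"), 0
    history = []                         # validation loss per epoch

    for _ in range(max_epochs):
        rng.shuffle(train_idx)
        for start in range(0, len(train_idx), batch_size):
            batch = train_idx[start:start + batch_size]
            # Gradient of the mean squared error over this mini-batch.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b

        val_loss = sum((w * xs[i] + b - ys[i]) ** 2 for i in val_idx) / len(val_idx)
        history.append(val_loss)
        if val_loss < best_val - tol:
            best_val, stale = val_loss, 0
        else:
            stale += 1
            if stale >= patience:
                break                    # performance stopped improving
    return w, b, history


# Toy dataset generated from y = 3x + 1 (an assumption for illustration).
xs = [i / 10 for i in range(20)]
ys = [3 * x + 1 for x in xs]
w, b, history = train(xs, ys)
print(f"w={w:.3f} b={b:.3f} epochs={len(history)} final_val_loss={history[-1]:.2e}")
```

Changing `learning_rate` or `batch_size` changes how many epochs the loop needs before it stops, which is the hyperparameter effect described above.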

What is the relationship between Kubernetes and Google Kubernetes Engine?

Google Kubernetes Engine is Google’s managed implementation of the open source container orchestration platform Kubernetes. Google built Kubernetes by drawing on years of experience running large-scale production workloads on our own cluster management system, Borg.

NVIDIA NeMo service

NVIDIA NeMo is an end-to-end, open-source platform designed specifically for creating custom, enterprise-class generative AI models. NeMo uses cutting-edge technology from NVIDIA to enable a full workflow, including automated distributed data processing, large-scale bespoke model training, and infrastructure deployment and serving through Google Cloud. For enterprise-level deployments, NeMo can also be used with NVIDIA AI Enterprise software, which is available on the Google Cloud Marketplace.

The modular design of the NeMo framework encourages data scientists, ML engineers, and developers to mix and match the following essential elements when creating AI models:

NVIDIA NeMo framework

Data curation

Extracting, deduplicating, and filtering information from datasets to produce high-quality training data.
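As a rough illustration of deduplication and filtering, the sketch below drops exact duplicates (after whitespace and case normalization) and very short documents. It uses only the Python standard library; the function names and the `min_words` threshold are assumptions made for this example and are not NeMo's data curation tooling.

```python
import hashlib
import re


def normalize(text):
    """Collapse whitespace and lowercase so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def curate(documents, min_words=5):
    """Return documents with short entries and exact duplicates removed."""
    seen = set()
    kept = []
    for doc in documents:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue                     # filter: too short to be useful
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue                     # deduplicate: already kept a copy
        seen.add(digest)
        kept.append(doc.strip())
    return kept


raw = [
    "GKE schedules containers across a cluster of nodes.",
    "gke   schedules containers across a cluster of nodes.",
    "Too short.",
    "NVIDIA GPUs accelerate the training of large models.",
]
print(curate(raw))
```

Production pipelines typically add fuzzy deduplication and quality classifiers on top of exact matching like this.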

Distributed training

Advanced parallelism in training models is achieved by distributing workloads among tens of thousands of compute nodes equipped with NVIDIA graphics processing units (GPUs).
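One common form of this parallelism is data parallelism: each worker computes gradients on its own shard of a batch, and the gradients are averaged before the shared parameters are updated. The sketch below simulates that on a single machine with a one-parameter model. The function names are hypothetical, and real frameworks combine this with other strategies (such as tensor and pipeline parallelism) that are not shown here.

```python
def gradient(w, shard):
    """Gradient of the mean squared error for y ~ w*x over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def shard_evenly(data, num_workers):
    """Split the batch into equal-sized contiguous shards, one per worker."""
    if len(data) % num_workers != 0:
        raise ValueError("batch size must be divisible by the number of workers")
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]


def data_parallel_step(w, data, num_workers, learning_rate):
    """One update step: local gradients, then an averaging all-reduce."""
    shards = shard_evenly(data, num_workers)
    # On a real cluster each of these runs on a different GPU.
    local_grads = [gradient(w, shard) for shard in shards]
    # Stand-in for the all-reduce that a library such as NCCL performs.
    avg_grad = sum(local_grads) / num_workers
    return w - learning_rate * avg_grad


batch = [(float(x), 2.0 * x) for x in range(1, 9)]   # toy data from y = 2x
single = data_parallel_step(0.0, batch, num_workers=1, learning_rate=0.01)
sharded = data_parallel_step(0.0, batch, num_workers=4, learning_rate=0.01)
print(single, sharded)
```

With equal-sized shards, the averaged gradient matches the full-batch gradient up to floating-point rounding, so adding workers changes where the work happens, not the update itself.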

Model customization

Apply methods like P-tuning, SFT (Supervised Fine Tuning), and RLHF (Reinforcement Learning from Human Feedback) to adapt a range of pre-trained foundation models to particular domains.

Deployment

Deployment integrates smoothly with the NVIDIA Triton Inference Server to deliver high throughput, low latency, and accuracy. The NeMo framework also offers guardrails to honor security and safety requirements.

- NeMo helps organizations begin their generative AI journey by fostering innovation, maximizing operational efficiency, and providing easy access to software frameworks.

- To deploy NeMo on an HPC system that might include schedulers such as the Slurm workload manager, we recommend using the ML Solution available through the Cloud HPC Toolkit.

Scalable training with GKE

Building and customizing models requires massively parallel processing, fast memory and storage access, and fast networking. The infrastructure must also meet several other demands, including fault tolerance, coordination of distributed workloads, efficient use of resources, scalability for quicker iterations, and the ability to train very large models.

With a single platform to handle all of their workloads, Google Kubernetes Engine gives customers a more reliable and consistent development process. With its scalability and compatibility with a wide range of hardware accelerators, including NVIDIA GPUs, Google Kubernetes Engine serves as a foundation platform for accelerator orchestration, helping to reduce costs and improve performance.

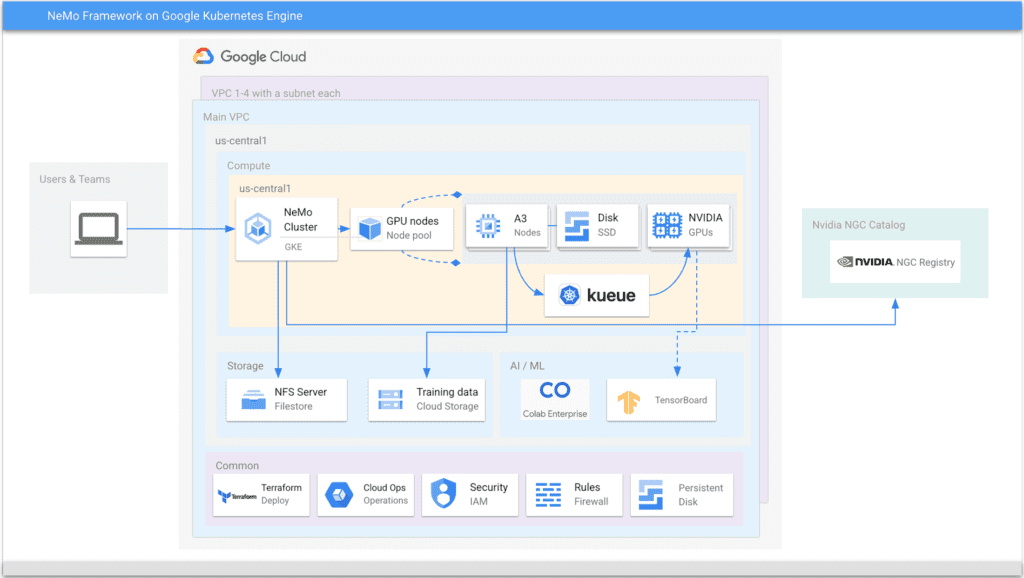

With the aid of Figure 1, let’s examine how Google Kubernetes Engine makes the underlying infrastructure easy to manage:

Compute

- Multi-instance GPUs (MIG): A single NVIDIA H100 or A100 Tensor Core GPU can be partitioned into multiple instances, each with its own high-bandwidth memory, cache, and compute cores.

- Time-sharing GPUs: A single physical GPU node is shared by several containers to maximize efficiency and reduce operating expenses.
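As a hedged illustration of how a workload might request these GPU modes, the sketch below builds two Pod manifests as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts. The node selector keys and values are taken from GKE's GPU sharing documentation as best recalled and should be verified against the current docs. The pod names, the `IMAGE_PLACEHOLDER` image, the `1g.5gb` partition size (an A100 40GB profile), and the limit of two shared clients are assumptions for this example.

```python
import json


def gpu_pod(name, image, node_selector, gpus=1):
    """Build a minimal Pod manifest that requests GPUs on selected nodes."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "nodeSelector": node_selector,
            "containers": [{
                "name": "worker",
                "image": image,  # placeholder, replace with a real image
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


# Assumed label: schedule onto nodes whose GPUs are split into MIG partitions.
mig_pod = gpu_pod(
    "mig-example", "IMAGE_PLACEHOLDER",
    {"cloud.google.com/gke-gpu-partition-size": "1g.5gb"},
)

# Assumed labels: schedule onto nodes whose GPUs are time-shared.
timeshare_pod = gpu_pod(
    "timeshare-example", "IMAGE_PLACEHOLDER",
    {
        "cloud.google.com/gke-gpu-sharing-strategy": "time-sharing",
        "cloud.google.com/gke-max-shared-clients-per-gpu": "2",
    },
)

print(json.dumps(mig_pod, indent=2))
print(json.dumps(timeshare_pod, indent=2))
```

In both cases the node pool itself must be created with the matching GPU partitioning or sharing settings; the selectors only steer pods to such nodes.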

Storage

- Local SSD: for high throughput and I/O requirements

- Cloud Storage FUSE (GCS FUSE): allows file-like operations on objects

Networking

- Network performance can be enhanced by using the GPUDirect-TCPX NCCL plugin, a transport layer plugin that allows direct GPU-to-NIC transfers during NCCL communication.

- To improve network performance between GPU nodes, use a Google Virtual Network Interface Card (gVNIC).

Queuing

In an environment with limited resources, a Kubernetes-native job queueing system is used to coordinate job execution through to completion.
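To show what queueing under a resource limit means in practice, here is a toy simulation in which jobs are admitted in strict submission order against a fixed GPU quota. This is a simplified model written for this post, not the scheduling algorithm of Kueue or any other real queueing system; the job tuples and the quota of eight GPUs are made up.

```python
from collections import deque


def run_queue(jobs, gpu_quota):
    """Simulate strict FIFO admission under a GPU quota.

    `jobs` is a list of (name, gpus, duration) in submission order.
    Returns {name: (start_time, end_time)}.
    """
    for name, gpus, _ in jobs:
        if gpus > gpu_quota:
            raise ValueError(f"{name} can never fit in a quota of {gpu_quota}")

    pending = deque(jobs)
    running = []          # (end_time, name, gpus)
    free = gpu_quota
    now = 0
    schedule = {}

    while pending or running:
        # Admit jobs from the head of the queue while they fit.
        while pending and pending[0][1] <= free:
            name, gpus, duration = pending.popleft()
            free -= gpus
            running.append((now + duration, name, gpus))
            schedule[name] = (now, now + duration)
        # Advance time to the next completion and release its GPUs.
        running.sort()
        end_time, _, gpus = running.pop(0)
        now = end_time
        free += gpus
    return schedule


jobs = [("pretrain", 8, 3), ("tune-a", 4, 2), ("tune-b", 4, 1), ("eval", 8, 1)]
for name, (start, end) in run_queue(jobs, gpu_quota=8).items():
    print(f"{name}: start={start} end={end}")
```

Every job eventually runs to completion, but later jobs wait until enough GPUs are released, which is the behavior a queueing layer provides on a shared cluster.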

Communities, along with Independent Software Vendors (ISVs), have widely adopted GKE as the place to run their frameworks, libraries, and tools. GKE democratizes infrastructure by enabling teams of various sizes to develop, train, and deploy AI models.

Solution architecture

According to industry trends in AI and ML, models get significantly better with increased processing power. With the help of NVIDIA GPUs and Google Cloud’s products and services, GKE makes it possible to train and serve models at a scale that leads the industry.

The reference architecture in Figure 2 shows the main components, common services, and tools used to train the NeMo large language model with Google Kubernetes Engine.

NVIDIA NeMo LLM

- A GKE cluster configured as a regional or zonal cluster, made up of a managed node pool of A3 nodes to handle workloads and a default node pool to handle common services such as DNS pods and custom controllers.

- Each A3 node includes eight NVIDIA H100 Tensor Core GPUs, sixteen local SSDs, and the necessary drivers. On each node, Cloud Storage FUSE allows access to Google Cloud Storage as a file system, and the Filestore CSI driver allows access to fully managed NFS storage.

- Kueue batching for workload management. This is recommended for larger setups shared by multiple teams.

- Filestore mounted on every node to store outputs as well as interim and final logs for monitoring training performance.

- A Cloud Storage bucket holding the training data.

- The training image for the NeMo framework is hosted by NVIDIA NGC.

- TensorBoard can be used to view training logs mounted on Filestore and analyze the training step times and loss.

- Common services include Cloud Ops for viewing logs, IAM for security management, and Terraform for deploying the setup.
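The components listed above can be tied together in a single Kubernetes Job manifest. The sketch below builds one as a Python dictionary for a single-node job: it targets H100 nodes, requests all eight GPUs, mounts a Cloud Storage bucket through the Cloud Storage FUSE CSI driver, mounts a Filestore-backed volume claim for logs, and submits through a Kueue queue. It is not the walkthrough's actual manifest. The job name, image tag, bucket, claim, and queue names are hypothetical, and the label, annotation, and driver names are quoted from the GKE and Kueue documentation as best recalled, so verify them before use.

```python
import json


def nemo_training_job(name, image, bucket, filestore_claim, queue):
    """Build a single-node training Job manifest (illustrative only)."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {
            "name": name,
            # Assumed Kueue label that routes the Job to a local queue.
            "labels": {"kueue.x-k8s.io/queue-name": queue},
        },
        "spec": {
            "suspend": True,  # the queueing system unsuspends the Job on admission
            "template": {
                # Assumed annotation that enables the Cloud Storage FUSE sidecar.
                "metadata": {"annotations": {"gke-gcsfuse/volumes": "true"}},
                "spec": {
                    "restartPolicy": "Never",
                    "nodeSelector": {
                        "cloud.google.com/gke-accelerator": "nvidia-h100-80gb",
                    },
                    "containers": [{
                        "name": "nemo-training",
                        "image": image,
                        "resources": {"limits": {"nvidia.com/gpu": 8}},
                        "volumeMounts": [
                            {"name": "training-data", "mountPath": "/data", "readOnly": True},
                            {"name": "training-logs", "mountPath": "/logs"},
                        ],
                    }],
                    "volumes": [
                        {
                            "name": "training-data",
                            "csi": {
                                "driver": "gcsfuse.csi.storage.gke.io",
                                "readOnly": True,
                                "volumeAttributes": {"bucketName": bucket},
                            },
                        },
                        {
                            "name": "training-logs",
                            "persistentVolumeClaim": {"claimName": filestore_claim},
                        },
                    ],
                },
            },
        },
    }


job = nemo_training_job(
    name="nemo-pretrain-example",            # hypothetical
    image="nvcr.io/nvidia/nemo:TAG",         # TAG is a placeholder
    bucket="example-training-data",          # hypothetical bucket
    filestore_claim="example-filestore-pvc", # hypothetical claim
    queue="example-local-queue",             # hypothetical queue
)
print(json.dumps(job, indent=2))
```

A real deployment also needs a Kubernetes service account with access to the bucket, and multi-node training needs additional coordination between pods that this sketch leaves out.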

An end-to-end walkthrough is available in a GitHub repository. The walkthrough offers comprehensive instructions on how to set up the solution described above in a Google Cloud project and pre-train NVIDIA’s NeMo Megatron GPT using the NeMo framework.

Going further

Businesses with enormous volumes of structured data frequently use BigQuery as their primary data warehousing platform. There are methods for exporting that data into Cloud Storage to train the model. If the data is not available in the desired format, Dataflow can be used to read from BigQuery, transform the data, and write it back.
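One such export path is a BigQuery extract job. The sketch below wraps the `extract_table` method of the `google-cloud-bigquery` client in a small helper; the helper name, the table ID, the bucket, and the prefix are hypothetical, and the client is passed in so the function can be exercised without credentials. The default export format is assumed to be CSV.

```python
def export_table_to_gcs(client, table_id, bucket, prefix):
    """Export a BigQuery table to sharded files in Cloud Storage.

    `client` is expected to be a google.cloud.bigquery.Client. The wildcard
    in the destination lets BigQuery split large tables across many files.
    """
    destination_uri = f"gs://{bucket}/{prefix}/part-*.csv"
    extract_job = client.extract_table(table_id, destination_uri)
    extract_job.result()  # block until the export job finishes
    return destination_uri


# Example usage (requires the google-cloud-bigquery package and credentials):
#
#   from google.cloud import bigquery
#   export_table_to_gcs(
#       bigquery.Client(),
#       "example-project.example_dataset.example_table",  # hypothetical table
#       "example-training-data",                          # hypothetical bucket
#       "exports",
#   )
```

The exported files can then be read by the training job through the Cloud Storage bucket described in the architecture above.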

In summary

By using GKE, organizations can concentrate on creating and deploying their models to grow their business, without having to worry about the supporting infrastructure. NVIDIA NeMo is a great fit for building custom generative AI models. Together, they provide the scalability, dependability, and ease of use needed to train and serve models.