Apache Spark tutorial

BigQuery’s highly scalable and powerful SQL engine handles large data volumes with standard SQL and provides advanced features such as BigQuery ML, remote functions, and vector search. Occasionally, though, you may need to extend BigQuery data processing beyond SQL by drawing on open-source Apache Spark expertise or existing Spark-based business logic. For instance, you might want to use community packages for intricate JSON or graph data processing, or reuse legacy Spark code that was written before you migrated to BigQuery. In the past, this meant leaving BigQuery: you had to enable a different API, use a different user interface (UI), manage inconsistent permissions, and pay for non-BigQuery SKUs.

To address these issues, Google has created an integrated experience that extends BigQuery’s data processing capabilities to Apache Spark, and has announced the general availability (GA) of Apache Spark stored procedures in BigQuery. BigQuery users can now create and run Spark stored procedures using BigQuery APIs, extending their queries with Spark-based data processing. The feature brings Spark and BigQuery together into a single experience that covers management, security, and billing. Spark procedures can be written in PySpark, Scala, or Java.

DeNA, a provider of internet and artificial intelligence technologies and a BigQuery customer, commented: “BigQuery Spark stored procedures provide a seamless experience with unified API, governance, and billing across Spark and BigQuery. We can now easily leverage our community packages and Spark expertise for sophisticated data processing in BigQuery.”

PySpark

Create, test, and deploy PySpark code within BigQuery Studio

BigQuery Studio, a unified interface for all data practitioners, includes a Python editor for creating, testing, and deploying your PySpark code. Procedures can be configured with IN/OUT parameters, among other options. Once a Spark connection has been established, you can test the code iteratively within the UI. For debugging and troubleshooting, the BigQuery console displays log messages from the underlying Spark jobs in the same context. Spark experts can further fine-tune execution by passing Spark parameters to the procedure.
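As a rough illustration of how PySpark code inside a procedure might pick up an IN parameter, the sketch below reads it from an environment variable. It assumes that input parameters reach the Spark driver as JSON-encoded environment variables named `BIGQUERY_PROC_PARAM.<parameter_name>`, which should be checked against the current BigQuery documentation. The helper `read_in_param` and the parameter names `num` and `info` are hypothetical.

```python
import json
import os

# Assumed naming convention for procedure inputs exposed to the Spark driver.
PARAM_PREFIX = "BIGQUERY_PROC_PARAM."


def read_in_param(name, default=None, environ=None):
    """Return the decoded value of an IN parameter, or `default` if it is absent.

    Values are assumed to be JSON-encoded strings, so numbers, strings,
    arrays, and structs all decode through json.loads.
    """
    env = os.environ if environ is None else environ
    raw = env.get(PARAM_PREFIX + name)
    if raw is None:
        return default
    return json.loads(raw)


# Local dry run: a fake environment stands in for the one BigQuery would provide.
fake_env = {
    "BIGQUERY_PROC_PARAM.num": "42",
    "BIGQUERY_PROC_PARAM.info": '{"region": "us", "tags": ["a", "b"]}',
}
num = read_in_param("num", environ=fake_env)
info = read_in_param("info", environ=fake_env)
print(num, info["region"], info["tags"])
```

Passing the environment as an argument keeps the helper testable on a laptop before the code is pasted into the BigQuery Studio editor.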

PySpark SQL

After testing, the procedure is stored in a BigQuery dataset, where it can be accessed and managed in the same way as your SQL procedures.
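Because the procedure lives in a dataset like any SQL routine, it can also be defined with a `CREATE PROCEDURE` statement. The sketch below assembles such a statement as a string so its shape is easy to inspect. The project, dataset, procedure, and connection names are hypothetical, the runtime version is an assumption, and the helper `build_spark_procedure_ddl` is illustrative only; verify the statement against the BigQuery documentation before running it.

```python
import textwrap

# PySpark body of the procedure: a word count over a public BigQuery table.
PYSPARK_BODY = '''
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("wordcount-sketch").getOrCreate()

words = (
    spark.read.format("bigquery")
    .option("table", "bigquery-public-data:samples.shakespeare")
    .load()
)
totals = words.groupBy("word").sum("word_count")
totals.show(10)
'''


def build_spark_procedure_ddl(procedure, connection, pyspark_body, runtime_version="1.1"):
    """Assemble a CREATE PROCEDURE statement for a PySpark stored procedure."""
    body = textwrap.dedent(pyspark_body).strip()
    if '"""' in body:
        # The body is wrapped in a triple-quoted raw string, so it must not contain one.
        raise ValueError('PySpark body must not contain """')
    return (
        f"CREATE OR REPLACE PROCEDURE `{procedure}`()\n"
        f"WITH CONNECTION `{connection}`\n"
        f'OPTIONS (engine="SPARK", runtime_version="{runtime_version}")\n'
        'LANGUAGE PYTHON AS R"""\n'
        f"{body}\n"
        '"""'
    )


ddl = build_spark_procedure_ddl(
    procedure="my_project.my_dataset.wordcount",        # hypothetical routine name
    connection="my_project.us.my_spark_connection",     # hypothetical Spark connection
    pyspark_body=PYSPARK_BODY,
)
print(ddl)
```

Once created, a procedure like this would be invoked with a `CALL` statement, in the same way as a SQL procedure.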

Apache Spark examples

One of Apache Spark’s many advantages is its large selection of community and third-party packages. BigQuery Spark stored procedures can be configured to install the packages your code needs at execution time.

For more complex use cases, you can import your code from Google Cloud Storage buckets or use a custom container image from Container Registry or Artifact Registry. Advanced security and authentication options are also supported, such as customer-managed encryption keys (CMEK) and the use of an existing service account.

BigQuery billing combined with serverless execution

With this release, you can use Spark within the BigQuery APIs and see only BigQuery charges. Behind the scenes, this is made possible by Google’s Serverless Spark engine, which provides serverless, autoscaling Spark. When you use this feature, however, you do not have to enable Dataproc APIs or pay for Dataproc. The feature is available in all BigQuery editions, including the on-demand model. Regardless of the edition, your usage of Spark procedures is charged at the Enterprise edition (EE) pay-as-you-go (PAYG) SKU. See BigQuery pricing for further information.

What is Apache Spark?

Apache Spark is a multi-language engine for running data engineering, data science, and machine learning workloads on single-node machines or clusters.

Easy, Quick, Scalable, and Unified

Batch and streaming data

Combine batch and real-time streaming data processing with your choice of Python, SQL, Scala, Java, or R.

SQL analysis

Run fast, distributed ANSI SQL queries for dashboarding and ad hoc reporting. Spark runs faster than most data warehouses.

Large-scale data science

Perform exploratory data analysis (EDA) on petabyte-scale data without having to resort to downsampling.

Machine learning with Apache Spark

Train machine learning algorithms on a laptop, then use the same code to scale to fault-tolerant clusters of thousands of machines.

The most popular scalable computing engine

- Thousands of companies use Apache Spark, including 80% of the Fortune 500.

- The open source project has over 2,000 contributors from industry and academia.

Ecosystem

Apache Spark integrates with your preferred frameworks and helps scale them to thousands of machines.

Spark SQL engine: internal components

Apache Spark is built on an advanced distributed SQL engine for large-scale data.

Adaptive Query Execution

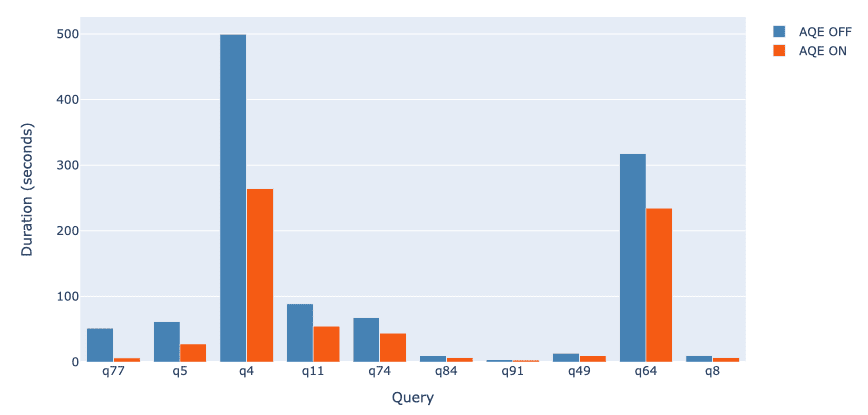

Spark SQL adapts the execution plan at runtime, for example by automatically setting the number of reducers and choosing join algorithms.
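The runtime behavior described here is controlled by Spark SQL configuration keys under `spark.sql.adaptive`. The sketch below collects a few of them and shows two ways they might be applied, assuming Spark 3.x and an installed PySpark for the session builder. The helper names `build_session` and `as_submit_args` are hypothetical, and recent Spark releases already enable adaptive execution by default, so setting the keys explicitly mainly serves as documentation.

```python
# Spark SQL configuration keys for adaptive execution (Spark 3.x).
AQE_SETTINGS = {
    # Master switch: re-optimize the plan using statistics gathered at runtime.
    "spark.sql.adaptive.enabled": "true",
    # Merge small shuffle partitions, which sets the number of reducers dynamically.
    "spark.sql.adaptive.coalescePartitions.enabled": "true",
    # Split skewed partitions in sort-merge joins.
    "spark.sql.adaptive.skewJoin.enabled": "true",
}


def build_session(app_name="aqe-sketch", settings=AQE_SETTINGS):
    """Create a SparkSession with the given settings (requires PySpark)."""
    # Imported lazily so the settings can be inspected without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in settings.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


def as_submit_args(settings=AQE_SETTINGS):
    """Render the same settings as spark-submit --conf arguments."""
    args = []
    for key, value in sorted(settings.items()):
        args += ["--conf", f"{key}={value}"]
    return args


print(" ".join(as_submit_args()))
```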

Support for ANSI SQL

Use the same SQL that you are already familiar with.

Structured and unstructured data

Spark SQL works with both structured tables and unstructured data such as JSON and images.
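To make the JSON case concrete, the sketch below writes a small newline-delimited JSON file and defines a function that would load it with Spark SQL, letting Spark infer the nested schema. The sample records, the file name, and the helpers `write_json_lines` and `load_events` are all hypothetical. The Spark part is left as a function so the file-writing step runs without PySpark installed.

```python
import json
import os
import tempfile

# Hypothetical semi-structured records: nested objects and an optional field.
SAMPLE_EVENTS = [
    {"user": {"id": 1, "name": "ana"}, "action": "click"},
    {"user": {"id": 2, "name": "bo"}, "action": "view", "tags": ["promo"]},
]


def write_json_lines(records, path):
    """Write one JSON object per line, the layout spark.read.json reads by default."""
    with open(path, "w", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record) + "\n")
    return path


def load_events(spark, path):
    """Read JSON with an inferred schema and pull out one nested field."""
    events = spark.read.json(path)
    # Dot notation reaches into the nested "user" struct.
    return events.select("user.id", "action")


sample_path = write_json_lines(
    SAMPLE_EVENTS, os.path.join(tempfile.mkdtemp(), "events.json")
)
print(sample_path)

# With PySpark installed, a session could then query the file:
#   from pyspark.sql import SparkSession
#   spark = SparkSession.builder.appName("json-sketch").getOrCreate()
#   load_events(spark, sample_path).show()
```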