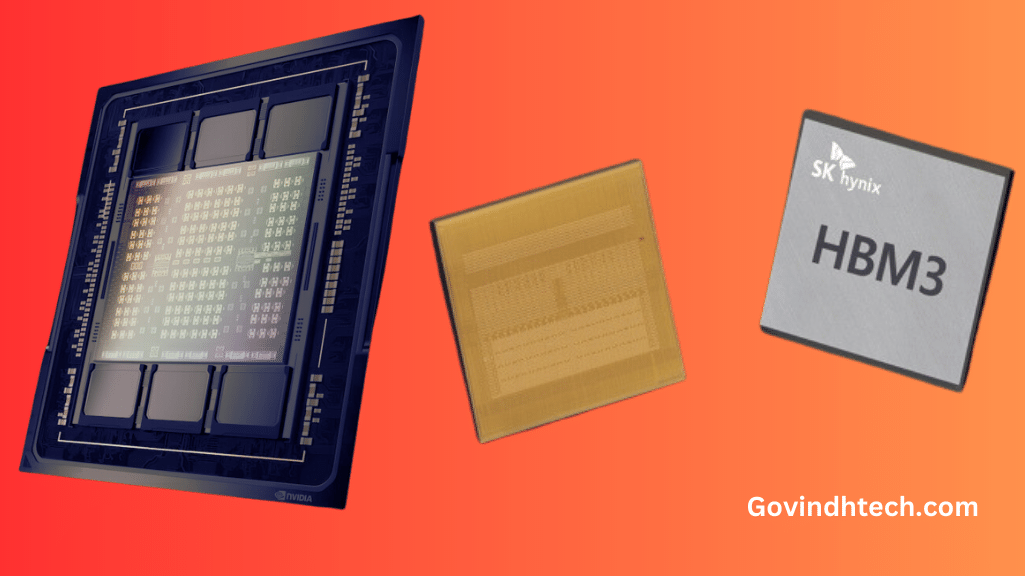

SK Hynix has announced its 5th-generation High-Bandwidth Memory (HBM) solution, HBM3E, which offers faster data transfer rates. HBM3E is expected to reach an 8.0 Gbps transfer rate and is set to sample in the second half of this year, with mass production starting in the first half of 2024. The new memory is seen as a suitable choice for future NVIDIA GPUs targeting the artificial intelligence (AI) and high-performance computing (HPC) segments.

NVIDIA has reportedly requested a sample of SK Hynix's HBM3E memory. The two companies previously partnered on the first HBM3 and HBM2E products. NVIDIA's current generation of GPUs, codenamed Hopper, already uses HBM3 memory with capacities of up to 80 GB, and there are rumors of a 120 GB variant launching later this year.

The current HBM3 stacks operate at effective per-pin data rates of up to 3.2 Gbps, which works out to roughly 2 TB/s on the 5120-bit wide bus interface used by Hopper. An 8.0 Gbps stack would offer up to 5.12 TB/s on the same bus, a 2.5x increase in memory bandwidth. SK Hynix's current HBM3 DRAM comes in stacks of up to 12-Hi, allowing for capacities of up to 24 GB per stack, and is rated for speeds of up to 6.4 Gbps.
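The bandwidth figures above follow from multiplying the per-pin data rate by the bus width and converting bits to bytes. The short Python sketch below reproduces that arithmetic; the function name `peak_bandwidth_tbps` is only illustrative, and the result is a theoretical peak in decimal units (1 TB/s = 1000 GB/s), not a measured figure.

```python
def peak_bandwidth_tbps(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in TB/s for a per-pin rate and bus width."""
    gigabits_per_second = pin_rate_gbps * bus_width_bits
    gigabytes_per_second = gigabits_per_second / 8  # 8 bits per byte
    return gigabytes_per_second / 1000  # decimal GB/s -> TB/s


# Bus width quoted in the article for Hopper.
BUS_WIDTH_BITS = 5120

hbm3 = peak_bandwidth_tbps(3.2, BUS_WIDTH_BITS)   # current HBM3 rate from the article
hbm3e = peak_bandwidth_tbps(8.0, BUS_WIDTH_BITS)  # expected HBM3E rate

print(f"HBM3  @ 3.2 Gbps: {hbm3:.3f} TB/s")
print(f"HBM3E @ 8.0 Gbps: {hbm3e:.3f} TB/s")
print(f"Increase: {hbm3e / hbm3:.2f}x")
```

Because the bus width is the same in both cases, the 2.5x ratio depends only on the two pin rates (8.0 / 3.2).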

While the full potential of HBM3 DRAM has not yet been realized, NVIDIA may choose to skip faster HBM3 altogether and opt for HBM3E in its upcoming GPUs, codenamed "Blackwell." SK Hynix is expected to secure a major partnership deal with NVIDIA, which holds a dominant position in the HPC and AI markets with over 90% market share. In case of any supply issues with HBM3, NVIDIA could also consider Samsung, which is preparing its own Snowbolt HBM3P memory offering up to 5 TB/s of bandwidth per stack.

Intel and AMD will compete with NVIDIA through their upcoming Falcon Shores and Instinct MI300X GPU offerings. However, these products will need to be tested and go through production before significant volumes are available. By that time, NVIDIA's next-generation GPUs will likely already be on the horizon.