Mistral NeMo 12B

Today, Mistral AI and NVIDIA unveiled Mistral NeMo 12B, a new state-of-the-art language model that developers can easily customise and deploy for enterprise applications supporting chatbots, multilingual tasks, coding, and summarisation.

The Mistral NeMo model delivers high performance across a variety of applications by combining Mistral AI’s expertise in training data with NVIDIA’s optimised hardware and software ecosystem.

“We are fortunate to collaborate with the NVIDIA team, leveraging their top-tier hardware and software,” said Guillaume Lample, cofounder and chief scientist of Mistral AI. “Together, we have developed a model with unprecedented levels of accuracy, flexibility, high efficiency, and enterprise-grade support and security, thanks to NVIDIA AI Enterprise deployment.”

Mistral NeMo was trained on the NVIDIA DGX Cloud AI platform, which provides dedicated, scalable access to the latest NVIDIA architecture.

NVIDIA TensorRT-LLM, which accelerates inference for large language models, and the NVIDIA NeMo development platform, which is used to build custom generative AI models, were also used to advance and optimise the process.

This partnership demonstrates NVIDIA’s dedication to bolstering the model-builder community.

Providing Unprecedented Precision, Adaptability, and Effectiveness

This enterprise-grade AI model performs accurately and reliably across a variety of tasks. It excels in multi-turn conversations, math, common-sense reasoning, world knowledge, and coding.

With a 128K context length, Mistral NeMo processes extensive and complex information more coherently and accurately, producing contextually relevant outputs.

Mistral NeMo is a 12-billion-parameter model released under the Apache 2.0 licence, which promotes innovation and supports the larger AI community. The model also uses the FP8 data format for inference, which reduces memory requirements and speeds up deployment without compromising accuracy.
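The memory saving from FP8 follows from simple arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter. The sketch below works that out for 12 billion parameters. It counts weights only (no KV cache, activations, or runtime overhead), and the function name and the FP32 and FP16 comparison rows are illustrative additions, not figures from the announcement.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 12e9  # 12 billion parameters, as stated in the article

# Bytes used to store one parameter in each numeric format.
BYTES_PER_PARAM = {"FP32": 4, "FP16/BF16": 2, "FP8": 1}

for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"{fmt}: {weight_memory_gb(NUM_PARAMS, nbytes):.0f} GB")
# FP32: 48 GB
# FP16/BF16: 24 GB
# FP8: 12 GB
```

On this rough estimate, FP8 halves the weight footprint relative to 16-bit formats.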

This means the model learns tasks better and handles diverse scenarios more effectively, making it well suited to enterprise use cases.

Mistral NeMo is packaged as an NVIDIA NIM inference microservice and provides performance-optimised inference with NVIDIA TensorRT-LLM engines.

This containerised format offers improved flexibility for a range of applications and facilitates deployment anywhere.

Models can now be deployed anywhere in minutes rather than several days.
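Once a NIM container is running, applications usually talk to it over HTTP. The sketch below builds (but does not send) a chat request, assuming the microservice exposes an OpenAI-compatible `/v1/chat/completions` route. The base URL, port, and model identifier are placeholders chosen for illustration; check them against the actual deployment.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, user_message: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical local deployment; both values below are assumptions.
request = build_chat_request(
    base_url="http://localhost:8000",
    model="mistral-nemo-12b-instruct",
    user_message="Summarise the key points of this contract in three bullets.",
)
print(request.get_method(), request.full_url)

# To actually send it once a server is listening:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Building the request separately from sending it keeps the example runnable without a live server.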

As a component of NVIDIA AI Enterprise, NIM offers enterprise-grade software with specialised feature branches, stringent validation procedures, enterprise-grade security, and enterprise-grade support.

It offers dependable and consistent performance and comes with full support, direct access to an NVIDIA AI expert, and specified service-level agreements.

Thanks to the open model licence, businesses can easily integrate Mistral NeMo into commercial applications.

The Mistral NeMo NIM is compact enough to fit in the memory of a single NVIDIA L40S, NVIDIA GeForce RTX 4090, or NVIDIA RTX 4500 GPU, providing high efficiency, low compute cost, and improved security and privacy.
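A rough way to see why a 12-billion-parameter model fits on one of these cards is to subtract the weight footprint from each GPU's memory. The memory figures below are assumptions taken from public spec sheets, not from the announcement (48 GB for the L40S, 24 GB for the GeForce RTX 4090, and 24 GB for the RTX 4500, assuming the Ada Generation card is meant). The estimate ignores KV cache, activations, and runtime overhead, so real headroom is smaller.

```python
# Assumed GPU memory sizes in GB (from public spec sheets, not the article).
GPU_MEMORY_GB = {
    "NVIDIA L40S": 48,
    "NVIDIA GeForce RTX 4090": 24,
    "NVIDIA RTX 4500 (Ada Generation, assumed)": 24,
}

NUM_PARAMS = 12e9  # 12 billion parameters, as stated in the article


def headroom_gb(gpu_memory_gb: float, num_params: float,
                bytes_per_param: float) -> float:
    """GPU memory left after loading the weights, in GB (weights only)."""
    weights_gb = num_params * bytes_per_param / 1e9
    return gpu_memory_gb - weights_gb


for name, memory in GPU_MEMORY_GB.items():
    fp8 = headroom_gb(memory, NUM_PARAMS, 1)   # 1 byte per parameter
    fp16 = headroom_gb(memory, NUM_PARAMS, 2)  # 2 bytes per parameter
    print(f"{name}: {fp8:.0f} GB free at FP8, {fp16:.0f} GB free at FP16")
```

Under these assumptions, 16-bit weights alone would consume an entire 24 GB card, while FP8 leaves about half of it for the context cache and runtime.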

Advanced Model Development and Customisation

The combined expertise of Mistral AI and NVIDIA engineers has optimised training and inference for Mistral NeMo.

Trained with Mistral AI’s expertise in multilingualism, coding, and multi-turn content generation, the model benefits from accelerated training on NVIDIA’s full stack.

It is designed for optimal performance, using efficient model parallelism techniques, scalability, and mixed precision with Megatron-LM.

The model was trained using Megatron-LM, part of NVIDIA NeMo, on 3,072 H100 80GB Tensor Core GPUs on DGX Cloud. DGX Cloud is built on NVIDIA AI architecture, which includes accelerated computing, network fabric, and software to increase training efficiency.

Availability and Deployment

With the flexibility to run anywhere, whether in the cloud, the data centre, or on an RTX workstation, Mistral NeMo is ready to transform AI applications across a range of platforms.

Mistral AI is thrilled to present Mistral NeMo, a 12B model built in collaboration with NVIDIA. Mistral NeMo offers a large context window of up to 128k tokens. Its reasoning, world knowledge, and coding accuracy are state of the art in its size category. Because Mistral NeMo relies on a standard architecture, it is easy to use and works as a drop-in replacement in any system that uses Mistral 7B.

To encourage adoption by researchers and businesses, Mistral AI has released pre-trained base and instruction-tuned checkpoints under the Apache 2.0 licence. Mistral NeMo was trained with quantisation awareness, which enables FP8 inference without any loss in performance.

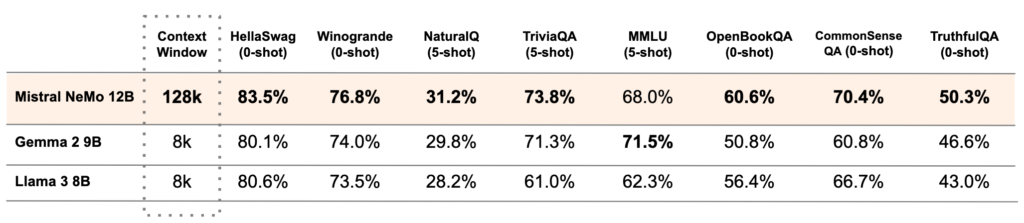

The accompanying table compares the accuracy of the Mistral NeMo base model with that of two recent open-source pre-trained models, Gemma 2 9B and Llama 3 8B.

Multilingual Model for the Masses

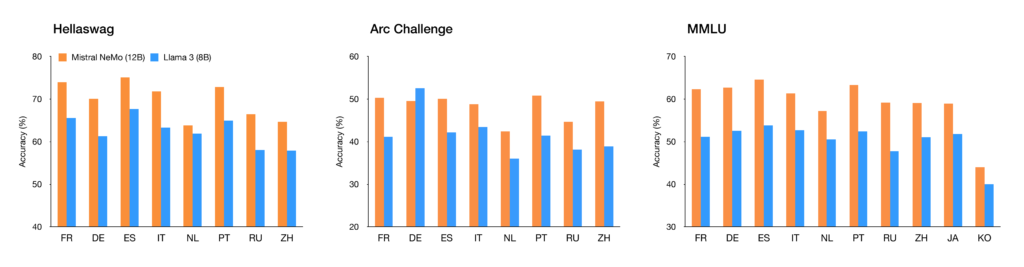

The model is designed for global, multilingual applications. It is particularly strong in English, French, German, Spanish, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi. It is trained on function calling and has a large context window. This is a new step towards making cutting-edge AI models available to everyone, in all the languages that make up human culture.
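Function calling means the model emits a structured request naming a tool and its arguments, and the application runs the tool and returns the result. The sketch below shows only the application side of that loop. The JSON shape, the tool name `get_weather`, and the hard-coded `model_output` string are all hypothetical; the real wire format depends on the serving stack and chat template in use.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would query a weather service."""
    return f"No live data in this sketch, but the request was for {city}."


# Registry mapping tool names the model may emit to Python callables.
TOOLS = {"get_weather": get_weather}

# JSON-Schema-style description that would be sent to the model with the prompt.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def dispatch_tool_call(raw_call: str) -> str:
    """Parse a tool call emitted by the model and run the matching function."""
    call = json.loads(raw_call)
    func = TOOLS[call["name"]]  # raises KeyError for unknown tools
    return func(**call["arguments"])


# Stand-in for what a function-calling model might return (assumed format).
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
print(dispatch_tool_call(model_output))
```

Looking tools up in an explicit registry ensures the model can only trigger functions the application has chosen to expose.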

Tekken, a more efficient tokenizer

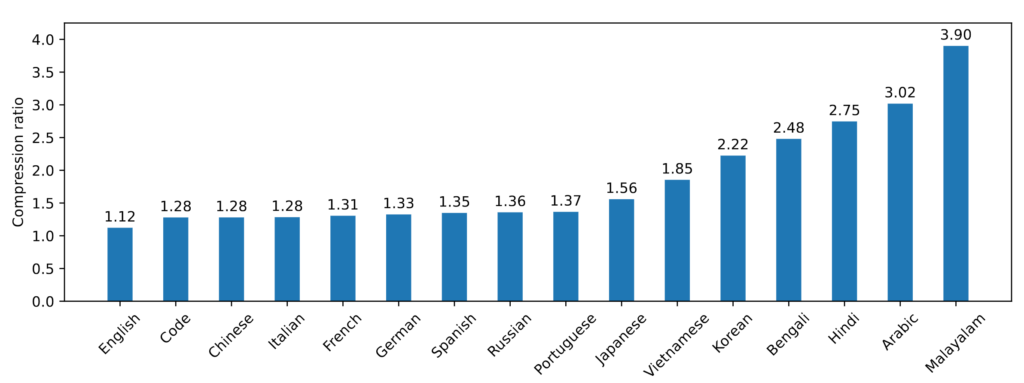

Mistral NeMo uses Tekken, a new tokenizer based on Tiktoken and trained on more than 100 languages. It compresses natural language text and source code more efficiently than the SentencePiece tokenizer used in earlier Mistral models. In particular, it is about 30% more efficient at compressing source code, Chinese, Italian, French, German, Spanish, and Russian. It is also about two and three times more efficient at compressing Korean and Arabic, respectively. Compared with the Llama 3 tokenizer, Tekken compressed text more efficiently for over 85% of all languages.
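Tokenizer compression is usually measured by how many tokens are needed to encode the same text: fewer tokens means more text fits in the context window. The sketch below shows one way to compute that comparison. The two toy tokenizers are stand-ins for illustration only and bear no relation to Tekken, SentencePiece, or the Llama 3 tokenizer; any callable that maps text to a list of tokens can be plugged in.

```python
from typing import Callable, List

Tokenizer = Callable[[str], List[str]]


def bytes_per_token(text: str, tokenize: Tokenizer) -> float:
    """Average number of UTF-8 bytes covered by each token (higher is better)."""
    return len(text.encode("utf-8")) / len(tokenize(text))


def relative_efficiency(text: str, baseline: Tokenizer,
                        candidate: Tokenizer) -> float:
    """How many times fewer tokens the candidate needs than the baseline."""
    return len(baseline(text)) / len(candidate(text))


# Toy stand-ins: one token per character versus one token per word.
def char_tokenizer(text: str) -> List[str]:
    return list(text)


def word_tokenizer(text: str) -> List[str]:
    return text.split()


sample = "def add(a, b): return a + b"
ratio = relative_efficiency(sample, char_tokenizer, word_tokenizer)
print(f"word-level needs {ratio:.2f}x fewer tokens than character-level")
print(f"bytes per token: {bytes_per_token(sample, word_tokenizer):.2f}")
```

On this measure, a tokenizer that is 30% more efficient scores about 1.3 against the baseline, and one that is three times more efficient scores about 3.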

Instruction fine-tuning

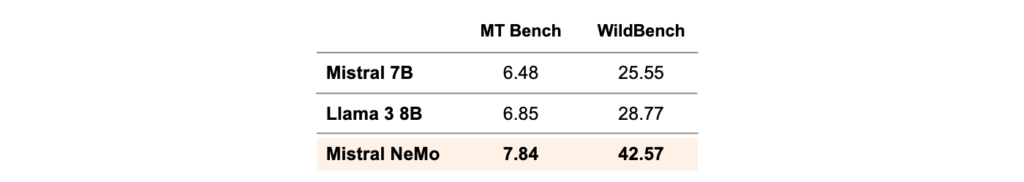

Mistral NeMo underwent an advanced fine-tuning and alignment phase. Compared with Mistral 7B, it is much better at following precise instructions, reasoning, handling multi-turn conversations, and generating code.