Today, Mistral is pleased to announce Mistral Large 2, the next generation of its flagship model. Compared with its predecessor, Mistral Large 2 is significantly more capable in code generation, mathematics, and reasoning. It also offers much stronger multilingual support and advanced function calling capabilities.

This latest generation continues to push the boundaries of performance, speed, and cost efficiency. Mistral Large 2 is available on la Plateforme and has been enriched with new features that make it easier to build innovative AI applications.

Large 2

Mistral Large 2 has a 128k context window and supports dozens of natural languages, including Arabic, Hindi, French, German, Spanish, Italian, Portuguese, and Chinese, along with more than 80 programming languages, including Python, Java, C, C++, JavaScript, and Bash.

With 123 billion parameters, Mistral Large 2 is designed for single-node inference with long-context applications in mind: its size allows it to run at high throughput on a single node. Mistral is releasing Mistral Large 2 under the Mistral Research License, which allows usage and modification for research and non-commercial purposes. Commercial use that requires self-deployment needs a Mistral Commercial License, which can be obtained by contacting Mistral.
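To see why 123 billion parameters fits on one node, a rough back-of-the-envelope estimate helps. The sketch below assumes 2 bytes per parameter for bf16 and 1 byte for fp8, and a hypothetical node of 8 accelerators with 80 GB each; none of these figures come from the announcement. It counts weights only, so the KV cache for long (up to 128k-token) contexts and activations would consume part of the remaining headroom.

```python
# Rough weight-memory estimate for a dense model: weights only, excluding
# KV cache and activations. Bytes-per-parameter values are assumed common
# precision choices, not figures from the announcement.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


PARAMS = 123e9      # parameter count stated for Mistral Large 2
NODE_GPUS = 8       # hypothetical node size
GPU_MEMORY_GB = 80  # hypothetical memory per accelerator

node_memory = NODE_GPUS * GPU_MEMORY_GB
for precision in ("bf16", "fp8"):
    need = weight_memory_gb(PARAMS, precision)
    # Remaining memory is what is left for KV cache, activations, batching.
    print(f"{precision}: {need:.0f} GB of weights, "
          f"{node_memory - need:.0f} GB left on a {node_memory} GB node")
```

Under these assumptions the bf16 weights take about 246 GB, leaving roughly 394 GB of a 640 GB node for the KV cache and batching, which is what makes long-context, high-throughput serving on a single node plausible.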

General performance

Mistral Large 2 sets a new frontier in terms of performance relative to serving cost on evaluation metrics. In particular, on MMLU, the pretrained version achieves an accuracy of 84.0% and sets a new point on the performance/cost Pareto front of open models.
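The idea of a performance/cost Pareto front can be made concrete with a small sketch. Assuming each model is summarized by one serving cost (lower is better) and one benchmark score (higher is better), a model lies on the front when no other model is at least as cheap and at least as accurate while being strictly better on one of the two. The model names and numbers below are hypothetical toy values, not real benchmark data.

```python
from typing import NamedTuple


class Point(NamedTuple):
    name: str
    cost: float   # serving cost, lower is better (arbitrary units)
    score: float  # benchmark accuracy, higher is better


def dominates(q: Point, p: Point) -> bool:
    """True if q is no worse than p on both axes and strictly better on one."""
    return (q.cost <= p.cost and q.score >= p.score
            and (q.cost < p.cost or q.score > p.score))


def pareto_front(points: list[Point]) -> list[Point]:
    """Return the non-dominated points, sorted by increasing cost."""
    front = [p for p in points if not any(dominates(q, p) for q in points)]
    return sorted(front, key=lambda p: p.cost)


# Hypothetical toy points for illustration only.
toy = [
    Point("model_a", cost=1.0, score=60.0),
    Point("model_b", cost=2.0, score=70.0),
    Point("model_c", cost=3.0, score=65.0),  # dominated by model_b
    Point("model_d", cost=4.0, score=80.0),
]
print([p.name for p in pareto_front(toy)])  # ['model_a', 'model_b', 'model_d']
```

"Setting a new point on the front" means a model that no existing open model dominates under this definition.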

Code and Reasoning

Building on its experience with Codestral 22B and Codestral Mamba, Mistral trained Mistral Large 2 on a very large proportion of code. Mistral Large 2 significantly outperforms the previous Mistral Large and performs on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B.

Considerable effort also went into enhancing the model's reasoning capabilities. One of the key goals during training was to minimize the model's tendency to "hallucinate", that is, to generate plausible-sounding but factually incorrect or irrelevant information. This was achieved by fine-tuning the model to be more cautious and discerning in its responses, making its outputs more reliable and accurate.

The new Mistral Large 2 is also trained to acknowledge when it cannot find a solution or lacks sufficient information to give a confident answer. This commitment to accuracy is reflected in improved performance on popular mathematical benchmarks, demonstrating its enhanced reasoning and problem-solving skills.

Instruction Following & Alignment

Mistral Large 2's instruction-following and conversational capabilities have been significantly improved. The new Mistral Large 2 is particularly good at following precise instructions and handling long multi-turn conversations.

On various benchmarks, generating longer responses tends to improve scores. In many business applications, however, conciseness is paramount, since shorter model generations make for faster interactions and more cost-effective inference. This is why Mistral put significant effort into ensuring that generations remain succinct and to the point whenever possible.

Language Diversity

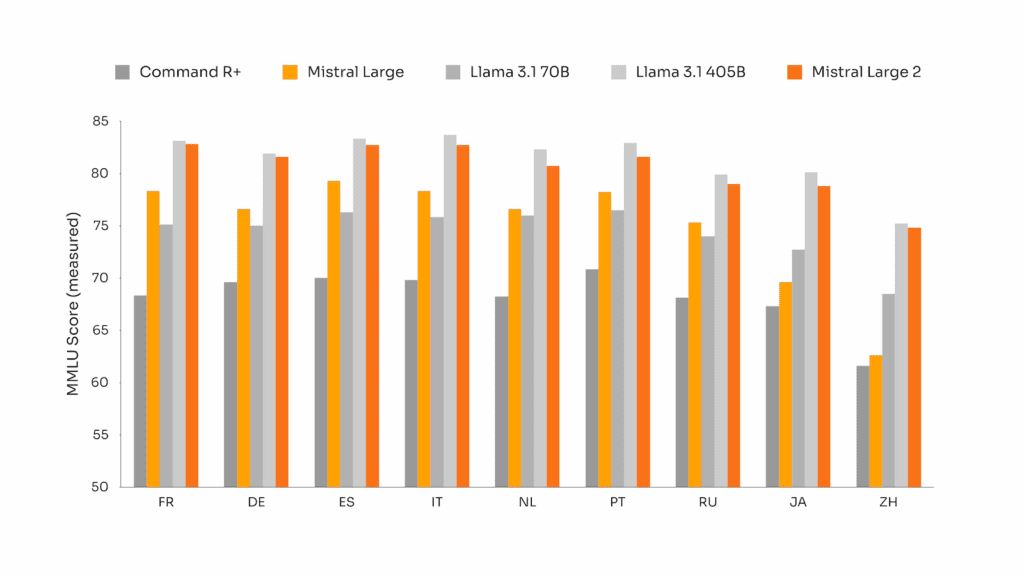

A large share of today's business use cases involves working with multilingual documents. While most models are English-centric, the new Mistral Large 2 was trained on a large proportion of multilingual data. It performs particularly well in English, French, German, Spanish, Italian, Portuguese, Dutch, Russian, Chinese, Japanese, Korean, Arabic, and Hindi. The figure above shows the performance of Mistral Large 2 on the multilingual MMLU benchmark, compared with the previous Mistral Large, the Llama 3.1 models, and Cohere's Command R+.

Use of Tools and Function Calling

Mistral Large 2 has enhanced function calling and retrieval skills and has been trained to proficiently execute both parallel and sequential function calls, enabling it to serve as the engine for complex business applications.
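The difference between parallel and sequential function calls shows up on the application side. In a parallel call, the model requests several independent tools in a single turn and the client can run them concurrently; in a sequential call, a later request depends on an earlier result, so the client returns that result before the model issues its next call. The sketch below handles the parallel case. The tool-call dictionary shape (an `id` plus a `function` with a `name` and JSON-string `arguments`) is an assumption modeled on common chat-completion formats, and `get_weather` and `get_time` are hypothetical local tools; check the actual response schema of the API you use.

```python
import json
from concurrent.futures import ThreadPoolExecutor


# Hypothetical local tools the model is allowed to call.
def get_weather(city: str) -> str:
    return f"sunny in {city}"


def get_time(city: str) -> str:
    return f"12:00 in {city}"


TOOLS = {"get_weather": get_weather, "get_time": get_time}


def run_tool_call(call: dict) -> dict:
    """Execute one tool call and build a tool-result message (assumed shape)."""
    fn = TOOLS[call["function"]["name"]]
    args = json.loads(call["function"]["arguments"])  # arguments as a JSON string
    return {"role": "tool", "tool_call_id": call["id"], "content": fn(**args)}


def run_parallel(calls: list[dict]) -> list[dict]:
    """Run independent calls concurrently; pool.map keeps results in input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(run_tool_call, calls))


# A single model turn requesting two independent calls (hypothetical payload).
calls = [
    {"id": "call_1", "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "function": {"name": "get_time", "arguments": '{"city": "Paris"}'}},
]
for msg in run_parallel(calls):
    print(msg["tool_call_id"], "->", msg["content"])
```

For sequential calls, the same `run_tool_call` would be invoked once per turn, with each result appended to the conversation before asking the model for its next step.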

Try Mistral Large 2 on la Plateforme

Starting today, you can try Mistral Large 2 on le Chat and use it through la Plateforme under the name mistral-large-2407. Mistral uses a YY.MM versioning scheme for all of its models, so this release is version 24.07. The weights for the instruct model are available and hosted on Hugging Face.
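The YY.MM scheme means the API name carries the release date as a four-digit suffix. As a minimal sketch, assuming every versioned API name ends in `-YYMM` as mistral-large-2407 does, a hypothetical helper `parse_model_version` can recover the model family and the dotted version:

```python
import re


def parse_model_version(api_name: str) -> tuple[str, str]:
    """Split an API name like 'mistral-large-2407' into (family, 'YY.MM').

    Assumes a trailing '-YYMM' suffix; raises ValueError otherwise.
    """
    match = re.fullmatch(r"(.+)-(\d{2})(\d{2})", api_name)
    if match is None:
        raise ValueError(f"no YYMM suffix in {api_name!r}")
    family, yy, mm = match.groups()
    if not 1 <= int(mm) <= 12:
        raise ValueError(f"invalid month {mm!r} in {api_name!r}")
    return family, f"{yy}.{mm}"


print(parse_model_version("mistral-large-2407"))  # ('mistral-large', '24.07')
```

Pinning a dated name such as mistral-large-2407 in application code, rather than a moving alias, keeps behavior stable when a newer version is released.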

Mistral is consolidating its offering on la Plateforme around two general-purpose models, Mistral Nemo and Mistral Large, and two specialist models, Codestral and Embed. As older models are progressively deprecated on la Plateforme, all Apache models (Mistral 7B, Mixtral 8x7B and 8x22B, Codestral Mamba, Mathstral) remain available for deployment and fine-tuning using Mistral's SDKs, mistral-inference and mistral-finetune.

Starting today, Mistral is extending fine-tuning capabilities on la Plateforme to Mistral Large, Mistral Nemo, and Codestral.

Access Mistral models through cloud service providers

Mistral is excited to partner with leading cloud service providers to bring the new Mistral Large 2 to a global audience. In particular, Mistral is expanding its partnership with Google Cloud Platform today to make Mistral AI's models available on Vertex AI through a Managed API. Mistral AI's best models are now available on Vertex AI, Azure AI Studio, Amazon Bedrock, and IBM watsonx.ai.

Timeline for Mistral AI models’ availability