Microsoft Phi-3.5-mini-instruct

Azure offers broad model choice and an extensive toolchain to meet the unique, complex, and varied needs of modern enterprises as developers build and deploy AI applications at scale. This combination of models and tooling lets developers build highly customized solutions grounded in their organization’s data. Azure is announcing enhancements that give developers more choice and flexibility when building AI solutions with the Azure AI toolchain:

- The Phi model family now includes a Mixture of Experts (MoE) model and supports 20+ languages.

- AI21 Jamba 1.5 Large and Jamba 1.5 are available on Azure AI models as a service.

- Integrated Azure AI Search vectorization to optimize the retrieval augmented generation (RAG) workflow with data prep and embedding.

- Custom generative extraction models in Azure AI Document Intelligence allow accurate extraction of custom fields from unstructured documents.

- Text to Speech (TTS) Avatar, a feature of Azure AI Speech service, brings natural-sounding voices and photorealistic avatars to life across languages and voices, improving customer engagement and experience.

- General availability of the VS Code extension for Azure Machine Learning.

- Conversational PII Detection Service in Azure AI Language is now available.

Phi-3.5 SLMs

Phi model family with additional languages and higher throughput

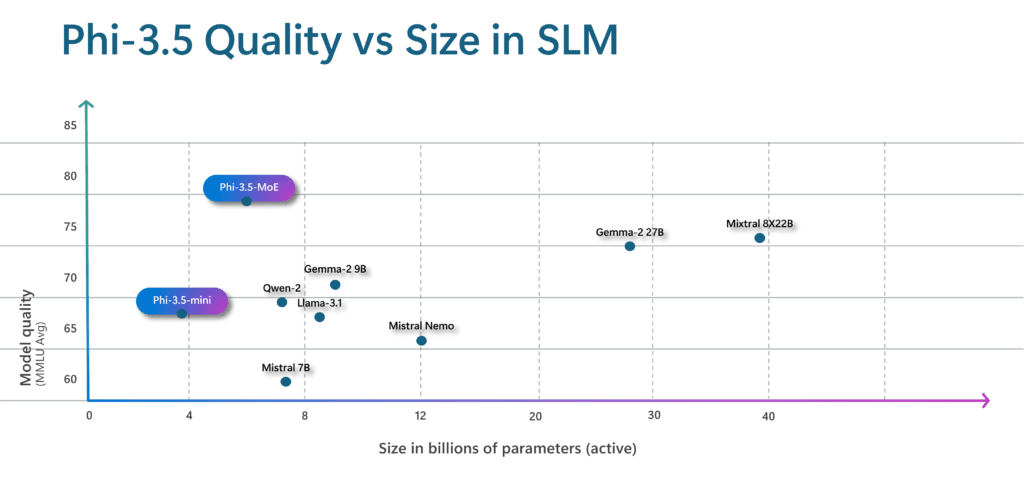

Phi-3.5-MoE

Phi-3.5-MoE, a Mixture of Experts model, is the newest addition to the Phi family. It combines 16 smaller experts into one model, which improves model quality and lowers latency. The MoE model has 42B parameters in total but uses only 6.6B active parameters, because subsets of parameters (experts) specialize during training and only the relevant experts are used at runtime. This gives customers the speed and computational efficiency of a small model with the domain knowledge and higher-quality output of a larger one. Learn how Azure improved Azure AI translation performance and quality with a Mixture of Experts architecture.
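
The routing idea described above can be illustrated with a minimal sketch. It is not the Phi-3.5-MoE implementation: the top-2 selection, the random router scores, and the toy experts are all assumptions made for illustration. Only the expert count (16) and the parameter figures (42B total, 6.6B active) come from the text.

```python
import math
import random

NUM_EXPERTS = 16   # from the text: the model combines 16 experts
TOP_K = 2          # assumption: number of experts activated per token

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(router_scores, k=TOP_K):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_layer(x, experts, router_scores):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route(router_scores))

# Toy experts (hypothetical): each one scales its input by a different factor.
experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]

random.seed(0)
scores = [random.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # stand-in router output
selected = route(scores)
output = moe_layer(1.0, experts, scores)

# Share of parameters active per token, using the figures quoted in the text.
active_fraction = 6.6 / 42
print(selected, output, f"{active_fraction:.1%}")
```

Only the selected experts run for a given token, which is why the compute cost tracks the active parameter count (roughly 16% of the total here) and not the full model size.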

Phi-3.5-mini

Azure is also announcing a new mini model, Phi-3.5-mini. Both the new MoE model and the mini model are multilingual and support over 20 languages, so users can interact with the model in the language they are most comfortable with.

Even with the added languages, Phi-3.5-mini has just 3.8B parameters.

Phi models are used by conversational intelligence company CallMiner for their speed, accuracy, and security.

Guidance is being added to the Phi-3.5-mini serverless endpoint to make outputs more predictable and better structured for applications. Guidance is a proven open-source Python library (with 18K+ GitHub stars) that lets developers express, in a single API call, the programmatic constraints the model must follow to produce structured output in JSON, Python, HTML, SQL, or whatever the use case requires. With Guidance, you can avoid expensive retries by constraining the model to select from pre-defined lists (e.g., medical codes), restricting outputs to direct quotes from the provided context, or requiring output to match any regex. Guidance steers the model token by token in the inference stack, improving output quality and reducing cost and latency by 30-50% in highly structured scenarios.
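
To make the token-by-token steering concrete, here is a toy sketch of constrained decoding over a pre-defined list. It does not use the Guidance API: `allowed_next`, `constrained_decode`, and `toy_score` are hypothetical names, characters stand in for tokens, and the scoring function is a stand-in for a real model. The option list uses example ICD-10-style codes purely for illustration.

```python
EOS = "\n"  # end-of-sequence marker used by this sketch

def allowed_next(prefix, options):
    """Characters (or EOS) that keep `prefix` consistent with at least one option."""
    nxt = set()
    for option in options:
        if option.startswith(prefix):
            nxt.add(option[len(prefix)] if len(option) > len(prefix) else EOS)
    return nxt

def constrained_decode(score_fn, options):
    """Greedy decoding where disallowed characters are masked out at every step."""
    out = ""
    while True:
        candidates = allowed_next(out, options)
        best = max(sorted(candidates), key=lambda tok: score_fn(out, tok))
        if best == EOS:
            return out
        out += best

def toy_score(prefix, tok, preferred="J45 asthma"):
    """Stand-in model: prefers to write free text that is NOT in the list."""
    if len(prefix) < len(preferred) and tok == preferred[len(prefix)]:
        return 1.0
    return 0.0

codes = ["E11.9", "I10", "J45.909"]
result = constrained_decode(toy_score, codes)
print(result)  # J45.909
```

Because invalid continuations are never sampled, the output is guaranteed to be one of the listed options on the first attempt, which is where the savings from avoided retries come from.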

Phi vision model

Phi-3.5-vision

The Phi vision model is being updated with multi-frame support. Phi-3.5-vision (4.2B parameters) can reason over multiple input images, enabling new scenarios such as image comparison.

Microsoft supports safe and responsible AI development with a strong range of tools and capabilities as part of Azure’s product strategy.

Developers using Phi models can use Azure AI evaluations to assess quality and safety with built-in and custom metrics, which in turn informs mitigations. Prompt shields and protected material detection are included in Azure AI Content Safety. These capabilities can be applied to models like Phi as content filters or integrated into applications through a single API. Once in production, developers can monitor application quality, safety, and data integrity, and use real-time alerts to intervene quickly.

AI21 Jamba

AI21 Jamba 1.5 Large and Jamba 1.5 are available on Azure AI models as a service

Azure is adding two new open models, Jamba 1.5 Large and Jamba 1.5, to the Azure AI model catalog to give developers the widest selection of models. The Jamba architecture combines Mamba and Transformer layers, which makes long-context processing efficient.

According to AI21, Jamba 1.5 Large and Jamba 1.5 are the most advanced models in the Jamba series. The hybrid Mamba-Transformer architecture balances speed, memory, and quality by using Mamba layers for short-range dependencies and Transformer layers for long-range dependencies. As a result, this family of models excels at handling long contexts in industries such as financial services, healthcare, life sciences, retail, and CPG.

RAG simplification for generative AI

Azure is integrating data preparation and embedding to streamline RAG workflows. Generative AI applications use RAG to incorporate private organizational data without retraining the model. RAG uses vector and hybrid retrieval to return relevant, data-grounded information for each query. Vector search, however, requires extensive data preparation: to make data usable in your copilot, your app must ingest, parse, enrich, embed, and index it from many sources.
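
The preparation steps listed above can be sketched end to end in a few lines. This is a toy illustration under stated assumptions, not Azure AI Search: `chunk`, `embed`, `build_index`, and `retrieve` are hypothetical helpers, the "embedding" is a simple word-count vector standing in for a real embedding model, and the sample documents are invented.

```python
import math
import re
from collections import Counter

def chunk(text, max_words=12):
    """Split a document into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text):
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def build_index(documents):
    """Ingest, chunk, and embed every document into (text, vector) pairs."""
    return [(c, embed(c)) for doc in documents for c in chunk(doc)]

def retrieve(index, query, k=1):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

documents = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available by phone on weekdays from 9 to 5.",
]
index = build_index(documents)
context = retrieve(index, "How long do refunds take?")
prompt = f"Answer using this context: {context[0]}"
print(prompt)
```

Each of these stages is code the application team would otherwise have to build, schedule, and maintain for every data source.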

Integrated vectorization in Azure AI Search is now available. It streamlines and automates these operations: by integrating embedding models for automatic vector indexing and querying, it lets your application make full use of your data.

Integrated vectorization boosts developer productivity and lets organizations offer turnkey RAG systems for new projects, so teams can quickly build an application specific to their datasets and needs without designing a custom deployment each time.

SGS & Co, a global brand impact organization, uses integrated vectorization to streamline its workflows.

Custom field extraction in Document Intelligence

You can now build and train a custom generative model in Document Intelligence to extract custom fields from unstructured documents with high accuracy. This new feature uses generative AI to extract user-specified fields from documents across a wide range of visual templates and document types. You can get started with as few as five training documents. While you build the custom generative model, automatic labeling saves the time and effort of manual annotation, results are grounded where applicable, and confidence scores let you quickly filter high-quality extracted data for downstream processing and reduce manual review time.
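
As an illustration of how confidence scores can drive downstream processing, the sketch below splits extracted fields into auto-accepted values and values routed to manual review. The field names, the dictionary shape, and the 0.80 threshold are all hypothetical; they are not the Document Intelligence response format.

```python
REVIEW_THRESHOLD = 0.80  # hypothetical cut-off; tune per field and use case

def split_by_confidence(fields, threshold=REVIEW_THRESHOLD):
    """Separate fields that can be used directly from those needing review."""
    accepted, needs_review = {}, {}
    for name, field in fields.items():
        target = accepted if field["confidence"] >= threshold else needs_review
        target[name] = field["value"]
    return accepted, needs_review

# Invented sample output for a single document.
extracted = {
    "invoice_number": {"value": "INV-1001", "confidence": 0.97},
    "total_due": {"value": "1,250.00", "confidence": 0.91},
    "payment_terms": {"value": "Net 30", "confidence": 0.62},
}

accepted, needs_review = split_by_confidence(extracted)
print(accepted)
print(needs_review)
```

Only the low-confidence field reaches a human reviewer, which is how confidence scores reduce manual review time.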

Create engaging experiences with prebuilt and custom avatars

TTS Avatar

Azure is pleased to announce that Text to Speech (TTS) Avatar, a capability of the Azure AI Speech service, is now generally available. It improves customer engagement and experience by bringing natural-sounding voices and photorealistic avatars to life in multiple languages. With TTS Avatar, developers can improve efficiency, create personalized and engaging experiences for customers and employees, and innovate.

The TTS Avatar service offers developers pre-built avatars with a selection of natural-sounding voices and Azure Custom Neural Voice to generate custom synthetic voices. Company branding can be added to photorealistic avatars. Fujifilm uses TTS Avatar with NURA, the first AI-powered health screening facility.

Responsible use and development of AI remain Azure’s top priorities as this technology launches. Custom Text to Speech Avatar is a limited access service with built-in safeguards. For example, the system adds invisible watermarks to avatar outputs. These watermarks allow approved users to check whether a video was generated using Azure AI Speech’s avatar feature.

Azure also provides guidance on responsible use of TTS Avatar, including how to increase transparency in user interactions, identify and mitigate biased or harmful synthetic content, and integrate with Azure AI Content Safety. In this transparency note, Azure outlines TTS Avatar’s technology, capabilities, approved use cases, considerations for choosing use cases, limitations, fairness considerations, and best practices for system performance. Developers and content creators using prebuilt and custom TTS Avatar features must apply for access and follow Azure’s code of conduct.

Use Azure Machine Learning with VS Code

VS Code

Azure is excited to announce the general availability of the VS Code extension for Azure Machine Learning. With the extension, you can build, train, deploy, debug, and manage machine learning models in Azure Machine Learning from your favorite VS Code setup, on the desktop or on the web. VNET support, IntelliSense, and Azure Machine Learning CLI integration make the extension production-ready. Learn more about the extension on this Tech Community blog.

Protect user privacy

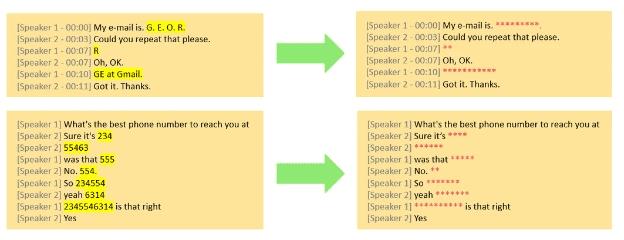

Azure AI Language

Today, Azure is announcing the general availability of the Conversational PII Detection Service in Azure AI Language, which improves Azure AI’s ability to identify and redact sensitive information in English-language conversations. The service improves data privacy and security for developers building enterprise generative AI apps. The Conversational PII redaction service builds on the Text PII service, which identifies, categorizes, and redacts sensitive information such as phone numbers and email addresses in unstructured text. The Conversational PII model is specialized for conversational inputs such as transcripts of meetings and phone calls.
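
The identify-and-redact step can be pictured with a deliberately simple sketch. This is not the Azure AI Language service or its API: it is a regex-based toy that covers only the two categories named above (phone numbers and email addresses), with hypothetical patterns and an invented transcript. A trained conversational model handles far more categories and the disfluencies of spoken language.

```python
import re

# Hypothetical, simplified patterns; real PII detection is model-based.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace detected email addresses and phone numbers with category tags."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

# Invented two-turn call transcript.
transcript = [
    ("agent", "Can I get a number to reach you?"),
    ("customer", "Sure, it's 555-010-4477, or email jo@example.com."),
]

redacted = [(speaker, redact(line)) for speaker, line in transcript]
for speaker, line in redacted:
    print(f"{speaker}: {line}")
```

Tagging each redaction with its category, instead of deleting the text, keeps the transcript readable for downstream summarization or analytics.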

Manage Azure OpenAI Service PTUs yourself

Azure recently announced enhancements to Azure OpenAI Service, including the ability to manage quota deployments without relying on account team support, so you can request Provisioned Throughput Units (PTUs) more quickly. Azure also released OpenAI’s latest model on 8/7, which introduced Structured Outputs, such as JSON Schemas, for the GPT-4o and GPT-4o mini models. Structured outputs are particularly useful for developers who need to validate and format AI outputs into structures like JSON Schemas.
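
A short sketch shows what working with a JSON Schema looks like on the application side. The ticket schema and the sample model output are hypothetical. The `response_format` fragment reflects the commonly documented shape for Structured Outputs requests and should be verified against the current Azure OpenAI documentation; no request is sent here. The `conforms` helper is a minimal hand-written check covering only the schema features used in this example, not a full JSON Schema validator.

```python
import json

# Hypothetical schema for a support-ticket summary.
ticket_schema = {
    "type": "object",
    "properties": {
        "category": {"type": "string"},
        "priority": {"type": "integer"},
        "summary": {"type": "string"},
    },
    "required": ["category", "priority", "summary"],
    "additionalProperties": False,
}

# Request fragment (assumed shape; check the service documentation before use).
response_format = {
    "type": "json_schema",
    "json_schema": {"name": "ticket", "strict": True, "schema": ticket_schema},
}

PY_TYPES = {"string": str, "integer": int}

def conforms(payload, schema):
    """Minimal check: required keys, no extra keys, and matching primitive types."""
    if not isinstance(payload, dict):
        return False
    props = schema["properties"]
    if any(key not in payload for key in schema["required"]):
        return False
    if not schema.get("additionalProperties", True) and set(payload) - set(props):
        return False
    return all(isinstance(payload[k], PY_TYPES[props[k]["type"]]) for k in payload if k in props)

# Invented model output for illustration.
model_output = '{"category": "billing", "priority": 2, "summary": "Duplicate charge"}'
is_valid = conforms(json.loads(model_output), ticket_schema)
print(is_valid)  # True
```

When the model is constrained to the schema at generation time, a check like this becomes a safety net and no longer the main line of defense against malformed output.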