PaliGemma

Google believes that collaboration and open research drive innovation, so it is pleased that Gemma has received millions of downloads in the short time since its release.

This enthusiastic response has been highly motivating, as developers have built a wide range of projects. From Octopus v2, an on-device action model, to Navarasa, a multilingual variant for Indic languages, developers are demonstrating Gemma’s potential to produce useful and accessible AI solutions.

The same spirit of exploration and invention has driven Google’s development of CodeGemma, with its powerful code completion and generation capabilities, and RecurrentGemma, which offers efficient inference and new research opportunities.

The Gemma family of open models consists of lightweight, state-of-the-art models built from the same research and technology as the Gemini models. Google is now sharing more about its plans to grow the Gemma family, with the release of PaliGemma, a powerful open vision-language model (VLM), and the announcement of Gemma 2. It is also reinforcing its commitment to responsible AI by releasing updates to its Responsible Generative AI Toolkit, which give developers new and improved resources for evaluating model safety and filtering out harmful content.

Introducing PaliGemma: An Open Vision-Language Model

PaliGemma is a powerful open VLM inspired by PaLI-3. It is built on open components, namely the SigLIP vision model and the Gemma language model, and is designed for class-leading fine-tuning performance on a wide range of vision-language tasks. These include image and short-video captioning, visual question answering, reading text in images, object detection, and object segmentation.

Google provides both pretrained and fine-tuned checkpoints at multiple resolutions, along with checkpoints tuned for a variety of tasks so that you can start exploring immediately.

PaliGemma is available through a number of platforms and tools to encourage open exploration and research. You can start exploring today with free options such as Kaggle and Colab notebooks. Academic researchers who want to advance vision-language research can also apply for Google Cloud credits to support their work.

Get started with PaliGemma today. It is available on GitHub, Hugging Face models, Kaggle, Vertex AI Model Garden, and ai.nvidia.com (accelerated with TensorRT-LLM), and it integrates easily through JAX and Hugging Face Transformers. (Keras integration is coming soon.) You can also interact with the model through a Hugging Face Space.
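As an illustration of the Hugging Face Transformers route, here is a minimal sketch rather than an official recipe. It assumes a recent `transformers` release that includes `PaliGemmaForConditionalGeneration`, plus `torch` and `Pillow`. The checkpoint name `google/paligemma-3b-mix-224` and the task prefixes in `TASK_PREFIXES` are assumptions based on the public model card and should be checked against the checkpoint you actually use. The helper names `build_prompt` and `run_paligemma` are hypothetical.

```python
from typing import Optional

# Assumed task prefixes (from the public model card as recalled; verify them
# for your checkpoint). They correspond to the tasks listed earlier:
# captioning, text reading, visual question answering, detection, segmentation.
TASK_PREFIXES = {
    "caption": "caption en",
    "ocr": "ocr",
    "vqa": "answer en {arg}",
    "detect": "detect {arg}",
    "segment": "segment {arg}",
}


def build_prompt(task: str, arg: Optional[str] = None) -> str:
    """Build the text prompt for a task; some tasks need an argument."""
    template = TASK_PREFIXES[task]
    if "{arg}" in template:
        if not arg:
            raise ValueError(f"task '{task}' needs an argument")
        return template.format(arg=arg)
    return template


def run_paligemma(image_path: str, task: str, arg: Optional[str] = None,
                  model_id: str = "google/paligemma-3b-mix-224") -> str:
    """Run one prompt against one image and return the decoded text."""
    # Heavy imports stay local so build_prompt works without them installed.
    import torch
    from PIL import Image
    from transformers import AutoProcessor, PaliGemmaForConditionalGeneration

    processor = AutoProcessor.from_pretrained(model_id)
    model = PaliGemmaForConditionalGeneration.from_pretrained(model_id).eval()

    image = Image.open(image_path).convert("RGB")
    inputs = processor(text=build_prompt(task, arg), images=image,
                       return_tensors="pt")
    prompt_len = inputs["input_ids"].shape[-1]

    with torch.no_grad():
        output = model.generate(**inputs, max_new_tokens=50, do_sample=False)

    # Drop the prompt tokens and decode only the newly generated ones.
    return processor.decode(output[0][prompt_len:], skip_special_tokens=True)
```

A call such as `run_paligemma("photo.jpg", "vqa", "how many dogs are there?")` would download the weights on first use. Access to the checkpoint may require accepting the model licence on Hugging Face first.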

Announcing Gemma 2: Next-Generation Performance and Efficiency

Google has announced that Gemma 2, the next generation of Gemma models, will be available soon. Gemma 2 features a new architecture designed for breakthrough performance and efficiency, and will be offered in new sizes to suit a wide range of AI developer use cases.

Benefits include:

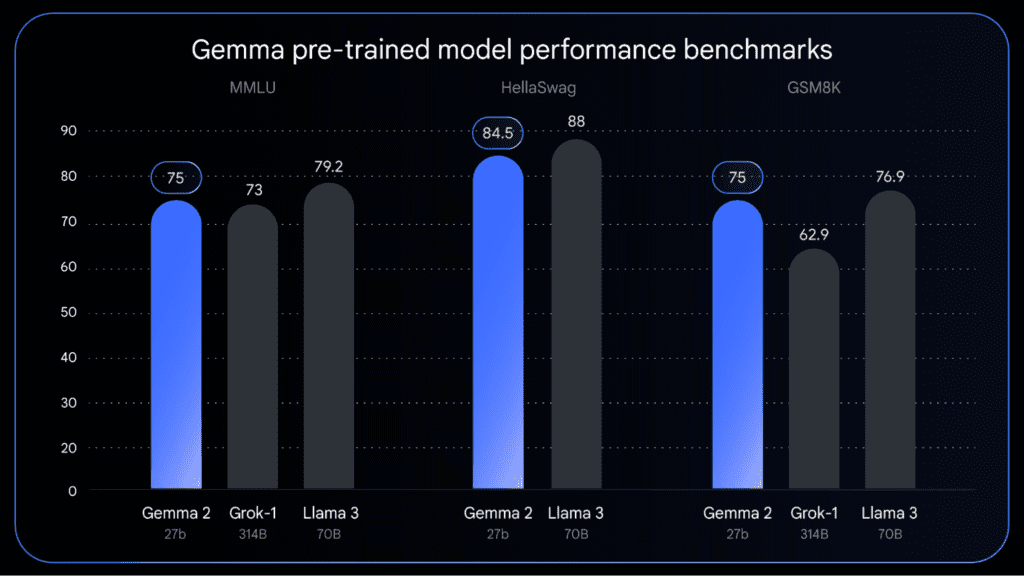

- Class-Leading Performance: At 27 billion parameters, Gemma 2 delivers performance comparable to Llama 3 70B at less than half the size. This efficiency sets a new standard for open models.

- Lower Deployment Costs: Thanks to its efficient design, Gemma 2 fits on less than half the compute of comparable models. The 27B model is optimised to run on NVIDIA GPUs and can also run efficiently on a single TPU host in Vertex AI, making deployment more affordable and accessible for a wider range of users.

- Flexible Toolchains for Tuning: Gemma 2 will give developers robust tuning tools across a wide range of platforms and resources. Fine-tuning Gemma 2 will be easier than ever, from cloud-based solutions like Google Cloud to community tools like Axolotl. In addition, seamless partner integrations with Hugging Face and NVIDIA TensorRT-LLM, together with Google’s own JAX and Keras, let you maximise performance and deploy efficiently across a range of hardware configurations.
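The size claim in the list above can be checked with a quick back-of-the-envelope calculation. The sketch below compares the two parameter counts and estimates the memory needed for weights alone. The figure of 2 bytes per parameter (bfloat16 weights) is an assumption, not something stated in the announcement, and the estimate ignores activations, the KV cache, and any quantisation.

```python
GEMMA2_PARAMS = 27e9   # 27 billion parameters, as stated in the announcement
LLAMA3_PARAMS = 70e9   # Llama 3 70B


def size_ratio(small: float, large: float) -> float:
    """Fraction of the larger model's parameter count used by the smaller."""
    return small / large


def weight_memory_gb(params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate weight memory in decimal gigabytes.

    bytes_per_param defaults to 2.0, which assumes bfloat16 weights.
    """
    return params * bytes_per_param / 1e9


ratio = size_ratio(GEMMA2_PARAMS, LLAMA3_PARAMS)
print(f"Gemma 2 27B is {ratio:.0%} of the size of Llama 3 70B")
print(f"Gemma 2 27B weights: ~{weight_memory_gb(GEMMA2_PARAMS):.0f} GB")
print(f"Llama 3 70B weights: ~{weight_memory_gb(LLAMA3_PARAMS):.0f} GB")
```

Under these assumptions the ratio is below one half, which is consistent with the "less than half the size" statement. Actual deployment memory depends on precision, batch size, and context length.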

Gemma 2 is still pretraining. The chart above shows performance from the latest Gemma 2 checkpoint alongside benchmark pretraining metrics.

Stay tuned for the official release of Gemma 2 in the coming weeks!

Expanding the Responsible Generative AI Toolkit

To help developers conduct more thorough model evaluations, Google is open-sourcing the LLM Comparator as part of its Responsible Generative AI Toolkit. The LLM Comparator is a new interactive, visual tool for running effective side-by-side evaluations that assess the quality and reliability of model responses. To see it in action, explore the demo, which compares Gemma 1.1 with Gemma 1.0.

Google hopes that this tool will advance the toolkit’s mission of helping developers create AI applications that are innovative as well as safe and responsible.

As it continues to grow the Gemma family of open models, Google remains committed to fostering a collaborative environment where state-of-the-art AI technology and responsible development go hand in hand. Google looks forward to seeing what you build with these new resources and to working with the community to shape the future of AI.