Google Cloud Pub/Sub

Many businesses adopt a multi-cloud architecture for reasons such as avoiding provider lock-in, increasing redundancy, or using offerings that are unique to a particular cloud provider.

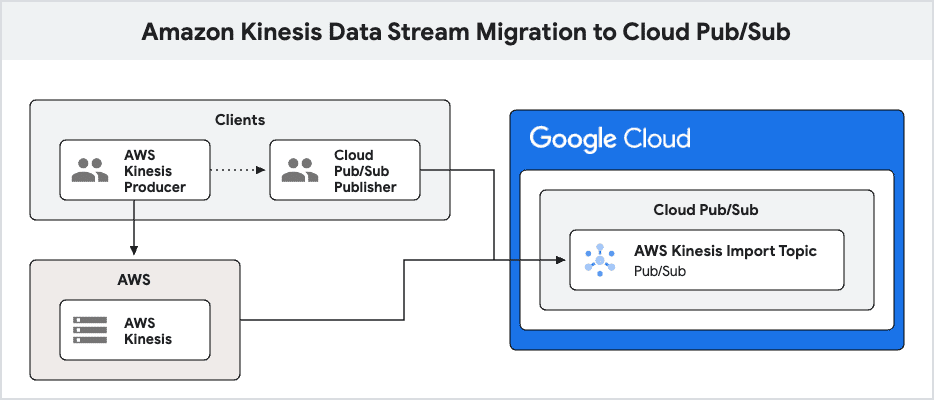

BigQuery, a fully managed, AI-ready, multi-cloud data analytics platform, is one of Google Cloud’s most popular and distinctive services. With BigQuery Omni’s unified management interface, you can query data in AWS or Azure using BigQuery and view the results in the Google Cloud console. If you also want to combine and move data between clouds in real time, Pub/Sub now offers a new feature that enables one-click streaming ingestion into Pub/Sub from external sources: import topics. Amazon Kinesis Data Streams is the first supported external source. Let’s examine how you can use import topics together with Pub/Sub’s BigQuery subscriptions to make your streaming AWS data available in BigQuery quickly and easily.

Google Pub/Sub

Getting to know import topics

Pub/Sub is a scalable, asynchronous messaging service that lets you decouple the services that produce messages from the services that consume them. With its client libraries, Pub/Sub can stream data from any source to any sink, and it is well integrated into the Google Cloud ecosystem. Through export subscriptions, Pub/Sub can write data automatically to BigQuery and Cloud Storage. It is natively integrated with Cloud Functions and Cloud Run, and it can also deliver messages to any publicly reachable destination, for example on Google Compute Engine, on Google Kubernetes Engine (GKE), or even on-premises.

Amazon Kinesis Data Streams

While export subscriptions write data out to BigQuery and Cloud Storage, import topics offer a fully managed and efficient way to read data from Amazon Kinesis Data Streams and ingest it directly into Pub/Sub. This greatly reduces the complexity of setting up data pipelines between clouds. Import topics also provide out-of-the-box monitoring, which gives you visibility into the health and performance of the ingestion process. Finally, import topics scale automatically, so no manual configuration is needed to handle variations in data volume.

Import topics make it simple to move streaming data from Amazon Kinesis Data Streams into Pub/Sub and enable multi-cloud analytics with BigQuery. Once an import topic has connected the two systems, you can also migrate the Amazon Kinesis producers to Pub/Sub publishers gradually, on your own schedule.

Please note that, at this time, only Amazon Kinesis consumers that use Enhanced Fan-Out are supported.

Using BigQuery to analyse the data from your Amazon Kinesis Data Streams

Now, picture yourself running a company that uses Amazon Kinesis Data Streams to store a highly variable volume of streaming data, and that relies on analysing this data in BigQuery for its decision-making. The first step is to create an import topic. You can do this in the Google Cloud console or with one of the official Pub/Sub client libraries. In the console, start by selecting “Create Topic” on the Pub/Sub page.
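If you prefer to script this step rather than use the console, the sketch below builds the URL and JSON body for a topic-creation call against the Pub/Sub REST API, with the Kinesis ingestion settings filled in. It only constructs the request and does not send it. The project ID, topic ID, ARNs, and service account are placeholder values chosen for illustration, and you should check the field names against the current API reference before relying on them.

```python
import json


def import_topic_request(project_id, topic_id, stream_arn, consumer_arn,
                         aws_role_arn, gcp_service_account):
    """Build the URL and JSON body for creating a Kinesis import topic."""
    name = f"projects/{project_id}/topics/{topic_id}"
    url = f"https://pubsub.googleapis.com/v1/{name}"
    body = {
        "ingestionDataSourceSettings": {
            "awsKinesis": {
                "streamArn": stream_arn,        # the Kinesis data stream to read
                "consumerArn": consumer_arn,    # Enhanced Fan-Out consumer
                "awsRoleArn": aws_role_arn,     # AWS role that Pub/Sub assumes
                "gcpServiceAccount": gcp_service_account,
            }
        }
    }
    return url, body


# All identifiers below are hypothetical placeholders.
url, body = import_topic_request(
    project_id="my-project",
    topic_id="kinesis-import",
    stream_arn="arn:aws:kinesis:us-east-1:123456789012:stream/my-stream",
    consumer_arn=("arn:aws:kinesis:us-east-1:123456789012:"
                  "stream/my-stream/consumer/my-consumer:1700000000"),
    aws_role_arn="arn:aws:iam::123456789012:role/pubsub-ingestion",
    gcp_service_account="ingest@my-project.iam.gserviceaccount.com",
)
print("PUT", url)
print(json.dumps(body, indent=2))
```

Sending the request additionally requires an OAuth access token and suitable permissions on both the Google Cloud and AWS sides, which are outside the scope of this sketch.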

Important

Pub/Sub starts reading from the Amazon Kinesis data stream as soon as you click “Create,” and publishes the messages to the import topic. If your Kinesis data stream already contains data, take precautions when creating an import topic to avoid losing it. Pub/Sub may drop messages published to a topic that has no subscriptions attached and does not have message retention enabled. Creating a default subscription at the moment of topic creation is not sufficient: these are still two separate operations, and the topic exists without the subscription for a brief period.
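To make the drop rule concrete, here is a toy model of it in Python. It is not how Pub/Sub is implemented; `ToyTopic` and its methods are invented for illustration and encode only the rule stated above: a message is dropped when the topic has no subscription and no message retention.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTopic:
    """Toy model of the drop rule; not Pub/Sub's real behaviour in detail."""
    retention_enabled: bool = False
    retained: list = field(default_factory=list)        # kept by retention
    subscriptions: dict = field(default_factory=dict)   # name -> backlog

    def subscribe(self, name):
        self.subscriptions[name] = []

    def publish(self, message):
        """Return True if the message is kept somewhere, False if dropped."""
        if self.retention_enabled:
            self.retained.append(message)
        if not self.subscriptions and not self.retention_enabled:
            return False
        for backlog in self.subscriptions.values():
            backlog.append(message)
        return True


# Import topic created first: a record arrives before any subscription exists.
late = ToyTopic()
first_kept = late.publish("record-1")
late.subscribe("bq-sub")
late.publish("record-2")

# Subscription attached before ingestion starts: nothing is lost.
early = ToyTopic()
early.subscribe("bq-sub")
early.publish("record-1")
early.publish("record-2")

print(first_kept, late.subscriptions["bq-sub"], early.subscriptions["bq-sub"])
```

In the first scenario `record-1` never reaches the subscription, which is the gap that the two approaches described in this section are designed to close.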

There are two ways to prevent data loss:

- Create a topic and then update it to make it an import topic:
  - Create a non-import topic.
  - Create a subscription to the topic.
  - Update the topic’s configuration to enable ingestion from Amazon Kinesis Data Streams, which turns it into an import topic.

- Enable message retention and seek your subscription:
  - Create an import topic with message retention enabled.
  - Create a subscription to the topic.
  - Seek the subscription to a timestamp that precedes the topic’s creation.
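For the second approach, the seek step can be sketched as the REST request below. It assumes you recorded a timestamp just before creating the topic; the one-minute safety margin, the project ID, and the subscription ID are illustrative choices rather than recommendations from the Pub/Sub documentation. The code only builds the request and does not send it.

```python
from datetime import datetime, timedelta, timezone


def seek_request(project_id, subscription_id, topic_created_at,
                 margin=timedelta(minutes=1)):
    """Build a seek call that rewinds to just before the topic was created."""
    target = (topic_created_at - margin).astimezone(timezone.utc)
    url = (f"https://pubsub.googleapis.com/v1/projects/{project_id}"
           f"/subscriptions/{subscription_id}:seek")
    # The seek body carries an RFC 3339 timestamp in the "time" field.
    body = {"time": target.strftime("%Y-%m-%dT%H:%M:%SZ")}
    return url, body


# Hypothetical timestamp noted just before the import topic was created.
created_at = datetime(2024, 6, 1, 12, 0, 0, tzinfo=timezone.utc)
seek_url, seek_body = seek_request("my-project", "kinesis-sub", created_at)
print("POST", seek_url)
print(seek_body)
```

The seek can only replay messages that the topic has retained, so it depends on message retention having been enabled when the import topic was created.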

Keep in mind that export subscriptions start writing data the moment they are created. As a result, seeking too far into the past may produce duplicates. For this reason, the first approach is the recommended one when using export subscriptions.

To send the data to BigQuery, create a BigQuery subscription by going to the subscriptions page of the Pub/Sub console and selecting “Create Subscription.”
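The same subscription can be described as a REST request. The sketch below builds the URL and body for creating a BigQuery subscription; the project, topic, subscription, and table names are placeholders, and the choice to write message metadata is an assumption made for this example. The destination table must already exist with a compatible schema.

```python
import json


def bigquery_subscription_request(project_id, subscription_id, topic_id,
                                  table, write_metadata=True):
    """Build the URL and JSON body for creating a BigQuery subscription."""
    name = f"projects/{project_id}/subscriptions/{subscription_id}"
    url = f"https://pubsub.googleapis.com/v1/{name}"
    body = {
        "topic": f"projects/{project_id}/topics/{topic_id}",
        "bigqueryConfig": {
            "table": table,                    # "project.dataset.table"
            "writeMetadata": write_metadata,   # also store message_id, publish_time, ...
        },
    }
    return url, body


# Placeholder names for illustration only.
sub_url, sub_body = bigquery_subscription_request(
    project_id="my-project",
    subscription_id="kinesis-to-bq",
    topic_id="kinesis-import",
    table="my-project.analytics.kinesis_events",
)
print("PUT", sub_url)
print(json.dumps(sub_body, indent=2))
```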

Pub/Sub autoscales by continuously monitoring the Amazon Kinesis data stream. To keep an up-to-date view of the stream’s shards, it periodically calls the Amazon Kinesis ListShards API. If the shards of the Amazon Kinesis data stream change (resharding), Pub/Sub automatically adjusts its ingestion configuration so that all data is still captured and published to your Pub/Sub topic.

To ensure continuous ingestion from the various shards of the Amazon Kinesis data stream, Pub/Sub uses the Amazon Kinesis SubscribeToShard API to open a persistent connection to every shard that either has no parent shard or whose parent shard has already been fully ingested. Pub/Sub does not start consuming a child shard until its parent has been fully consumed. Nevertheless, because messages are published without an ordering key, there are no strict ordering guarantees. Each Amazon Kinesis record is converted into a corresponding Pub/Sub message by copying the record’s data blob into the data field of the message before it is published. Pub/Sub aims to maximise the data read rate for each Amazon Kinesis shard.
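The parent-before-child rule can be illustrated with a small function that, given the current shard lineage, returns the shards that are eligible for reading. This is a simplified sketch rather than Pub/Sub’s actual scheduler: `readable_shards` is a hypothetical helper, and it assumes each shard has at most one parent, which ignores the second parent that a merged Kinesis shard can have.

```python
def readable_shards(parents, fully_consumed):
    """Return shards that may be read now.

    parents: dict mapping shard ID -> parent shard ID (or None).
    fully_consumed: set of shard IDs that have been read to the end.
    """
    ready = []
    for shard, parent in parents.items():
        if shard in fully_consumed:
            continue  # nothing left to read from this shard
        if parent is None or parent in fully_consumed:
            ready.append(shard)
    return sorted(ready)


# shard-0 was split into shard-1 and shard-2 by a resharding operation.
lineage = {"shard-0": None, "shard-1": "shard-0", "shard-2": "shard-0"}

before = readable_shards(lineage, fully_consumed=set())
after = readable_shards(lineage, fully_consumed={"shard-0"})
print(before, after)
```

Before the parent is drained only `shard-0` is eligible; once it is fully consumed, both children become readable at the same time, which is one reason ordering across shards is not guaranteed.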

You can now query the BigQuery table directly to confirm that the data transfer succeeded. Once the data from Amazon Kinesis has been written to the table, a short SQL query verifies that it is ready for further analysis and for integration into larger analytics workflows.
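As one possible verification query, the helper below assembles a SQL statement that lists the most recently published rows. It assumes the BigQuery subscription was created with metadata writing enabled, so that the `message_id` and `publish_time` columns exist next to `data`; the table name is a placeholder. The resulting string can be pasted into the BigQuery console or passed to a BigQuery client.

```python
def verification_query(table, limit=10):
    """Build a query listing the newest rows written by the subscription."""
    return (
        "SELECT message_id, publish_time, data\n"
        f"FROM `{table}`\n"
        "ORDER BY publish_time DESC\n"
        f"LIMIT {int(limit)}"
    )


# Placeholder table name for illustration only.
query = verification_query("my-project.analytics.kinesis_events", limit=5)
print(query)
```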

Keeping an eye on your cross-cloud import

To keep operations running smoothly, monitor your data ingestion process. Google Cloud has added three new Pub/Sub metrics that let you assess the health and performance of an import topic: the state of the topic, the number of messages ingested, and the number of bytes ingested. If the state is anything other than “ACTIVE,” ingestion is blocked, for example by a misconfiguration, a missing stream, or a missing consumer. For the full list of possible error states and their troubleshooting steps, consult the official documentation. These metrics are available on the topic detail page, where you can see the throughput, the messages per second, and whether your topic is in the “ACTIVE” state.
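The sketch below shows how a monitoring script might interpret the topic state. The state names and metric types reflect my understanding of the Pub/Sub API and the Cloud Monitoring metrics list and should be checked against the official documentation; the hint texts and the `diagnose` helper are illustrative.

```python
# Metric types believed to back the three measurements described above.
INGESTION_METRICS = [
    "pubsub.googleapis.com/topic/ingestion_data_source_state",
    "pubsub.googleapis.com/topic/ingestion_message_count",
    "pubsub.googleapis.com/topic/ingestion_byte_count",
]

# State name -> illustrative troubleshooting hint (hint wording is ours).
STATE_HINTS = {
    "ACTIVE": "Ingestion is running.",
    "KINESIS_PERMISSION_DENIED": "Check the AWS role and its trust policy.",
    "PUBLISH_PERMISSION_DENIED": "Check the service account's publish rights.",
    "STREAM_NOT_FOUND": "The Kinesis data stream is missing.",
    "CONSUMER_NOT_FOUND": "The Kinesis consumer is missing.",
}


def diagnose(state):
    """Return (ingestion_blocked, hint) for a reported topic state."""
    hint = STATE_HINTS.get(state, "Unknown state; see the documentation.")
    return state != "ACTIVE", hint


for state in ("ACTIVE", "STREAM_NOT_FOUND"):
    blocked, hint = diagnose(state)
    print(state, "blocked" if blocked else "ok", "-", hint)
```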

In summary

For many businesses, operating across several cloud environments has become standard practice. Even if you use separate clouds for different parts of your organisation, you should be able to take advantage of the best features that each cloud has to offer, and that may require moving data back and forth between them. With Pub/Sub, transferring data from AWS into Google Cloud is now simple. Visit Pub/Sub in the Google Cloud console to get started, or register for a free trial to begin right away.