Three years ago, we launched Vertex AI to provide the best AI/ML platform for accelerating AI workloads: one platform with every ML tool a business needs. Vertex AI now supports generative AI, provides developer-friendly tools for building applications for popular gen AI use cases, and offers over 100 large models from Google, open-source contributors, and third parties.

While data science and machine learning engineering remain our focus, Vertex AI has also attracted many developers who are using AI for the first time. We’re excited to introduce several new Vertex AI capabilities that can help data scientists and enterprises manage MLOps more effectively.

Colab Enterprise is a managed service from Google that combines the simplicity of Colab notebooks with enterprise-level security and compliance. It enters public preview today and is expected to reach general availability (GA) in September. Colab Enterprise lets data scientists accelerate AI workflows with the full range of Vertex AI platform features, BigQuery connectivity for direct data access, and code completion and generation.

Ray on Vertex AI expands our open-source support for scaling AI workloads. We are also improving MLOps for gen AI with tuning across modalities, model evaluation, and a new Vertex AI Feature Store with support for embeddings. Customers can use these capabilities throughout the AI/ML lifecycle, from prototyping and experimentation to deploying and maintaining models. Let’s look at how these announcements can help organizations advance their AI strategy.

Google Cloud integration for Colab boosts collaboration and productivity

To experiment, collaborate, and iterate on foundation models, data scientists rely on notebooks, yet many still work in silos on local laptops without security, governance, or access to hardware suited to AI/ML.

Colab, a cloud-hosted, browser-based Jupyter notebook environment developed by Google Research, lets users share projects and insights in notebooks that combine Python code with charts, images, HTML, and LaTeX. It has over 7 million monthly active users. Because 67% of Fortune 100 companies already use Colab, we’re excited to offer these capabilities together with Google Cloud’s enterprise-ready security, data management, and reliability.

Data scientists can get started quickly, with no setup required. Powered by Vertex AI, Colab Enterprise provides access to Model Garden, tuning tools, configurable compute resources and machine types, and data science and MLOps tooling.

With Colab Enterprise, the notebook experience in BigQuery Studio, a unified and collaborative workspace, simplifies data discovery, exploration, analysis, and prediction. Teams can start a notebook in BigQuery to explore and prepare data, then open the same notebook in Vertex AI to continue working with specialized AI infrastructure and tooling in Colab Enterprise. Data is accessible wherever teams work, and sharing notebooks across team members and environments removes the barriers between data and AI work.

Flexible with more open-source support

Vertex AI already supports the open-source frameworks that data science teams rely on, including TensorFlow, PyTorch, scikit-learn, and XGBoost. Today, we’re excited to add support for Ray, an open-source unified compute framework for scaling AI and Python applications.

Ray on Vertex AI is a managed offering with enterprise-grade security that improves productivity, cost efficiency, and operational efficiency. It integrates with existing workflows across Colab Enterprise, Training, Predictions, and MLOps.

Data scientists can start in Vertex AI’s Model Garden to see how Colab Enterprise and Ray on Vertex AI work together, choosing from open-source models such as Llama 2, Dolly, Falcon, and Stable Diffusion. One click opens a model in a Colab Enterprise notebook for experimentation, tuning, and prototyping. Ray on Vertex AI supports reinforcement learning and is scalable and performant, which makes it well suited to training open gen AI models and to making efficient use of compute resources. By attaching a Ray cluster to a Colab Enterprise notebook, teams can quickly scale out training jobs. From the notebook, a model can then be deployed to a managed, autoscaling endpoint.

The simplicity of Ray on Vertex AI is attracting customers

“Order volume growth, commodity shortages, and shifting customer purchasing trends have strained the supply chain, and businesses need to be driven by data and AI. Ray on Vertex AI makes it easier to scale up training and to train machine learning and reinforcement learning agents that optimize across warehouse operating conditions. With Vertex AI, we can help customers save money during supply chain disruptions,” said Matthew Haley, Lead AI Scientist, and Murat Cubuktepe, Senior AI Scientist, at Dematic, a materials handling, software, and services company.

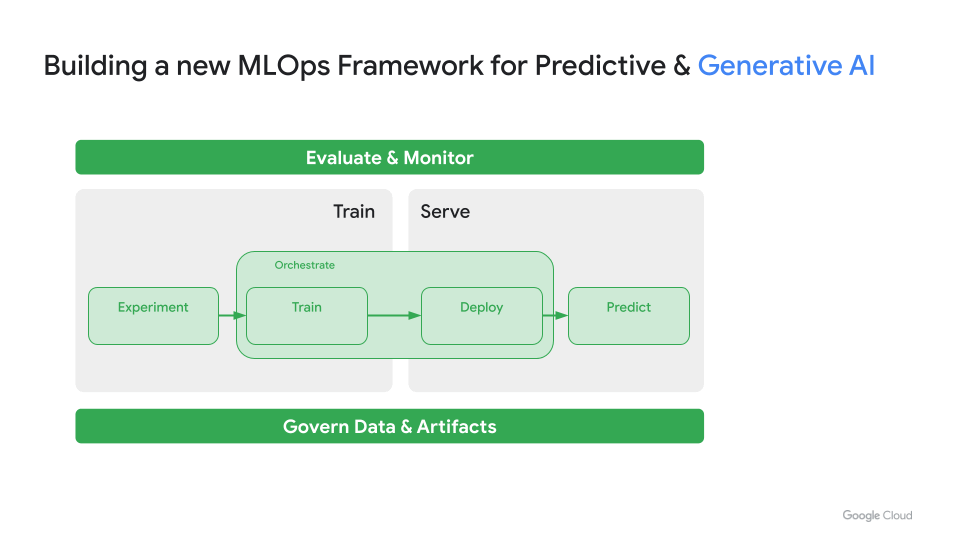

Complete MLOps in gen AI

Customers often ask whether gen AI changes their MLOps requirements. Existing MLOps investments remain valuable: customizing models with enterprise data, managing them in a central repository, orchestrating workflows with pipelines, deploying models to production through endpoints or batch processing, and monitoring them are all still good practices.

Gen AI does, however, introduce new challenges that may require businesses to adjust their MLOps strategy, including:

- Managing AI infrastructure: Gen AI requires more infrastructure for training, tuning, and serving as model sizes grow.

- Customizing models with new techniques: Multi-task models can be customized through prompt engineering, supervised tuning, and reinforcement learning from human feedback (RLHF).

- Governing new artifact types: Prompts, tuning pipelines, and embeddings all need governance.

- Monitoring output: Responsible AI features must check generated output for safety and for recitation when models are used in production.

- Curating and connecting enterprise data: Many use cases require fresh, relevant data from internal systems and corpora. Grounding responses in cited facts increases confidence in the results.

- Evaluating performance: Unlike with predictive ML, evaluating generated content or task quality is complex.

We created an enterprise-ready MLOps Framework for predictive and gen AI to help you overcome these challenges.

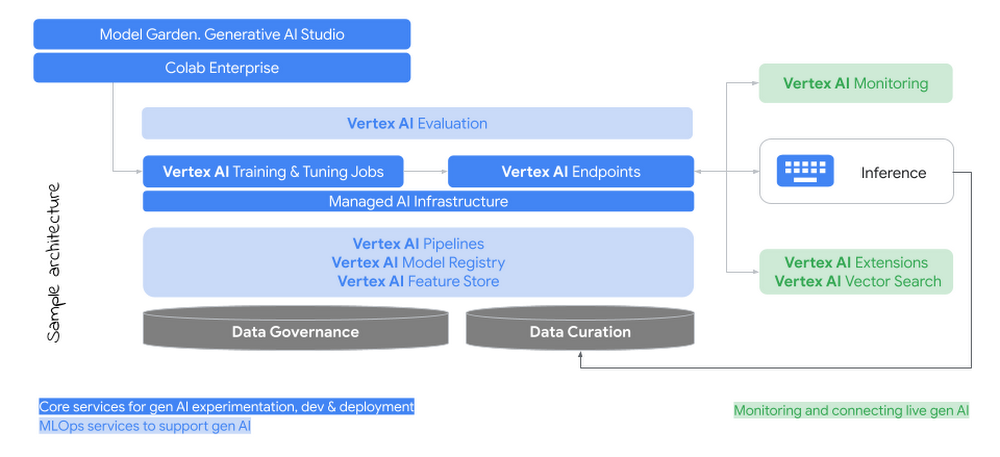

Vertex AI draws on two decades of experience running advanced AI/ML workloads at scale, so many of its existing capabilities already suit gen AI. For AI infrastructure, teams can choose among GPUs and TPUs for large models to meet their price and performance goals. Vertex AI Pipelines can run tuning pipelines, including RLHF. Model Registry manages the lifecycle of both predictive and gen AI models. Data scientists can reuse artifacts, with version control and deployment through CI/CD.

Newer capabilities help teams take gen AI to production. Google Cloud is the only hyperscale cloud provider that offers a curated collection of open-source, first-party, and third-party models. Vertex AI also supports supervised tuning and RLHF, provides security, safety, and bias features based on Google’s Responsible AI Principles, and includes recently announced extensions for connecting models to external data sources and capabilities.

Today, we are adding the following new capabilities to MLOps for gen AI:

- Tuning across modalities: We’re releasing supervised tuning for PaLM 2 Text and a public preview of RLHF. We also offer Style Tuning for Imagen, so companies can create brand-specific or creative images with as few as 10 reference images.

- Model evaluation: Two new features help organizations continuously evaluate model quality. Automatic Metrics evaluates a model on a defined task against a “ground truth” dataset, and Automatic Side by Side uses a large model to compare the outputs of multiple models under test.

- Feature Store with support for embeddings: Vertex AI Feature Store, now built on BigQuery, supports embeddings while helping teams avoid data duplication and preserve data access policies. A vector embedding data type in Feature Store simplifies storing, managing, and retrieving unstructured data in real time.
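To make the model evaluation item above more concrete, here is a minimal sketch of the general idea behind scoring a model against a “ground truth” dataset. It is not the Vertex AI implementation or API. The metric choices (exact match and token-level F1), the `toy_model` function, and the two-row dataset are all assumptions made for illustration.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace; a deliberately simple normalizer."""
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the normalized token sequences are identical, else 0.0."""
    return float(tokenize(prediction) == tokenize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token-level precision and recall."""
    pred, ref = tokenize(prediction), tokenize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def evaluate(model, dataset):
    """Average each metric over (prompt, reference) pairs."""
    if not dataset:
        raise ValueError("dataset must contain at least one example")
    totals = {"exact_match": 0.0, "token_f1": 0.0}
    for prompt, reference in dataset:
        prediction = model(prompt)
        totals["exact_match"] += exact_match(prediction, reference)
        totals["token_f1"] += token_f1(prediction, reference)
    return {name: total / len(dataset) for name, total in totals.items()}


# Hypothetical stand-in for a tuned model: a lookup table of canned answers.
CANNED_ANSWERS = {
    "Capital of France?": "Paris",
    "Largest planet?": "the planet Jupiter",
}


def toy_model(prompt: str) -> str:
    return CANNED_ANSWERS.get(prompt, "")


# Hypothetical ground-truth dataset of (prompt, reference answer) pairs.
GROUND_TRUTH = [
    ("Capital of France?", "paris"),
    ("Largest planet?", "Jupiter"),
]

print(evaluate(toy_model, GROUND_TRUTH))
```

Running the same `evaluate` call after each tuning run gives a simple way to track quality over time. A side-by-side comparison would replace the fixed references with a judge model that picks the better of two candidate outputs.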

“The new Vertex AI Feature Store capabilities are a major operational win for our team,” said Gabriele Lanaro, Senior Machine Learning Engineer at Wayfair. “We expect overall improvements in areas like:

- Inference and Training – Fast, intuitive BigQuery operations simplify our MLOps pipeline and make it easier for data scientists to experiment.

- Feature Sharing – BigQuery datasets with familiar permission management help us catalog our data with existing tools.

- Embeddings Support – We use our in-house vector embeddings in many applications, including scam detection, where they encode customer behavior. Having vectors become first-class citizens in Vertex AI Feature Store is amazing. Offloading more MLOps work helps our teams launch models faster by reducing system maintenance.”
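As a rough illustration of why embedding retrieval matters for use cases like the one described above, the sketch below ranks stored behavior embeddings by cosine similarity to a query vector. It uses plain Python and an in-memory dictionary, not the Vertex AI Feature Store API. The entity IDs, vectors, and function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query, store, k=2):
    """Return the k entity IDs whose embeddings are most similar to the query."""
    ranked = sorted(
        store,
        key=lambda entity_id: cosine_similarity(query, store[entity_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical in-memory stand-in for a feature store:
# entity ID -> behavior embedding.
EMBEDDINGS = {
    "customer_a": [0.9, 0.1, 0.0],
    "customer_b": [0.8, 0.2, 0.1],
    "customer_c": [0.0, 0.1, 0.9],
}

# Find the two customers whose behavior is closest to the query vector.
print(nearest([1.0, 0.0, 0.0], EMBEDDINGS, k=2))
```

A managed store would typically replace the linear scan with an index so lookups stay fast as the number of entities grows.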

Gen AI MLOps Reference Architecture

As AI becomes more deeply integrated into organizations and more people work with it, the choice of AI platform matters more. We want to give you enterprise-ready AI that lets you innovate today.

To get started with Colab Enterprise, see the documentation. To learn more about managing gen AI, try the new AI Readiness Quick Check, which is based on Google’s AI Adoption Framework.
