Azure powers intelligent services that have recently caught our attention, such as Microsoft Copilot, Bing, and Azure OpenAI Service. Large language models (LLMs) are the secret sauce behind these services, enabling a wide range of generative AI applications such as Microsoft Office 365, chatbots, and search engines. Microsoft has only begun to scratch the surface of what the newest LLMs can do, yet they are already bringing about a generational shift in how people use artificial intelligence in their daily lives and how they think about its evolution. Developing more capable, equitable foundational LLMs that can accurately gather and convey information is therefore essential.

How Microsoft uses LLMs to their fullest potential

But developing new LLMs or improving the accuracy of existing ones is a difficult task. Building and training more capable LLMs requires supercomputers with massive computational power, and their hardware and software must be used efficiently and at scale without sacrificing performance. This is where the sheer supercomputing capacity of the Azure cloud shines, and why setting a new scale record for LLM training matters.

To bring the most complex AI use cases to market quickly, customers need dependable and efficient infrastructure. Microsoft’s goal is to meet these demands by building cutting-edge infrastructure. The latest MLPerf Training v3.1 results demonstrate Microsoft’s continued commitment to building high-quality, high-performance cloud platforms that achieve unmatched efficiency when training LLMs at scale. The approach is to stress every system component with enormous workloads while accelerating the build process to achieve high quality.

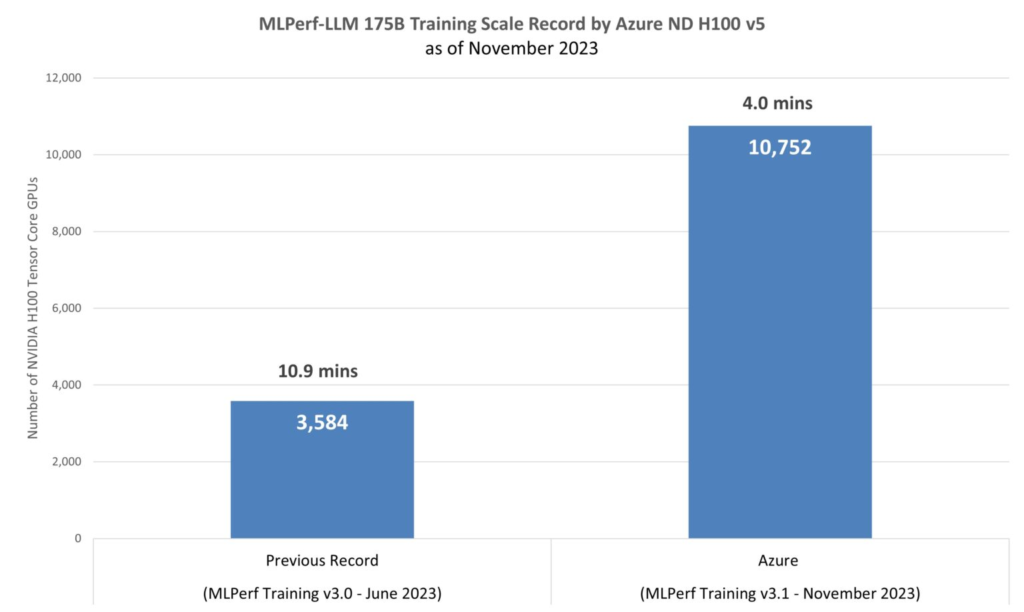

On 1,344 ND H100 v5 virtual machines (VMs), which together contain 10,752 NVIDIA H100 Tensor Core GPUs connected by NVIDIA Quantum-2 InfiniBand networking, Azure completed the MLPerf training run for the 175-billion-parameter GPT-3 model in four minutes (figure 1). The workload uses near-real-world datasets and restarts from 2.4 terabytes of checkpoints, closely resembling a production LLM training scenario. It stresses the Tensor Cores of the H100 GPUs, the direct-attached Non-Volatile Memory Express (NVMe) disks, the NVLink interconnect that provides fast communication with the GPUs’ high-bandwidth memory, and the cross-node 400 Gb/s InfiniBand fabric.
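The cluster figures above fit together with simple arithmetic. The short Python sketch below uses only the numbers quoted in this article to derive the GPUs per VM implied by the totals and a rough GPU-hour figure for the run. The `gpus_per_vm` and `gpu_hours` helpers are hypothetical, and the four-minute duration is the rounded value from the text, so the GPU-hour result is only an approximation.

```python
# Back-of-the-envelope arithmetic for the MLPerf Training v3.1 GPT-3 run on Azure.
# All inputs are the rounded figures quoted in the article; the helper functions
# are illustrative and not part of any Azure or MLPerf tooling.

NUM_VMS = 1_344        # ND H100 v5 virtual machines
TOTAL_GPUS = 10_752    # NVIDIA H100 Tensor Core GPUs across all VMs
RUN_MINUTES = 4.0      # reported training time, rounded to four minutes


def gpus_per_vm(total_gpus: int, num_vms: int) -> int:
    """Return GPUs per VM, raising if the GPUs do not split evenly across VMs."""
    per_vm, remainder = divmod(total_gpus, num_vms)
    if remainder:
        raise ValueError("GPU count does not divide evenly across VMs")
    return per_vm


def gpu_hours(total_gpus: int, minutes: float) -> float:
    """Approximate GPU-hours consumed: GPU count times wall-clock hours."""
    return total_gpus * minutes / 60.0


print(gpus_per_vm(TOTAL_GPUS, NUM_VMS))              # 8
print(round(gpu_hours(TOTAL_GPUS, RUN_MINUTES), 1))  # 716.8
```

Eight GPUs per VM is consistent with the ND H100 v5 configuration of eight H100 GPUs per VM. Because the run time is rounded, treat the GPU-hour figure as an order-of-magnitude estimate.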

According to MLCommons Executive Director David Kanter, Azure’s submission, the largest in the history of MLPerf Training, shows the remarkable progress Azure has made in optimizing the scale of training. He adds that the benchmarks developed by MLCommons demonstrate the capabilities of contemporary AI hardware and software, highlighting the ongoing progress that has been made and pointing the way toward even more powerful and efficient AI systems.

Microsoft’s dedication to efficiency

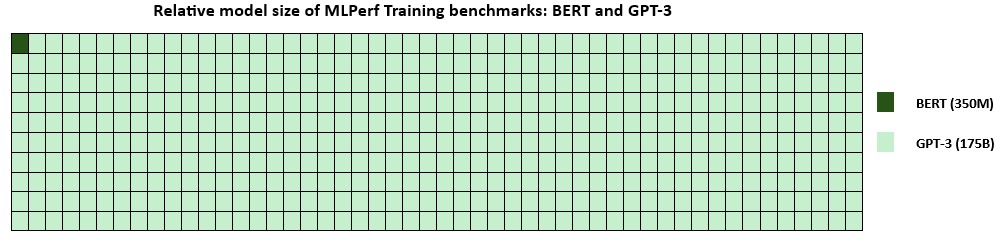

Microsoft unveiled the ND H100 v5-series in March 2023, when it beat Microsoft’s previous record by training a 350-million-parameter Bidirectional Encoder Representations from Transformers (BERT) language model in 5.4 minutes. This amounted to a fourfold reduction in BERT training time within 18 months, reflecting Microsoft’s ongoing effort to give its users the best possible performance.

Today’s results are for GPT-3, the 175-billion-parameter large language model in the MLPerf Training benchmark suite, which is 500 times larger than the previously benchmarked BERT model (figure 2). Azure’s latest training time is a 2.7x improvement over the previous record from MLPerf Training v3.0. The v3.1 submission highlights how optimizing a model that faithfully represents modern AI workloads can reduce both training time and cost.
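The two ratios in this paragraph can be checked directly. The Python sketch below computes the GPT-3 to BERT parameter ratio from the stated model sizes and back-calculates the previous v3.0 record time implied by a 2.7x improvement on a run of about four minutes. The helper names are hypothetical, and because both inputs are rounded, the implied previous time is a rough estimate rather than the published v3.0 result.

```python
# Ratio checks using only the figures stated in the article.
# The helpers are illustrative; the implied previous time is approximate
# because the four-minute run time and the 2.7x factor are both rounded.

GPT3_PARAMS = 175_000_000_000  # GPT-3 parameter count
BERT_PARAMS = 350_000_000      # BERT parameter count
AZURE_MINUTES = 4.0            # Azure v3.1 GPT-3 training time, rounded
SPEEDUP = 2.7                  # improvement over the MLPerf Training v3.0 record


def size_ratio(larger: int, smaller: int) -> float:
    """How many times larger one model is than another, by parameter count."""
    return larger / smaller


def implied_previous_time(new_minutes: float, speedup: float) -> float:
    """Earlier record time implied by a new time and a speedup factor."""
    return new_minutes * speedup


print(size_ratio(GPT3_PARAMS, BERT_PARAMS))                     # 500.0
print(round(implied_previous_time(AZURE_MINUTES, SPEEDUP), 1))  # 10.8
```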

The power of virtualization

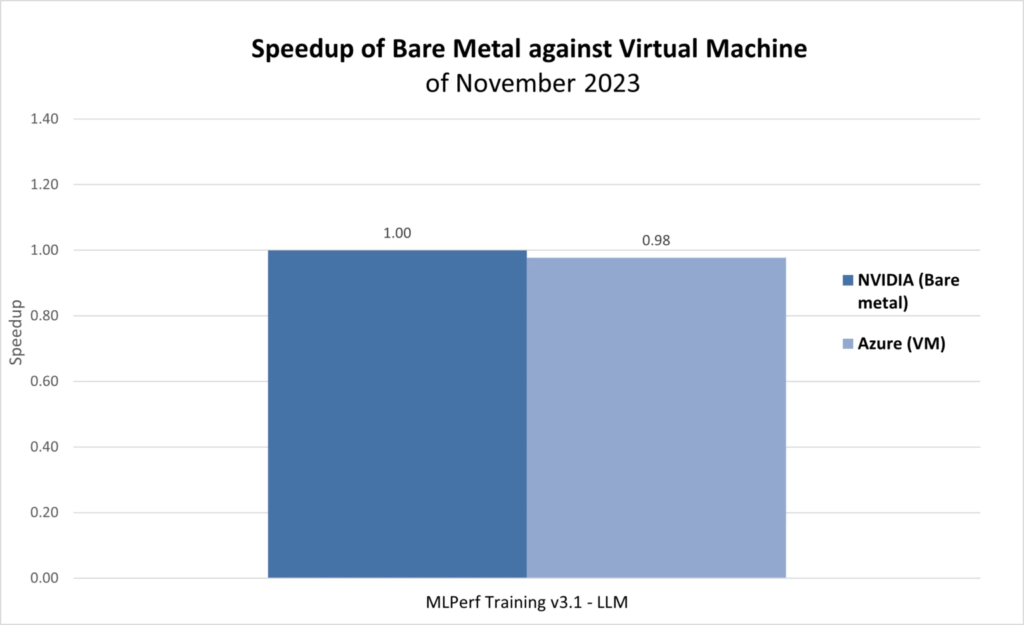

NVIDIA’s bare-metal submission to the MLPerf Training v3.1 LLM benchmark, using 10,752 NVIDIA H100 Tensor Core GPUs, achieved a training time of 3.92 minutes. Azure’s run on VMs took only about 2 percent longer than this bare-metal submission, reflecting best-in-class virtual machine performance among all cloud HPC instance offerings (figure 3).
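To see how the 2 percent figure relates to the times involved, the Python sketch below applies that overhead to NVIDIA’s 3.92-minute bare-metal time and then recovers the relative overhead from the result. The helper functions are hypothetical, and the VM time is implied from the rounded percentage rather than taken from the published Azure result.

```python
# Relating the bare-metal time and the ~2 percent VM overhead quoted above.
# The VM time is derived from the rounded overhead, not read from MLPerf results.

BARE_METAL_MINUTES = 3.92  # NVIDIA bare-metal submission (3.1-2007)
VM_OVERHEAD = 0.02         # approximate increase in training time on Azure VMs


def implied_vm_minutes(bare_metal: float, overhead: float) -> float:
    """Training time on VMs implied by a bare-metal time plus a fractional overhead."""
    return bare_metal * (1.0 + overhead)


def relative_overhead(vm_minutes: float, bare_metal: float) -> float:
    """Fractional slowdown of the VM run relative to bare metal (lower is better)."""
    return (vm_minutes - bare_metal) / bare_metal


vm_minutes = implied_vm_minutes(BARE_METAL_MINUTES, VM_OVERHEAD)
print(round(vm_minutes, 2))                                         # 4.0
print(round(relative_overhead(vm_minutes, BARE_METAL_MINUTES), 4))  # 0.02
```

The implied VM time of roughly 4.0 minutes is consistent with the four-minute Azure run described earlier.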

Figure 3: Training times for the GPT-3 model (175 billion parameters) in MLPerf Training v3.1, comparing the NVIDIA submission on the bare-metal platform (3.1-2007) with Azure on virtual machines (3.1-2002).

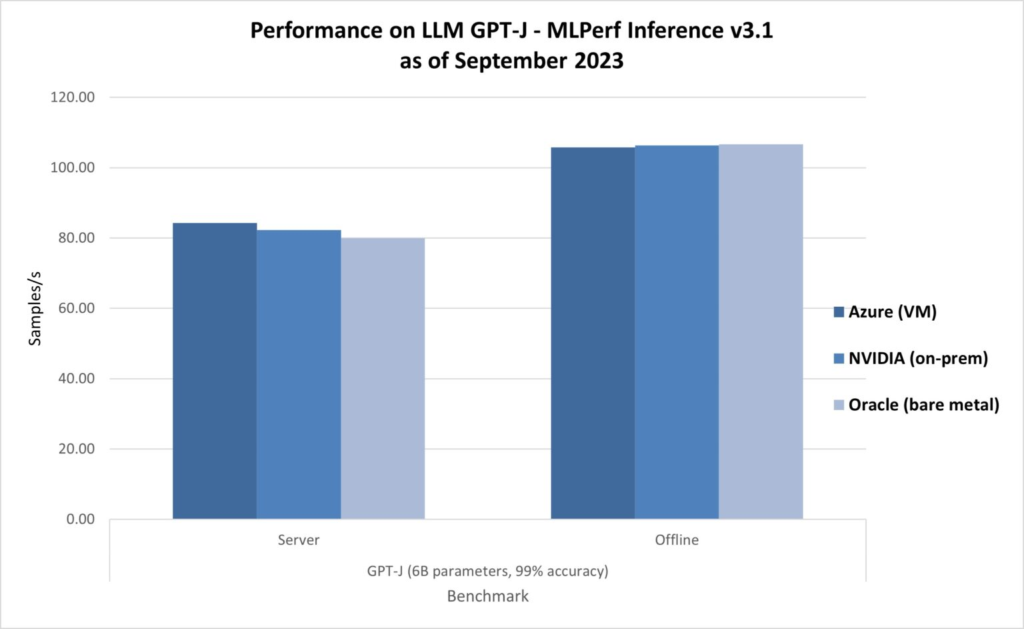

Azure ND H100 v5 VMs also delivered leading AI inference results in MLPerf Inference v3.1. Echoing the efficiency of virtual machines in training, the ND H100 v5-series achieved 0.99x to 1.05x the performance of bare-metal submissions on the same NVIDIA H100 Tensor Core GPUs (figure 4).
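Unlike the training comparison, which measures time (lower is better), these inference results are throughput-based, so a relative performance above 1.0x means the VM served more samples or queries per second than the bare-metal reference. The Python sketch below converts the reported 0.99x to 1.05x range into percentage differences; the `percent_difference` helper is hypothetical.

```python
# Converting VM/bare-metal throughput ratios into percent differences.
# The ratios are the range reported in the article; the helper is illustrative.

RELATIVE_PERF_RANGE = (0.99, 1.05)  # ND H100 v5 vs. bare-metal H100 submissions


def percent_difference(relative_perf: float) -> float:
    """Convert a VM/bare-metal throughput ratio into a percent difference."""
    return (relative_perf - 1.0) * 100.0


for ratio in RELATIVE_PERF_RANGE:
    print(f"{ratio:.2f}x -> {percent_difference(ratio):+.1f}%")
# 0.99x -> -1.0%
# 1.05x -> +5.0%
```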

Figure 4: Performance of the ND H100 v5-series (3.1-0003) compared with NVIDIA H100 Tensor Core GPU submissions on premises and on bare metal (3.1-0107 and 3.1-0121). All results were obtained with the GPT-J benchmark from MLPerf Inference v3.1 in the Offline and Server scenarios at the 99 percent accuracy target.

In conclusion, the Azure ND H100 v5-series, designed for performance, scalability, and adaptability, provides the best AI infrastructure available, with remarkable throughput and low latency for both training and inferencing operations in the cloud.
