Today, ASUS revealed details of its Computex 2024 showcase, which will feature a wide array of AI servers for uses ranging from generative AI to innovative storage solutions. The focal point of the event is the ultimate ASUS AI solution, the all-new ASUS ESC AI POD with the NVIDIA GB200 NVL72 system. Also on show are the most recent ASUS NVIDIA MGX-powered systems, such as the NVIDIA GB200 NVL2-equipped ESC NM1-E1 and the NVIDIA GH200 Grace Hopper Superchip-equipped ESR1-511N-M1, along with the latest ASUS solutions built around NVIDIA HGX H200 and Blackwell GPUs.

ASUS is dedicated to providing complete software services and solutions, from edge computing deployments to hybrid servers. In an effort to promote the wider adoption of AI across a range of businesses, ASUS showcases its expertise in server infrastructure, storage, data centre architecture, and secure software platforms.

Presenting the ASUS ESC AI POD (NVIDIA GB200 NVL72 with ESC NM2N721-E1)

ASUS’s extensive experience in creating AI servers with unmatched efficiency and performance has been strengthened by its partnership with NVIDIA. One of the showcase’s centrepieces is the ESC AI POD with NVIDIA GB200 NVL72, an NVIDIA Blackwell-powered scale-up system in a larger form factor. The full-rack solution combines GPUs, CPUs, and switches to deliver trillion-parameter LLM training and real-time inference at high speed. To achieve the best AI processing performance, it features the newest NVIDIA GB200 Grace Blackwell Superchip and fifth-generation NVIDIA NVLink technology. It also offers liquid-to-liquid and liquid-to-air cooling options.

ASUS ESC AI POD

NVIDIA GB200 NVL72

- 36 NVIDIA Grace CPUs

- 72 NVIDIA Blackwell Tensor Core GPUs

- 5th Gen NVIDIA NVLink technology

- Supports trillion-parameter LLM inference and training with NVIDIA

- Scale-up ecosystem-ready

- ASUS Infrastructure Deployment Manager

- End-to-end services

The Blackwell GPU Breakthrough from NVIDIA

The ASUS ESC AI POD contains 72 NVIDIA Blackwell GPUs, each with 208 billion transistors. Each NVIDIA Blackwell GPU combines two reticle-limited dies, connected by a 10 terabytes per second (TB/s) chip-to-chip link, into a single unified GPU.

- LLM inference and energy efficiency: TTL = 50 milliseconds (ms) real time; FTL = 5 s; 32,768 input/1,024 output; training a 1.8T MoE model on 4096x HGX H100 scaled over InfiniBand (IB) vs. 456x GB200 NVL72 scaled over IB. Cluster size: 32,768

- A database join and aggregation workload with Snappy and Deflate compression, derived from the TPC-H Q4 query. Custom query implementations for x86, a single H100 GPU, and a single GPU from GB200 NVL72, compared against Intel Xeon 8480+

- Projected performance is subject to change.
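The rack-level figures quoted in this section can be cross-checked with some simple arithmetic. The sketch below is illustrative only: the CPU and GPU counts come from the specification list, while treating 208 billion transistors as a per-GPU figure and two dies per GPU are assumptions based on NVIDIA's published Blackwell description. The function name `rack_totals` is hypothetical.

```python
# Illustrative rack-level arithmetic for a GB200 NVL72 configuration.
# Counts come from the spec list; the per-GPU transistor figure and the
# two-dies-per-GPU layout are assumptions, not ASUS-published totals.

GRACE_CPUS = 36
BLACKWELL_GPUS = 72
TRANSISTORS_PER_GPU = 208_000_000_000  # assumed to be a per-GPU figure
DIES_PER_GPU = 2                       # two reticle-limited dies per GPU


def rack_totals(cpus=GRACE_CPUS, gpus=BLACKWELL_GPUS,
                transistors_per_gpu=TRANSISTORS_PER_GPU,
                dies_per_gpu=DIES_PER_GPU):
    """Return simple derived totals for one rack."""
    if cpus <= 0 or gpus % cpus != 0:
        raise ValueError("GPU count is expected to be a multiple of CPU count")
    return {
        "gpus_per_cpu": gpus // cpus,
        "gpu_dies": gpus * dies_per_gpu,
        "gpu_transistors": gpus * transistors_per_gpu,
    }


if __name__ == "__main__":
    totals = rack_totals()
    print(f"GPUs per Grace CPU: {totals['gpus_per_cpu']}")
    print(f"GPU dies in the rack: {totals['gpu_dies']}")
    print(f"GPU transistors: {totals['gpu_transistors'] / 1e12:.3f} trillion")
```

Under these assumptions, the two-to-one ratio of GPUs to CPUs matches the Grace Blackwell Superchip pairing of one Grace CPU with two Blackwell GPUs.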

Beyond Hardware

ASUS Software-Driven Approach

Surpassing rivals, ASUS specialises in creating customised data centre solutions and offering end-to-end services from edge computing deployments to hybrid servers. ASUS goes above and beyond by providing enterprises with software solutions rather than merely hardware. Its software-driven approach includes remote deployment and system verification, ensuring smooth operations that accelerate AI development.

Exceptionally Quick

NVIDIA GB200 NVL72 features fifth-generation NVLink technology.

The NVIDIA NVLink Switch provides 144 ports and a switching capacity of 14.4 TB/s, which allows nine switches to connect to the NVLink ports on each of the 72 NVIDIA Blackwell GPUs within a single NVLink domain.

Within a single compute tray, NVLink connectivity provides a direct connection to every GPU.
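The switch figures quoted here can be turned into a rough per-GPU link budget. The sketch below uses only the numbers given in this section (nine switches, 144 ports and 14.4 TB/s per switch, 72 GPUs). It assumes that ports are shared equally among GPUs and that switching capacity is spread evenly across ports; both are simplifications made for illustration, and the function name `nvlink_budget` is hypothetical.

```python
# Rough NVLink port and bandwidth budget for one NVLink domain.
# Inputs are the figures quoted in the text; the even split across GPUs
# and ports is an assumption made for illustration.

SWITCHES = 9
PORTS_PER_SWITCH = 144
SWITCH_CAPACITY_GB_S = 14_400  # 14.4 TB/s expressed in GB/s
GPUS = 72


def nvlink_budget(switches=SWITCHES, ports_per_switch=PORTS_PER_SWITCH,
                  switch_capacity_gb_s=SWITCH_CAPACITY_GB_S, gpus=GPUS):
    """Derive per-GPU port count and bandwidth from the switch figures."""
    total_ports = switches * ports_per_switch
    ports_per_gpu, unused_ports = divmod(total_ports, gpus)
    per_port_gb_s = switch_capacity_gb_s // ports_per_switch
    return {
        "total_switch_ports": total_ports,
        "ports_per_gpu": ports_per_gpu,
        "unused_ports": unused_ports,
        "per_port_gb_s": per_port_gb_s,
        "per_gpu_gb_s": ports_per_gpu * per_port_gb_s,
    }


if __name__ == "__main__":
    for name, value in nvlink_budget().items():
        print(f"{name}: {value}")
```

With these inputs the switch ports divide evenly among the 72 GPUs, which is consistent with every GPU having a direct path into the same NVLink domain.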

Increase Productivity, Reduce Heat

Liquid cooling

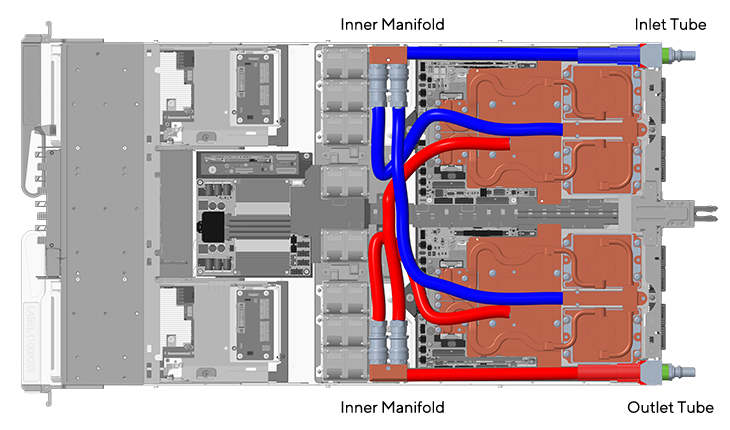

Hot and cold water flow in a single compute tray of the ASUS ESC AI POD

ASUS cooling systems maximise cooling efficiency at every stage, starting with the single ESC AI POD cabinet, continuing through the whole data centre, and ending with the cooling water tower, which completes the water cycle. To ensure efficient heat dissipation, ASUS offers a choice of liquid-to-liquid or liquid-to-air cooling.

Boosting AI performance with scalable ASUS MGX solutions

To support the development of generative AI applications, ASUS has unveiled a comprehensive range of servers built on the NVIDIA MGX architecture. These include the 2U ESC NM1-E1 and ESC NM2-E1 with NVIDIA GB200 NVL2, and the 1U ESR1-511N-M1. Harnessing the NVIDIA GH200 Grace Hopper Superchip, the ASUS NVIDIA MGX-powered offering is designed for large-scale AI and HPC applications, supporting high-performance computing, deep learning (DL) training and inference, data analytics, and fast, seamless data transfers.

Customised ASUS HGX system to satisfy HPC requirements

The newest HGX servers from ASUS, ESC N8 and ESC N8A, are designed for HPC and AI and are powered by NVIDIA Blackwell Tensor Core GPUs, with the ESC N8 pairing them with 5th Gen Intel Xeon Scalable processors. An enhanced thermal solution helps them operate at peak efficiency and lowers PUE. Designed for advances in AI and data science, these powerful NVIDIA HGX servers have a unique one-GPU-to-one-NIC configuration for maximum throughput in compute-intensive tasks.
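PUE (power usage effectiveness) is the ratio of total facility energy to the energy consumed by IT equipment, so a value closer to 1.0 means less overhead from cooling and power delivery. A minimal sketch of the calculation follows. The kilowatt figures in the usage example are hypothetical and are not ASUS measurements, and the function name `pue` is chosen for illustration.

```python
# Power usage effectiveness: total facility power divided by IT power.
# The sample figures below are hypothetical, for illustration only.


def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Return PUE for the given facility and IT equipment power draw."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("Facility power cannot be below IT equipment power")
    return total_facility_kw / it_equipment_kw


if __name__ == "__main__":
    # Hypothetical site: same IT load, two different cooling overheads.
    before = pue(total_facility_kw=1500.0, it_equipment_kw=1000.0)
    after = pue(total_facility_kw=1250.0, it_equipment_kw=1000.0)
    print(f"PUE before: {before:.2f}, after: {after:.2f}")
```

In this framing, a better thermal solution shows up as a smaller numerator for the same IT load, which is what pulls PUE down.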

Solutions for software-defined data centres

ASUS goes beyond providing top-notch hardware by supplying comprehensive software solutions tailored to enterprise demands, outperforming competitors in the creation of customised data centre solutions. ASUS services range from system verification to remote deployment, ensuring the seamless operations that are crucial for speeding up the development of artificial intelligence. They include a single, integrated package comprising a web portal, scheduling, resource allocation, and service operations.

Furthermore, to provide best-of-breed networking capabilities for generative AI infrastructures, ASUS AI server systems with integrated NVIDIA BlueField-3 SuperNICs and DPUs support the NVIDIA Spectrum-X Ethernet networking platform. In addition to optimising enterprise generative AI inference, NVIDIA NIM inference microservices, a component of the NVIDIA AI Enterprise software platform for generative AI, can accelerate the runtime of generative AI and streamline the development of generative AI applications. By working with independent software vendors (ISVs) such as APMIC, which specialises in large language models and enterprise applications, ASUS is also able to provide cloud or enterprise customers with tailored generative AI solutions.

By striking the right balance between hardware and software, ASUS enables its customers to accelerate their research and innovation projects. As ASUS continues to advance in the server space, its data centre solutions and ASUS AI Foundry Services are being deployed at key sites around the world.