Vertex AI Search Platform

Introduction

Sometimes you find a feature so simple and useful that you wonder how you ever managed without it. Vertex AI’s Grounding with Google Search is one of those features.

In this blog article, I describe why you need grounding with large language models (LLMs) and how Vertex AI’s Grounding with Google Search can help with minimal work on your part.

Why grounding with LLMs?

You might be asking: LLMs are fantastic, so why do I even need grounding?

Although LLMs excel at generating content, they fall short in a few areas:

- Generated content can contain fabricated information (hallucinations).

- Generated content may be outdated.

- Generated content doesn’t cite its sources.

- Generated content doesn’t reflect your private data, because the model knows only the publicly available information it was trained on.

Grounding LLMs is crucial for overcoming these constraints and building more dependable, trustworthy generative AI systems.

Grounding in Vertex AI

Google Cloud’s Vertex AI Agent Builder supports multiple grounding solutions, depending on whether your data is public or private.

Grounding Overview

In generative AI, grounding is the ability to connect model output to verifiable sources of information. If you give a model access to specific data sources, grounding tethers its output to that data and reduces the likelihood of fabricated content. This matters most in situations where accuracy and reliability are critical.
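To make the idea of “tethering output to data sources” concrete, here is a toy, self-contained sketch: a naive keyword-overlap retriever picks the most relevant passages, and the prompt instructs the model to answer only from those numbered passages and to cite them. This illustrates the general technique only; it is not how Vertex AI implements grounding, no model is called, and the `Source`, `retrieve`, and `build_grounded_prompt` names and the sample corpus are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Source:
    uri: str   # where the passage came from (used for citations)
    text: str  # the passage itself


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Source], k: int = 2) -> list[Source]:
    """Return up to k sources that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(s.text)), s) for s in corpus]
    matches = [(score, s) for score, s in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in matches[:k]]


def build_grounded_prompt(query: str, sources: list[Source]) -> str:
    """Tether the model to the retrieved sources and ask it to cite them."""
    lines = [
        "Answer using only the numbered sources below and cite them as [n].",
        "If the sources do not contain the answer, say that you don't know.",
        "",
    ]
    for i, source in enumerate(sources, start=1):
        lines.append(f"[{i}] ({source.uri}) {source.text}")
    lines += ["", f"Question: {query}"]
    return "\n".join(lines)


# Hypothetical private documents
corpus = [
    Source("hr/pto.md", "The PTO policy is unlimited; managers must approve requests."),
    Source("facilities/cafeteria.md", "The cafeteria opens at 8am."),
]
question = "What is the PTO policy?"
print(build_grounded_prompt(question, retrieve(question, corpus, k=1)))
```

A real system would replace the word-overlap score with a proper search index, but the shape is the same: retrieve, then constrain the model to what was retrieved.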

Grounding delivers the following benefits:

- Reduces model hallucinations, which are instances where the model generates content that isn’t factual.

- Anchors model responses to specific information.

- Increases the generated content’s usefulness and credibility.

In generative AI on Vertex AI, you can ground the output of supported models in two main ways:

- Grounding using Google Search

- Ground to your own data

Note: Writing your prompts in English is recommended.

Grounding using Google Search

Grounding with Google Search connects the Gemini model to up-to-date information on the internet. Ungrounded model responses are new content generated from patterns learned during training, so you won’t reliably get factual answers to time-specific questions, such as a request for a weather forecast. Because the internet provides access to current information, a grounded prompt can produce more specific, accurate responses with fewer hallucinations, and with its sources cited.

Here’s an example contrasting an ungrounded response with a grounded response:

| Ungrounded Gemini | Grounding with Google Search |
|---|---|
| Prompt: What is the 401k contribution limit? Response: For 2023, the annual contribution limit for 401(k) plans is $22,500, up from $20,500 in 2022. Catch-up contributions for individuals aged 50 and over will remain at $7,500. | Prompt: What is the 401k contribution limit? Response: Here’s the deal with 401(k) contribution limits in 2024: The basic limit for employee contributions is $23,000 for 2024. That’s up from $22,500 in 2023. If you’re 50 or older, you can also contribute an extra $7,000 in catch-up contributions |

When you use Grounding with Google Search in your production apps, you must display search entry points. See the Search entry points documentation for the requirements for using Grounding with Google Search.
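A grounded response carries metadata alongside the answer text, including the search entry point and the cited sources. The sketch below assumes the `generateContent` REST response shape, where each candidate has a `groundingMetadata` object with `webSearchQueries`, `searchEntryPoint.renderedContent`, and `groundingChunks[].web` entries; verify these field names against the current API reference. The `summarize_grounding` helper and the sample response are hypothetical.

```python
def summarize_grounding(response: dict) -> dict:
    """Pull the search entry point and cited web sources out of a
    generateContent REST response (field names assumed, see above)."""
    candidates = response.get("candidates") or [{}]
    metadata = candidates[0].get("groundingMetadata", {})
    sources = [
        (chunk["web"].get("title", ""), chunk["web"].get("uri", ""))
        for chunk in metadata.get("groundingChunks", [])
        if "web" in chunk
    ]
    return {
        # HTML snippet for the search entry point that the app displays
        "search_entry_point_html": metadata.get("searchEntryPoint", {}).get("renderedContent"),
        "search_queries": metadata.get("webSearchQueries", []),
        "sources": sources,
    }


# Hypothetical, hand-written response used only to exercise the helper
sample = {
    "candidates": [{
        "content": {"role": "model", "parts": [{"text": "..."}]},
        "groundingMetadata": {
            "webSearchQueries": ["next total solar eclipse US"],
            "searchEntryPoint": {"renderedContent": "<div>...</div>"},
            "groundingChunks": [
                {"web": {"uri": "https://example.com/eclipse", "title": "example.com"}}
            ],
        },
    }]
}
info = summarize_grounding(sample)
for title, uri in info["sources"]:
    print(f"{title}: {uri}")
```

Missing fields fall back to empty values, so an ungrounded response simply yields no sources and no entry point.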

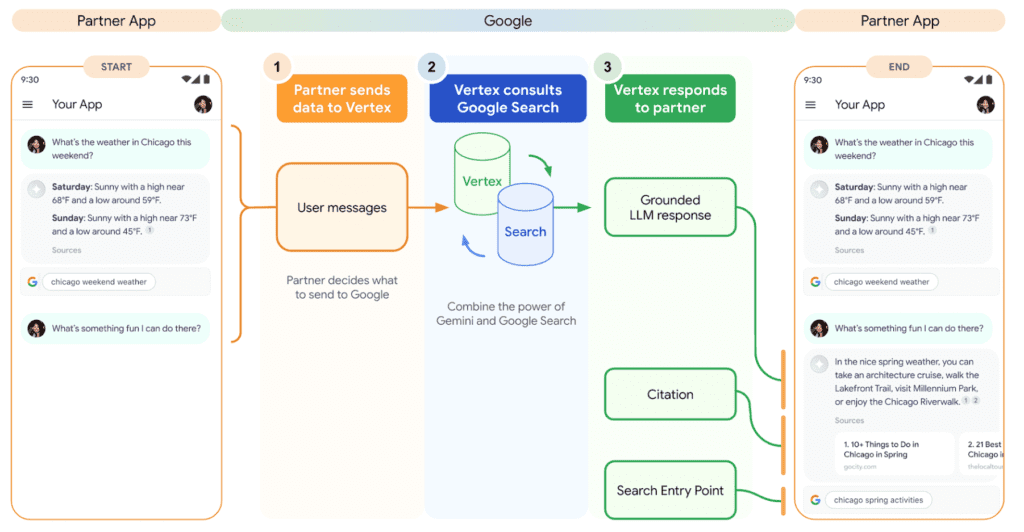

The following figure illustrates how to use the Gemini API’s Grounding feature with Google Search:

Ground to your own data

Vertex AI Search can be used as a data store so that language models can be grounded in your own text data. With Vertex AI Search, you can integrate your own data, regardless of format, to refine the model’s output. Supported data types include:

- Website data: Use content from your website directly.

- Structured data: Use organized, queryable data, for example, BigQuery data.

- Structured content (media): Integrate structured media content for richer results.

- Third-party data sources: Integrate data from external sources into structured content.

- Unstructured data: Use raw, unformatted data.

When you ground the model in your own data, it can draw on information beyond its training set. By linking to designated data stores in Vertex AI Search, the grounded model can deliver more accurate and relevant responses that are directly tied to your use case.
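In the REST API, grounding in your own data is expressed as a retrieval tool that points at a Vertex AI Search data store. The sketch below only builds the request body; it assumes the `retrieval.vertexAiSearch.datastore` field and the resource-name format `projects/PROJECT/locations/LOCATION/collections/default_collection/dataStores/DATA_STORE_ID`, so check both against the current API reference. The function name, project ID, and data store ID are hypothetical.

```python
import json


def vertex_ai_search_request(prompt: str, project: str, data_store_id: str,
                             location: str = "global") -> dict:
    """Build a generateContent request body that grounds the model in a
    Vertex AI Search data store (REST field names are assumptions)."""
    datastore = (
        f"projects/{project}/locations/{location}"
        f"/collections/default_collection/dataStores/{data_store_id}"
    )
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        # Retrieval tool: answers are grounded in documents from this data store
        "tools": [{"retrieval": {"vertexAiSearch": {"datastore": datastore}}}],
        "generationConfig": {"temperature": 0.0},
    }


body = vertex_ai_search_request(
    "What's my company's PTO policy?",
    project="my-project",         # hypothetical project ID
    data_store_id="hr-policies",  # hypothetical data store ID
)
print(json.dumps(body, indent=2))
```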

The following example contrasts an ungrounded response with a response grounded in private data:

| Ungrounded Gemini | Private grounded Gemini |
|---|---|
| Prompt: What’s my company’s PTO policy? Response: I can’t definitively answer questions about your specific company’s PTO policy. PTO policies are set by individual companies and can vary depending on your company’s size, location and industry. | Prompt: What’s my company’s PTO policy? Response: Your company has an unlimited PTO policy. Approval is still required: Your manager must approve or deny requests based on workload, coverage, and company needs. |

Vertex AI Search in Python

How to code Grounding with Google Search

Despite what you might expect, coding something as powerful as Grounding with Google Search is very simple.

Here is an example from our Python documentation, gemini_grounding_example.py. To use the Google Search retrieval tool, define it and pass it to the generate_content call:

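```python
import vertexai
from vertexai.generative_models import GenerationConfig, GenerativeModel, Tool, grounding

# Replace with your own project ID; the model name is only an example
vertexai.init(project="your-project-id", location="us-central1")
model = GenerativeModel("gemini-1.5-flash-001")

# Enable Grounding with Google Search as a tool
tool = Tool.from_google_search_retrieval(grounding.GoogleSearchRetrieval())

prompt = "When is the next total solar eclipse in US?"
response = model.generate_content(
    prompt,
    tools=[tool],
    generation_config=GenerationConfig(temperature=0.0),
)
print(response.text)
```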

Grounding isn’t limited to Python. In the C# sample GroundingWebSample.cs, the Google Search retrieval tool is defined in the GenerateContentRequest:

```csharp
using Google.Cloud.AIPlatform.V1;

// projectId, location, publisher (e.g. "google") and model are defined elsewhere in the sample
var generateContentRequest = new GenerateContentRequest
{
    Model = $"projects/{projectId}/locations/{location}/publishers/{publisher}/models/{model}",
    GenerationConfig = new GenerationConfig
    {
        Temperature = 0.0f
    },
    Contents =
    {
        new Content
        {
            Role = "USER",
            Parts = { new Part { Text = "When is the next total solar eclipse in US?" } }
        }
    },
    Tools =
    {
        new Tool
        {
            // Enable Grounding with Google Search
            GoogleSearchRetrieval = new GoogleSearchRetrieval()
        }
    }
};
```
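Both the Python and C# samples wrap the same generateContent REST call. As a sketch, assuming the v1 endpoint format and the `googleSearchRetrieval` tool field used by Gemini 1.x models (newer models may expect a `googleSearch` tool instead), the equivalent request URL and JSON body can be built as follows. The function name, project ID, and model name are placeholders.

```python
import json


def google_search_grounding_request(project: str, location: str, model: str, prompt: str):
    """Return (url, body) for a generateContent call with Grounding with
    Google Search enabled, mirroring the C# GenerateContentRequest."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/projects/{project}"
        f"/locations/{location}/publishers/google/models/{model}:generateContent"
    )
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "tools": [{"googleSearchRetrieval": {}}],  # the grounding tool
        "generationConfig": {"temperature": 0.0},
    }
    return url, body


url, body = google_search_grounding_request(
    "my-project",            # placeholder project ID
    "us-central1",
    "gemini-1.5-flash-001",  # placeholder model name
    "When is the next total solar eclipse in US?",
)
print(url)
print(json.dumps(body, indent=2))
```

To send it, you would POST the body to the URL with an OAuth 2.0 bearer token, for example one obtained with `gcloud auth print-access-token`.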

Vertex AI Search pricing

Vertex AI Search uses per-request pricing. In summary:

Search API requests: $2.50 per 1,000 requests.

The per-request charge is the main cost of Vertex AI Search; however, additional fees may apply depending on the underlying infrastructure used to query the API.
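At the listed rate, estimating the request charge is simple arithmetic: cost = requests / 1,000 × $2.50. The sketch below assumes straight proration with no free tier or minimums, ignores the infrastructure fees mentioned above, and uses the $2.50 figure from this article, which may have changed. The `estimate_search_cost` name and the example workload are hypothetical.

```python
def estimate_search_cost(num_requests: int, usd_per_1000: float = 2.50) -> float:
    """Estimate Vertex AI Search request charges in USD.

    Assumes straight proration (no free tier, no rounding up to whole
    blocks of 1,000) and ignores any infrastructure fees.
    """
    if num_requests < 0:
        raise ValueError("num_requests must be non-negative")
    return num_requests / 1000 * usd_per_1000


# Hypothetical workload: 10,000 searches a day for a 30-day month
monthly = estimate_search_cost(10_000 * 30)
print(f"${monthly:,.2f}")  # prints $750.00
```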

Pricing for other Vertex AI services varies. For the latest Vertex AI pricing details, consult the official Google Cloud documentation.