The AMD Instinct MI300 Features

The continuous growth of data and the demand for real-time results are driving the industry to push the boundaries of innovation and address the varied, intricate requirements of high performance computing (HPC) and artificial intelligence (AI) applications. HPC and AI systems share a need for high levels of computation, storage, large memory capacity, and high memory bandwidth, but their applications use those resources somewhat differently.

AI applications need significant computing speed at lower precisions, with large memory, to scale generative AI, train models, and make predictions. HPC applications need processing power at higher precisions and resources that can handle large amounts of data and execute the complex simulations required for scientific discovery, weather forecasting, and other data-intensive workloads.

The newest line of AMD accelerators, the AMD Instinct MI300 Series, uses the third-generation Compute DNA (AMD CDNA 3) architecture and comes in two variants tailored to these particular AI and HPC requirements. With the upgraded AMD ROCm 6 open, production-ready software platform, partners can build and quickly deploy scalable solutions across all modern workloads and tackle some of the world’s most significant problems with remarkable efficiency.

The AMD CDNA 3 Architecture

Embracing improved packaging to enable heterogeneous integration and deliver excellent performance, the AMD CDNA 3 architecture (Figure 1 below) represents a fundamental shift for AMD’s accelerated computing strategy. Customers can now have the density and power efficiency needed to take on the most difficult computing challenges of our time by adopting a new computing paradigm that changes the way CPUs and GPUs are coupled together. With up to eight vertically stacked compute dies and four I/O dies (IOD) integrated into a heterogeneous package that is connected by the 4th Gen Infinity Architecture, the completely redesigned architecture fundamentally repartitions the compute, memory, and communication elements of the processor.

The AMD Instinct MI300 Series also integrates eight stacks of high-bandwidth memory for improved speed, efficiency, and programmability. Together with CPU and GPU chiplet technologies, this makes it simple to build AMD Instinct accelerator variants that satisfy different workload needs. The AMD Instinct MI300A APU is the world’s first high-performance, hyper-scale class APU. It consists of three “Zen 4” x86 CPU compute dies tightly coupled with six GPU accelerator compute dies (XCD) of the 3rd generation AMD CDNA architecture. All of these dies share a single pool of virtual and physical memory, along with an AMD Infinity Cache memory with exceptionally low latency.

AMD Instinct MI300A APU: Designed with HPC in mind

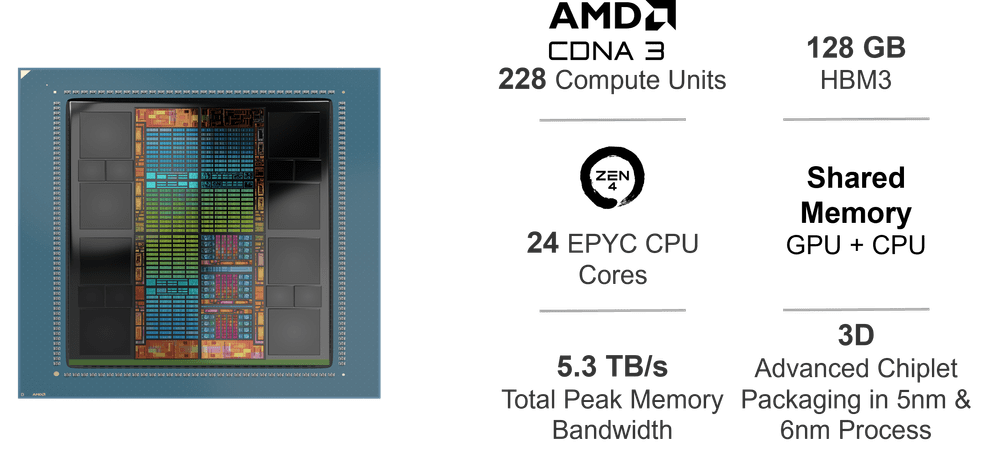

The AMD Instinct MI300A APU accelerator is built to handle large datasets, which makes it well suited to sophisticated analytics and computationally demanding modeling. The MI300A (Figure 2 below) uses a novel 3D chiplet design to integrate 3D-stacked “Zen 4” x86 CPUs and AMD CDNA 3 GPU XCDs onto a single package with high-bandwidth memory (HBM). The AMD Instinct MI300A APU has 24 CPU cores and 14,592 GPU stream processors. Many of the world’s largest, most scalable data centers and supercomputers are expected to use MI300A accelerators, built on this advanced architecture, for accelerated HPC and AI applications.

This will enable the scientific and engineering communities to drive further advances in a variety of fields, including healthcare, energy, transportation, and climate science. That includes powering the upcoming El Capitan supercomputer, which is expected to be among the fastest in the world when it comes online, with an expected two exaflops of performance.

AMD Instinct MI300X Accelerator: Designed for Cutting-Edge AI

The AMD Instinct MI300X (Figure 3 below) accelerator is intended for large language models and other cutting-edge AI applications that need large-scale inference and training on vast data sets. To achieve this, the MI300X replaces the three “Zen 4” CPU chiplets found on the MI300A with two additional AMD CDNA 3 XCD chiplets and adds a further 64GB of HBM3 memory. The result is a GPU optimized for larger AI models, with up to 192GB of memory.
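The die counts and totals quoted in this article support a quick back-of-the-envelope check. The sketch below is illustrative arithmetic only, not an AMD specification. It assumes that stream processors and CPU cores are divided evenly across dies and that the MI300X uses XCDs configured like those on the MI300A, so the MI300X stream-processor figure it derives is an estimate rather than a number stated here. All names are made up for the example.

```python
# Figures quoted in the article for the MI300A.
MI300A_XCDS = 6                    # GPU accelerator compute dies
MI300A_CCDS = 3                    # "Zen 4" CPU compute dies
MI300A_STREAM_PROCESSORS = 14_592
MI300A_CPU_CORES = 24

# Changes the article describes for the MI300X.
EXTRA_XCDS = 2                     # XCDs that replace the three CPU dies
MI300X_HBM_GB = 192
EXTRA_HBM_GB = 64


def per_die(total: int, dies: int) -> int:
    """Split a package-level total evenly across dies.

    Assumption: every die of a given type is configured identically.
    """
    if total % dies != 0:
        raise ValueError("total does not divide evenly across dies")
    return total // dies


sp_per_xcd = per_die(MI300A_STREAM_PROCESSORS, MI300A_XCDS)
cores_per_ccd = per_die(MI300A_CPU_CORES, MI300A_CCDS)

# Extrapolation (an estimate, not a figure from the article).
mi300x_xcds = MI300A_XCDS + EXTRA_XCDS
mi300x_sp_estimate = sp_per_xcd * mi300x_xcds

# HBM capacity implied for the MI300A by the "additional 64GB" wording.
mi300a_hbm_gb = MI300X_HBM_GB - EXTRA_HBM_GB

print(f"Stream processors per XCD: {sp_per_xcd}")
print(f"CPU cores per CPU die:     {cores_per_ccd}")
print(f"MI300X XCD count:          {mi300x_xcds}")
print(f"MI300X stream processors:  {mi300x_sp_estimate} (estimate)")
print(f"MI300A HBM implied:        {mi300a_hbm_gb} GB")
```

Under those assumptions, the MI300A works out to 2,432 stream processors per XCD and 8 cores per CPU die, and the 192GB and 64GB figures imply 128GB of HBM on the MI300A.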

By running larger language models directly in memory, cloud providers and enterprise users can run more inference jobs per GPU. This can also reduce the total number of GPUs needed, speed up inference, and lower the total cost of ownership (TCO).
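To make the link between memory capacity and GPU count concrete, here is a rough sizing sketch. It is a simplification under stated assumptions: it counts only model weights at a fixed number of bytes per parameter, adds a flat 20% allowance for runtime overhead such as activations and caches (an arbitrary placeholder, not a measured value), and uses decimal gigabytes. The 80GB comparison device is hypothetical, and the function names are invented for illustration.

```python
import math

HBM_GB_PER_GPU = 192  # MI300X capacity quoted in the article

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Approximate size of the model weights in decimal GB.

    One billion parameters at one byte each is one GB.
    """
    return params_billion * BYTES_PER_PARAM[dtype]


def min_gpus(params_billion: float, dtype: str,
             hbm_gb: float = HBM_GB_PER_GPU,
             overhead: float = 0.2) -> int:
    """Smallest number of GPUs whose combined memory holds the model.

    `overhead` is an assumed fractional allowance on top of the weights.
    """
    needed_gb = weights_gb(params_billion, dtype) * (1 + overhead)
    return math.ceil(needed_gb / hbm_gb)


# A 70-billion-parameter model stored in 16-bit precision.
print(weights_gb(70, "fp16"))            # weight footprint in GB
print(min_gpus(70, "fp16"))              # GPUs needed at 192 GB each
print(min_gpus(70, "fp16", hbm_gb=80))   # hypothetical 80 GB device
```

With these placeholder numbers, a 70-billion-parameter model in 16-bit precision needs about 140GB for its weights, which fits on a single 192GB device but would have to be split across three hypothetical 80GB devices. Real deployments depend on batch size, context length, and the serving stack, so treat the output as a first estimate only.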

Available, Tested, and Prepared

The AMD ROCm 6 open-source software platform is designed to maximize the performance of AMD Instinct MI300 accelerators for HPC and AI workloads while remaining compatible with industry software frameworks. ROCm is a set of drivers, development tools, and APIs that enable GPU programming from the low-level kernel up to end-user applications, and it can be tailored to your individual requirements. You can develop, collaborate on, test, and deploy your applications within a free, open-source, integrated software ecosystem with strong security measures.

Once built, ROCm software is portable: it can move between accelerators from multiple vendors or between different inter-GPU connectivity architectures without being tied to a specific device. ROCm is especially well suited to GPU-accelerated high performance computing (HPC), artificial intelligence (AI), scientific computing, and computer-aided design (CAD). For HPC, AI, and machine learning applications, the Infinity Hub offers a selection of advanced GPU software containers and deployment guides to help you speed up system deployments and time to insights.
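As one illustration of what framework-level portability can look like, the sketch below checks which GPU backend a PyTorch installation was built for. PyTorch is not named in this article and is used here only as an assumed example of an industry framework. ROCm builds of PyTorch expose the GPU through the same `torch.cuda` interface and set `torch.version.hip`, so device-agnostic code can run unchanged. The function names are hypothetical, and the import is guarded so the snippet still runs where PyTorch is not installed.

```python
def describe_backend() -> str:
    """Report which accelerator backend PyTorch would use, if any."""
    try:
        import torch  # assumed example framework, not named in the article
    except ImportError:
        return "pytorch-not-installed"

    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds set torch.version.hip; on other builds it is None.
    if getattr(torch.version, "hip", None):
        return "rocm"
    return "cuda"


def pick_device() -> str:
    """Return a device string usable on either GPU backend.

    ROCm builds of PyTorch also address the GPU as "cuda".
    """
    if describe_backend() in ("rocm", "cuda"):
        return "cuda"
    return "cpu"


print(describe_backend(), pick_device())
```

Code that selects its device this way does not need a vendor-specific branch, which is the kind of portability the paragraph above describes.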

In conclusion

The growth of data and the demand for immediate results will keep pushing the limits of AI and HPC applications. To democratize AI and promote industry-wide innovation, differentiation, and cooperation with its partners and customers, AMD will continue to pursue an open-source strategy that spans from single-server solutions to the biggest supercomputers in the world.

The AMD Instinct MI300 Series accelerators, the ROCm 6 software platform, and the next-generation AMD CDNA 3 architecture are designed to optimize your applications and help you achieve your objectives, whether you’re preparing to run the next scientific simulation on a supercomputer or working out the most cost-effective shipping route. Expect announcements from AMD system solution partners in the near future.
