AMD recently made several announcements at its Data Center and AI Technology Premiere event in San Francisco, California. The company unveiled the Instinct MI300A processor, which combines 3D-stacked CPU and GPU cores on the same package with HBM (High Bandwidth Memory). It also introduced the MI300X, a GPU-only variant that can be deployed as an eight-accelerator platform with a combined 1.5TB of HBM3 memory. Additionally, AMD presented its 5nm EPYC Bergamo processors, designed for cloud-native applications, and its EPYC Genoa-X processors, which feature up to 1.1GB of L3 cache. The Bergamo and Genoa-X processors are available now, while the EPYC Sienna processors for telco and edge applications are expected to arrive in the second half of 2023.

Paired with its Alveo and Pensando networking products and DPUs, the new hardware gives AMD a comprehensive lineup tailored for AI workloads. That puts the company in direct competition with market leader Nvidia and with Intel, which also offers AI-acceleration solutions across several product ranges.

AMD Instinct MI300:

The focus of this article is on the MI300 series, but more information about other AMD products will be added shortly. The MI300A is a data center APU that integrates 24 Zen 4 CPU cores, a CDNA 3 graphics engine, and eight stacks of HBM3 memory. With 146 billion transistors, it is the largest chip AMD has put into production. The MI300A will power the El Capitan supercomputer, which is set to be the world’s fastest when it becomes operational later this year.

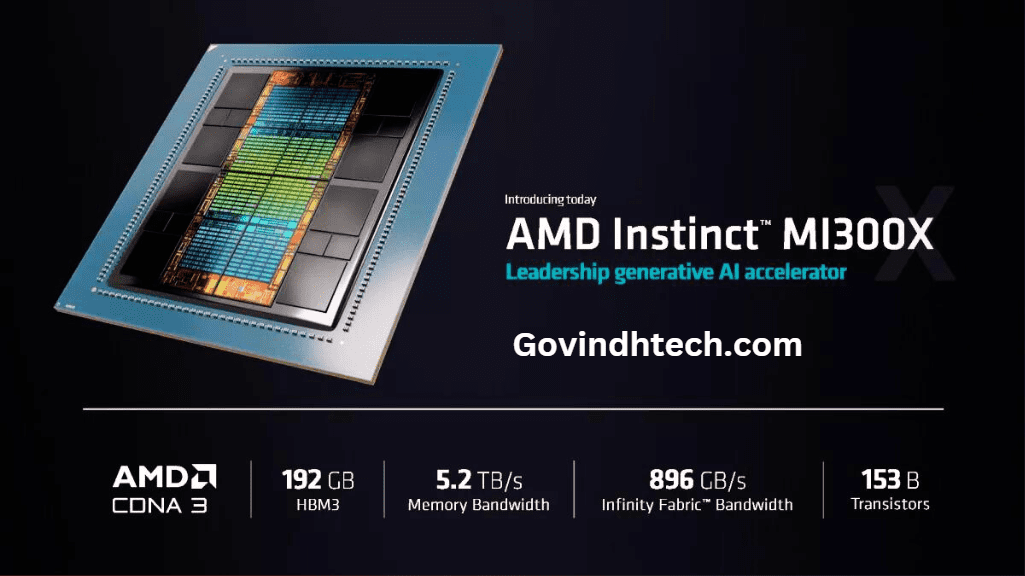

AMD also introduced the MI300X, a GPU-only variant optimized for large language models (LLMs). With 192GB of HBM3 memory spread across eight 24GB HBM3 stacks, the MI300X can run LLMs with up to 80 billion parameters, which AMD says is a record for a single GPU. The chip also surpasses Nvidia’s H100 in both memory bandwidth and HBM density.
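The capacity figures above can be sanity-checked with a little arithmetic. The sketch below is only a rough illustration: the 192GB, 24GB, and 80-billion-parameter figures come from the article, while the 2-bytes-per-parameter value (16-bit weights) is an assumption, and the function names are made up for this example. It counts model weights only and ignores activations and other runtime memory.

```python
# Figures quoted in the article
HBM3_TOTAL_GB = 192   # MI300X memory capacity
HBM3_STACK_GB = 24    # capacity of each HBM3 stack
MODEL_PARAMS = 80e9   # largest model size cited for a single MI300X

# Assumption (not stated in the article): 16-bit weights, 2 bytes per parameter
BYTES_PER_PARAM = 2


def stack_count(total_gb: int, stack_gb: int) -> int:
    """Number of HBM3 stacks needed to reach the total capacity."""
    return total_gb // stack_gb


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Memory needed for the model weights alone, in decimal GB."""
    return params * bytes_per_param / 1e9


stacks = stack_count(HBM3_TOTAL_GB, HBM3_STACK_GB)
needed = weights_gb(MODEL_PARAMS, BYTES_PER_PARAM)
print(f"{stacks} stacks, {needed:.0f} GB of weights, fits: {needed <= HBM3_TOTAL_GB}")
```

Under that assumption, an 80-billion-parameter model needs about 160GB for its weights, which leaves some headroom within 192GB.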

The MI300A operates in a single memory domain and a single NUMA (Non-Uniform Memory Access) domain, giving all CPU and GPU cores uniform access to memory. The MI300X, in turn, uses coherent memory between its GPU clusters. AMD says this reduces data movement between the CPU and GPU, improving performance, latency, and power efficiency.

AMD also announced the AMD Instinct Platform, which combines eight MI300X GPUs on a single server motherboard for a total of 1.5TB of HBM3 memory. The platform follows Open Compute Project (OCP) standards, which AMD says enables faster deployment than Nvidia’s proprietary MGX platforms.
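The 1.5TB total follows directly from the per-GPU capacity. As a minimal sketch, assuming the common convention of 1TB = 1024GB (the article does not say which convention AMD uses) and using a hypothetical helper name:

```python
# Figures quoted in the article
GPUS_PER_PLATFORM = 8   # MI300X GPUs on one Instinct Platform board
HBM3_PER_GPU_GB = 192   # HBM3 capacity of a single MI300X

# Assumption: 1 TB = 1024 GB
GB_PER_TB = 1024


def platform_memory_tb(gpus: int, gb_per_gpu: int) -> float:
    """Aggregate HBM3 capacity of the platform, in TB."""
    return gpus * gb_per_gpu / GB_PER_TB


total_tb = platform_memory_tb(GPUS_PER_PLATFORM, HBM3_PER_GPU_GB)
print(f"{GPUS_PER_PLATFORM} x {HBM3_PER_GPU_GB} GB = {total_tb} TB")
```

Eight GPUs at 192GB each give 1,536GB, which matches the 1.5TB figure quoted for the platform.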

The MI300A (CPU+GPU) model is currently in the sampling phase, while the MI300X and the 8-GPU Instinct Platform will start sampling in the third quarter and launch in the fourth quarter. More details about these products are expected to be revealed in the coming hours.

[…] improvements in terms of GPU cores, addressable memory, memory bandwidth, and transistor count. The MI300X has a TDP of 750 W, which is the highest-rated TDP ever in its form factor, but it’s important […]