PostgreSQL 16 Features

Cloud SQL clients may now use PostgreSQL 16, which adds new features for deeper insights into database operations and increased usability, in an effort to improve usability and help with informed decision-making.

Few of the PostgreSQL 16 version’s highlights in this blog article, including:

- Enhancements in the observability

- Enhancements in performance

- Efficiency of hoover

- Enhancements in Replication

Each of these topics deserves a closer examination.

Enhancements in observability

Database observability is crucial because it gives operators insights into how resources are being used, enabling them to optimise resource consumption. These are some significant improvements to observability that were included to PostgreSQL 16.

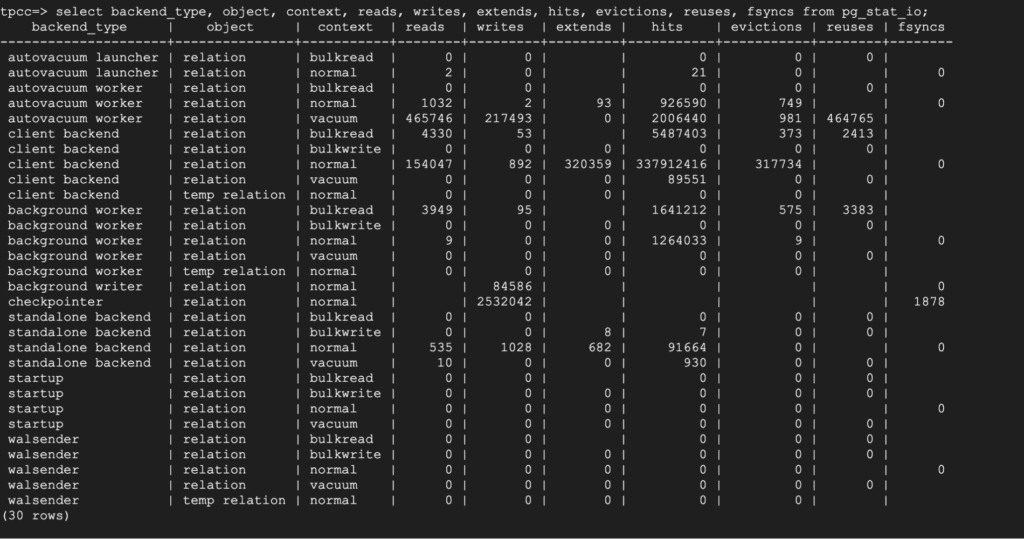

PG_STAT_IO

With PostgreSQL16, a new view called pg_stat_io is added, which offers insights into a PostgreSQL database’s input/output (IO) behaviour. With the help of this view, Google Cloud can decide how best to employ resources, maximise database performance, and guarantee the stability and scalability of the database system as a whole. The statistics for the entire instance are shown in this view.

What does this perspective suggest?

The statistics in the view are cumulative, just like in the majority of other pg_stat_* views. Note the values at the start and finish of the task in order to monitor changes in the pg_stat_io view over a given period of time.

This view mostly uses the columns in backend_type, io_context, and io_object to track the statistics.

One of the following can be a backend: client, background worker, checkpointer, standalone backend, autovacuum launcher, or autovacuum worker. The backend_type is a connection process. Depending on the load, the io_context is categorised as vacuum, bulk read, bulk write, or typical.

Reads, writes, extends, hits, evictions, and reuses are the actual statistics that need to be taken into account in order to determine the instance’s I/O state.

By analysing the evictions-to-hits ratio, They can keep an eye on the effectiveness of the shared buffers. When the hits for each context are significantly higher than the evictions, the buffer hit ratio is deemed effective.

Sequential scans are indicated by bulk writes and bulk reads. The effectiveness of ring buffers in this instance is demonstrated by the evictions, hits, and reuses for these.

The quantity of data that is read or written during the autovacuum or vacuum operation is another thing to watch. Io_context = “vacuum” and backend_type = “autovacuum worker” watch the autovacuum-related metrics. Backend_type is “standalone backend,” and io_context is “vacuum” for a vacuum process.

This is a picture of the vista:

Final table and index sequence and index scans

There are two additional columns in the views pg_stat_*_tables.

final_seq_scan

final_idx_scan

Are you curious about the last time an index or sequential scan occurred on your tables? Examine the recently added last_seq_scan and last_idx_scan columns in the pg_stat_*_tables.

These columns show the timestamp of the most recent sequential or index scan on the table. This can be useful in determining any problems with the “read query.”

Similarly, pg_stat_*_indexes now has the field last_idx_scan. The timestamp of the most recent use of the index is displayed in this column. The value for the index in this column allows us to make an informed judgement if Google Cloud were to drop an index.

Data on how frequently tuples are moved to a new page in order to get updates

New to the views pg_stat_*_tables is n_tup_newpage_upd.

Now that can examine this in the column n_tup_newpage_upd, Google Cloud can keep track of how many rows wind up in new heap pages when they conduct updates on a table.

This can show the elements that have contributed to the table’s expansion over time. The “fillfactor” selected for the table can also be verified using the value in this column. Google Cloud can determine whether or not the “fillfactor” is ideal by looking at the statistics in this column, particularly for updates that are anticipated to be “HOT.”

PostgreSQL 16 performance

For databases, performance is always of utmost importance. In a major version release, performance improvements are incorporated at a considerably faster rate than other enhancements. Here are a few PostgreSQL 16 performance enhancements.

Tables are deemed “HOT” if a table column alone contains the BRIN index.

Updates to a table with a BRIN index in PostgreSQL16 are now regarded as HOT since the table’s fillfactor is ideal. The setting “Fillfactor” is crucial for this update to be designated as “HOT.” Such a table can now be vacuumed quickly and with less resources thanks to this change.

Parallelizing OUTER or FULL joins

For selects involving very big tables joined by full or outer joins, this performance boost is particularly helpful. Rather than producing a merge or hash following a full heap fetch in PostgreSQL16, this will produce a parallel hash following a parallel seq scan for each table. It has demonstrated a significant improvement over PG15 in tests.

Enhancements for Vacuum

A major component of PostgreSQL MVCC is hoover. Table bloat is reduced by hoover, which releases space after removing the dead tuples. This keeps transaction wrap-around issues from occurring in the database. Here are several ways that PostgreSQL16‘s vacuum processes have been improved.

Enhanced performance of VACUUM operation for large tables

BUFFER_USE_LIMIT

The ring buffers allotted for VACUUM and ANALYSE operations can now be changed using the new server option “vacuum_buffer_usage_limit” in PostgreSQL 16, which has a default value of 256K. When the “BUFFER_USAGE_LIMIT” option is set during a VACUUM operation, the designated ring buffer size is allocated and the default value of “vacuum_buffer_usage_limit” is overridden. Vacuum operations can be sped up by increasing the “buffer_usage_limit,” however doing so may cause performance damage as it may remove buffers used by the primary workload from “shared_buffers.”

When cleaning really big tables, it is frequently preferable to use “buffer_usage_limit” to restrict the amount of ring buffers used for VACUUM processes. When approaching Txid wraparound, when finishing the VACUUM is crucial, this option can be utilised sparingly. When the VACUUM procedure includes ANALYSE, the combined use of the ring buffer size indicated in “buffer_usage_limit” is made. When the buffer usage limit is set to 0, the buffer access strategy is disabled. This can lead to the eviction of a large number of shared buffers, which degrades performance. The range of values for “buffer_usage_limit” is 128K to 16 GB.

Processing of only TOAST tables using VACUUM

Google Cloud can now only hoover TOAST tables that are connected to a relation in PostgreSQL 16. When set to FALSE, the option “process_toast” was originally added to disable vacuuming the TOAST table. If not, a relation’s main and TOAST tables were both subjected to vacuum. In PostgreSQL 16, They can either hoover the TOAST and main tables together or simply the one that is part of a relation, depending on the requirements. Depending on your needs, this enables improved control while vacuuming the primary, the toast, or both.

On-call logical decoding

Subscribers can connect to the read replicas rather than the primary database instance in PostgreSQL 16 since logical decoding is enabled on the read replica. This lessens the burden on the primary instance by dividing the workload between it and the replica. By doing this, the replica takes up the logical replication effort that was previously on the primary instance.

This is a significant boost in performance for the primary node, particularly when considering the number of logical replication slots that nodes have. Another benefit is that subscribers are unaffected by the change and can carry on as usual in the event of a replica offer. Note that, in contrast to earlier, any delay on the read replica will impact the logical subscriber later on.