GIGABYTE has added two new 8-GPU baseboard servers to its G593 series. Giga Computing, a GIGABYTE subsidiary and an industry leader in generative AI servers and advanced cooling technologies, now supports the NVIDIA HGX H200, a GPU platform well suited to large AI datasets, scientific simulations, and other memory-intensive workloads.

NVIDIA HGX H200

G593 Series for AI & HPC Scale-up Computing

The G593 series delivers reliable performance for demanding workloads in a compact 5U chassis. Space dedicated to GPU cooling gives it high airflow and, in turn, high compute density.

The proven GIGABYTE G593 series server, designed specifically for an 8-GPU baseboard, is a natural match for the NVIDIA H200 Tensor Core GPU, which uses the same amount of power as the air-cooled NVIDIA HGX H100-based systems. Compared with the NVIDIA H100 Tensor Core GPU, the H200 delivers a significant increase in memory capacity and bandwidth, which helps relieve the memory bandwidth bottleneck in AI workloads, particularly AI inference. With up to 141GB of HBM3e memory and 4.8TB/s of memory bandwidth per GPU, the H200 offers roughly 1.7X the memory capacity and 1.4X the memory bandwidth of the H100.
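The capacity and bandwidth multipliers quoted above can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the H200 figures come from this article, while the H100 baseline (80GB and 3.35TB/s for the SXM part) is an assumption taken from NVIDIA's public specifications and does not appear in the text.

```python
# H200 figures are from the article; the H100 SXM baseline is an assumption
# (80 GB of HBM3 and 3.35 TB/s), not something stated in this article.
H200 = {"capacity_gb": 141, "bandwidth_tbs": 4.8}
H100 = {"capacity_gb": 80, "bandwidth_tbs": 3.35}  # assumed baseline

GPUS_PER_BASEBOARD = 8  # the G593 hosts an 8-GPU HGX baseboard


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_gain = ratio(H200["capacity_gb"], H100["capacity_gb"])
bandwidth_gain = ratio(H200["bandwidth_tbs"], H100["bandwidth_tbs"])
baseboard_capacity_gb = GPUS_PER_BASEBOARD * H200["capacity_gb"]

print(f"capacity gain:  {capacity_gain:.2f}x")
print(f"bandwidth gain: {bandwidth_gain:.2f}x")
print(f"HBM3e across 8 GPUs: {baseboard_capacity_gb} GB")
```

Under these assumptions the ratios come out at about 1.76 and 1.43, which the article truncates to 1.7X and 1.4X.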

The new GIGABYTE servers share the same general design and optimised specifications for accelerated computing workloads and differ only in their CPU platform. They will soon be listed alongside other qualified GIGABYTE G593 series servers, four of which have already passed functional and performance testing and appear as NVIDIA-Certified Systems in the NVIDIA Qualified System Catalogue.

The G593 series is also well equipped for networking and storage. It supports up to eight U.2 Gen5 NVMe SSDs and can move data quickly using NVIDIA GPUDirect Storage, one of the NVIDIA Magnum IO technologies, which provides a direct path between GPU memory and storage for higher bandwidth and lower latency. Twelve expansion slots are available for NVIDIA InfiniBand or Ethernet networking via a NIC or DPU, whether for connecting to other servers in the cluster or for moving data outside the data centre. A 4+2 redundant design with 3000W power supplies provides ample power and continuous operation.
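To make the 4+2 power figure concrete, here is a minimal sketch of the power budget. It assumes the usual N+M reading of "4+2" (four supplies carry the full load, two are spares); the `PsuConfig` class and its field names are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PsuConfig:
    """Hypothetical model of an N+M redundant power supply layout."""
    required: int    # supplies needed to carry the full load (the "4" in 4+2)
    redundant: int   # spare supplies (the "2" in 4+2)
    watts_each: int  # rated output of one supply

    @property
    def usable_watts(self) -> int:
        # Only the required supplies count toward the guaranteed budget.
        return self.required * self.watts_each

    @property
    def installed_watts(self) -> int:
        return (self.required + self.redundant) * self.watts_each

    def survives(self, failed: int) -> bool:
        # The system stays within budget while failures do not exceed the spares.
        return failed <= self.redundant


g593 = PsuConfig(required=4, redundant=2, watts_each=3000)
print(g593.usable_watts)     # guaranteed budget in watts
print(g593.installed_watts)  # total installed capacity in watts
print(g593.survives(2), g593.survives(3))
```

Read this way, the server has a guaranteed budget of 12,000W and can lose two supplies without dropping below it.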

Combining for a Rack-scale Solution with GIGAPOD

The importance of a well-designed compute cluster is hard to overstate, particularly given the rapid pace of AI development and the demand for new insights in industry and academia. GIGAPOD, a rack-scale solution that GIGABYTE introduced last year, has been very successful when deployed with NVIDIA HGX systems. So far, all of these deployments have used G593 series servers with NVIDIA HGX H100. With added support for the NVIDIA HGX H200 GPU, GIGAPOD is expected to deliver even greater performance and efficiency for accelerated computing workloads.

NVIDIA HGX AI Supercomputer

Designed with AI and high-performance computing in mind

AI, complex simulations, and massive datasets require multiple GPUs with extremely fast interconnections and a fully accelerated software stack. The NVIDIA HGX AI supercomputing platform brings together the full power of NVIDIA GPUs, NVIDIA NVLink, NVIDIA networking, and fully optimised AI and high-performance computing (HPC) software stacks to deliver the highest application performance and the fastest time to insights.

Superior Full-Stack Accelerated Computing Platform

The NVIDIA HGX B200 and HGX B100 integrate NVIDIA Blackwell Tensor Core GPUs with high-speed interconnects, bringing data centres into a new era of accelerated computing and generative AI. Blackwell-based HGX systems are a premier accelerated scale-up platform that delivers up to 15X higher inference performance than the previous generation, and they are designed for the most demanding generative AI, data analytics, and HPC workloads.

For the best AI performance, NVIDIA HGX offers sophisticated networking solutions at up to 400 gigabits per second (Gb/s) using NVIDIA Quantum-2 InfiniBand and Spectrum-X Ethernet. To support cloud networking, composable storage, zero-trust security, and GPU compute elasticity in hyperscale AI clouds, HGX also incorporates NVIDIA BlueField-3 data processing units (DPUs).
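Link speeds are quoted in gigabits per second, while GPU memory is quoted in gigabytes, so a quick conversion helps put the 400Gb/s figure in context. The sketch below is a back-of-the-envelope estimate only: it assumes decimal units, a single link running at full line rate, and no protocol overhead, and it borrows the 141GB per-GPU memory figure quoted earlier in this article as an example payload. The function names are hypothetical.

```python
def gbits_to_gbytes(gbits_per_s: float) -> float:
    """Convert a link rate from gigabits per second to gigabytes per second."""
    return gbits_per_s / 8


def transfer_seconds(payload_gb: float, link_gbits_per_s: float) -> float:
    """Ideal time to move `payload_gb` gigabytes over one link (no overhead)."""
    return payload_gb / gbits_to_gbytes(link_gbits_per_s)


LINK_GBITS = 400   # per-link rate from the article
PAYLOAD_GB = 141   # example payload: one H200's worth of HBM3e

print(f"{gbits_to_gbytes(LINK_GBITS):.0f} GB/s per link")
print(f"{transfer_seconds(PAYLOAD_GB, LINK_GBITS):.2f} s to move {PAYLOAD_GB} GB")
```

Real transfers will be slower than this ideal figure, since it ignores encoding, protocol overhead, and contention.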

Deep Learning Inference: Performance and Versatility

Real-Time Inference for the Next Generation of Large Language Models

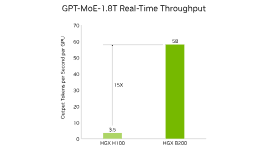

For massive models such as GPT-MoE-1.8T, the HGX B200 achieves up to 15X higher inference performance than the previous NVIDIA Hopper generation. The second-generation Transformer Engine combines custom Blackwell Tensor Core technology with improvements in TensorRT-LLM and the NeMo Framework to accelerate inference for large language models (LLMs) and Mixture-of-Experts (MoE) models.

Deep Learning Training: Performance and Scalability

Superior Training Results

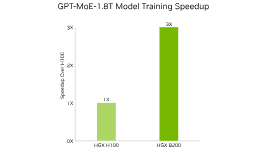

Using 8-bit floating point (FP8) and new precisions, the second-generation Transformer Engine enables up to 3X faster training for large language models such as GPT-MoE-1.8T. This is complemented by fifth-generation NVLink, which offers 1.8TB/s of GPU-to-GPU interconnect bandwidth, along with InfiniBand networking and NVIDIA Magnum IO software. Together, these ensure efficient scalability for enterprises and large-scale GPU computing clusters.

Enhancing HGX Through NVIDIA Networking

The data centre is the new unit of computing, and networking plays an essential role in scaling application performance across it. Paired with NVIDIA Quantum InfiniBand, HGX delivers high performance and efficiency, helping to ensure that computing resources are fully utilised.

For AI cloud data centres that deploy Ethernet, HGX is best paired with the NVIDIA Spectrum-X networking platform, which is built for AI performance over Ethernet. Spectrum-X combines Spectrum-X switches with BlueField-3 DPUs for optimal resource utilisation and performance isolation, so it can deliver consistent, predictable results for thousands of simultaneous AI jobs at any scale. It also enables advanced cloud multi-tenancy and zero-trust security. As a reference design, NVIDIA has built Israel-1, a hyperscale generative AI supercomputer that uses Dell PowerEdge XE9680 servers based on the NVIDIA HGX 8-GPU platform, BlueField-3 DPUs, and Spectrum-4 switches.

NVIDIA HGX H200 Price

Disclaimer: The price of NVIDIA HGX H200 systems can vary widely depending on several factors, including:

- Number of GPUs: The price increases with the number of GPUs included.

- Other parts: The cost can be affected by memory, storage, networking, and other hardware.

- Vendor: Prices and configurations vary across different sellers.

- Market conditions: Price can be affected by supply and demand.

Sample Costs

For a general idea, consider the following recent example:

Lenovo NVIDIA HGX H200: A system with eight H200 GPUs was priced at approximately $289,000.

NVIDIA HGX H200 Specs

NVIDIA HGX is available in single baseboards with four or eight H200 or H100 GPUs, or with eight Blackwell GPUs. These combinations of hardware and software lay the foundation for high-end AI supercomputing performance. The table below lists the specifications of the Blackwell-based HGX B200 and HGX B100.

| | HGX B200 | HGX B100 |
|---|---|---|
| Form Factor | 8x NVIDIA B200 SXM | 8x NVIDIA B100 SXM |
| FP4 Tensor Core | 144 PFLOPS | 112 PFLOPS |
| FP8/FP6 Tensor Core | 72 PFLOPS | 56 PFLOPS |
| INT8 Tensor Core | 72 POPS | 56 POPS |
| FP16/BF16 Tensor Core | 36 PFLOPS | 28 PFLOPS |
| TF32 Tensor Core | 18 PFLOPS | 14 PFLOPS |
| FP32 | 640 TFLOPS | 480 TFLOPS |
| FP64 | 320 TFLOPS | 240 TFLOPS |
| FP64 Tensor Core | 320 TFLOPS | 240 TFLOPS |
| Memory | Up to 1.5TB | Up to 1.5TB |
| NVLink | Fifth generation | Fifth generation |
| NVIDIA NVSwitch | Fourth generation | Fourth generation |
| NVSwitch GPU-to-GPU Bandwidth | 1.8TB/s | 1.8TB/s |
| Total Aggregate Bandwidth | 14.4TB/s | 14.4TB/s |
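Several rows of the table are related by simple arithmetic, which makes it easy to sanity-check. The sketch below uses only figures from the table. The variable names are hypothetical, and the per-GPU values are a plain division by eight rather than officially quoted per-GPU specifications.

```python
import math

GPUS_PER_BASEBOARD = 8

# Figures copied from the table (baseboard-level values).
NVLINK_PER_GPU_TBS = 1.8
AGGREGATE_TBS = 14.4
tensor_pflops = {
    "HGX B200": {"FP4": 144, "FP8/FP6": 72, "FP16/BF16": 36, "TF32": 18},
    "HGX B100": {"FP4": 112, "FP8/FP6": 56, "FP16/BF16": 28, "TF32": 14},
}

# Aggregate bandwidth is the per-GPU NVLink bandwidth times the GPU count.
computed_aggregate = GPUS_PER_BASEBOARD * NVLINK_PER_GPU_TBS
print(f"aggregate NVLink bandwidth: {computed_aggregate:.1f} TB/s")

# Each step up in precision halves the quoted Tensor Core throughput.
for board, rates in tensor_pflops.items():
    values = list(rates.values())
    halves = all(a == 2 * b for a, b in zip(values, values[1:]))
    per_gpu_fp4 = rates["FP4"] / GPUS_PER_BASEBOARD
    print(f"{board}: halving pattern holds = {halves}, "
          f"FP4 per GPU = {per_gpu_fp4:g} PFLOPS")
```

The check confirms that 8 x 1.8TB/s gives the 14.4TB/s aggregate in the last row, and that the Tensor Core rows follow a consistent factor-of-two pattern from FP4 down to TF32.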