Micron SSDs

Gen5 NVMe SSDs

Micron presented its industry-leading research on offloading AI training models to NVMe, carried out in collaboration with teams at Dell and NVIDIA. Micron’s Data Centre Workload Engineering team tested Big Accelerator Memory (BaM) with GPU-initiated direct storage (GIDS) on an NVIDIA H100 Tensor Core GPU, in a Dell PowerEdge R7625 server equipped with Micron’s upcoming high-performance Gen5 E3.S NVMe SSD, with assistance from Dell’s Technical Marketing Lab and NVIDIA’s storage software development team.

More Memory using NVMe?

The standard approach to training large models, which are growing quickly, is to use as much HBM on the GPU as possible, then as much system DRAM as possible. If a model does not fit in HBM + DRAM, training is parallelized over multiple NVIDIA GPU systems.

Parallelizing training over multiple servers is costly, particularly in terms of GPU utilisation and efficiency, because data must travel over network and system links that can easily become bottlenecks.

What if NVMe could serve as a third tier of “slow” memory, so that an AI training job would not have to be split across multiple GPU systems? That is exactly what BaM with GIDS does. It replaces and streamlines the NVMe driver, handing the data and control paths to the GPU. So how does it perform?
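To make the tiering idea concrete, here is a minimal sketch (not BaM code) that greedily fills memory tiers from fastest to slowest. The HBM and DRAM capacities mirror the test systems described in this article; the 8 TB NVMe capacity, the 2.5 TB working set, and the `place` helper are assumptions chosen purely for illustration.

```python
from typing import Dict, List, Tuple

# Illustrative capacities in GB. 80 GB of HBM and 1 TB of DRAM match the
# systems described in this article; the 8 TB NVMe tier is an assumption.
TIERS: List[Tuple[str, float]] = [
    ("HBM", 80.0),
    ("DRAM", 1024.0),
    ("NVMe", 8192.0),
]


def place(size_gb: float, tiers: List[Tuple[str, float]]) -> Tuple[Dict[str, float], float]:
    """Greedily fill tiers from fastest to slowest.

    Returns the amount placed in each tier and whatever did not fit.
    """
    placement: Dict[str, float] = {}
    remaining = size_gb
    for name, capacity in tiers:
        used = min(remaining, capacity)
        if used > 0:
            placement[name] = used
        remaining -= used
    return placement, remaining


# A hypothetical 2.5 TB working set.
working_set_gb = 2560.0

two_tier, overflow = place(working_set_gb, TIERS[:2])
three_tier, leftover = place(working_set_gb, TIERS)

print("HBM + DRAM only:  ", two_tier, "overflow:", overflow)
print("HBM + DRAM + NVMe:", three_tier, "leftover:", leftover)
```

With only two tiers, the overflow is what would otherwise have to be spread across additional GPU systems; with NVMe as a third tier, the same working set fits on one system, at the cost of slower access to the portion that lives on the SSD.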

Baseline Performance Results

All of the test results shown here were run with the BaM Graph Neural Network (GNN) benchmark, which is included in the open-source BaM implementation.

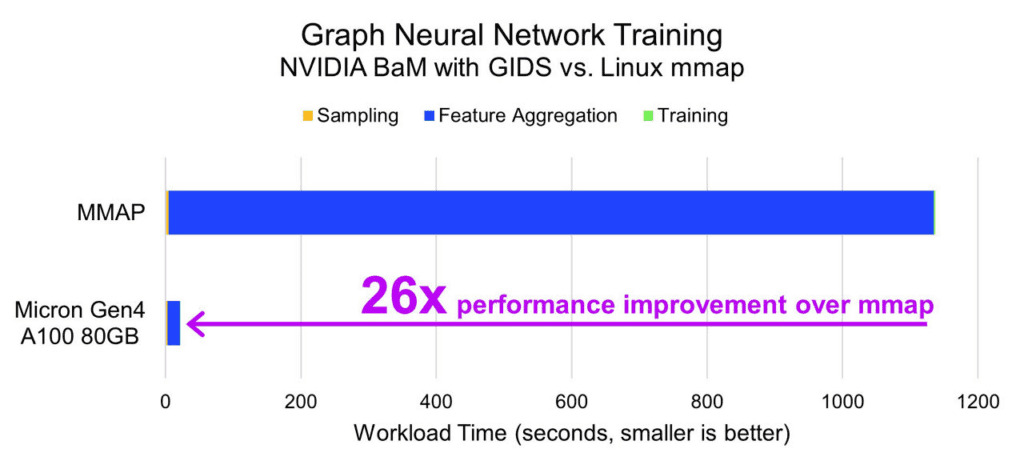

This first test shows the results with and without BaM with GIDS. A standard Linux mmap implementation, which faults memory accesses through the CPU to storage, serves as the test case without specialised storage software.
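The mmap baseline can be pictured with a small sketch. This is not the benchmark code: the file layout, sizes, and the `gather` helper are hypothetical, and only Python's standard library is used. It shows the access pattern in which every feature lookup is an ordinary memory read that the kernel may have to satisfy with a page fault and a storage read issued on the CPU's behalf.

```python
import mmap
import os
import random
import struct
import tempfile

FEATURE_DIM = 4              # floats per node (tiny, for illustration)
NUM_NODES = 1000
ROW_BYTES = FEATURE_DIM * 4  # one float32 row

# Write a small stand-in feature file: node i has features [i, i, i, i].
fd, path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    for node in range(NUM_NODES):
        f.write(struct.pack(f"{FEATURE_DIM}f", *([float(node)] * FEATURE_DIM)))


def gather(mapped, node_ids):
    """Read one feature row per node ID from the memory-mapped file."""
    rows = []
    for node in node_ids:
        offset = node * ROW_BYTES
        # Slicing the mapping touches the page; if it is not resident, the
        # kernel page-faults and reads it from storage via the CPU.
        rows.append(struct.unpack(f"{FEATURE_DIM}f", mapped[offset:offset + ROW_BYTES]))
    return rows


with open(path, "rb") as f:
    mapped = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)
    random.seed(0)
    batch = random.sample(range(NUM_NODES), 8)  # small, random reads
    features = gather(mapped, batch)
    mapped.close()

os.remove(path)
print(batch)
print(features[0])
```

In a real GNN run the file would be far larger than DRAM, so most of these small random reads would miss the page cache, which is the path that BaM with GIDS bypasses.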

On an NVIDIA A100 80GB Tensor Core GPU with a Micron 9400 Gen4 NVMe SSD, the mmap test took 19 minutes. With BaM and GIDS deployed, it took 42 seconds, a 26x performance improvement. The improvement comes from the benchmark’s feature aggregation component, which depends on storage performance.

Gen5 Performance in Dell Labs

Micron wanted to demonstrate at GTC how well its upcoming Gen5 NVMe SSD performs for AI model offload. To get access to a Dell PowerEdge R7625 server with an NVIDIA H100 80GB PCIe GPU (Gen5 x16), Micron partnered with Dell’s Technical Marketing Labs, whose help made the testing possible.

Feature aggregation depends on SSD performance. It accounts for 80% of the total runtime, and it improves by 2x from a Gen4 to a Gen5 NVMe SSD. Sampling and training depend on the GPU; moving from an NVIDIA A100 to an H100 Tensor Core GPU improves training performance by 5x. This use case needs high-performance Gen5 NVMe SSDs, and a pre-production sample of Micron’s Gen5 NVMe SSD delivers roughly twice the performance of Gen4.

| GNN workload performance | Micron Gen5 + H100 | Micron Gen4 + A100 | Gen5 vs. Gen4 improvement |
|---|---|---|---|
| Feature Aggregation (NVMe) | 18s | 35s | 2x |
| Training (GPU) | 0.73s | 3.6s | 5x |
| Sampling | 3s | 4.6s | 1.5x |
| End-to-End time (Total of Feature Aggregation + Training + Sampling) | 22.4s | 43.2s | 2x |
| GIDS + BaM Accesses/s | 2.87M | 1.5M | 2x |
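As a quick check on how the rounded improvement factors relate to the raw numbers, the short sketch below recomputes them from the table. The values are copied from the table; the variable names are only illustrative.

```python
# Timings in seconds, copied from the table: (Gen5 + H100, Gen4 + A100).
rows = {
    "Training (GPU)": (0.73, 3.6),
    "Sampling": (3.0, 4.6),
    "End-to-end": (22.4, 43.2),
}
# Access rates in accesses per second: (Gen5 + H100, Gen4 + A100).
accesses_per_s = (2.87e6, 1.5e6)

# For a timing, the speedup is old time / new time.
speedups = {name: gen4 / gen5 for name, (gen5, gen4) in rows.items()}
# For a rate, the improvement is new rate / old rate.
access_gain = accesses_per_s[0] / accesses_per_s[1]

# Share of the Gen5 end-to-end time spent in feature aggregation (18 s).
aggregation_share = 18.0 / 22.4

for name, speedup in speedups.items():
    print(f"{name}: {speedup:.2f}x")
print(f"Accesses/s: {access_gain:.2f}x")
print(f"Feature aggregation share (Gen5): {aggregation_share:.0%}")
```

The computed ratios come out at roughly 4.9x, 1.5x, 1.9x and 1.9x, which the table rounds to 5x, 1.5x, 2x and 2x, and feature aggregation works out to about 80% of the Gen5 end-to-end time.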

What Is BaM With GIDS Doing to the SSD?

Because BaM with GIDS replaces the NVMe driver, the standard Linux tools for viewing IO metrics (IOPS, latency, etc.) do not work. Micron traced the BaM with GIDS GNN training workload and found some surprising results.

- BaM with GIDS runs at close to the drive’s maximum I/O rate.

- For GNN training, the IO profile is 99% small-block reads.

- The SSD queue depth is 10-100 times greater than what Micron expects from a “typical” data centre workload driven by a CPU.

This is a novel workload that pushes Gen5 NVMe SSD performance to its limit. A GPU can manage multiple streams in parallel, and the BaM with GIDS software manages and optimises latency, producing a workload profile that might not even be possible to run from a CPU.
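One way to see why such deep queues go hand in hand with this access rate is Little's law: the average number of requests in flight equals the request rate multiplied by the time each request spends in the system. The sketch below applies it to the 2.87M accesses/s measured on the Gen5 system, treating each access as one I/O request. The latency values are hypothetical, not measurements from this test.

```python
def outstanding_requests(rate_per_s: float, latency_s: float) -> float:
    """Little's law: average requests in flight = arrival rate x time in system."""
    return rate_per_s * latency_s


# Access rate reported for the Gen5 + H100 system in this article.
ACCESS_RATE = 2.87e6

# Hypothetical per-request latencies in microseconds (assumptions, not measured).
ASSUMED_LATENCIES_US = [50, 100, 200]

in_flight = {
    latency_us: outstanding_requests(ACCESS_RATE, latency_us * 1e-6)
    for latency_us in ASSUMED_LATENCIES_US
}

for latency_us, depth in in_flight.items():
    print(f"{latency_us} us latency -> about {depth:.0f} requests in flight")
```

Under these assumed latencies, sustaining the measured rate requires hundreds of requests in flight at once, which is the kind of concurrency a GPU's many parallel threads can generate far more easily than a CPU.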

In summary

As the AI industry advances, intelligent solutions for GPU system utilisation and efficiency become increasingly important. Software like BaM with GIDS makes more efficient use of AI system resources by allowing larger AI problem sets to be solved on the same hardware. Extending model storage to Gen5 NVMe SSD does lengthen training times, but the trade-off allows larger, less time-sensitive training jobs to run on fewer GPU systems, improving the efficiency and total cost of ownership (TCO) of deployed AI hardware.

Specifics of the Hardware and Software:

- Workload: GIDS with IGBH-Full training.

- The Data Centre Workload Engineering team at Micron measured the Gen5 NVMe SSD performance, while the NVIDIA storage software team measured the baseline (mmap) performance on a comparable system.

- Systems being evaluated:

  - Gen4: NVIDIA A100-80GB GPU, Ubuntu 20.04 LTS (5.4.0-144), NVIDIA Driver 535.129.03, CUDA 12.3, DGL 2.0.0, Dual AMD EPYC 7713 64-core, 1TB DDR4, Micron 9400 PRO 8TB

  - Gen5: NVIDIA H100-80GB GPU, Ubuntu 20.04 LTS (5.4.0-144), NVIDIA Driver 535.129.03, CUDA 12.3, DGL 2.0.0, Dell R7625, 2x AMD EPYC 9274F 24-core, 1TB DDR5, Micron Gen5 NVMe SSD

- Work based on the paper “GPU-Initiated On-Demand High-Throughput Storage Access in the BaM System Architecture”