NeuralChat: A Configurable Chatbot Framework

Create Your Own Chatbot in Just a Few Minutes

NeuralChat is a configurable chatbot framework, included in Intel Extension for Transformers, that offers an easy-to-use application programming interface (API).

With this API, you can quickly create a chatbot on multiple architectures, such as Intel Xeon Scalable Processors and Intel Gaudi accelerators. NeuralChat is built on top of large language models (LLMs) and provides fine-tuning, optimisation, and inference capabilities.

It also offers a set of plugins that make chatbots smarter through knowledge retrieval, more engaging through voice, faster through query caching, and more secure through guardrails.

Getting Started

NeuralChat is one of the components of the Intel Extension for Transformers package, so installing that package is all you need:

```shell
pip install intel-extension-for-transformers
```

Next, create a chatbot with NeuralChat's Python API:

```python
# Create a chatbot locally with the default pipeline configuration
from intel_extension_for_transformers.neural_chat import build_chatbot, PipelineConfig

config = PipelineConfig()
chatbot = build_chatbot(config)

# Use the chatbot to generate a response
response = chatbot.predict("Tell me about Intel Xeon Scalable Processors.")
print(response)
```

NeuralChat can also be deployed as a service:

```shell
neuralchat_server start --config_file ./server/config/neuralchat.yaml
```

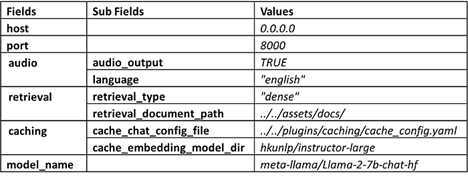

NeuralChat ships a default chatbot configuration in neuralchat.yaml. You can customise how the chatbot behaves by editing the fields in this file, for example to choose which LLM and which plugins to use.

Once NeuralChat is running as a service, users can send it requests and receive responses through curl:

```shell
curl -X POST -H "Content-Type: application/json" -d '{"prompt": "Tell me about Intel Xeon Scalable Processors."}' http://127.0.0.1:80/v1/chat/completions
```
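For scripted access, the same request can be sent from Python. The sketch below uses only the standard library (`urllib.request`) and mirrors the curl call: the endpoint and the `{"prompt": ...}` payload come from that example, while the helper names `build_chat_request` and `send_chat_request` are hypothetical. Because the response schema isn't described here, the sketch returns the raw body instead of parsing specific fields.

```python
import json
import urllib.request

# Endpoint taken from the curl example; adjust host and port to match your deployment.
DEFAULT_URL = "http://127.0.0.1:80/v1/chat/completions"


def build_chat_request(prompt: str, url: str = DEFAULT_URL) -> urllib.request.Request:
    """Build (without sending) a POST request equivalent to the curl command.

    Hypothetical helper: the {"prompt": ...} payload mirrors the curl example.
    """
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(prompt: str, url: str = DEFAULT_URL, timeout: float = 60.0) -> str:
    """Send the request and return the raw response body as text (no schema assumed)."""
    request = build_chat_request(prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the server running, `print(send_chat_request("Tell me about Intel Xeon Scalable Processors."))` should print the server's reply.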

Plugins that Enhance the Chatbot’s Capabilities

NeuralChat provides plugins that extend the chatbot's capabilities with a range of LLM-related services. These plugins are deployed within the chatbot pipeline and run as part of inference:

- Knowledge retrieval consists of document indexing for efficient retrieval of relevant information, including dense indexing based on LangChain and sparse indexing based on fastRAG, plus document rankers that prioritise the most relevant responses.

- Query caching provides a fast path that returns a response without LLM inference, which improves chat response time.

- Prompt optimisation supports automatic prompt engineering to improve user prompts.

- The memory controller enables efficient use of available memory.

- The safety checker checks the chatbot's inputs and outputs for sensitive content.
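To make the idea of plugins wrapped around inference more concrete, here is a toy pipeline in plain Python that combines two of the features listed above: a safety check on inputs and outputs, and a query cache that skips LLM inference for repeated prompts. It is not NeuralChat's implementation; the blocklist, the `ToyChatPipeline` class, the whitespace/case normalisation, and the `fake_llm` stand-in are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical blocklist; a real safety checker would use a much richer policy.
BLOCKED_TERMS = {"password", "credit card"}


def is_sensitive(text: str) -> bool:
    """Toy safety check: flag text containing any blocked term (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


class ToyChatPipeline:
    """Hypothetical pipeline: input check -> cache lookup -> LLM -> output check -> cache store."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.cache: Dict[str, str] = {}
        self.llm_calls = 0

    @staticmethod
    def _normalize(prompt: str) -> str:
        # Collapse whitespace and lowercase so trivially different prompts share a cache entry.
        return " ".join(prompt.lower().split())

    def predict(self, prompt: str) -> str:
        if is_sensitive(prompt):
            return "Sorry, I can't help with that request."
        key = self._normalize(prompt)
        if key in self.cache:
            # Fast path: answer from the cache without running LLM inference.
            return self.cache[key]
        self.llm_calls += 1
        answer = self.llm(prompt)
        if is_sensitive(answer):
            answer = "Sorry, I can't share that."
        self.cache[key] = answer
        return answer


def fake_llm(prompt: str) -> str:
    # Stand-in for real LLM inference.
    return f"Echo: {prompt}"
```

Keeping each feature as a separate step around the LLM call is what lets them be switched on or off independently; a production cache would also need an eviction policy.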

You can enable or disable a plugin, or even supply your own fully customised plugin, as follows:

```python
from intel_extension_for_transformers.neural_chat import build_chatbot, PipelineConfig, plugins

# Enable the retrieval plugin and point it at a folder of documents to index
plugins.retrieval.enable = True
plugins.retrieval.args["input_path"] = "./assets/docs/"

conf = PipelineConfig(plugins=plugins)
chatbot = build_chatbot(conf)
```
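The retrieval plugin enabled above indexes the documents under `input_path`. As a rough illustration of what sparse indexing and ranking mean, here is a toy Okapi BM25 ranker in pure Python. NeuralChat itself builds on LangChain (dense) and fastRAG (sparse) rather than code like this; `BM25Index`, `tokenize`, and the defaults `k1=1.5`, `b=0.75` are illustrative choices, not NeuralChat settings.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    # Lowercase and keep alphanumeric runs; real systems use proper tokenizers.
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25Index:
    """Hypothetical minimal sparse index scored with Okapi BM25."""

    def __init__(self, docs: List[str], k1: float = 1.5, b: float = 0.75) -> None:
        self.docs = docs
        self.k1 = k1
        self.b = b
        self.tokens = [tokenize(d) for d in docs]
        self.term_freqs = [Counter(t) for t in self.tokens]
        self.avg_len = sum(len(t) for t in self.tokens) / max(len(docs), 1)
        # Document frequency: number of documents containing each term.
        self.doc_freq = Counter(term for t in self.tokens for term in set(t))

    def _idf(self, term: str) -> float:
        n = len(self.docs)
        df = self.doc_freq.get(term, 0)
        # BM25 idf with +1 inside the log so scores stay non-negative.
        return math.log((n - df + 0.5) / (df + 0.5) + 1.0)

    def score(self, query: str, index: int) -> float:
        tf = self.term_freqs[index]
        doc_len = len(self.tokens[index])
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f == 0:
                continue
            # Term-frequency saturation (k1) and document-length normalisation (b).
            denom = f + self.k1 * (1 - self.b + self.b * doc_len / self.avg_len)
            total += self._idf(term) * f * (self.k1 + 1) / denom
        return total

    def search(self, query: str, top_k: int = 2) -> List[Tuple[float, str]]:
        # Rank every document and return the top_k (score, text) pairs.
        scored = [(self.score(query, i), doc) for i, doc in enumerate(self.docs)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]
```

For example, `BM25Index(docs).search("Xeon processors")` returns the highest-scoring documents first; in a retrieval-augmented chatbot, passages like these would be added to the prompt before LLM inference.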

Fine-Tuning a Chatbot

NeuralChat supports fine-tuning a pretrained LLM for text generation, summarisation, and code generation tasks, and even for Text-to-Speech (TTS) models:

```python
from intel_extension_for_transformers.neural_chat import finetune_model, TextGenerationFinetuningConfig

finetune_cfg = TextGenerationFinetuningConfig()  # other fine-tuning configs are also supported
finetuned_model = finetune_model(finetune_cfg)
```

This lets users tailor the chatbot to their needs by fine-tuning models on their own private datasets.
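Preparing your own data is usually the first step. As a minimal sketch, the code below converts question/answer pairs into instruction-style records and serialises them as a JSON array. The `instruction`/`input`/`output` schema follows the common Alpaca layout and is an assumption here, as are the helper names; check the NeuralChat fine-tuning documentation for the dataset formats and fields it actually expects.

```python
import json
from typing import Dict, Iterable, List, Tuple

# Hypothetical Alpaca-style schema; verify the fields your fine-tuning setup expects.
Record = Dict[str, str]


def to_instruction_records(pairs: Iterable[Tuple[str, str]]) -> List[Record]:
    """Turn (question, answer) pairs into instruction/input/output records."""
    return [
        {"instruction": question.strip(), "input": "", "output": answer.strip()}
        for question, answer in pairs
        if question.strip() and answer.strip()  # skip incomplete pairs
    ]


def serialize_dataset(records: List[Record]) -> str:
    """Serialise records as a single JSON array, keeping non-ASCII text as-is."""
    return json.dumps(records, ensure_ascii=False, indent=2)
```

You could then save the result with `Path("my_dataset.json").write_text(serialize_dataset(records), encoding="utf-8")` (after `from pathlib import Path`) and reference that file from your fine-tuning configuration.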

Improving a Chatbot’s Performance

NeuralChat provides several model optimisation techniques, such as advanced mixed precision (AMP) and Weight Only Quantization, that users can apply to speed up chatbot inference:

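```python
from intel_extension_for_transformers.neural_chat import build_chatbot, PipelineConfig, AMPConfig

# Apply mixed-precision optimisation when the pipeline loads the model
pipeline_cfg = PipelineConfig(optimization_config=AMPConfig())
chatbot = build_chatbot(pipeline_cfg)
```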

With this configuration, the pretrained LLM is optimised on the fly to accelerate inference.
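Weight-only quantization, mentioned above, stores the model's weights at low precision while activations stay in floating point. The pure-Python sketch below shows the core idea with symmetric per-row int8 quantization; it is an illustration only, not NeuralChat's implementation, and the function names are hypothetical. Practical implementations often go down to 4 bits and use per-group scales.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize_weights_int8(weights: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Hypothetical helper: symmetric per-row (per-output-channel) int8 quantization."""
    q_rows, scales = [], []
    for row in weights:
        max_abs = max((abs(w) for w in row), default=0.0)
        # One scale per row maps the largest magnitude onto 127.
        scale = max_abs / 127.0 if max_abs > 0 else 1.0
        q_rows.append([max(-127, min(127, round(w / scale))) for w in row])
        scales.append(scale)
    return q_rows, scales


def dequantize(q_rows: List[List[int]], scales: List[float]) -> Matrix:
    """Recover approximate float weights from int8 values and per-row scales."""
    return [[q * s for q in row] for row, s in zip(q_rows, scales)]


def matvec_weight_only(q_rows: List[List[int]], scales: List[float], x: List[float]) -> List[float]:
    """Matrix-vector product: float activations, int8 stored weights, rescaled per row."""
    return [s * sum(q * xi for q, xi in zip(row, x)) for row, s in zip(q_rows, scales)]
```

Each weight is off by at most half its row's scale, which is why the product computed from int8 weights stays close to the full-precision result while the stored weights take a quarter of the memory of float32.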

Concluding Remarks

NeuralChat makes it easy to build your own chatbot on multiple architectures. Its plugins let you make the chatbot smarter through knowledge retrieval, more engaging through voice, and more efficient through query caching.

We encourage you to try building your own chatbot. Pull requests, bug reports, and questions can all be submitted on GitHub.

We also encourage you to explore the other AI tools and framework optimisations that Intel offers.
