Model Evaluation on Amazon Bedrock

The Amazon Bedrock model evaluation capability that we previewed at AWS re:Invent 2023 is now generally available. This new capability helps you select the foundation model that gives the best results for your particular use case, which makes it easier to incorporate generative AI into your application. As my colleague Antje explained in her post (Evaluate, compare, and choose the best foundation models for your use case in Amazon Bedrock), model evaluations are essential at every stage of development.

As a developer, you now have evaluation tools for building generative AI applications. You can start by experimenting with different models in the playground environment. To iterate more quickly, add automatic model evaluations. Then, when you prepare for an initial launch or limited release, you can add human evaluations to help ensure quality.

During the preview, we received a lot of helpful feedback and used it to refine the features of this new capability in time for today's launch. I'll get to those shortly. As a quick reminder, here are the basic steps (for a thorough walk-through, see Antje's post).

Create a Model Evaluation Job: Select the evaluation method (automatic or human), one of the available foundation models, a task type, and the evaluation metrics. For an automatic evaluation, you can choose accuracy, robustness, and toxicity; for a human evaluation, you can choose any metrics you like (for example, friendliness, style, and adherence to brand voice). If you choose a human evaluation, you can use your own work team or an AWS-managed team. There are four built-in task types, as well as a custom type (not shown).

After you select the task type, you choose the metrics and the datasets that you want to use to evaluate the model's performance. For example, if you select Text classification, you can evaluate accuracy and/or robustness against either a built-in dataset or your own dataset.

You can use a built-in dataset or create a new one in JSON Lines (JSONL) format.

Each entry must include a prompt and can also include a category. The reference response is optional for all human evaluation configurations and for some combinations of task types and metrics for automatic evaluation.
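To make the entry format concrete, here is a minimal sketch that serializes a few entries as JSON Lines. The key names `prompt`, `referenceResponse`, and `category` are my assumption about how the fields described above are spelled, and the sample records are invented for illustration, so check them against the current Bedrock documentation before you rely on them.

```python
import json

# Hypothetical sample entries. Only the prompt is required; the reference
# response and category are optional, as described above.
# Key names are assumptions and should be checked against the Bedrock docs.
records = [
    {
        "prompt": "Bobigny is the capital of",
        "referenceResponse": "Seine-Saint-Denis",
        "category": "Capitals",
    },
    {"prompt": "Summarize our return policy in one sentence."},
]


def to_jsonl(rows):
    """Serialize rows as JSON Lines: one JSON object per line."""
    lines = []
    for index, row in enumerate(rows):
        if not row.get("prompt"):
            raise ValueError(f"entry {index} is missing the required 'prompt' field")
        lines.append(json.dumps(row, ensure_ascii=False))
    return "\n".join(lines) + "\n"


jsonl_text = to_jsonl(records)
```

You could then write `jsonl_text` to a file and upload it to the S3 location that you reference when you create the evaluation job.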

You (or your local subject matter experts) can create a dataset from customer support questions, product descriptions, or sales collateral that is specific to your organization and use case. The built-in datasets include Real Toxicity, BOLD, TREX, WikiText-2, Gigaword, BoolQ, Natural Questions, Trivia QA, and Women's Ecommerce Clothing Reviews. These datasets are designed to test specific types of tasks and metrics, and you can choose among them as appropriate.

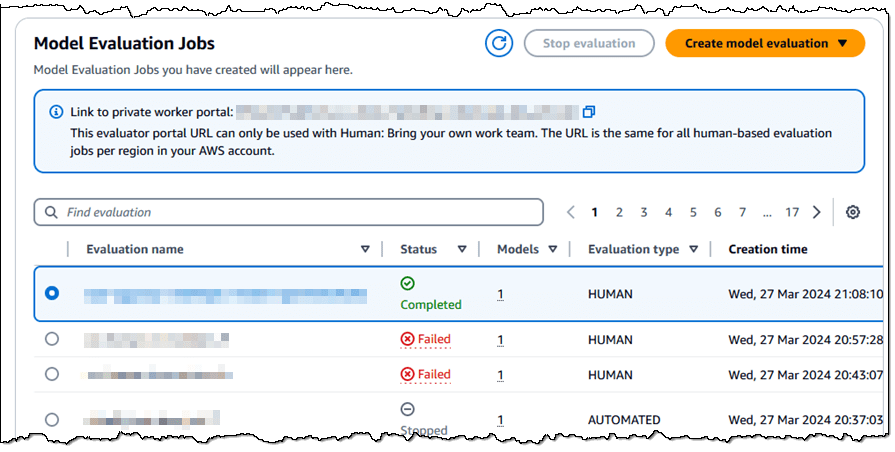

Run Model Evaluation Job: Start the job and wait for it to complete. You can check the status of each of your model evaluation jobs in the console or through the new GetEvaluationJob API function.
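If you would rather wait for a job from a script than watch the console, the following sketch polls GetEvaluationJob until the job stops running. It assumes a boto3 `bedrock` client whose `get_evaluation_job` call accepts a `jobIdentifier` and returns a `status` field; the helper name, polling interval, and retry limit are hypothetical choices of mine.

```python
import time

# Statuses after which the job will not change state again (assumed set).
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_evaluation_job(client, job_arn, poll_seconds=60, max_polls=120,
                            sleep=time.sleep):
    """Poll GetEvaluationJob until the job reaches a terminal status.

    `client` is expected to behave like boto3.client("bedrock").
    `sleep` is injectable so the loop can be exercised without waiting.
    """
    for _ in range(max_polls):
        status = client.get_evaluation_job(jobIdentifier=job_arn)["status"]
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)
    raise TimeoutError(f"{job_arn} was still running after {max_polls} polls")
```

With AWS credentials configured, you might call it as `wait_for_evaluation_job(boto3.client("bedrock"), job_arn)`, where `job_arn` is the identifier of a job that you created earlier.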

Retrieve and Review the Evaluation Report: Get the report and review the model's performance against the metrics that you selected earlier. Again, see Antje's post for a detailed look at a sample report.

New Features for GA

With all of that out of the way, let's look at the features that were added in preparation for today's launch:

Improved Job Management: You can now stop a running job using the console or the new model evaluation API.

Model Evaluation API: You can now create and manage model evaluation jobs programmatically. The following functions are available:

CreateEvaluationJob: Creates and runs a model evaluation job using the parameters specified in the API request, including an evaluationConfig and an inferenceConfig.

ListEvaluationJobs: Lists model evaluation jobs, with optional filtering and sorting by creation time, evaluation job name, and status.

GetEvaluationJob: Retrieves the properties of a model evaluation job, including its status (InProgress, Completed, Failed, Stopping, or Stopped). After the job has completed, the results of the evaluation are stored at the S3 URI that was specified in the outputDataConfig property supplied to CreateEvaluationJob.

StopEvaluationJob: Stops an in-progress job. A stopped job cannot be resumed; to run it again, you must create a new job.

This model evaluation API was one of the most requested features during the preview. You can use it to run evaluations at scale, perhaps as part of a development or testing workflow for your applications.
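As a rough illustration of what a programmatic request might look like, the sketch below assembles the arguments for CreateEvaluationJob as a plain dictionary. The top-level names `evaluationConfig`, `inferenceConfig`, and `outputDataConfig` come from the API description above; the nested field names, the `Builtin.*` metric names, the `customerEncryptionKeyId` key, and every ARN, bucket, and job name are my assumptions or placeholders, so verify them against the boto3 documentation before use.

```python
import json


def build_evaluation_request(job_name, role_arn, model_id, dataset_name,
                             dataset_s3_uri, output_s3_uri,
                             task_type="Classification",
                             metric_names=("Builtin.Accuracy", "Builtin.Robustness"),
                             inference_params=None, kms_key_id=None):
    """Assemble keyword arguments for an automatic model evaluation job.

    Nested field names are assumptions about the request shape.
    """
    request = {
        "jobName": job_name,
        "roleArn": role_arn,
        "evaluationConfig": {
            "automated": {
                "datasetMetricConfigs": [{
                    "taskType": task_type,
                    "dataset": {
                        "name": dataset_name,
                        "datasetLocation": {"s3Uri": dataset_s3_uri},
                    },
                    "metricNames": list(metric_names),
                }]
            }
        },
        "inferenceConfig": {
            "models": [{
                "bedrockModel": {
                    "modelIdentifier": model_id,
                    # Inference settings are passed as a JSON-encoded string.
                    "inferenceParams": json.dumps(inference_params or {}),
                }
            }]
        },
        "outputDataConfig": {"s3Uri": output_s3_uri},
    }
    if kms_key_id:
        # Optional customer-managed KMS key for encrypting job data.
        request["customerEncryptionKeyId"] = kms_key_id
    return request


# Placeholder values for illustration only.
request = build_evaluation_request(
    job_name="my-classification-eval",
    role_arn="arn:aws:iam::111122223333:role/ExampleBedrockEvalRole",
    model_id="anthropic.claude-v2:1",
    dataset_name="my-reviews",
    dataset_s3_uri="s3://example-bucket/datasets/reviews.jsonl",
    output_s3_uri="s3://example-bucket/eval-results/",
    inference_params={"temperature": 0.0},
)
```

With real values in place, the dictionary could be passed to a boto3 Bedrock client as `boto3.client("bedrock").create_evaluation_job(**request)`. Building the request separately from sending it keeps the configuration easy to review and to reuse across several models in a test pipeline.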

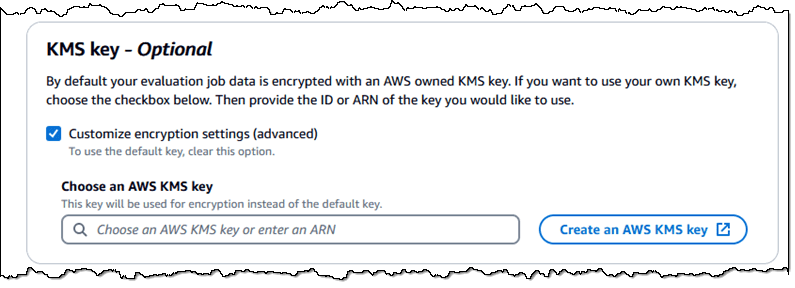

Enhanced Security: You can now use customer-managed KMS keys to encrypt your evaluation job data (if you do not choose this option, your data is encrypted using a key owned by AWS):

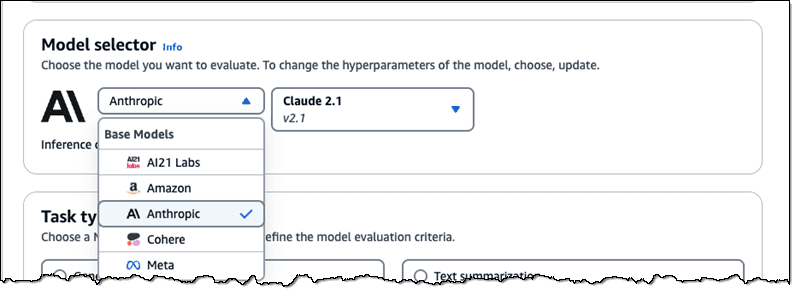

Access to More Models: You can now select Claude 2.1.

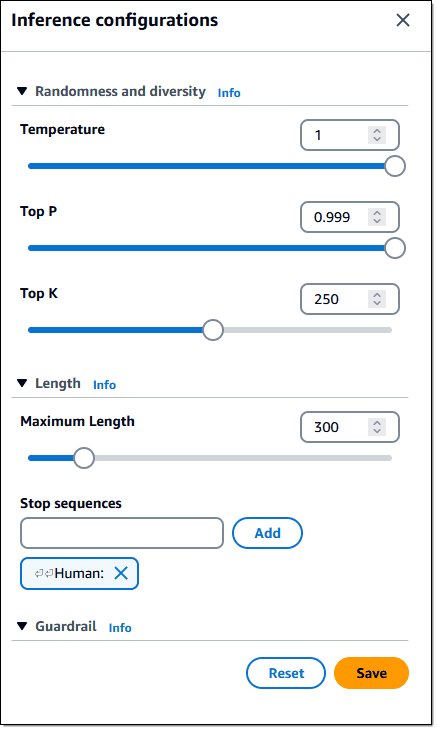

After you select a model, you can set the inference configuration that will be used for the model evaluation job.

Important Information

Here are a few things to know about this new Amazon Bedrock capability:

Pricing: You pay only for the inferences performed during the model evaluation; there is no extra charge for algorithmically generated scores. If you use human-based evaluation with your own team, you pay for the inferences plus $0.21 for each completed task, where a task is one human worker submitting an evaluation of a single prompt and its associated inference responses in the human evaluation user interface. Pricing for evaluations performed by an AWS-managed work team is based on the dataset, task types, and metrics that are relevant to your evaluation.
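To get a feel for the numbers when you bring your own work team, here is a small sketch of the arithmetic. The $0.21 fee per completed task comes from the pricing above; the function name and the example inference cost are hypothetical, and actual inference charges depend on the model and token counts.

```python
# Fee per completed human evaluation task with your own work team.
HUMAN_TASK_FEE_USD = 0.21


def human_evaluation_cost(completed_tasks, inference_cost_usd):
    """Estimate total cost: inference charges plus the per-task fee."""
    if completed_tasks < 0 or inference_cost_usd < 0:
        raise ValueError("inputs must be non-negative")
    return round(inference_cost_usd + completed_tasks * HUMAN_TASK_FEE_USD, 2)


# Example: 500 completed tasks and an assumed $12.40 of inference charges.
estimate = human_evaluation_cost(500, 12.40)
```

In this example, the task fees come to 500 × $0.21 = $105.00, so the estimate is $117.40 once the assumed inference charges are added.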