Guardrails for Amazon Bedrock

AWS has announced the general availability of Guardrails for Amazon Bedrock, first released in preview at re:Invent 2023. With Guardrails for Amazon Bedrock, you can implement safeguards in your generative artificial intelligence (generative AI) applications that are customized to your use cases and responsible AI policies. You can create multiple guardrails tailored to different use cases and apply them across multiple foundation models (FMs), providing a consistent user experience and standardizing safety controls across your generative AI applications.

On top of the native capabilities of FMs, Guardrails for Amazon Bedrock provides industry-leading safety protection, helping users block up to 85% more harmful content than the protection natively provided by some foundation models on Amazon Bedrock today. It works with all large language models (LLMs) in Amazon Bedrock, as well as fine-tuned models, and it is the only responsible AI capability offered by a major cloud provider that lets users build and customize safety and privacy protections for their generative AI applications in a single solution.

Aha! is a software company that helps more than 1 million people bring their product strategies to life. "Our customers depend on us every day to set goals, collect customer feedback, and create visual roadmaps," said Dr. Chris Waters, co-founder and chief technology officer at Aha!. "That is why we use Amazon Bedrock to power many of our generative AI capabilities.

"Amazon Bedrock provides responsible AI features that let us block harmful content through Guardrails for Amazon Bedrock and keep full control over our information through its data protection and privacy policies. We have built on it to help product managers discover insights by analyzing the feedback their customers submit. This is only the beginning. We will continue to build on advanced AWS technology to help product development teams everywhere confidently prioritize what to build next."

In the preview post, Antje showed how to use guardrails to define a set of denied topics to avoid within the context of your application and how to configure thresholds that filter content across harmful categories. The Content filters feature now has two new safety categories: Misconduct, which detects criminal activity, and Prompt attack, which detects prompt injection and jailbreak attempts. AWS has also added important new features: sensitive information filters that detect and redact personally identifiable information (PII), and word filters that block inputs containing profane or custom words (for example, harmful words, competitor names, and product names).
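As a rough illustration of how these categories could map onto an API request, the sketch below builds the content-filter and denied-topic parts of a guardrail definition as plain Python dictionaries. The field names (`filtersConfig`, `topicsConfig`) and strength values (`NONE`, `LOW`, `MEDIUM`, `HIGH`) reflect our understanding of the Amazon Bedrock `CreateGuardrail` API and should be checked against the current API reference; the chosen strengths and the denied topic are hypothetical example values.

```python
# Hypothetical content filter settings; field names assumed from the
# CreateGuardrail API and worth verifying against the current reference.
content_policy_config = {
    "filtersConfig": [
        {"type": "HATE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
        {"type": "INSULTS", "inputStrength": "HIGH", "outputStrength": "HIGH"},
        {"type": "SEXUAL", "inputStrength": "HIGH", "outputStrength": "HIGH"},
        {"type": "VIOLENCE", "inputStrength": "MEDIUM", "outputStrength": "MEDIUM"},
        # New category: content about criminal activity.
        {"type": "MISCONDUCT", "inputStrength": "HIGH", "outputStrength": "HIGH"},
        # New category: prompt attacks target the user input, so the output
        # strength is set to NONE (our reading of the API constraints).
        {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"},
    ]
}

# Hypothetical denied topic for an application that must not give such advice.
topic_policy_config = {
    "topicsConfig": [
        {
            "name": "Investment advice",
            "definition": "Recommendations about specific investments or financial products.",
            "examples": ["Which stocks should I buy this year?"],
            "type": "DENY",
        }
    ]
}
```

Nothing here contacts AWS; the two dictionaries are meant to be passed as the `contentPolicyConfig` and `topicPolicyConfig` arguments of a `create_guardrail` call once the field names have been confirmed.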

Guardrails for Amazon Bedrock sit between the application and the model. A guardrail automatically evaluates everything that goes into the model from the application and everything that comes out of the model back to the application, detecting and helping to prevent content that falls into restricted categories.
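The control flow described above can be sketched locally as a wrapper that checks the prompt, calls the model only if the prompt passes, and then checks the response. This is a minimal illustration, not how Amazon Bedrock implements guardrails: `guarded_call`, `toy_check`, and `echo_model` are hypothetical names, and the single keyword check stands in for the real configured filters.

```python
from typing import Callable, Optional

# A policy check returns a reason string when text must be blocked, or None.
PolicyCheck = Callable[[str], Optional[str]]


def guarded_call(
    prompt: str,
    model: Callable[[str], str],
    check: PolicyCheck,
    blocked_input_message: str,
    blocked_output_message: str,
) -> str:
    """Evaluate the input, call the model, then evaluate the output."""
    # 1. Everything entering the model from the application is checked first.
    if check(prompt) is not None:
        return blocked_input_message  # the model is never invoked
    response = model(prompt)
    # 2. Everything leaving the model back to the application is checked too.
    if check(response) is not None:
        return blocked_output_message
    return response


# Toy stand-ins, for illustration only.
def toy_check(text: str) -> Optional[str]:
    return "denied term" if "forbidden" in text.lower() else None


def echo_model(prompt: str) -> str:
    return f"You said: {prompt}"
```

For example, `guarded_call("hello", echo_model, toy_check, "IN", "OUT")` returns `"You said: hello"`, while a prompt containing "forbidden" returns `"IN"` without the model being called. Checking both directions matters because a harmless prompt can still produce a response that a policy should catch.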

What is a guardrail?

Guardrails in Amazon Bedrock help govern the outputs and behavior of generative AI models. They aim to:

- Improve safety: Guardrails can screen out harmful AI-generated content.

- Enhance privacy: Guardrails can also include restrictions that prevent the AI from revealing sensitive data.

Like the safety rails on a bridge, guardrails set boundaries and keep the AI on course.

To set up content filters and denied topics, you can follow the steps in the blog post for the preview release. The rest of this post walks through the new features.

New features

Before you can use Guardrails for Amazon Bedrock, you need to create a guardrail and configure the new features in the Amazon Bedrock console. In the navigation pane, choose Guardrails, and then choose Create guardrail.

Enter a Name and Description for the guardrail, and then choose Next to go to the Add sensitive information filters step.

Sensitive information filters detect sensitive and private information in user inputs and FM outputs. Depending on your use case, you can choose which entities to block in inputs or redact in outputs. The sensitive information filter supports a list of predefined PII types, and you can also define custom regex-based entities to suit your requirements and use case.

Add two PII types (Name, Email) from the list, and then add a regular expression pattern with Booking ID as the Name and [0-9a-fA-F]{8} as the Regex pattern.
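The same settings could be expressed as a request fragment, which also makes it easy to test the custom regex locally before saving it. This is a sketch under assumptions: the field names (`piiEntitiesConfig`, `regexesConfig`) and action values (`ANONYMIZE` to mask, `BLOCK` to reject) reflect our understanding of the `CreateGuardrail` API, and the description text is hypothetical.

```python
import re

# Hypothetical sensitiveInformationPolicyConfig; field names and actions
# (ANONYMIZE masks the entity, BLOCK rejects the content) are assumptions.
sensitive_information_policy_config = {
    "piiEntitiesConfig": [
        {"type": "NAME", "action": "ANONYMIZE"},
        {"type": "EMAIL", "action": "ANONYMIZE"},
    ],
    "regexesConfig": [
        {
            "name": "Booking ID",
            "description": "Eight hexadecimal characters",  # hypothetical wording
            "pattern": "[0-9a-fA-F]{8}",
            "action": "ANONYMIZE",
        }
    ],
}

# Check the custom pattern locally before saving it in the console.
booking_id = re.compile(sensitive_information_policy_config["regexesConfig"][0]["pattern"])
print(booking_id.findall("Booking 3e5f9a1c is confirmed"))  # ['3e5f9a1c']
print(booking_id.findall("Call me on 0123456789"))  # ['01234567']
```

The second call shows a design point worth knowing: the pattern has no word boundaries, so it also matches eight hex characters inside longer strings such as phone numbers. A stricter variant would be `\b[0-9a-fA-F]{8}\b`, assuming the guardrail's regex engine supports `\b`.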

Choose Next, and in the Define blocked messaging step, enter the custom messages to display when your guardrail blocks the input or the model response. In the final step, review the configuration and choose Create guardrail.
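If you prefer to create the guardrail programmatically, the console steps roughly correspond to a single API request. The sketch below assembles that request as a dictionary and only sends it if you call `create_guardrail` yourself. The parameter names (`blockedInputMessaging`, `blockedOutputsMessaging`, `sensitiveInformationPolicyConfig`, `wordPolicyConfig`) are our understanding of the boto3 `create_guardrail` operation and should be verified; the guardrail name, description, messages, and Region are hypothetical.

```python
from typing import Any, Dict, Optional


def build_create_guardrail_request(
    name: str,
    description: str,
    blocked_input_message: str,
    blocked_output_message: str,
    sensitive_information_policy_config: Optional[Dict[str, Any]] = None,
    word_policy_config: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Assemble keyword arguments for a CreateGuardrail call (field names assumed)."""
    request: Dict[str, Any] = {
        "name": name,
        "description": description,
        # Shown when the guardrail blocks the user's input.
        "blockedInputMessaging": blocked_input_message,
        # Shown when the guardrail blocks the model's response.
        "blockedOutputsMessaging": blocked_output_message,
    }
    # Only include the policies that were actually configured.
    if sensitive_information_policy_config is not None:
        request["sensitiveInformationPolicyConfig"] = sensitive_information_policy_config
    if word_policy_config is not None:
        request["wordPolicyConfig"] = word_policy_config
    return request


def create_guardrail(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request with boto3 (needs AWS credentials and a supported Region)."""
    import boto3  # imported lazily so the builder above runs without boto3

    client = boto3.client("bedrock", region_name="us-east-1")
    return client.create_guardrail(**request)


request = build_create_guardrail_request(
    name="support-assistant-guardrail",  # hypothetical
    description="Masks customer PII in call center transcripts",  # hypothetical
    blocked_input_message="Sorry, I can't help with that request.",
    blocked_output_message="Sorry, the response was blocked by our content policy.",
    sensitive_information_policy_config={
        "piiEntitiesConfig": [{"type": "EMAIL", "action": "ANONYMIZE"}],
    },
)
```

Keeping the builder separate from the network call lets you inspect or unit-test the request before anything is created in your account.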

On the guardrail's Overview page, go to the Test section and select the Anthropic Claude Instant 1.2 model. Enter a call center transcript in the Prompt field, and then choose Run.
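Outside the console, the same test could be run by attaching the guardrail to a model invocation. The sketch below only builds the keyword arguments; it assumes that `bedrock-runtime` `invoke_model` accepts `guardrailIdentifier`, `guardrailVersion`, and `trace`, that `anthropic.claude-instant-v1` is the model ID for Claude Instant 1.2, and that `DRAFT` targets the working draft of the guardrail. The guardrail ID is a placeholder.

```python
import json
from typing import Any, Dict


def build_invoke_kwargs(
    prompt: str, guardrail_id: str, guardrail_version: str = "DRAFT"
) -> Dict[str, Any]:
    """Build invoke_model arguments that attach a guardrail (parameter names assumed)."""
    body = {
        # Claude Instant uses the legacy Text Completions prompt format.
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": 300,
    }
    return {
        "modelId": "anthropic.claude-instant-v1",
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": guardrail_version,
        # Ask for the guardrail trace, the API counterpart of View trace.
        "trace": "ENABLED",
    }


kwargs = build_invoke_kwargs("<call center transcript>", guardrail_id="your-guardrail-id")
# With credentials: boto3.client("bedrock-runtime").invoke_model(**kwargs)
```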

Under Guardrail action, you can see that the guardrail intervened in three instances. Choose View trace to review the details: the guardrail detected the Name, Email, and Booking ID and masked them in the final response.
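To make the masking step concrete, here is a toy, purely local imitation of the result the trace describes. It is not how Amazon Bedrock detects PII: names are passed in explicitly (real name detection needs a trained model), the email regex is a simplified assumption, and the `{NAME}`-style placeholders are our guess at the masking format. The Booking ID uses the same `[0-9a-fA-F]{8}` pattern as the custom entity.

```python
import re
from typing import List, Tuple

# Simplified patterns, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
BOOKING_ID = re.compile(r"[0-9a-fA-F]{8}")  # same pattern as the custom regex entity


def mask_entities(text: str, known_names: List[str]) -> Tuple[str, int]:
    """Replace detected entities with placeholders; return (masked_text, count)."""
    total = 0
    # Names first: a real guardrail detects these with a model, not a list.
    for name in known_names:
        text, n = re.subn(re.escape(name), "{NAME}", text)
        total += n
    # Emails before Booking IDs so hex runs inside an address are not masked separately.
    text, n = EMAIL.subn("{EMAIL}", text)
    total += n
    text, n = BOOKING_ID.subn("{Booking ID}", text)
    total += n
    return text, total


transcript = "Hi, this is Jane Doe, email jane.doe@example.com, booking 3e5f9a1c."
masked, count = mask_entities(transcript, ["Jane Doe"])
# masked == "Hi, this is {NAME}, email {EMAIL}, booking {Booking ID}."
# count == 3
```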

Word filters block inputs that contain profane or custom words. Select the Filter profanity check box; the list of profane words is based on the global definition of profanity. You can also specify up to 10,000 custom words or phrases for the guardrail to block. If your input or the model response contains any of these words or phrases, the blocked message is shown.
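The blocking behavior can be approximated with a single compiled regular expression. This sketch assumes case-insensitive, whole-word matching, which is our choice for illustration and not a documented description of how the guardrail matches; `build_word_filter` and `apply_word_filter` are hypothetical helpers.

```python
import re
from typing import Iterable


def build_word_filter(words: Iterable[str]) -> "re.Pattern[str]":
    """Compile custom words/phrases into one case-insensitive, whole-word pattern."""
    # Longest entries first so a multi-word phrase wins over a shorter prefix.
    escaped = sorted(
        (re.escape(w.strip()) for w in words if w.strip()), key=len, reverse=True
    )
    if not escaped:
        # An empty alternation would match everywhere and block every input.
        raise ValueError("at least one word or phrase is required")
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def apply_word_filter(text: str, pattern: "re.Pattern[str]", blocked_message: str) -> str:
    """Return the blocked message if any listed word appears, else the text unchanged."""
    return blocked_message if pattern.search(text) else text


pattern = build_word_filter(["CompetitorY"])
```

With this pattern, "What additional features does competitory provide?" is blocked, while "CompetitorYZ" passes because the word boundary requirement rejects partial matches.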

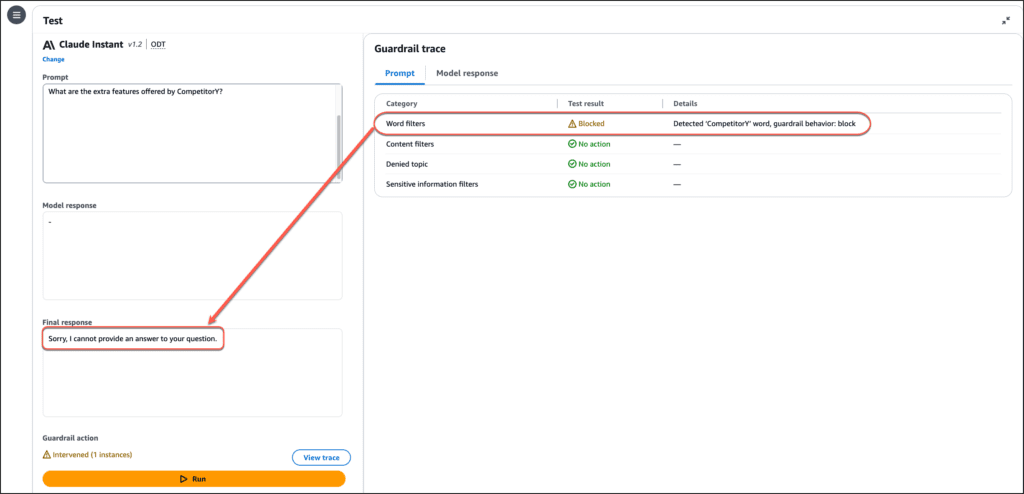

Under Word filters, select Custom words and phrases, and then choose Edit. Use Add words and phrases manually to add the custom word CompetitorY. Alternatively, if you need to add a list of phrases, you can use Upload from a local file or Upload from S3 object. Choose Save and exit to return to your guardrail page.
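A word list kept in a file (one word or phrase per line) could be turned into the word-filter part of a guardrail request as follows. The line-per-entry format, the field names `wordsConfig` and `managedWordListsConfig`, and the `PROFANITY` list type are assumptions based on our reading of the `CreateGuardrail` API; deduplicating case-insensitively is a design choice of this sketch.

```python
from typing import Any, Dict, Iterable, List, Set

MAX_CUSTOM_WORDS = 10_000  # limit stated in this post


def word_policy_from_lines(lines: Iterable[str], filter_profanity: bool = True) -> Dict[str, Any]:
    """Build a word policy from one word or phrase per line (format assumed)."""
    words: List[Dict[str, str]] = []
    seen: Set[str] = set()
    for line in lines:
        phrase = line.strip()
        # Skip blank lines and case-insensitive duplicates.
        if not phrase or phrase.lower() in seen:
            continue
        seen.add(phrase.lower())
        words.append({"text": phrase})
    if len(words) > MAX_CUSTOM_WORDS:
        raise ValueError(f"{len(words)} entries exceeds the {MAX_CUSTOM_WORDS} limit")
    policy: Dict[str, Any] = {"wordsConfig": words}
    if filter_profanity:
        # Assumed API counterpart of the Filter profanity check box.
        policy["managedWordListsConfig"] = [{"type": "PROFANITY"}]
    return policy


word_policy_config = word_policy_from_lines(["CompetitorY", "", "competitory"])
```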

Enter a prompt that contains information about a fictional company and its competitor, followed by the question "What additional features does CompetitorY provide?", and then choose Run.

Choose View trace to review the details. The trace shows that the guardrail intervened because of the policies you configured.
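When invoking a model through the API instead of the console, you would check for an intervention in the response body. The sketch below assumes the parsed `invoke_model` body carries an `amazon-bedrock-guardrailAction` field set to `INTERVENED` or `NONE`; that key name is our understanding of the API and should be confirmed in the documentation. The sample body is made up for illustration.

```python
import json
from typing import Any, Dict


def parse_invoke_body(raw: bytes) -> Dict[str, Any]:
    """Decode the JSON body, e.g. response["body"].read() from invoke_model."""
    return json.loads(raw)


def guardrail_intervened(body: Dict[str, Any]) -> bool:
    """True if the (assumed) guardrail action field reports an intervention."""
    return body.get("amazon-bedrock-guardrailAction") == "INTERVENED"


# Made-up response body, for illustration only.
sample = json.dumps(
    {
        "completion": "Sorry, the response was blocked by our content policy.",
        "amazon-bedrock-guardrailAction": "INTERVENED",
    }
).encode()
```

Treating a missing field as "no intervention" keeps the helper safe to call on responses from invocations made without a guardrail.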

Now available

Guardrails for Amazon Bedrock is now available in the US East (N. Virginia) and US West (Oregon) Regions.