MediaTek NeuroPilot SDK

As part of its edge inference silicon roadmap, MediaTek announced at COMPUTEX 2024 that it will integrate NVIDIA TAO with its NeuroPilot SDK. With this support for NVIDIA TAO, developers can more easily integrate cutting-edge AI capabilities into a wide range of IoT applications that run on MediaTek silicon. This will help businesses of all sizes realise the promise of edge AI across IoT verticals such as smart manufacturing, smart retail, smart cities, transportation, and healthcare.

MediaTek’s NeuroPilot SDK Edge AI Applications

MediaTek created the NeuroPilot SDK, an extensive set of software tools and APIs, to simplify building and deploying AI applications on hardware that uses its chipsets. What the NeuroPilot SDK offers is outlined below:

Emphasis on Edge AI

NeuroPilot SDK is built around the idea of “Edge AI”: processing AI workloads locally on the device rather than on external servers. Local processing brings better privacy, faster response times, and less dependence on internet access.

Efficiency is Critical

MediaTek’s NeuroPilot SDK is designed to maximise the efficiency of AI applications running on its hardware platforms, especially its mobile System-on-Chip (SoC) processors. This results in longer battery life and smoother operation of AI-powered features on devices.

Framework-Agnostic

NeuroPilot SDK is designed to be independent of the underlying AI framework, even though it may include features specific to MediaTek hardware. As a result, developers can use custom models or well-known frameworks such as TensorFlow Lite in their apps.

Tools and Resources for Development

The NeuroPilot SDK offers a wide range of tools and resources to developers, such as:

- APIs for utilising MediaTek smartphones’ AI hardware.

- Tutorials and sample code to help developers get started.

- Integration with various IDEs for a smooth development workflow.

- Support and documentation to help developers along the way.

Latest Happenings

In June 2024, MediaTek announced the integration of NVIDIA’s TAO Toolkit with the NeuroPilot SDK. The collaboration aims to speed up development further by providing the following:

Pre-trained AI models

A large library of pre-trained AI models for tasks such as image recognition and object detection saves developers time and effort.

Model optimisation tools

Tools for fine-tuning pre-trained models for particular use cases and maximising their performance on MediaTek hardware.

Overall, MediaTek’s NeuroPilot SDK enables developers to build powerful and efficient AI apps for a variety of edge devices, such as wearables, smart home appliances, industrial machinery, and smartphones. By emphasising efficiency, ease of development, and a growing tool ecosystem, NeuroPilot aims to be a major force behind Edge AI in the future.

NVIDIA TAO Tutorial

The NVIDIA TAO Toolkit: What Is It?

With transfer learning, you can speed up development and create AI and machine learning models without an army of data scientists or mountains of data. This technique transfers the features learned by an existing neural network model to a new, customised one.

Built on TensorFlow and PyTorch, the open-source NVIDIA TAO Toolkit uses transfer learning to streamline model training and optimise the model for inference throughput on nearly any platform. The result is a highly efficient workflow: start from pretrained or custom models, adapt them to your own real or synthetic data, and then optimise for inference throughput, all without requiring large training datasets or AI expertise.
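
To make the idea of transfer learning concrete, here is a minimal sketch in plain Python. It does not use the TAO Toolkit or any of its APIs. The "pretrained" backbone is a hypothetical fixed layer with made-up weights, and the data is a toy example. Only the new classification head is trained, which is the essence of the technique.

```python
import math

# Hypothetical stand-in for a pretrained backbone: these weights are
# made up for illustration and are never updated during training.
FROZEN_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def backbone(x):
    """Frozen feature extractor: a fixed linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=1000, lr=0.1):
    """Train only the new head (logistic regression) on frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            features = backbone(x)  # the backbone is reused as-is
            pred = sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)
            error = pred - y
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def predict(head, x):
    """Return True when the head assigns the input to class 1."""
    weights, bias = head
    features = backbone(x)
    return sigmoid(sum(w * f for w, f in zip(weights, features)) + bias) >= 0.5


# Four labelled samples are enough here because the features are reused.
samples = [[2.0, 0.0], [3.0, 1.0], [0.0, 2.0], [1.0, 3.0]]
labels = [1, 1, 0, 0]
head = train_head(samples, labels)
```

Calling `predict(head, [3.0, 0.0])` classifies a new input with the trained head. In a real workflow the backbone would be a large pretrained network, but the division of labour is the same: reuse the learned features and train only a small task-specific part.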

Principal Advantages

Train Models Efficiently

To save time and effort on manual tweaking, take advantage of TAO Toolkit’s AutoML feature.

Build Highly Accurate AI

To build highly accurate, custom AI models for your use case, leverage NVIDIA pretrained models and state-of-the-art vision transformers.

Optimise for Inference

Beyond customisation, optimising the model for inference can yield up to 4x higher performance.

Deploy on Any Device

Deploy optimised models on MCUs, CPUs, GPUs, and other hardware.

An Enterprise-Grade Solution for Mission-Critical AI

As part of NVIDIA AI Enterprise, an enterprise-ready AI software platform, NVIDIA TAO accelerates time to value while mitigating the potential risks of open-source software. It offers security, stability, manageability, and support. NVIDIA AI Enterprise includes three exclusive foundation models trained on commercially viable datasets:

- The only commercially viable foundation model for vision, NV-DINOv2, was trained on more than 100 million images using self-supervised learning. With only a small amount of training data, the model can be quickly fine-tuned for a variety of vision AI tasks.

- PCB classification provides great accuracy in identifying missing components on a PCB and is based on NV-DINOv2.

- A significant number of retail SKUs can be identified using retail recognition, which is based on NV-DINOv2.

Starting with TAO 5.1, foundation models based on NV-DINOv2 can be fine-tuned for specific vision AI tasks.

- Get a complimentary 90-day trial licence for NVIDIA AI Enterprise.

- Use NVIDIA LaunchPad to explore TAO Toolkit and NVIDIA AI Enterprise.

Why It Is Important for the Development of AI

Customise your application with generative AI

Generative AI is a disruptive force that will transform numerous industries. It is powered by foundation models trained on a vast corpus of text, image, sensor, and other data. With TAO, you can now build domain-specific generative AI applications by fine-tuning and customising these foundation models. TAO supports fine-tuning of multi-modal models such as NV-DINOv2, NV-CLIP, and Grounding-DINO.

Deploy Models on Any Platform

The NVIDIA TAO Toolkit can power AI on billions of devices. For improved interoperability, NVIDIA TAO Toolkit 5.0 offers model export in ONNX, an open standard. This allows a model trained with the NVIDIA TAO Toolkit to be deployed on any computing platform.

AI-Powered Data Labelling

New AI-assisted annotation features let you label segmentation masks more quickly and at lower cost. Mask Auto Labeler (MAL), a weakly supervised, transformer-based segmentation architecture, can help with segmentation annotation as well as with tightening and adjusting bounding boxes for object detection.
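
The link between a segmentation mask and a tighter bounding box can be shown with a small sketch. This is not Mask Auto Labeler or any TAO API. It is a hypothetical helper that assumes a binary mask is already available and computes the smallest axis-aligned box enclosing it.

```python
def tight_bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) enclosing all nonzero pixels.

    `mask` is a list of rows of 0/1 values. Returns None for an empty mask.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    if not rows:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


# Toy mask: the object occupies a small region of a 5x4 image.
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = tight_bounding_box(mask)  # (1, 1, 3, 2)
```

A loosely drawn box around the same object can then be replaced with the box derived from the mask, which is one way a mask can help tighten a detection label.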

Use REST APIs to Integrate TAO Toolkit Into Your Application

You can now integrate TAO Toolkit into your application more easily and deploy it in a modern cloud-native infrastructure using REST APIs on Kubernetes. Build a new AI service or integrate the TAO Toolkit into your existing one to enable automation across diverse technologies.

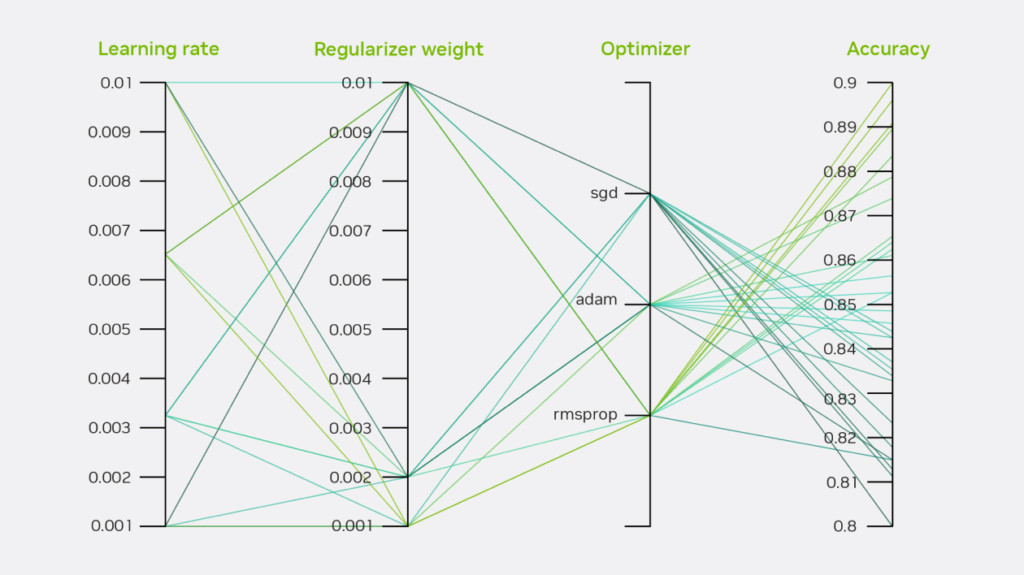

AutoML Makes AI Easier

AI training and optimisation take a lot of time and require in-depth knowledge of which model to choose and which hyperparameters to tune. AutoML now makes it simple to train high-quality models without the laborious process of manually adjusting hundreds of parameters.
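
To illustrate what automating hyperparameter tuning means, the sketch below runs a generic random search. It is not TAO's AutoML implementation and makes no claim about which search algorithms TAO uses. The `validation_score` function is a hypothetical toy objective that stands in for a full training run.

```python
import random


def validation_score(learning_rate, batch_size):
    """Toy stand-in for a training run; peaks at lr=0.01, batch_size=32."""
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(batch_size - 32) / 256


def random_search(trials, seed=0):
    """Sample hyperparameters at random and keep the best-scoring set."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_score, best_params = float("-inf"), None
    for _ in range(trials):
        params = {
            # Sample the learning rate on a log scale between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([8, 16, 32, 64, 128]),
        }
        score = validation_score(**params)
        if score > best_score:
            best_score, best_params = score, params
    return best_params, best_score


best_params, best_score = random_search(trials=50)
print(best_params, round(best_score, 3))
```

The loop replaces manual trial and error: each iteration proposes a configuration, evaluates it, and keeps the best one seen so far. Production AutoML systems typically use smarter proposal strategies, but the overall structure is similar.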

Run on Your Preferred Cloud

TAO’s cloud-native technology offers the agility, scalability, and portability needed to manage and deploy AI applications more effectively. TAO services can be deployed on virtual machines (VMs) from any leading cloud provider, and they can also be used with managed Kubernetes services such as Amazon EKS, Google GKE, or Azure AKS. To simplify infrastructure management and scaling, TAO can also be used with cloud machine learning services such as Azure Machine Learning, Google Vertex AI, and Google Colab.

TAO also integrates with a number of cloud-based and third-party MLOps services, giving developers and businesses an optimised AI workflow. With the Weights & Biases (W&B) or ClearML platform, developers can now track and manage their TAO experiments and models.

Inference Performance

Reach maximum inference performance on all platforms, from the cloud with NVIDIA Ampere architecture GPUs to the edge with NVIDIA Jetson solutions. To learn more about various models and batch sizes, view the performance datasheet.

| Model | Arch | Resolution | Accuracy | Jetson Orin Nano | Jetson Orin NX | Jetson Orin 64GB | A2 | T4 | L4 | L40 | H100 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PeopleSemSegFormer | SegFormer | 512×512 | 91% mIoU | 6.6 | 9.7 | 24.2 | 23 | 40 | 83 | 210 | 454 |
| Retail Object Detection | DINO – FAN-B | 960×544 | 97% | 2.3 | 3.4 | 8.1 | 8.8 | 15.4 | 34 | 89 | 167 |
| DINO COCO | DINO – FAN-S | 960×544 | 72% mAP50 | 3.1 | 4.4 | 11.2 | 11.7 | 20 | 44 | 120 | 213 |
| GC-ViT ImageNet | GC-ViT-Tiny | 224×224 | 84% Top1 Accuracy | 75 | 110 | 293 | 336 | 517 | 1266 | 3118 | 6381 |
| OCRNet | ResNet50 – Bi-LSTM | 32×100 | 93% | 935 | 1373 | 3876 | 2094 | 3649 | 8036 | 18970 | 55720 |
| OCDNet | DCN-ResNet18 | 640×640 | 81% Hmean | 31 | 45 | 120 | 93 | 155 | 333 | 940 | 1468 |
| Optical Inspection | Siamese CNN | 2x512x128 | 100%/<1% FP | 399 | 482 | 1538 | 1391 | 2314 | 2821 | 10390 | 24110 |
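
The gap between edge and data-centre hardware in the table can be worked out with a few lines of arithmetic. The sketch below copies the Jetson Orin Nano and H100 figures from the table and computes their ratio per model. The source does not state the unit of these figures, so the sketch only assumes that higher is better.

```python
# (Jetson Orin Nano, H100) figures copied from the table above.
# The unit is not stated in the source; higher is assumed to be better.
performance = {
    "PeopleSemSegFormer": (6.6, 454),
    "Retail Object Detection": (2.3, 167),
    "DINO COCO": (3.1, 213),
    "GC-ViT ImageNet": (75, 6381),
    "OCRNet": (935, 55720),
    "OCDNet": (31, 1468),
    "Optical Inspection": (399, 24110),
}


def speedup(model):
    """Ratio of the H100 figure to the Jetson Orin Nano figure."""
    nano, h100 = performance[model]
    return h100 / nano


for model in performance:
    print(f"{model}: {speedup(model):.1f}x")
```

For these seven models the ratio ranges from roughly 47x to 85x, which gives a sense of how much headroom a model sized for the data centre loses when moved to a small edge module.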

Every year, MediaTek powers over two billion connected devices. The company’s edge silicon portfolio is designed to run edge AI applications as efficiently as possible while maximising their performance. It also incorporates MediaTek’s cutting-edge multimedia and connectivity technology. With chipsets for the premium, mid-range, and entry tiers, MediaTek’s edge inference silicon roadmap enables brands to deliver compelling AI experiences on devices across different price points.

With MediaTek’s NeuroPilot SDK integration with NVIDIA TAO, developers can advance Vision AI on all MediaTek devices, big and small, with the help of an intuitive UI and performance optimisation features. NVIDIA TAO also unlocks customised vision AI capabilities for a variety of solutions and use cases with over 100 ready-to-use pretrained models. It speeds up the fine-tuning of AI models and reduces development time and complexity, even for developers without in-depth AI knowledge.

CK Wang, General Manager of MediaTek’s IoT business unit, stated, “Integrating NVIDIA TAO with MediaTek NeuroPilot will further broaden NVIDIA TAO’s aim of democratising access to AI, helping generate a new wave of AI-powered devices and experiences. With MediaTek’s Genio product line and these increased resources, it’s easier than ever for developers to design cutting-edge Edge AI products that differentiate themselves from the competition.”

Deepu Talla, Vice President of Robotics and Edge Computing at NVIDIA, stated, “Generative AI is turbocharging computer vision with higher accuracy for AIoT and edge applications. Billions of IoT devices will have access to the newest and best vision AI models thanks to the combination of NVIDIA TAO and MediaTek NeuroPilot SDK.”