LLM Chatbot Development

Hi there, developers! We’re back and ready to “turn the volume up” by using Intel Optimized Cloud Modules to show how to use our 4th Gen Intel Xeon Scalable CPUs for GenAI inferencing.

Boost Your Generative AI Inferencing Speed

Did you know that our newest Intel Xeon Scalable CPU, the 4th Generation model, includes AI accelerators? That’s right: the CPU has a built-in AI accelerator that enables high-throughput generative AI inference and training without the need for specialized GPUs. This lets you use CPUs for both conventional workloads and AI, lowering your overall TCO.

Intel Advanced Matrix Extensions (Intel AMX), a new built-in accelerator, improves deep learning training and inference performance on the CPU for workloads such as natural language processing (NLP), image generation, recommendation systems, and image recognition. It focuses on the int8 and bfloat16 data types.
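Once you are logged in to a Linux machine, a quick way to see whether Intel AMX is visible to the operating system is to look at the CPU feature flags. The sketch below is a minimal, hedged example: it assumes a Linux guest where `/proc/cpuinfo` lists the flags `amx_tile`, `amx_int8`, and `amx_bf16` (the names the Linux kernel uses), and the helper name `amx_flags` is ours, not part of any Intel tool. A flag can also be missing simply because the hypervisor does not expose it.

```python
from pathlib import Path

# Flag names as the Linux kernel reports them in /proc/cpuinfo.
AMX_FLAGS = ("amx_tile", "amx_int8", "amx_bf16")


def amx_flags(cpuinfo_text: str) -> dict[str, bool]:
    """Report which AMX feature flags appear in /proc/cpuinfo-style text."""
    present: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # x86 Linux lists CPU features on lines whose key is "flags".
        if sep and key.strip() == "flags":
            present.update(value.split())
    return {flag: flag in present for flag in AMX_FLAGS}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        for flag, found in amx_flags(cpuinfo.read_text()).items():
            print(f"{flag}: {'yes' if found else 'no'}")
    else:
        print("/proc/cpuinfo not found; this check only works on Linux.")
```

The `amx_int8` and `amx_bf16` flags correspond to the two data types mentioned above, while `amx_tile` indicates the tile registers that both rely on.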

Setting up LLM Chatbot

In case you weren’t aware, the 4th Gen Intel Xeon CPU is now generally available on GCP (C3 and H3 instances) and AWS (m7i, m7i-flex, c7i, and r7iz instances).

Rather than merely talking about it, let’s deploy your FastChat GenAI LLM chatbot on the 4th Gen Intel Xeon processor. Let’s go!

Intel Optimized Cloud Modules and Intel Optimized Cloud Recipes

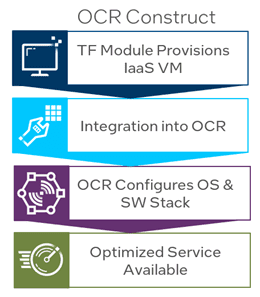

Here are a few updates before we get into the code. At Intel, we invest a lot of effort in making it simple for DevOps teams and developers to use our products. Creating the Intel Optimized Cloud Modules was a step in that direction. Today I’d like to introduce the modules’ companions: the Intel Optimized Cloud Recipes, or OCRs.

Intel Optimized Cloud Recipes: What are they?

The Intel Optimized Cloud Recipes (OCRs) are integrated with our cloud modules and use Red Hat Ansible and Microsoft PowerShell to optimize operating systems and software.

Here’s How We Go About It

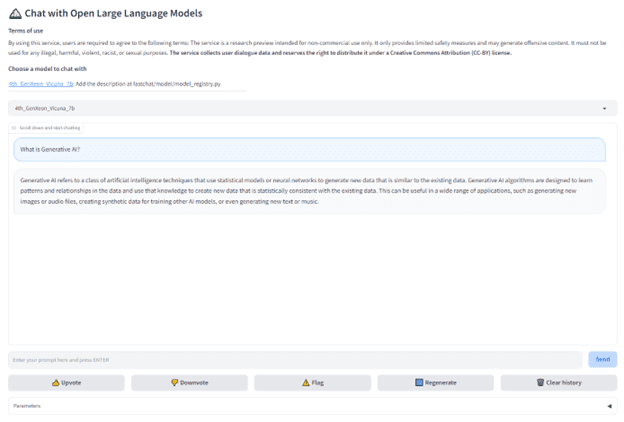

Enough reading; let’s turn our attention to using the FastChat OCR and the GCP Virtual Machine Module. Using the module and the OCR, you will deploy your own generative AI LLM chatbot on the 4th Gen Intel Xeon processor. You will then see the power of the integrated Intel AMX accelerator for inferencing, without the need for a discrete GPU.

To provision VMs on GCP or AWS, you need a cloud account with access and permissions.

Implementation: GCP Steps

The steps below are also outlined in the module’s README.md; see the example there for more information.

Usage

- Log on to the GCP portal

- Enter the GCP Cloud Shell (Click the terminal button on the top right of the portal page)

- Run the following commands in order:

git clone https://github.com/intel/terraform-intel-gcp-vm.git

cd terraform-intel-gcp-vm/examples/gcp-linux-fastchat-simple

terraform init

terraform apply

When prompted, enter your GCP Project ID and type “yes” to confirm.

Running the Demo

- Wait approximately 10 minutes for the recipe to download and install FastChat and the LLM before continuing.

- SSH into the newly created GCP VM

- Run: source /usr/local/bin/run_demo.sh

- On your local computer, open a browser and navigate to http://<VM_PUBLIC_IP>:7860. Get your public IP from the “Compute Engine” section of the VM in the GCP console.

- Or use the https://xxxxxxx.gradio.live URL that is generated during the demo startup (see the on-screen logs)

After launching the demo (Step 3) and navigating to the app (Step 4), start chatting and watch Intel AMX in action.
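After you start the demo script, the web UI may need a little time before it accepts connections on port 7860. Rather than refreshing the browser, you could poll the port from your local computer. The sketch below is a hedged illustration using only the Python standard library; the function name `wait_for_port` and its default timings are our own choices, not part of the recipe. A successful connection only shows that something is listening on the port, not that the model has finished loading.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout_s: float = 900.0,
                  interval_s: float = 10.0) -> bool:
    """Return True once a TCP connection to host:port succeeds.

    Returns False if no connection succeeds before timeout_s elapses.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # Open and immediately close a connection; success means
            # a server is accepting connections on that port.
            with socket.create_connection((host, port), timeout=5.0):
                return True
        except OSError:
            # Refused, unreachable, or timed out: retry until the deadline.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)
```

For example, calling `wait_for_port("<VM_PUBLIC_IP>", 7860)` with your VM’s public IP substituted in returns `True` as soon as the port accepts a connection, at which point the URL from Step 4 should load in the browser.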

Using the Intel Developer Cloud instead of GCP or AWS for deployment

You can also create a virtual machine powered by a 4th Gen Intel Xeon Scalable processor using the Intel Developer Cloud.

For details on how to provision the Virtual Machine, see Intel Developer Cloud. After the virtual machine has been set up:

- SSH into the virtual machine as directed by the Intel Developer Cloud.

- Follow the AI Intel Optimized Cloud Recipe instructions to run the automated recipe and launch the LLM chatbot.

GenAI Inferencing: Intel AMX and 4th Gen Xeon Scalable Processors

I hope you get a chance to try generative AI inference! With 4th Gen Intel Xeon Scalable processors and Intel AMX, you can speed up your AI workloads and build the next wave of AI apps. Our modules and recipes let you enable generative AI inferencing quickly and start enjoying its advantages. Data scientists, researchers, and developers can all advance generative AI.
