Vertex AI Model Monitoring

Google is introducing the new Vertex AI Model Monitoring, a re-architecture of Vertex AI’s model monitoring features. It provides a more adaptable, extensible, and consistent monitoring solution for models deployed on any serving infrastructure, including infrastructure not affiliated with Vertex AI, such as Google Kubernetes Engine, Cloud Run, and Google Compute Engine.

The new Vertex AI Model Monitoring aims to help customers continuously monitor model performance in production by centralizing the management of model monitoring capabilities. It does this through:

- Support for models hosted outside of Vertex AI (e.g., on GKE or Cloud Run, or even in multi-cloud and hybrid-cloud environments)

- Unified job management for online and batch prediction monitoring

- Simplified metrics visualization and setup linked to the model rather than the endpoint

This blog post gives an overview of the key concepts and capabilities of the new Vertex AI Model Monitoring and shows how to use it to keep an eye on your production models.

Overview of the new Vertex AI Model Monitoring

In a typical model version monitoring workflow, prediction request-response pairs (that is, input features and their output predictions) are collected. Scheduled or on-demand monitoring jobs then use these inference logs to evaluate the model’s performance. The configuration of a monitoring job can include the inference schema, monitoring metrics, objective thresholds, alert channels, and scheduling frequency. When anomalies are detected, notifications can be sent to the model owners through channels such as Slack and email. The model owners can then investigate the anomaly and start a new training cycle.
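The configuration elements listed above can be pictured as a small data structure. The sketch below is plain Python and entirely hypothetical: `MonitoringJobConfig`, its fields, the channel string format, the cron-style `schedule`, the pairing of metrics to data types, and the assumed `[0, 1]` threshold range are illustrative choices, not the Vertex AI SDK API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


# Hypothetical configuration object; NOT part of the Vertex AI SDK.
@dataclass
class MonitoringJobConfig:
    # Inference schema: feature name -> data type ("numeric" or "categorical").
    schema: dict[str, str]
    # Drift metric to use for each data type (assumed pairing, for illustration).
    metrics: dict[str, str]
    # Alert threshold per feature; assumed to be a distance in [0, 1].
    thresholds: dict[str, float]
    # Notification targets, e.g. "email:owners@example.com" (hypothetical format).
    alert_channels: list[str] = field(default_factory=list)
    # Cron-style schedule for recurring runs; None means the job runs on demand.
    schedule: Optional[str] = None

    def validate(self) -> list[str]:
        """Return a list of configuration problems; an empty list means consistent."""
        problems = []
        for feature, dtype in self.schema.items():
            if dtype not in self.metrics:
                problems.append(f"no metric for type {dtype!r} of feature {feature!r}")
        for feature, limit in self.thresholds.items():
            if feature not in self.schema:
                problems.append(f"threshold set for unknown feature {feature!r}")
            if not 0.0 <= limit <= 1.0:
                problems.append(f"threshold for {feature!r} is outside [0, 1]")
        return problems


# Usage: a CLV-style job with one numerical and one categorical feature.
config = MonitoringJobConfig(
    schema={"tenure_months": "numeric", "plan": "categorical"},
    metrics={"numeric": "jensen_shannon", "categorical": "l_infinity"},
    thresholds={"tenure_months": 0.1, "plan": 0.2},
    alert_channels=["email:owners@example.com"],
    schedule="0 * * * *",  # hourly, in cron syntax
)
print(config.validate())  # prints []
```

Validating the configuration up front catches mismatches, such as a threshold for a feature that is not in the schema, before any monitoring run starts.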

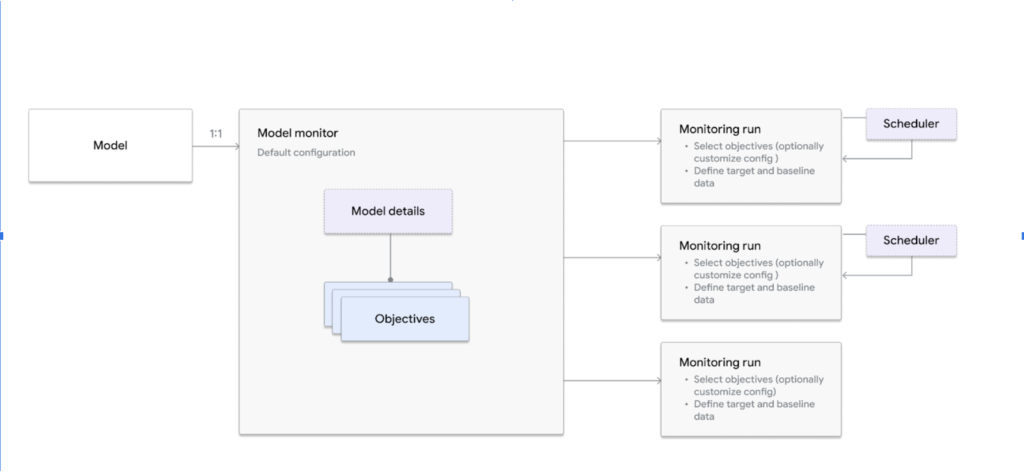

The new Vertex AI Model Monitoring represents a model version with a Model Monitor resource, and the associated monitoring task with a Model Monitoring job resource.

A Model Monitor is the monitoring representation of a specific model version in the Vertex AI Model Registry. It can store a set of monitoring objectives that you define for the model, along with default monitoring configurations for the training dataset (called the baseline dataset) and the production dataset (called the target dataset).
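To make the relationship between the two resources concrete, here is a minimal, hypothetical sketch in plain Python. It assumes that a job falls back to the Model Monitor’s default datasets and objectives unless it overrides them; the class names, fields, model name, and `bq://` URIs are made up for illustration and are not the Vertex AI SDK.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


# Hypothetical stand-ins for the two resources; NOT the Vertex AI SDK classes.
@dataclass
class ModelMonitor:
    model_version: str        # the registered model version being monitored
    default_baseline: str     # training (baseline) dataset URI
    default_target: str       # production (target) dataset URI
    objectives: list[str] = field(default_factory=list)


@dataclass
class MonitoringJob:
    monitor: ModelMonitor
    # Optional per-job overrides; None (or an empty list) means "use the monitor's default".
    baseline: Optional[str] = None
    target: Optional[str] = None
    objectives: Optional[list[str]] = None

    def resolved(self) -> dict:
        """Merge this job's overrides with the Model Monitor's defaults."""
        return {
            "model_version": self.monitor.model_version,
            "baseline": self.baseline or self.monitor.default_baseline,
            "target": self.target or self.monitor.default_target,
            "objectives": self.objectives or self.monitor.objectives,
        }


# Usage with made-up names: the job overrides only the target dataset.
monitor = ModelMonitor(
    model_version="clv-model@1",
    default_baseline="bq://my-project.clv.training",
    default_target="bq://my-project.clv.serving",
    objectives=["feature_drift"],
)
job = MonitoringJob(monitor, target="bq://my-project.clv.serving_june")
print(job.resolved()["baseline"])  # prints bq://my-project.clv.training
```

Keeping defaults on the monitor means each job only has to state what differs for that particular run.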

A Model Monitoring job represents a single batch execution of the monitoring configuration. Each job analyses its input, computes the data distribution and related descriptive metrics, measures how far the distributions of features and predictions deviate from the training distribution, and can raise an alert based on thresholds you set. A Model Monitoring job, with one or more customizable monitoring objectives, can be run on demand or scheduled for continuous monitoring over a sliding time window.
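The analysis step of a single run can be sketched as: keep only the inference records inside the sliding time window, then summarize each feature. This is a minimal illustration, assuming inference logs are a list of dicts with a `timestamp` key; the log format and the helper names (`window_records`, `describe_numeric`, `categorical_distribution`) are hypothetical, and the descriptive metrics Vertex AI actually computes may differ.

```python
from collections import Counter
from datetime import datetime, timedelta
from statistics import mean


# Hypothetical log format: each record is a dict with a "timestamp" plus feature values.
def window_records(logs, run_time, window):
    """Keep records inside the sliding window (run_time - window, run_time]."""
    start = run_time - window
    return [r for r in logs if start < r["timestamp"] <= run_time]


def describe_numeric(values):
    """Simple descriptive metrics for a numerical feature."""
    if not values:
        return {"count": 0}
    return {"count": len(values), "mean": mean(values), "min": min(values), "max": max(values)}


def categorical_distribution(values):
    """Relative frequency of each category value."""
    counts = Counter(values)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


# Usage: a 24-hour window ending at the run time drops the 30-hour-old record.
now = datetime(2024, 1, 2, 12, 0)
logs = [
    {"timestamp": now - timedelta(hours=30), "plan": "basic", "tenure": 3.0},
    {"timestamp": now - timedelta(hours=5), "plan": "premium", "tenure": 12.0},
    {"timestamp": now - timedelta(hours=1), "plan": "basic", "tenure": 6.0},
]
recent = window_records(logs, now, timedelta(hours=24))
print(describe_numeric([r["tenure"] for r in recent]))
# prints {'count': 2, 'mean': 9.0, 'min': 6.0, 'max': 12.0}
print(categorical_distribution([r["plan"] for r in recent]))
# prints {'premium': 0.5, 'basic': 0.5}
```

Because the window is anchored to the run time, each scheduled run automatically looks at the most recent traffic.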

Now that you know the main concepts and features of the new Vertex AI Model Monitoring, let’s look at how you can use them to improve how your models are monitored in real-world scenarios. Specifically, this blog post explains how to use the new Vertex AI Model Monitoring to monitor a referenced model: a model that is registered in the Vertex AI Model Registry without being imported into it.

Using Vertex AI Model Monitoring to keep an eye on an external model

Suppose you have developed a customer lifetime value (CLV) model that forecasts a customer’s value to your business. The model runs in production in your own environment (e.g., GKE, GCE, Cloud Run), and you want to keep an eye on its quality using the new Vertex AI Model Monitoring.

To use the new Vertex AI Model Monitoring with a model that is not hosted on Vertex AI (also known as a referenced model), you first prepare the baseline and target datasets. In this case, the baseline dataset contains the training feature values, and the target dataset contains the corresponding production values at a specific point in time.

Next, store the datasets in BigQuery or Cloud Storage, both of which Vertex AI Model Monitoring supports as data sources.
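A minimal sketch of this preparation step follows, assuming training rows and production rows are available as lists of dicts and that production rows carry a hypothetical `served_on` date. It builds a one-day target snapshot, checks that both datasets contain the monitored features, and serializes them to CSV. Uploading the CSV to Cloud Storage or loading it into BigQuery would be done with the respective Google Cloud client libraries and is not shown; all feature and helper names are illustrative.

```python
import csv
import io
from datetime import date


# Hypothetical row format: dicts of feature values; production rows also carry "served_on".
def target_snapshot(production_rows, as_of):
    """Production feature values served on one day: the target dataset."""
    return [r for r in production_rows if r["served_on"] == as_of]


def check_same_features(baseline_rows, target_rows, features):
    """Raise ValueError if either dataset lacks a monitored feature."""
    for name, rows in (("baseline", baseline_rows), ("target", target_rows)):
        missing = {f for r in rows for f in features if f not in r}
        if missing:
            raise ValueError(f"{name} dataset is missing features: {sorted(missing)}")


def to_csv(rows, features):
    """Serialize rows as CSV text, keeping only the monitored feature columns."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=features, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Usage with made-up CLV features.
features = ["tenure_months", "plan"]
baseline = [{"tenure_months": 14, "plan": "basic"}, {"tenure_months": 2, "plan": "premium"}]
production = [
    {"served_on": date(2024, 6, 1), "tenure_months": 3, "plan": "basic"},
    {"served_on": date(2024, 6, 2), "tenure_months": 7, "plan": "premium"},
]
target = target_snapshot(production, date(2024, 6, 2))
check_same_features(baseline, target, features)
print(to_csv(target, features).splitlines())  # prints ['tenure_months,plan', '7,premium']
```

Writing only the monitored feature columns keeps the baseline and target files aligned, so each feature can be compared column by column later.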

Next, you register a reference model in the Vertex AI Model Registry and create the associated Model Monitor. With the Model Monitor, you specify the model to monitor and its model monitoring schema, which lists the feature names and their data types. Because the model is registered as a reference model, the generated model monitoring artefacts are also recorded in Vertex AI ML Metadata for governance and repeatability. You can create the Model Monitor with the Vertex AI SDK for Python.

With the Model Monitor created and the baseline and target datasets prepared, you are ready to define and run the Model Monitoring job. When you define the job, you decide what to monitor and how to monitor the model in case of unexpected behavior. The new Vertex AI Model Monitoring lets you specify multiple monitoring objectives for what needs to be monitored.

With the new Vertex AI Model Monitoring, you can monitor input feature drift, output prediction drift, and feature attribution drift for both numerical and categorical data, each against a threshold that you specify. Depending on the data type of each feature, the difference between the training (baseline) and production (target) feature distributions is measured with Jensen-Shannon divergence or L-infinity distance. An alert is raised whenever the distance for any feature exceeds its threshold.
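Both metrics compare two probability distributions over the same buckets. Jensen-Shannon divergence is JS(P, Q) = ½ KL(P ‖ M) + ½ KL(Q ‖ M) with M = ½ (P + Q); using log base 2 bounds it to [0, 1]. L-infinity distance is the largest absolute difference in probability for any single bucket. The sketch below is a minimal illustration of these textbook definitions plus a threshold check; it assumes distributions are already given as `{bucket: probability}` dicts, and it does not model how Vertex AI buckets numerical features or which metric it assigns to each data type.

```python
import math


def _aligned(p, q):
    """Put two {bucket: probability} dicts on a common, sorted set of buckets."""
    keys = sorted(set(p) | set(q), key=str)
    return [p.get(k, 0.0) for k in keys], [q.get(k, 0.0) for k in keys]


def jensen_shannon(p, q):
    """Jensen-Shannon divergence with log base 2, so the result lies in [0, 1]."""
    p_vals, q_vals = _aligned(p, q)
    m = [(a + b) / 2 for a, b in zip(p_vals, q_vals)]

    def kl(x, y):
        # Terms with x_i == 0 contribute nothing; y_i >= x_i / 2 > 0 otherwise.
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return (kl(p_vals, m) + kl(q_vals, m)) / 2


def l_infinity(p, q):
    """Largest absolute difference in probability for any single bucket."""
    p_vals, q_vals = _aligned(p, q)
    return max(abs(a - b) for a, b in zip(p_vals, q_vals))


def drift_alerts(baseline, target, thresholds, metric):
    """Return {feature: distance} for every feature whose distance exceeds its threshold."""
    alerts = {}
    for feature, limit in thresholds.items():
        distance = metric(baseline[feature], target[feature])
        if distance > limit:
            alerts[feature] = distance
    return alerts


# Usage with made-up categorical distributions: only "plan" drifts past 0.1.
baseline = {"plan": {"basic": 0.7, "premium": 0.3}, "region": {"north": 0.5, "south": 0.5}}
target = {"plan": {"basic": 0.4, "premium": 0.6}, "region": {"north": 0.52, "south": 0.48}}
print(sorted(drift_alerts(baseline, target, {"plan": 0.1, "region": 0.1}, l_infinity)))
# prints ['plan']
```

L-infinity reacts to the single most-shifted bucket, while Jensen-Shannon aggregates shifts across all buckets, which is one reason to choose between them by data type.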

With the new Vertex AI Model Monitoring, you can either run a single monitoring job on demand or schedule recurring jobs for continuous monitoring. You can also receive monitoring results through two supported notification channels: Gmail and Slack.
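The difference between an on-demand run and a recurring schedule can be seen in which time windows get analyzed. The sketch below assumes each run examines the window ending at its run time and that recurring runs happen at a fixed interval; the helper names are hypothetical, and this does not reproduce Vertex AI’s scheduling API.

```python
from datetime import datetime, timedelta


def one_off_window(run_time, window):
    """A single on-demand run covers one window ending at run_time."""
    return [(run_time - window, run_time)]


def recurring_windows(first_run, interval, window, until):
    """Windows analyzed by runs at first_run, first_run + interval, ... up to `until`."""
    windows = []
    run_time = first_run
    while run_time <= until:
        windows.append((run_time - window, run_time))
        run_time += interval
    return windows


# Usage: runs every 6 hours, each looking back 24 hours, over a 12-hour span.
start = datetime(2024, 1, 1, 0, 0)
for begin, end in recurring_windows(start, timedelta(hours=6), timedelta(hours=24),
                                    start + timedelta(hours=12)):
    print(begin, "->", end)
```

With a 24-hour window and a 6-hour interval, consecutive windows overlap by 18 hours, so a shift in the data is typically seen by several runs in a row.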

How Telepass uses the new Vertex AI Model Monitoring to keep an eye on ML models


Telepass has become a prominent player in the fast-changing toll and mobility services market in Italy and several other European countries. In recent years, Telepass made a strategic decision to adopt MLOps to accelerate the development of machine learning solutions. Over the last year, it has successfully deployed a robust MLOps platform that supports multiple ML use cases.

So far, the Telepass team has used this MLOps framework to build, test, and smoothly deploy more than 80 training pipelines that run monthly through continuous deployment. These pipelines cover more than ten use cases, including data-driven customer clustering, propensity modelling for personalised customer interactions, and accurate churn prediction to anticipate customer attrition.

Despite these successes, Telepass recognised that it lacked a system for detecting feature drift, as well as an event-driven retraining mechanism triggered by anomalies in the data distribution. As one of the first users of the new Vertex AI Model Monitoring, Telepass partnered with Google Cloud to address this need, integrating monitoring into its existing MLOps framework to automate the retraining process.

According to Telepass:

“By strategically integrating the new Vertex AI Model Monitoring with our existing Vertex AI infrastructure, our team has reached previously unheard-of levels of model quality assurance and MLOps efficiency. By enabling prompt retraining, we continuously improve our performance and meet or exceed the expectations of our stakeholders.”

Notice of Preview

This product or feature is subject to the “Pre-GA Offerings Terms” in the General Service Terms section of the Service Specific Terms. Pre-GA products and features are available “as is” and might have limited support. For more information, see the launch stage descriptions. Although Vertex AI Model Monitoring is designed to monitor production model serving, wait until it is generally available (GA) before relying on it for business-critical workloads or using it to process private or sensitive data.