Intel Xeon 6 E-core

Intel today unveiled technologies and architectures at Computex that could significantly accelerate the AI ecosystem, from the data centre, cloud, and network to the edge and PC. With more processing power, leading power efficiency, and a low total cost of ownership (TCO), customers can now take advantage of the full potential of AI systems.

AI Data Centres Benefit from Intel Xeon 6 Processors

As digital transformations pick up speed, companies are under increasing pressure to refresh their ageing data centre systems in order to cut costs, meet sustainability targets, maximise physical floor and rack space, and develop new digital capabilities throughout the organisation.

The Xeon 6 platform and processor family were designed with these challenges in mind. Both E-core (Efficient-core) and P-core (Performance-core) SKUs are available to address a wide range of use cases and workloads, from AI and other high-performance compute needs to scalable cloud-native applications. E-cores and P-cores are built on a compatible architecture with a shared software stack and an open ecosystem of hardware and software vendors.

The Intel Xeon 6 E-core, code-named Sierra Forest, is the first of the Xeon 6 processors to launch and is available starting today. The Xeon 6 P-core processors, code-named Granite Rapids, are expected to launch next quarter.

With high core density and strong performance per watt, the Intel Xeon 6 E-core processor delivers efficient compute at significantly lower energy cost. That combination of better performance and higher power efficiency suits the most demanding high-density, scale-out workloads, including cloud-native applications, content delivery networks, network microservices, and consumer digital services.

The density advantages of Intel Xeon 6 E-core also enable rack-level consolidation of 3-to-1, giving customers a rack-level performance gain of up to 4.2x and a performance-per-watt gain of up to 2.6x compared with 2nd Gen Intel Xeon processors on media transcode workloads. By using less power and rack space, Xeon 6 processors free up compute capacity and infrastructure for new AI projects.
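
To make the consolidation figures concrete, here is a minimal Python sketch of the arithmetic. It uses only the three "up to" numbers quoted above; the fleet size of 200 racks is a hypothetical input, and the implied power ratio assumes both gains were measured on the same rack configuration, which the article does not confirm.

```python
import math

# "Up to" figures quoted in the article (vs. 2nd Gen Xeon, media transcode).
CONSOLIDATION_RATIO = 3     # 3-to-1 rack consolidation
RACK_PERF_GAIN = 4.2        # rack-level performance gain
PERF_PER_WATT_GAIN = 2.6    # performance-per-watt gain


def racks_after_consolidation(old_racks: int, ratio: int = CONSOLIDATION_RATIO) -> int:
    """Racks needed after consolidation, rounded up to whole racks."""
    return math.ceil(old_racks / ratio)


def implied_rack_power_ratio(perf_gain: float = RACK_PERF_GAIN,
                             ppw_gain: float = PERF_PER_WATT_GAIN) -> float:
    """Since performance = (performance per watt) * power,
    the power ratio is perf_gain / ppw_gain."""
    return perf_gain / ppw_gain


if __name__ == "__main__":
    old_racks = 200  # hypothetical fleet size, not from the article
    print("Racks after consolidation:", racks_after_consolidation(old_racks))
    print("Implied per-rack power ratio:", round(implied_rack_power_ratio(), 2))
```

Because the quoted gains are upper bounds, the results are best read as a best-case estimate rather than a sizing guarantee.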

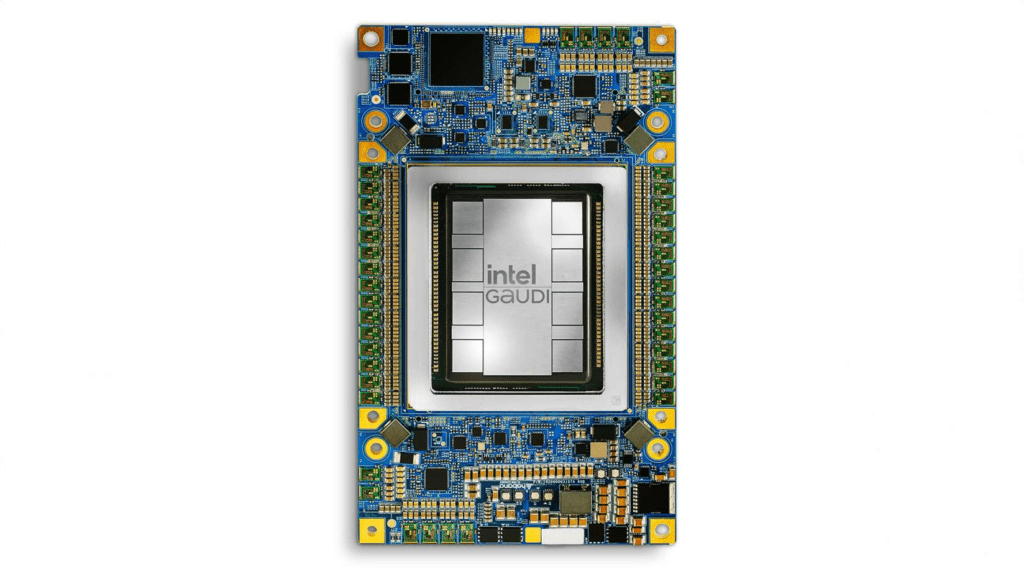

Intel Gaudi AI Accelerators Improve GenAI Performance at Lower Cost

Generative AI is becoming faster and cheaper to use. x86, the industry standard for infrastructure, runs at scale in nearly all data centre environments. It provides the foundation for integrating AI capabilities while ensuring cost-effective interoperability and the benefits of an open ecosystem of developers and customers.

Intel Xeon processors are the ideal CPU head node for AI workloads when paired with Intel Gaudi AI accelerators, which are purpose-built for AI. Together, the two offer a powerful solution that integrates into existing infrastructure.

For training and inference of large language models (LLMs), the Gaudi architecture is the only MLPerf-benchmarked alternative to Nvidia H100. It gives customers the GenAI performance they want with a price-performance advantage that provides choice, fast deployment, and a lower total cost of operation.

A standard AI kit with eight Intel Gaudi 2 accelerators and a universal baseboard (UBB) is offered to system providers at $65,000, which is estimated to be one-third the cost of comparable competitive platforms. A kit with eight Intel Gaudi 3 accelerators and a UBB will list at $125,000, estimated to be two-thirds the cost of comparable competitive platforms.
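
As a quick illustration of what those kit prices mean per device, the following Python sketch divides each list price by the eight accelerators in the kit. The prices and the accelerator count come from the paragraph above; the function name is illustrative, and the result folds the cost of the UBB into each accelerator because the article does not break it out.

```python
ACCELERATORS_PER_KIT = 8

# Kit list prices in US dollars, as quoted in the article.
KIT_PRICES_USD = {"Gaudi 2": 65_000, "Gaudi 3": 125_000}


def price_per_accelerator(kit_price_usd: float,
                          accelerators: int = ACCELERATORS_PER_KIT) -> float:
    """Average kit cost per accelerator (baseboard cost included)."""
    return kit_price_usd / accelerators


for name, price in KIT_PRICES_USD.items():
    print(f"{name}: ${price_per_accelerator(price):,.0f} per accelerator")
```

These are kit-level averages for system providers, not standalone accelerator prices.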

Intel Gaudi 3 accelerators will deliver notable performance gains for training and inference on leading GenAI models, helping businesses extract more value from their proprietary data. Intel Gaudi 3 in an 8,192-accelerator cluster is projected to offer up to 40% faster time-to-train than an equivalent-size Nvidia H100 GPU cluster, and up to 15% faster training throughput in a 64-accelerator cluster versus Nvidia H100 on the Llama2-70B model. Intel Gaudi 3 is also projected to offer up to 2x faster inferencing on average than Nvidia H100 when running widely used LLMs such as Llama-70B and Mistral-7B.

To make these AI systems widely available, Intel is working with at least ten of the leading global system providers, including six new providers that have announced they will bring Intel Gaudi 3 to market. New partners Asus, Foxconn, Gigabyte, Inventec, Quanta, and Wistron expand the production offerings already available from leading system providers Dell, HPE, Lenovo, and Supermicro.

Revolutionary Laptop AI Architecture Triples AI Compute and Improves Power Efficiency

Intel is expanding its AI footprint beyond the data centre, both at the edge and in the PC. With more than 90,000 edge deployments and more than 200 million CPUs delivered to the ecosystem, Intel has enabled enterprise choice for decades.

The AI PC category is transforming every facet of the computing experience, and Intel is at the forefront of this category-creating moment. It is no longer just about faster processing speeds or sleeker designs. The goal now is to create edge devices that learn and adapt in real time, anticipating user needs and preferences and ushering in a new era of productivity, efficiency, and creativity.

According to Boston Consulting Group, AI PCs are expected to account for 80% of the PC market by 2028. Intel has moved quickly to provide the best hardware and software platform for the AI PC, enabling more than 100 independent software vendors (ISVs), 300 features, and support for 500 AI models across its Core Ultra platform.

Building on these advantages, the company today revealed the architecture of Lunar Lake, the flagship processor for the next generation of AI PCs. With a significant leap in graphics and AI processing power and a focus on power-efficient compute performance for the thin-and-light segment, Lunar Lake is expected to deliver up to 40% lower SoC power and more than three times the AI compute. It is expected to ship in the third quarter of 2024, in time for the holiday shopping season.

Lunar Lake's all-new architecture will include:

- New Performance-cores (P-cores) and Efficient-cores (E-cores) that improve performance and energy efficiency.

- A fourth-generation Intel NPU with up to 48 tera-operations per second (TOPS) of AI performance. This NPU delivers up to 4x the AI compute of the previous generation, enabling corresponding improvements in generative AI.

- The Battlemage GPU design, which combines new Xe2 GPU cores for graphics with Xe Matrix Extension (XMX) arrays for AI. The Xe2 GPU cores improve gaming and graphics performance by 1.5x over the previous generation, while the new XMX arrays act as a second AI accelerator with up to 67 TOPS for high throughput in AI content creation.

- An advanced low-power island, a novel compute cluster, and Intel innovation that handles background and productivity tasks very efficiently, enabling long laptop battery life.
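
For a rough sense of how the two AI engines in the list compare, the short Python sketch below adds the quoted peak TOPS figures and reports each engine's share. Only the 48 and 67 TOPS values come from the article; summing peak figures is a simplifying assumption, since the article does not say whether the two numbers use the same precision or whether both engines can run at peak together.

```python
# Peak AI throughput quoted in the article, in tera-operations per second.
PEAK_TOPS = {"NPU": 48, "GPU (XMX)": 67}


def combined_peak_tops(engines: dict[str, int]) -> int:
    """Naive upper bound: sum of per-engine peak TOPS."""
    return sum(engines.values())


def share_of_total(engines: dict[str, int]) -> dict[str, float]:
    """Each engine's fraction of the summed peak, as a percentage."""
    total = combined_peak_tops(engines)
    return {name: 100 * tops / total for name, tops in engines.items()}


total = combined_peak_tops(PEAK_TOPS)
print(f"NPU + GPU peak: {total} TOPS")
for name, pct in share_of_total(PEAK_TOPS).items():
    print(f"  {name}: {pct:.1f}% of the combined peak")
```

The sum covers only the NPU and GPU figures listed here and should not be read as an official platform total.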

While others prepare to enter the AI PC market, Intel is already shipping at scale, delivering more AI PC processors through the first quarter of 2024 than all competitors combined. Lunar Lake will power more than 80 distinct AI PC designs from 20 original equipment manufacturers (OEMs). Intel expects to ship more than 40 million Core Ultra processors this year.