As large language models (LLMs) have become widely used, users have learned how to use the apps that access them. Artificial intelligence systems can now generate, synthesize, translate, and classify content, and even hold conversations. After learning from existing artefacts, tools in the generative AI field can create responses to prompts.

The edge and constrained-device space has not seen much innovation. Although some local AI apps on mobile devices include language translation capabilities, the industry is still a long way from the point where LLMs can be run profitably without relying on cloud providers.

Smaller Language Models (SLMs)

Smaller models, on the other hand, might revolutionise next-generation AI capabilities for mobile devices. Let’s explore these possibilities through the lens of a hybrid artificial intelligence paradigm.

The fundamentals of LLMs

LLMs are the special class of AI models driving this new paradigm, and natural language processing (NLP) is what makes them possible. Developers train LLMs on vast amounts of data from multiple sources, including the internet. Their size comes from their billions of parameters.

Although LLMs have broad subject-matter knowledge, that knowledge is limited to their training data. This means they are not always up to date or precise. Because of their size, LLMs are usually hosted in the cloud, which requires robust hardware with numerous GPUs.

This means that businesses cannot use LLMs out of the box to mine information from their proprietary or confidential business data. To produce summaries, briefings, and answers to specific questions, they need either to build their own models or to combine their data with public LLMs. Retrieval-augmented generation (RAG) is a technique for adding one’s own data to an LLM: a general AI design pattern that enriches the LLM’s prompt with external data.
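To make the RAG pattern more concrete, here is a minimal sketch of its retrieval and augmentation steps. It assumes a tiny in-memory list of hypothetical enterprise documents and uses simple bag-of-words cosine similarity in place of the vector embeddings and vector database a production system would typically use; the actual LLM call is deliberately left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-house documents standing in for proprietary enterprise data.
DOCUMENTS = [
    "Cell site 42 reported high packet loss during the evening peak.",
    "The 5G spectrum refarming plan frees 20 MHz in the 3.5 GHz band.",
    "Customer churn rose after the billing system migration.",
]

def tokenize(text: str) -> Counter:
    """Lower-case bag-of-words representation of a text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]

def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Augment the user's question with retrieved context before it reaches the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("Why is there packet loss at cell site 42?", DOCUMENTS)
print(prompt)  # this augmented prompt would then be sent to an LLM (not shown)
```

The key design point is that the model itself is unchanged: the enterprise data reaches it only through the prompt, so the data can stay in the company’s own store.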

Small Language Models

Is smaller better?

Businesses in specialised industries, such as telecoms, healthcare, or oil and gas, are laser-focused on their own domains. Although typical gen AI scenarios and use cases can benefit them, smaller models may be more appropriate for their needs.

Common use cases in the telecom industry include personalised offers in service delivery, AI-powered chatbots for a better customer experience, and AI assistants in contact centres. Use cases that help telcos improve network performance, increase spectral efficiency in 5G networks, or pinpoint specific bottlenecks in their networks are best served by models built on enterprise data rather than by a public LLM.

This suggests that smaller may be better. Small Language Models (SLMs), which are “smaller” than LLMs, are now available. Whereas LLMs have hundreds of billions of parameters, SLMs have tens of billions. What’s more, SLMs are trained on data specific to a given domain. Although they have less general contextual knowledge, they excel in their chosen domain.

Because of their reduced size, these models can be hosted in an enterprise’s data centre rather than in the cloud. SLMs may even run at scale on a single GPU, saving thousands of dollars in annual compute costs. However, as chip design advances, the line between applications that should run only in a corporate data centre and those that belong in the cloud is becoming harder to draw.
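A rough back-of-the-envelope calculation shows why parameter count decides whether a model fits on one GPU. The sketch below estimates only the memory needed to hold the weights (parameters multiplied by bytes per parameter). The 7-billion and 175-billion parameter sizes and the 80 GB GPU are illustrative assumptions, not figures from this article, and real deployments need extra memory for activations, the KV cache, and runtime overhead.

```python
GPU_MEMORY_GB = 80  # assumption: one data-centre GPU with 80 GB of memory

def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GB (1e9 bytes).

    Ignores activations, KV cache and runtime overhead, so real usage is higher.
    """
    return num_params * bytes_per_param / 1e9

def fits_on_one_gpu(num_params: float, bytes_per_param: float) -> bool:
    """True if the weights alone fit in the assumed single-GPU memory budget."""
    return weight_memory_gb(num_params, bytes_per_param) <= GPU_MEMORY_GB

# Illustrative sizes: a 7-billion-parameter SLM and a 175-billion-parameter LLM.
for name, params in [("7B SLM", 7e9), ("175B LLM", 175e9)]:
    for precision, nbytes in [("fp16", 2), ("int8", 1)]:
        gb = weight_memory_gb(params, nbytes)
        print(f"{name} @ {precision}: ~{gb:.0f} GB, fits on one GPU: {fits_on_one_gpu(params, nbytes)}")
```

Under these assumptions the 7B model needs about 14 GB at 16-bit precision, leaving plenty of headroom, while the 175B model needs about 350 GB and must be split across several GPUs even at 8-bit precision.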

Businesses may want to run these SLMs in their own data centres for several reasons, including cost, data protection, and data sovereignty. Many businesses prefer not to store their data in the cloud. Performance is another important factor: running gen AI inference and compute at the edge, as close to the data as possible, can be faster and more secure than going through a cloud provider.

It is important to remember that SLMs are well suited to deployment on mobile devices and in resource-constrained environments because they demand less processing power.

An IBM Cloud Satellite location, which has a secure, high-speed connection to the IBM Cloud hosting the LLMs, could serve as an example of an on-premises location. Telcos could host these SLMs at their base stations and offer this option to their customers. The key is making the best use of GPUs close to the data, which reduces the distance data must travel and improves effective bandwidth.
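The benefit of shortening the distance data travels can be estimated from propagation delay alone. This sketch assumes light travels roughly 200 km per millisecond in optical fibre and uses two hypothetical distances: a cloud region 1,000 km away and a base station 10 km away. It ignores routing detours, queuing, and model compute time, so it gives a lower bound on latency, not a prediction.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fibre (about 2/3 of c)

def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fibre, ignoring routing, queuing and compute."""
    return 2 * distance_km / FIBER_KM_PER_MS

cloud_rtt = round_trip_ms(1000)  # hypothetical distant cloud region
edge_rtt = round_trip_ms(10)     # hypothetical nearby base station
print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.1f} ms")
```

Even in this idealised case, the distant region adds about 10 ms per round trip versus about 0.1 ms for the nearby site, and an interactive application may make many such round trips.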

To what extent can you shrink?

Let us return to the initial question about whether these models can run on mobile devices. The mobile device could be a car, a robot, or even a high-end smartphone. Device manufacturers have found that running LLMs requires a large amount of bandwidth. Smaller variants known as “tiny LLMs” can run locally on medical equipment and smartphones.

Developers build these models using methods such as low-rank adaptation (LoRA), which keeps the number of trainable parameters low while letting users fine-tune models to specific requirements. There is even a TinyLlama project on GitHub.
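The idea behind low-rank adaptation can be shown on a single linear layer. In this sketch (using NumPy, with illustrative layer sizes not tied to any particular model), the pretrained weight `W` stays frozen and only two small matrices `A` and `B` would be trained; their product is added to the layer’s output, scaled by `alpha / r`. Following the usual LoRA initialisation, `B` starts at zero so the adapted layer initially behaves exactly like the base layer. The training loop itself is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

d_in, d_out, r, alpha = 4096, 4096, 8, 16  # illustrative sizes, not from a specific model

W = rng.standard_normal((d_out, d_in)).astype(np.float32)       # frozen pretrained weight
A = (rng.standard_normal((r, d_in)) * 0.01).astype(np.float32)  # trainable, small random init
B = np.zeros((d_out, r), dtype=np.float32)                      # trainable, zero init

def lora_forward(x: np.ndarray) -> np.ndarray:
    """Base projection plus the scaled low-rank update (alpha / r) * B A."""
    return x @ W.T + (alpha / r) * ((x @ A.T) @ B.T)

full_params = W.size          # trainable parameters if the whole layer were fine-tuned
lora_params = A.size + B.size # trainable parameters with LoRA
print(f"full fine-tuning: {full_params:,} trainable parameters")
print(f"LoRA (r={r}): {lora_params:,} trainable parameters "
      f"({100 * lora_params / full_params:.2f}% of full)")

x = rng.standard_normal((2, d_in)).astype(np.float32)
# With B initialised to zero, the adapted layer starts out identical to the base layer.
assert np.allclose(lora_forward(x), x @ W.T)
```

For this layer, LoRA trains `r * (d_in + d_out)` parameters instead of `d_in * d_out`, well under 1% here, which is what makes fine-tuning feasible on modest hardware.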

Chip manufacturers are developing chips that can run trimmed-down versions of LLMs, using techniques such as knowledge distillation and image diffusion. Edge devices carry out gen AI tasks with the help of neural processing units (NPUs) and systems-on-chip (SoCs).
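Knowledge distillation trains a small “student” model to mimic a larger “teacher”. A minimal sketch of the commonly used distillation loss follows: both models’ logits are softened with a temperature, and the loss is the KL divergence from the teacher’s distribution to the student’s, scaled by the square of the temperature. The logits are made-up example values, and in practice this term is usually combined with an ordinary cross-entropy loss on the true labels.

```python
import numpy as np

def log_softmax(logits, temperature: float = 1.0) -> np.ndarray:
    """Numerically stable log-softmax of temperature-scaled logits."""
    z = np.asarray(logits, dtype=np.float64) / temperature
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    p_teacher = np.exp(log_p_teacher)
    kl = np.sum(p_teacher * (log_p_teacher - log_p_student), axis=-1)
    return float(np.mean(kl) * temperature ** 2)

# Made-up logits for a batch of one example over three classes.
teacher = [[4.0, 1.0, 0.5]]
student = [[2.0, 1.5, 1.0]]
print(distillation_loss(student, teacher))
```

The temperature exposes how the teacher ranks the “wrong” classes, which carries more information than a single hard label; minimising this loss pushes the student toward the teacher’s behaviour with far fewer parameters.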

Solution architects should take the current state of technology into account, even if some of these ideas are not yet in production. Using SLMs and LLMs together could be a good approach. To offer a customised client experience, businesses can choose to develop their own AI models or use the smaller, more specialised models already available for their sector.

Hybrid AI

Could hybrid AI be the solution?

Although small LLMs on mobile edge devices are appealing and running SLMs on-premises looks feasible, what if the model needs a larger corpus of data to respond to certain prompts?

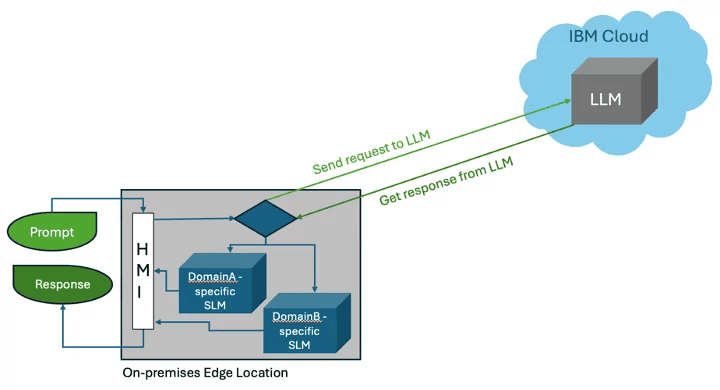

Hybrid cloud computing offers the best of both worlds. Could the same principle apply to AI models? The accompanying graphic illustrates this idea.

Where smaller models fall short, a hybrid AI model could make it possible to draw on LLMs in the public cloud. It makes sense to enable this kind of technology. Businesses could use domain-specific SLMs to keep their data protected on-premises and access LLMs hosted in the public cloud when needed. As mobile devices with SoCs become more capable, distributing generative AI workloads this way appears to be a more efficient approach.
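One way to picture the hybrid approach is a router that tries the on-premises SLM first and escalates to a cloud LLM only when the SLM is unsure and the data is allowed to leave the premises. The sketch below is purely illustrative: the `Answer` type, the confidence score, the 0.7 threshold, and the stub models `fake_slm` and `fake_llm` are all hypothetical stand-ins, not an IBM or watsonx API.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Answer:
    text: str
    confidence: float  # 0..1, assumed to be reported by a hypothetical model wrapper
    source: str

def route(prompt: str,
          local_slm: Callable[[str], Answer],
          cloud_llm: Callable[[str], Answer],
          threshold: float = 0.7,
          contains_sensitive_data: bool = False) -> Answer:
    """Try the on-premises SLM first; escalate to the cloud LLM only when allowed and needed."""
    local = local_slm(prompt)
    # Sensitive data never leaves the premises, even if the local answer is weak.
    if local.confidence >= threshold or contains_sensitive_data:
        return local
    return cloud_llm(prompt)

# Hypothetical stubs: the "SLM" is confident only about telecom-domain prompts.
DOMAIN_TERMS = {"cell", "site", "spectrum", "5g", "handover"}

def fake_slm(prompt: str) -> Answer:
    words = set(prompt.lower().split())
    confidence = 0.9 if words & DOMAIN_TERMS else 0.3
    return Answer(f"[SLM] answer to: {prompt}", confidence, "on-prem SLM")

def fake_llm(prompt: str) -> Answer:
    return Answer(f"[LLM] answer to: {prompt}", 0.8, "cloud LLM")

print(route("Why did handover fail at cell 12?", fake_slm, fake_llm).source)
print(route("Summarise recent EU AI regulation", fake_slm, fake_llm).source)
```

A real deployment would need a more reliable signal than a keyword match for deciding when to escalate, but the structure (local first, cloud as fallback, data-residency rules as a hard constraint) reflects the split described above.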

IBM recently announced that the open source Mistral AI model is now available on its watsonx platform. This compact LLM needs fewer resources to run than traditional LLMs, yet it is just as effective and performs better. IBM also introduced the Granite 7B model, part of its curated, trustworthy family of foundation models.

IBM argues that instead of trying to build their own generic LLMs, which they can readily access from multiple providers, enterprises should concentrate on building small, domain-specific models with internal enterprise data to differentiate their core competency and draw on insights from their data.

Bigger is not always better

One industry that could benefit greatly from this hybrid AI strategy is telecoms. Telcos play a special role because they can act as both providers and consumers. Healthcare, oil rigs, logistics firms, and other businesses may face similar situations. Are telcos ready to use gen AI effectively? They certainly have a tonne of data, but do they have a time-series model that fits it?

IBM takes a multimodel approach to AI in order to support every possible use case. Bigger isn’t necessarily better: specialised models can outperform general-purpose models while requiring less infrastructure.