Introduction

High-resiliency SSD architecture requires additional components, such as FDP and Latency Monitor.

Micron recently published two blog posts on seemingly unrelated topics but, after speaking with colleagues in the industry, has come to the conclusion that they are more closely related than initially thought. This post examines latency mitigation in more detail, and shows how NVM Express’s Flexible Data Placement (FDP) and the OCP Storage Workgroup’s Latency Monitor (LM) can both be viewed as ingredients of vertically integrated high resiliency.

Review: Vertical Integration in Resilience

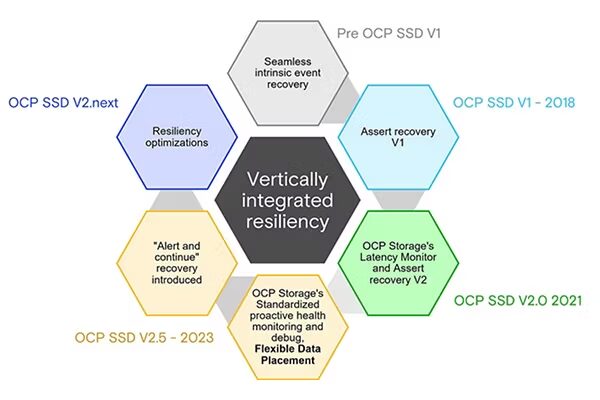

In the blog post “Working to revolutionize SSD resiliency with shift-left approach,” Micron summarized every host and storage device component that contributes to high resiliency. Resilience components have been added gradually, from the first release of the OCP SSD specification, Version 1, through today’s third release, Version 2.5. That post covered panic recovery, panic detection, and standardized telemetry. This post looks more closely at two of the components: 1) Flexible Data Placement and 2) Latency Monitor.

NVM Express FDP reduces tail latency, improving Vertically Integrated Resiliency

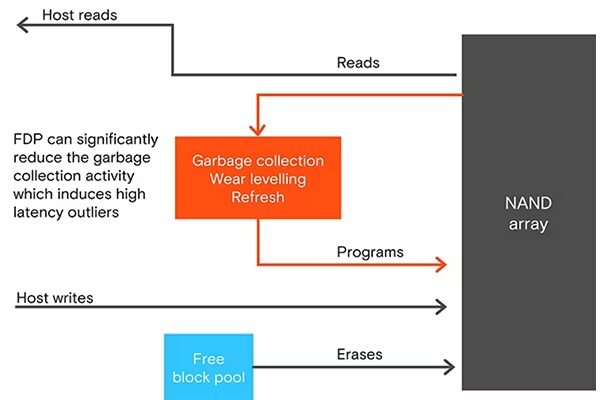

That FDP increases SSD resilience is intuitive: reducing write amplification extends the endurance available to host applications. Micron and its colleagues have discussed this at length, both in the industry and in earlier Micron blog posts. Those discussions also showed a variety of settings in which Flexible Data Placement improves performance. This is not surprising: with less garbage collection, the industry’s real-world workloads can perform better.

Flexible Data Placement (FDP) is an NVMe feature proposed by Google and Meta. It reduces write amplification (WA) when multiple applications write, modify, and read data on the same device. For these companies, lower WA brings two benefits: more usable capacity and potentially longer device life.
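As a rough illustration of why lower WA translates into longer device life, the sketch below computes WA as NAND bytes written divided by host bytes written. The function name and the counter values are hypothetical, chosen only to make the ratio concrete; they are not measurements from any drive.

```python
def write_amplification(host_bytes: int, nand_bytes: int) -> float:
    """Return NAND bytes written per host byte written (1.0 is the ideal)."""
    if host_bytes <= 0:
        raise ValueError("host_bytes must be positive")
    return nand_bytes / host_bytes


# Hypothetical counter readings (in TB), chosen only to illustrate the ratio.
HOST_TB = 100
wa_interleaved = write_amplification(HOST_TB, 250)  # mixed application data
wa_separated = write_amplification(HOST_TB, 100)    # each application isolated

# With a fixed NAND write budget, host-visible endurance scales with 1 / WA.
endurance_gain = wa_interleaved / wa_separated

print(f"WA interleaved: {wa_interleaved:.2f}")
print(f"WA separated:   {wa_separated:.2f}")
print(f"Host endurance gain from lower WA: {endurance_gain:.2f}x")
```

Under these assumed numbers, the same NAND write budget absorbs 2.5 times as many host writes once the data is separated.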

We devised an experiment to test FDP’s usefulness. The test runs simultaneous fio (flexible I/O tester) workloads on a 7.68TB Micron 7450 PRO SSD partitioned into four equal (1.92TB) namespaces. The sequential write workloads differ in block size (4K, 16K, 64K, 256K). We also ran identical workloads separately on four 1.92TB Micron 7450 PRO SSDs, which we treat as the best-case FDP implementation because all application data has dedicated NAND space and is not interleaved.

Can FDP also help mitigate latency outliers, and is it therefore an element of Vertically Integrated Resiliency? An earlier Micron blog post, “Why latency in data centre SSDs matters and how Micron became best in class,” explained why latency outliers cause severe performance problems for scale-out solutions and described the methods Micron used to deliver a world-class solution. It did not, however, consider vertical integration, or how a vertically integrated FDP solution would reduce latency even further.

Because the primary function of FDP is to reduce garbage collection (GC), it is logical to expect that Flexible Data Placement would also reduce latency outliers. Latency variation will of course still occur even with no GC and a write amplification factor (WAF) of 1, because reads still contend with host-initiated programs and erases on shared channels, dies, and planes. Nevertheless, the chance of collisions, and with it the impact of latency outliers, is greater when the write amplification is roughly 2 to 3 or higher. To what extent, though, can FDP improve latency outliers?

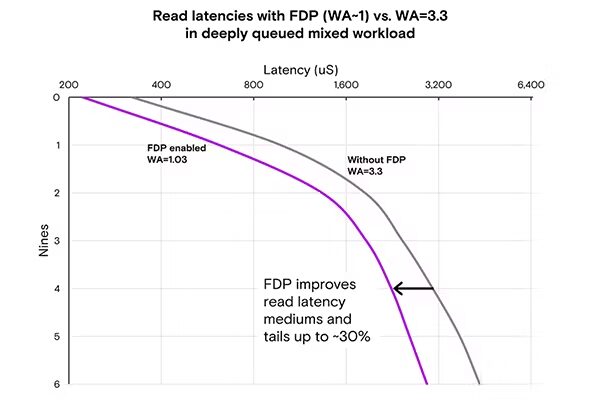

Let’s examine a full-pressure 4KiB random workload with 70% reads and 30% writes, and plot read latency distributions using the industry-standard methods covered in depth in Micron’s latency blog post. Measuring the non-FDP case is simple and follows industry-standard practice. To measure a proxy for the FDP best case, a new namespace was created and only a portion of the total capacity was preconditioned before running the 70/30 workload. In this instance, an 8TB Micron 7500 SSD was preconditioned to 1TB, and IOs were restricted to that 1TB (to avoid reading unmapped LBAs) until the physical media filled and GC began.

Figure 3 shows the read latency distribution first without any write pressure and then with continuous write pressure. Because numerous earlier studies have shown that WA with Flexible Data Placement is close to 1, this result suggests that FDP can reduce tail latency variation by up to 30%.

The Latency Monitor of the OCP Storage Workgroup improves SSD resilience

The earlier Micron blog post on latency mitigation described how tail latency, although rare, has a high impact on overall delay when a database query is divided into several sub-queries that execute in parallel. That post also covered the difficulty of identifying and resolving the sources of latency outliers. What it did not cover is how to prove that the latency outliers are as expected.
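The fan-out effect can be illustrated with a short calculation. This is a minimal sketch that assumes each sub-query independently hits a latency outlier with the same probability, which real workloads only approximate; the function name and the 1-in-1000 outlier rate are illustrative assumptions, not figures from the earlier post.

```python
def prob_query_delayed(sub_queries: int, p_outlier: float) -> float:
    """Probability that at least one of the parallel sub-queries is an outlier.

    Assumes each sub-query independently hits an outlier with probability
    p_outlier; the query finishes only when its slowest sub-query does.
    """
    return 1.0 - (1.0 - p_outlier) ** sub_queries


# Illustrative values only: a 1-in-1000 outlier rate per sub-query.
for fan_out in (1, 10, 100, 1000):
    share = prob_query_delayed(fan_out, 0.001)
    print(f"{fan_out:>5} sub-queries -> {share:.1%} of queries delayed")
```

Even a rare per-command outlier becomes a common per-query event once the fan-out is large, which is why the tail rather than the average tends to dominate scale-out response time.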

At the system level, tools such as fio can timestamp each transaction and build the necessary latency histograms. In practice, however, generating real-time system-level block traces and searching them for outliers would be prohibitively expensive computationally, given that modern SSDs can reach millions of IOPS. And even if it were feasible, the SSD debug information might be lost by the time the host notices the outlier and issues a Telemetry Host-Initiated request for the required debug data.

This was encountered first-hand while working on the FMS 2017 article, which showed that the SSD controller had sufficient processing capacity to monitor latency: it recorded the arrival and departure times of each command, produced detailed histograms, and triggered asserts when values were excessive. Internal data was then captured for later extraction and analysis. All of the data shown was produced internally by the SSD.

Version 2 of the OCP Datacenter NVMe SSD Specification introduced the SSD Latency Monitor, formalizing the concept based on Meta’s work and certain important requirements. In summary, every command is timestamped at egress, and that timestamp is compared with the command’s ingress timestamp to determine its latency. The resulting latencies are counted in host-configurable histogram buckets. To avoid the delay of waiting for the host to request a debug log, an internal debug log is saved for later vendor inspection as soon as a command exceeds a latency threshold at egress. Once OCP Storage’s Standardized Telemetry is implemented, latency anomalies can be debugged with even more information.
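The mechanism described above can be sketched in a few lines. This is a toy model under stated assumptions, not the OCP log page layout or any vendor’s firmware: the class name, method names, bucket limits, and threshold are all hypothetical, and the "debug snapshot" is a stand-in for the internal debug log.

```python
from bisect import bisect_left


class LatencyMonitorSketch:
    """Toy model of device-side latency monitoring (not the OCP log layout)."""

    def __init__(self, bucket_upper_bounds_us, debug_threshold_us):
        # Host-configurable bucket limits, ascending; one extra overflow bucket.
        self.bounds = sorted(bucket_upper_bounds_us)
        self.counts = [0] * (len(self.bounds) + 1)
        self.debug_threshold_us = debug_threshold_us
        self.ingress_us = {}       # command id -> ingress timestamp
        self.debug_snapshots = []  # stand-in for the saved internal debug log

    def on_ingress(self, command_id, now_us):
        self.ingress_us[command_id] = now_us

    def on_egress(self, command_id, now_us):
        latency_us = now_us - self.ingress_us.pop(command_id)
        # bisect_left makes each upper bound inclusive.
        self.counts[bisect_left(self.bounds, latency_us)] += 1
        if latency_us > self.debug_threshold_us:
            # Capture state immediately, before the host even knows to ask.
            self.debug_snapshots.append(
                {"command_id": command_id, "latency_us": latency_us}
            )
        return latency_us


monitor = LatencyMonitorSketch([100, 1_000, 10_000], debug_threshold_us=5_000)
for command_id, ingress, egress in [(1, 0, 80), (2, 10, 510), (3, 20, 20_020)]:
    monitor.on_ingress(command_id, ingress)
    monitor.on_egress(command_id, egress)

print(monitor.counts)
print(monitor.debug_snapshots)
```

The point of the design is that the comparison and the snapshot both happen on the device at egress, so the evidence is captured while it still exists rather than after a host round trip.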

At the Storage Developer Conference in 2022, Vineet Parekh and Venkat Ramesh from Meta spoke about integrating Latency Monitor into their fleet and gave an example of a major problem they found. They observed that if every SSD in their fleet, operating at 1000 IOPS, had one-second outliers at the 9-nines level, the result would be over 5000 latency incidents every day! Parekh and Ramesh also described a problematic SSD that was successfully debugged using Latency Monitor; before Latency Monitor was deployed, its latency outlier issue had gone unsolved for months.
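The arithmetic behind that observation can be reconstructed roughly as follows. This sketch assumes that "9-nines" means one command in a billion exceeds the one-second limit; the fleet size is not given here, so the code only back-solves the number of drives that would be consistent with 5000 incidents a day. The function names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def outliers_per_drive_per_day(iops: float, p_outlier: float) -> float:
    """Expected outlier commands on one drive in one day."""
    return iops * SECONDS_PER_DAY * p_outlier


def drives_implied(incidents_per_day: float, iops: float, p_outlier: float) -> float:
    """Fleet size at which the expected daily outliers reach incidents_per_day."""
    return incidents_per_day / outliers_per_drive_per_day(iops, p_outlier)


# Assumption: "9-nines" is read as a 1e-9 chance per command of a 1 s outlier.
per_drive = outliers_per_drive_per_day(iops=1000, p_outlier=1e-9)
fleet = drives_implied(incidents_per_day=5000, iops=1000, p_outlier=1e-9)

print(f"Expected outliers per drive per day: {per_drive:.4f}")
print(f"Drives implied by 5000 incidents/day: {fleet:,.0f}")
```

A single drive would see such an outlier less than once every ten days under these assumptions, so the daily incident count is a property of fleet scale rather than of any individual SSD.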

Another advantage of Latency Monitor is that it exposes host latency problems that were previously attributed to the storage device. Two recent examples come to mind. One was a Linux issue involving latency outliers that led to an enhancement of the NVMe specification. The other was covered in a blog post by Micron’s Sayali Shirode, who used Latency Monitor to demonstrate that a measured latency anomaly was NOT caused by the SSD in question.

In conclusion

Micron is embracing the architecture and design of high-resiliency SSDs. Its November 2023 blog post discussed why a “shift left” is necessary for a resilience revolution. This ecosystem requires measurement and detection as well as vertical integration that covers host and vendor interactions. Some elements, such as panic recovery, panic reporting, and a significant reduction in tail latencies, have been discussed before. This post set out to draw attention to two components that bring these two concepts together.

- To remove latency outliers from SSD devices and the software ecosystem as a whole, OCP’s Latency Monitor feature is essential.

- Data placement, particularly Flexible Data Placement, improves performance and endurance, and also reduces latency outliers by up to 30%.

Two more closing observations

- I’m facilitating a Hyperscale Applications session at FMS, where Meta will share more about their experience deploying Latency Monitor at scale. Please come along. Micron also hopes to have some great discussions about FDP at conferences this autumn.

- The vendor-neutral OCP Storage plugin for NVMe-CLI makes it straightforward to configure and report on Latency Monitor and Flexible Data Placement (FDP), and supports other important functions such as decoding standardized telemetry.