How can you be certain that your large language models (LLMs) are producing the intended outcomes? How do you pick the best model for your use case? Evaluating the output of generative AI models is difficult. Although these models are highly effective across many different tasks, it is important to know how well they work in your particular use case.

An overview of a model’s capabilities can be found in leaderboards and technical reports, but selecting the right model for your requirements and assigning it to the right tasks requires a customised evaluation strategy.

In response to this difficulty, Google Cloud launched the Vertex Gen AI Evaluation Service, a toolset of well-tested and easily understandable evaluation techniques that you can tailor to your particular use case. It helps you make informed choices at every stage of the development process so that your LLM applications perform as well as they can.

This blog article describes the Vertex Gen AI Evaluation Service, demonstrates how to use it, and shows how Generali Italia used the Gen AI Evaluation Service to implement a RAG-based LLM application.

Using the Vertex Gen AI Evaluation Service to Assess LLMs

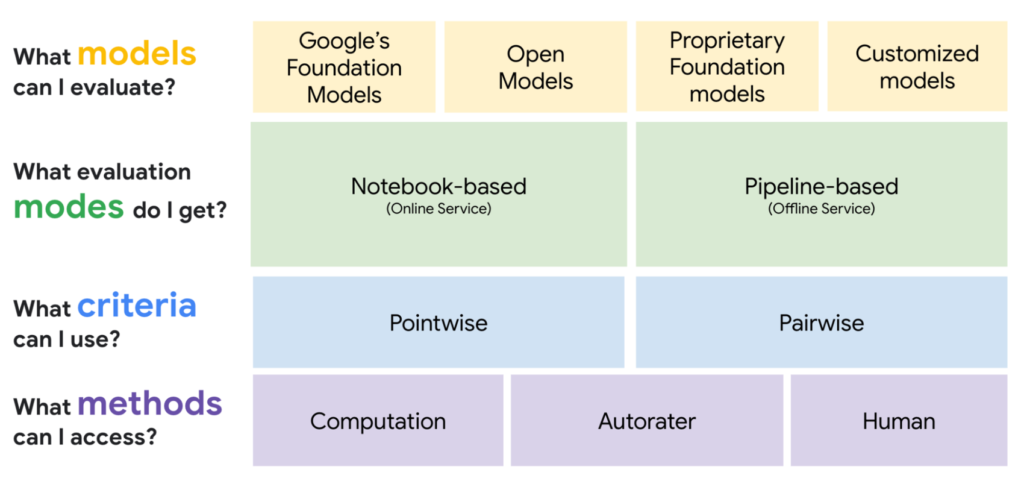

The Gen AI Evaluation Service lets you assess any model with Google Cloud's extensive collection of quality-controlled and explainable evaluators, in either an interactive or an asynchronous evaluation mode. The following sections go into greater depth on these three dimensions: the evaluation mode, the evaluators, and the model.

Developing a GenAI application requires important decisions at several stages. The development process starts with selecting the best model for your task and designing prompts that get the best results. Online evaluation helps you iterate on prompt templates and compare models on the fly, which speeds up the search for the best fit between model, prompt, and task. But evaluation isn’t just for the early stages of development.

Robust benchmarking requires a more systematic offline evaluation on a larger dataset, for example, to check whether fine-tuning improved model performance or whether a smaller model would be sufficient. After deployment, you must continuously evaluate performance and look for opportunities to update the application. In short, evaluation is a continuous activity that Gen AI developers must adopt rather than a single stage in the development process.

Vertex AI Experiments

To cover this wide range of evaluation use cases, the Gen AI Evaluation Service offers developers both online and offline evaluation through the Vertex AI SDK, from within their IDE or coding environment of choice. Vertex AI Experiments automatically logs all evaluations, saving you the trouble of tracking experiments manually. The Gen AI Evaluation Service also provides pre-built Vertex AI Pipelines components for evaluation and monitoring in production.

The available evaluation techniques fall into three families. Computation-based metrics are the first family. They require ground truth: a new output is compared against a golden dataset of labelled input-output pairs. Google Cloud provides an extensive library of pre-built metrics that you can customise, so you can define your own evaluation criteria and apply any metric of your choosing. Google Cloud also created metric bundles to help you navigate the many available metrics; with a single click, you can access the appropriate set of metrics for any use case.

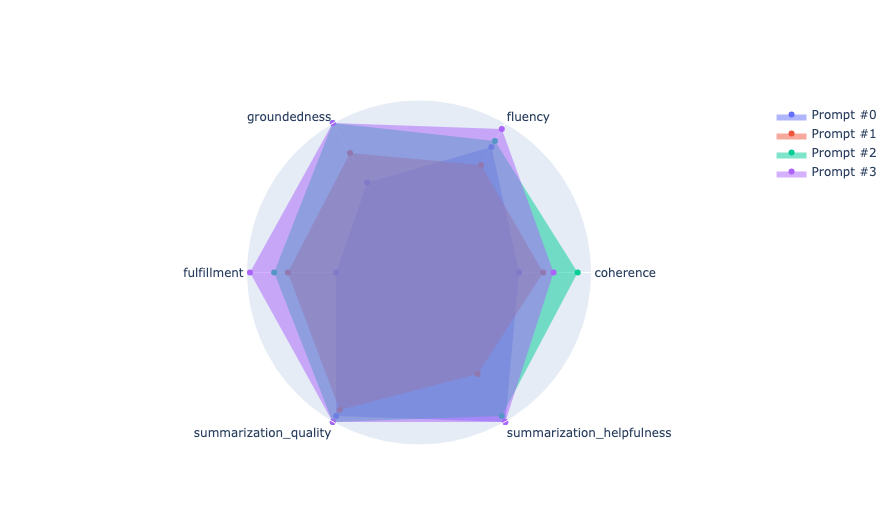

The code evaluates a summarization task using metric bundles and automatically logs the evaluation parameters and metrics in Vertex AI Experiments. The final aggregated evaluation metrics can then be viewed as a visualisation.

These metrics are both economical and efficient, and they provide a sense of progress. However, they cannot fully capture the essence of generative tasks. It is difficult to devise a formula that captures every attribute you want in a summary. Furthermore, even with a well-constructed golden dataset, you may prefer summaries that differ from the labelled examples. For this reason, you need an additional evaluation technique for generative tasks.
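To make this limitation concrete, here is a minimal sketch of a computation-based metric: a ROUGE-1-style unigram-overlap F1 score against a reference summary. It is an illustration only, not the service's implementation; the function name `unigram_f1` and the example sentences are invented for this sketch.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Token-overlap F1 between a candidate and a reference (ROUGE-1-style)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Multiset intersection: each shared token counts at most as often
    # as it appears in both texts.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical golden reference and two candidate summaries.
reference = "the snack bar is low in sugar and high in protein"
near_copy = "the snack bar is low in sugar and high in fibre"
paraphrase = "this bar packs plenty of protein with very little sugar"

near_copy_score = unigram_f1(near_copy, reference)
paraphrase_score = unigram_f1(paraphrase, reference)

print(f"near copy:  {near_copy_score:.3f}")
print(f"paraphrase: {paraphrase_score:.3f}")
```

The near copy scores far higher than the paraphrase even though it changes a fact, while the paraphrase preserves the meaning with different wording. This is the kind of gap that a purely lexical metric cannot close.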

The second family of approaches, autoraters, uses LLMs as judges. In this case, an LLM is specifically tailored to carry out the evaluation tasks. This approach does not require ground truth. You can either evaluate a single model or compare two models side by side. To ensure their quality, Google Cloud's autoraters are carefully calibrated against human raters. They are managed and available out of the box. Being able to scrutinise an autorater’s assessment and build trust in it is crucial. For this reason, the autoraters provide an explanation along with a confidence score for every judgement.

The box at the top provides an overview: it shows the inputs (the evaluation dataset and the competing models) as well as the final result, the win rate. The win rate, expressed as a percentage, shows how often the Health Snack LLM produced a better summary than Model B. To help you understand the win rate, the service also displays the row-based results together with the autorater’s explanations and confidence scores. By comparing the explanations and results to what you expected, you can judge how accurate the autorater is for your use case.
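The relationship between row-based results and the win rate can be sketched as follows. This is a simplified illustration under assumed names: the `RowResult` fields, the "A"/"B" labels, and the sample rows are hypothetical and do not reflect the service's actual output schema.

```python
from dataclasses import dataclass


@dataclass
class RowResult:
    prompt_id: int
    winner: str        # "A" or "B" (hypothetical labels for the two models)
    confidence: float  # autorater confidence, between 0.0 and 1.0
    explanation: str


def win_rate(rows: list[RowResult], model: str) -> float:
    """Percentage of rows in which `model` was judged the better response."""
    if not rows:
        raise ValueError("no rows to aggregate")
    wins = sum(1 for row in rows if row.winner == model)
    return 100.0 * wins / len(rows)


# Invented row-level judgements for four prompts.
rows = [
    RowResult(1, "A", 0.9, "A covers all key points."),
    RowResult(2, "B", 0.6, "B is more concise."),
    RowResult(3, "A", 0.8, "A avoids an unsupported claim."),
    RowResult(4, "A", 0.7, "A is more fluent."),
]

rate_a = win_rate(rows, "A")
# Rows worth a manual look: the autorater was less sure of its judgement.
low_confidence = [row.prompt_id for row in rows if row.confidence < 0.7]

print(f"Win rate for model A: {rate_a:.1f}%")
print(f"Rows to review by hand: {low_confidence}")
```

Filtering on confidence is one simple way to decide which explanations to read first when checking the autorater against your own expectations.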

For each input prompt, the responses from the Health Snack LLM and Model B are listed. The response highlighted in green, with a winner icon in the upper left corner, is the one the autorater judged to be the better summary. The explanation gives the reasoning behind the choice, and the confidence score, which is based on self-consistency, shows how confident the autorater is in its choice.
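One common way to derive a confidence score from self-consistency is to ask the judge the same question several times and measure how often its answers agree. The sketch below assumes that approach; the article does not describe how the service actually computes its score, and the `self_consistency` function and the sample votes are hypothetical.

```python
from collections import Counter


def self_consistency(votes: list[str]) -> tuple[str, float]:
    """Return the majority choice and the share of votes that agree with it."""
    if not votes:
        raise ValueError("need at least one vote")
    choice, count = Counter(votes).most_common(1)[0]
    return choice, count / len(votes)


# Hypothetical: the same comparison judged five times by a sampled autorater.
votes = ["A", "A", "B", "A", "A"]
choice, confidence = self_consistency(votes)

print(f"preferred response: {choice}, confidence: {confidence:.2f}")
```

Under this assumption, a judge that flips between answers on repeated sampling yields a confidence near 0.5 for a two-way comparison, which signals a row that deserves human attention.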

In addition to the explanations and confidence scores, you can calibrate the autorater to increase your confidence in the accuracy and reliability of the overall evaluation process. To do this, you provide human-preference data directly to AutoSxS, which then outputs alignment-aggregate statistics. These statistics measure the agreement between Google Cloud's autorater and the human-preference data while accounting for random agreement.
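Cohen's kappa is a widely used statistic for agreement that corrects for chance, and it serves here as an illustration of the idea. The sketch below is a plain-Python version under that assumption; the article does not specify which statistics AutoSxS reports, and the preference labels are invented.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two raters over the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement if both raters labelled at random with their
    # own label frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical preferences for eight prompts ("A" or "B" wins).
autorater = ["A", "A", "B", "B", "A", "B", "A", "A"]
human = ["A", "A", "B", "A", "A", "B", "B", "A"]

kappa = cohens_kappa(autorater, human)
print(f"kappa = {kappa:.3f}")
```

In this example the raters agree on 75% of the rows, but kappa is noticeably lower because a large share of that agreement would be expected by chance alone.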

Although it is important to include human evaluation, collecting these evaluations remains one of the most time-consuming parts of the evaluation process. For this reason, Google Cloud chose to include human review as the third family of techniques in the GenAI Evaluation framework. Google Cloud also partners with third-party data-centric providers such as LabelBox to make it easy for you to obtain human evaluations for a variety of tasks and criteria.

To sum up, the Gen AI Evaluation Service offers a wide range of techniques that you can use with any LLM to build a custom evaluation framework for your GenAI application. These techniques are available in both online and offline modes.

How Generali Italia produced a RAG-based LLM application using the Gen AI Evaluation Service

Generali Italia, a leading Italian insurance company, was among the first to use Google Cloud's Gen AI Evaluation Service. According to Stefano Frigerio, Head of Technical Leads at Generali Italia:

“The model evaluation was essential to the success of implementing an LLM in production. Manual review and refinement were not within our means in a dynamic ecosystem.”

GenAI application

Like other insurance providers, Generali Italia produces a variety of documents, such as policy statements, premium statements that give detailed explanations and deadlines, and more. Using retrieval-augmented generation (RAG) technology, Generali Italia developed a GenAI application that lets employees interact with documents conversationally, which speeds up information retrieval. However, the application could not be implemented successfully without a robust framework to evaluate its retrieval and generative functions. The team first identified the performance dimensions that mattered to them, and then used the Gen AI Evaluation Service to measure performance against their baseline.

Ivan Vigorito, Tech Lead Data Scientist at Generali Italia, says the company chose the Vertex Gen AI Evaluation Service for several reasons. With the AutoSxS evaluation method, Generali Italia gained access to autoraters that emulate human ratings when assessing the quality of LLM responses. This reduced the need for manual evaluation, saving time and money. The Gen AI Evaluation Service also let the team run evaluations against predefined criteria, which made the evaluation process more objective.

With its explanations and confidence scores, the service helped make model performance understandable and pointed the team towards ways to improve their application. Finally, the Vertex Gen AI Evaluation Service let the Generali team evaluate any model by using external or pre-generated predictions. This capability is especially useful when comparing outputs from models hosted outside of Vertex AI.

According to Dominico Vitarella, Tech Lead Machine Learning Engineer at Generali Italia, the Gen AI Evaluation Service does more for their team than just speed up the evaluation process. It integrates seamlessly with Vertex AI, giving the team access to a comprehensive platform for training, deploying, and evaluating both generative and predictive applications.