Wayfair has been moving its on-premises data centers to Google Cloud over the past few years. As part of this move, we evaluated Vertex AI as an end-to-end machine learning platform and adopted several of its components, including Vertex AI Pipelines, Training, and Feature Store, as our preferred option for future ML solutions. Our team chose to build on that effort by migrating our existing use cases to Vertex AI, both to take full advantage of the Google Cloud environment and to address problems we had run into with our previous tooling. In our earlier blog article, we went into detail about the technical and MLOps parts of that transformation.

Below, we describe our shift to Vertex AI from the perspective of data scientists, using our delivery-time prediction use case as an example.

Using Vertex AI to improve data collection

Data are the foundation of every data science solution. When predicting delivery times, we combine information from various sources, including transactional data, delivery scans, details on the vendors and carriers who complete the orders, and more. We gather and aggregate the core data every day because running this procedure every time we need training data would be computationally expensive.

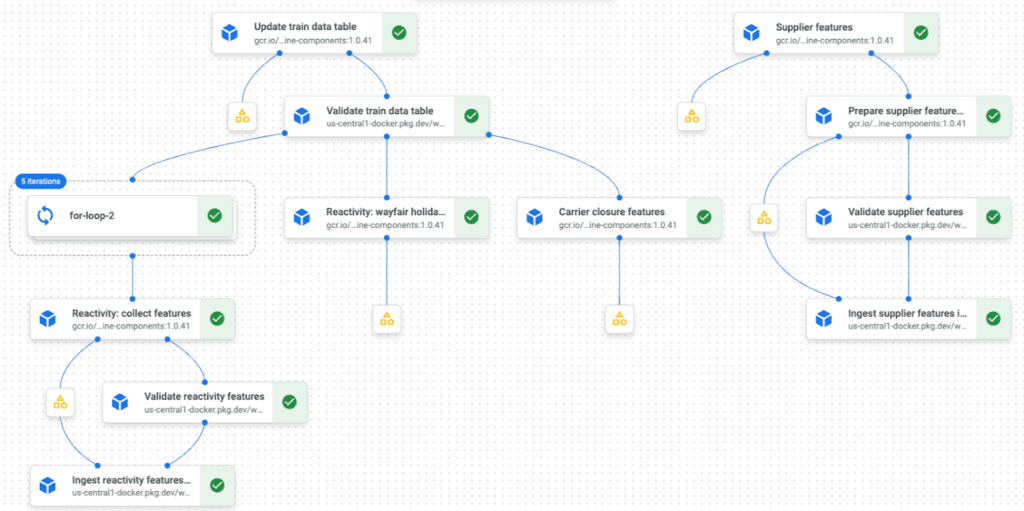

Prior to switching to Vertex AI, this data collection was managed by a number of pipelines owned by several teams, which added needless complexity and slowed down development. We migrated these pipelines and consolidated them into a single serverless Vertex AI pipeline, which our data science team builds and maintains on its own.

The first major advantage we noticed after switching was having everything, including all of our pipelines and the model code, in a single repository with consistent versioning. Traceability and debugging have greatly improved because we can now easily track changes. For instance, it is simple to determine which model code was used in a given training pipeline run, and we can then examine the data collection pipeline to determine which code produced the training data.

The serverless nature of Vertex AI is a further improvement that has made our lives simpler. Because Google handles everything related to infrastructure and pipeline management, we reduced our reliance on the internal infrastructure teams that previously looked after a central Airflow server for our pipelines. Additionally, because our pipelines receive resources on demand and are not slowed down by other jobs running at the same time, they run significantly faster and more consistently than before.

The last major advantage of our switch to Vertex AI is that we now control the whole data collection workflow required for model building, which reduces our dependence on other teams. Vertex AI Pipelines is tightly integrated with other Google Cloud products such as BigQuery and Cloud Storage, which makes it simple to create or change pipelines with just a few lines of code. As a result, data scientists can create and implement new features on their own, and new model iterations are completed much more quickly.

Experimenting offline at light speed

With the data flow in place, we can turn to the machine learning itself. Most of our machine learning (ML) work happens in the development environment, where we build models using historical data. The typical experimentation pipeline is shown below:

Supply chains evolve over time. To test a hypothesis offline, we therefore have to simulate model training and inference at successive points in time and measure model quality at each one. This process requires extensive parallelization, and we exploit the serverless nature of Vertex AI Pipelines by using parallel for-loops and concurrent pipeline executions.

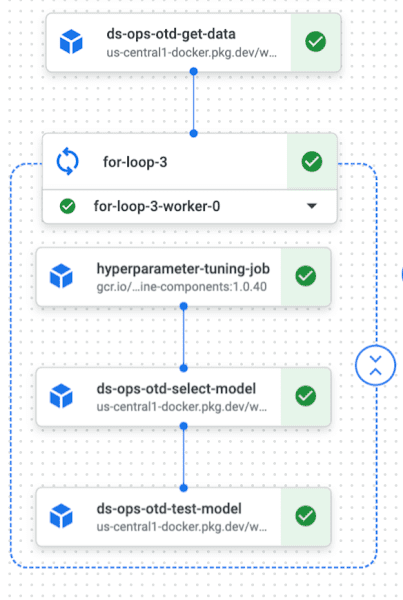
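The time-based simulation described above can be sketched in plain Python. This is only an illustration of the idea, not our pipeline code and not the Vertex AI Pipelines API: the synthetic `HISTORY` data, the `run_fold` and `backtest` helpers, the seven-day horizon, and the mean-value "model" are all assumptions made for the example. Each fold trains on everything before a cutoff date and evaluates on the days that follow, and because the folds are independent they can run concurrently.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta
from statistics import mean

# Hypothetical history of (order_date, delivery_days) pairs; purely synthetic.
START = date(2023, 1, 1)
HISTORY = [(START + timedelta(days=i), 3 + (i % 7) * 0.5) for i in range(120)]


def run_fold(cutoff: date, horizon_days: int = 7) -> dict:
    """Train on data before `cutoff`, then evaluate on the next `horizon_days`."""
    window_end = cutoff + timedelta(days=horizon_days)
    train = [y for d, y in HISTORY if d < cutoff]
    test = [y for d, y in HISTORY if cutoff <= d < window_end]
    prediction = mean(train)  # stand-in "model": predict the historical mean
    mae = mean(abs(y - prediction) for y in test)
    return {"cutoff": cutoff.isoformat(), "n_train": len(train), "mae": mae}


def backtest(cutoffs: list[date], max_workers: int = 4) -> list[dict]:
    """Run all folds concurrently; results come back in cutoff order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_fold, cutoffs))


if __name__ == "__main__":
    weekly_cutoffs = [START + timedelta(days=60 + 7 * k) for k in range(8)]
    for result in backtest(weekly_cutoffs):
        print(result)
```

In a pipeline setting, each fold would typically be a separate pipeline task or pipeline run rather than a thread, but the structure is the same: a list of cutoffs fanned out over independent train-and-evaluate steps.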

Parallelism also helps when searching for the best hyperparameters. After the data is split into training, validation, and test sets, a dedicated Vertex AI Pipelines component from the Google Cloud Pipeline Components library solves the hyperparameter optimization problem.

The main job dispatches workers that run the training and validation script on different combinations of model hyperparameters to find the best one. Besides speed, this component offers a Bayesian optimization approach that improves search quality. Vertex AI Pipelines and the Hyperparameter Tuning Job make our work more efficient and let us iterate on offline experiments quickly.
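The fan-out pattern behind such a tuning job can be sketched as follows. Note that this example uses a plain exhaustive grid search rather than Bayesian optimization, and it does not call the Hyperparameter Tuning Job API. The search space, the toy loss in `train_and_validate`, and the `grid_search` helper are hypothetical and exist only to show a main routine handing trials to parallel workers and keeping the best result.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

# Hypothetical search space; names and values are illustrative only.
SEARCH_SPACE = {
    "learning_rate": [0.01, 0.05, 0.1],
    "max_depth": [3, 5, 7],
}


def train_and_validate(params: dict) -> float:
    """Stand-in for a training script; returns a validation loss (lower is better).

    A real trial would fit a model on the training set and score it on the
    validation set. This toy loss is smallest at learning_rate=0.05, max_depth=5.
    """
    lr_term = (params["learning_rate"] - 0.05) ** 2
    depth_term = 0.01 * (params["max_depth"] - 5) ** 2
    return lr_term + depth_term


def grid_search(space: dict, max_workers: int = 4) -> tuple[dict, float]:
    """Evaluate every combination in parallel and return the best trial."""
    names = list(space)
    trials = [dict(zip(names, values)) for values in product(*space.values())]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        losses = list(pool.map(train_and_validate, trials))
    best_index = min(range(len(trials)), key=losses.__getitem__)
    return trials[best_index], losses[best_index]


if __name__ == "__main__":
    best_params, best_loss = grid_search(SEARCH_SPACE)
    print(best_params, best_loss)
```

A Bayesian approach differs in that it chooses each new trial based on the results of earlier ones instead of enumerating the grid up front, which usually means fewer trials are needed to reach a good combination.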

After choosing our hyperparameters, we evaluate the model on the test dataset. The main outcome of an experiment is a set of metrics that evaluate the model from several perspectives. Vertex AI Experiments offers a simple interface for logging pipeline parameters and metrics, so we can store and navigate experiment results. We can then analyze the results in the UI or in a Jupyter notebook, either locally or through Vertex AI Workbench.
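To make the idea of logging parameters and metrics per run concrete, here is a minimal local stand-in written with the Python standard library. It is not the Vertex AI Experiments API: the `RunLog` class, the JSON Lines file layout, the run names, and the metric values are all hypothetical placeholders chosen for illustration.

```python
import json
import tempfile
from pathlib import Path


class RunLog:
    """Append-only JSON Lines log of experiment runs (hypothetical helper)."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def log_run(self, name: str, params: dict, metrics: dict) -> None:
        """Store one run with its parameters and resulting metrics."""
        record = {"run": name, "params": params, "metrics": metrics}
        with self.path.open("a", encoding="utf-8") as handle:
            handle.write(json.dumps(record) + "\n")

    def runs(self) -> list[dict]:
        """Load every logged run, oldest first."""
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as handle:
            return [json.loads(line) for line in handle if line.strip()]

    def best(self, metric: str) -> dict:
        """Return the run with the lowest value of `metric`."""
        return min(self.runs(), key=lambda run: run["metrics"][metric])


if __name__ == "__main__":
    log = RunLog(Path(tempfile.mkdtemp()) / "runs.jsonl")
    # Placeholder values, not real experiment results.
    log.log_run("baseline", {"max_depth": 3}, {"mae_days": 1.4})
    log.log_run("deeper-trees", {"max_depth": 7}, {"mae_days": 1.1})
    print(log.best("mae_days")["run"])
```

A managed experiment tracker adds what this sketch lacks: shared storage, a UI for comparing runs, and links from each run back to the pipeline execution that produced it.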

Another convenience of Vertex AI is the easy transition from development to production. In production, we retrain the live model weekly using the same components. The only new step in the production pipeline is model deployment, which makes the model available to the live service and completes the model lifecycle. Our last blog article explained how a CI/CD system manages model and pipeline updates. The tooling streamlines and secures the release process, letting us focus on data science problems and experimentation.
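The relationship between the development and production pipelines can be sketched as a shared list of steps with deployment appended only in production. The step functions, their return values, and the `build_pipeline` and `run` helpers below are hypothetical and greatly simplified; real pipeline components would run as separate tasks rather than in-process function calls.

```python
from typing import Callable

# Each step takes the shared context and returns an updated copy.
Step = Callable[[dict], dict]


def collect_data(ctx: dict) -> dict:
    return {**ctx, "rows": 1000}  # placeholder row count


def train(ctx: dict) -> dict:
    return {**ctx, "model": f"model-trained-on-{ctx['rows']}-rows"}


def evaluate(ctx: dict) -> dict:
    return {**ctx, "evaluated": True}


def deploy(ctx: dict) -> dict:
    return {**ctx, "deployed": True}


# Steps reused unchanged by both environments.
SHARED_STEPS: list[Step] = [collect_data, train, evaluate]


def build_pipeline(production: bool) -> list[Step]:
    """Production reuses the development steps and appends deployment."""
    return SHARED_STEPS + ([deploy] if production else [])


def run(steps: list[Step]) -> dict:
    """Execute the steps in order, threading the context through each one."""
    ctx: dict = {}
    for step in steps:
        ctx = step(ctx)
    return ctx


if __name__ == "__main__":
    print(run(build_pipeline(production=False)))
    print(run(build_pipeline(production=True)))
```

Keeping the shared steps in one place means that a change validated in development reaches production without being rewritten.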

Build ML models faster

By switching to Vertex AI, our Supply Chain Science team can now iterate on model development more efficiently and autonomously. These advantages allow us to run extensive offline experiments quickly and to release new ML models rapidly, improving Wayfair’s customer experience. The new tooling has improved our delivery-time forecasts, as seen in our latest report.

It’s been amazing working with Vertex AI, and we recommend the platform to other data science teams. Among the Vertex AI products, our team values Pipelines, Hyperparameter Tuning, and Experiments the most. Keep an eye out for other Wayfair teams using Vertex AI, and see how this cutting-edge toolset supports data science.