Scaling Prediction Guard’s Privacy-Preserving LLM Platform on Intel Gaudi 2 AI Accelerators

Overview

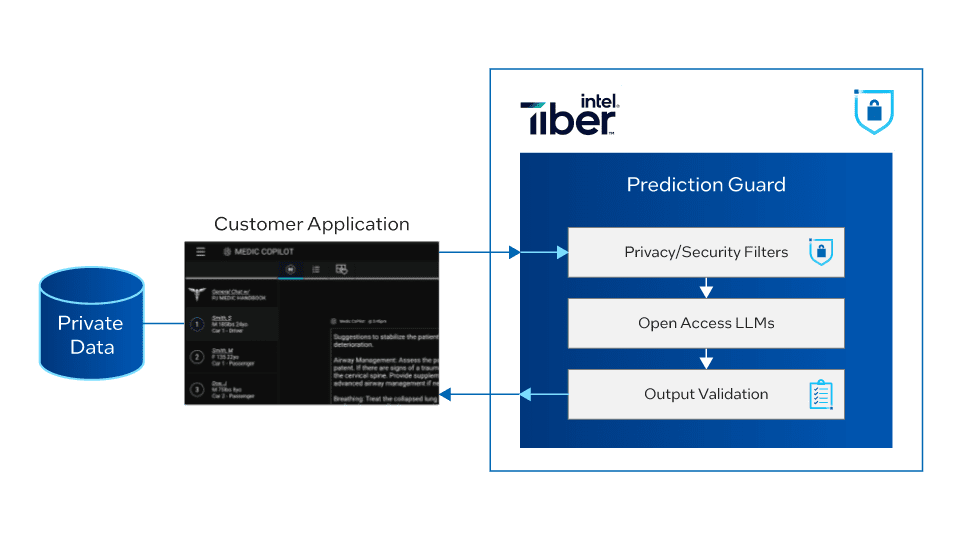

Prediction Guard, a large language model (LLM) platform running on Intel Gaudi 2 AI accelerators, is helping to meet the growing demand for AI platforms that prioritise privacy. LLMs are transforming entire industries by enabling robust information extraction, chat, content creation, and automation applications. However, broad industry adoption of LLMs remains slow because businesses find it difficult to maintain data confidentiality and privacy without compromising accuracy and scalability.

Prediction Guard has pioneered an LLM platform that meets both of these objectives by hosting cutting-edge, open-source LLMs such as Meta Llama 3, Neural-Chat-7B, and DeepSeek. It does so while integrating input filters, such as those for personally identifiable information (PII) and prompt injections, and output validations, such as those for factual consistency, all while protecting privacy.

To power its platform at scale, Prediction Guard has optimised the deployment of LLM inference on Intel Gaudi 2 AI accelerators within Intel Tiber Developer Cloud. This white paper describes the Prediction Guard team’s work to unlock the performance potential of Intel Gaudi 2 AI accelerators for LLM applications.

Context

Businesses understand the benefits of integrating LLMs. However, to “ground” and improve LLM outputs within the domains that matter to the business, it is often necessary to incorporate private, sensitive company data. For instance, a business might feed customer support messages and support tickets into an LLM application to improve the customer responses it generates. Similarly, a healthcare organisation could incorporate treatment guidelines or a patient’s medical history to improve applications that offer decision support.

Because of this integration of private data, companies must make sure that the LLMs they use are hosted in a secure, private manner. However, it is often impractical to host LLMs with high throughput and low latency at a reasonable cost. Businesses can use local LLM systems, but these frequently sacrifice throughput and latency in exchange for privacy. Cloud-based hosting solutions, on the other hand, may allow for scaling, but at a very high price.

This hosting issue can impede the deployment of AI. Even if a business manages to host models, LLMs may raise additional questions about the accuracy of model outputs or potential avenues for security or privacy breaches. Because LLMs can produce entirely erroneous text (a phenomenon commonly referred to as hallucination), model outputs must be validated in some way. Furthermore, the inputs to LLMs could contain PII that might leak into application outputs, or malicious instructions known as prompt injections.

The Prediction Guard Platform

The Prediction Guard platform integrates filters to identify and redact personally identifiable information (PII), block harmful prompts and toxic generations, and validate outputs against reliable data sources. Coupled with hosting on affordable, scalable Intel Gaudi 2 AI accelerators, this opens up privacy-preserving LLM applications for businesses in a variety of sectors, including legal, healthcare, and finance.
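To make the idea of an input filter concrete, the following is a minimal sketch, not Prediction Guard's actual implementation. The regular expressions, the marker phrases, and the function names (`redact_pii`, `looks_like_injection`, `filter_prompt`) are all assumptions chosen for illustration; production filters typically rely on trained models and far broader coverage.

```python
import re

# Illustrative patterns only (assumptions); real PII detection is much broader.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

# Hypothetical phrases that sometimes appear in prompt-injection attempts.
INJECTION_MARKERS = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "disregard the system prompt",
)


def redact_pii(text: str) -> str:
    """Replace matched email addresses and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def looks_like_injection(text: str) -> bool:
    """Return True if the text contains one of the marker phrases."""
    lowered = text.lower()
    return any(marker in lowered for marker in INJECTION_MARKERS)


def filter_prompt(prompt: str) -> str:
    """Reject suspicious prompts, then redact PII before the model sees it."""
    if looks_like_injection(prompt):
        raise ValueError("prompt rejected: possible prompt injection")
    return redact_pii(prompt)
```

For example, `filter_prompt("Email jane@example.com or call 555-123-4567")` returns `"Email [EMAIL] or call [PHONE]"`, while a prompt containing one of the marker phrases raises `ValueError`.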

How Did Intel Gaudi 2 AI Accelerators Help Prediction Guard Achieve Success?

Prediction Guard pioneered the early adoption of LLM inference on Intel Gaudi 2 AI accelerator instances in the Intel Tiber Developer Cloud and was the first business to support paying customers using this setup. The main optimisations draw on the Optimum for Intel Gaudi libraries, NVIDIA Triton Inference Server, and guidance from the Intel Gaudi product team. They include:

- Batching inference requests dynamically.

- Balancing the inference load among model replicas across multiple Intel Gaudi 2 AI accelerators.

- Optimising and padding prompts to preserve static shapes across batches.

- Adjusting various hyperparameters and key-value (KV) caching in accordance with Intel Gaudi AI accelerator recommendations.
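As a rough illustration of the load-balancing item in the list above, the sketch below fans a multi-prompt request out across model replicas in round-robin order. It is an assumption-laden example rather than the platform's code: the replica names, `RoundRobinBalancer`, `fan_out`, and `fake_send` are hypothetical, and round-robin is only one of several possible balancing strategies. It is written in Python for brevity, whereas the platform's API replicas are built in Go.

```python
import itertools
import threading
from concurrent.futures import ThreadPoolExecutor


class RoundRobinBalancer:
    """Hands out replica names in rotation; safe to call from many threads."""

    def __init__(self, replicas):
        if not replicas:
            raise ValueError("at least one replica is required")
        self._cycle = itertools.cycle(replicas)
        self._lock = threading.Lock()

    def next_replica(self):
        with self._lock:
            return next(self._cycle)


def fan_out(prompts, balancer, send):
    """Send each prompt to the next replica concurrently; keep input order."""
    assignments = [(balancer.next_replica(), p) for p in prompts]
    with ThreadPoolExecutor(max_workers=max(1, len(assignments))) as pool:
        return list(pool.map(lambda pair: send(*pair), assignments))


def fake_send(replica, prompt):
    # Stand-in for a network call to a model server (hypothetical).
    return f"{replica} handled {prompt!r}"


balancer = RoundRobinBalancer(["gaudi-0", "gaudi-1"])
results = fan_out(["a", "b", "c"], balancer, fake_send)
```

Replicas are assigned before the calls are dispatched, so the rotation is deterministic even though the calls themselves run concurrently.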

Intel Gaudi 2 AI accelerators

The Prediction Guard system batches requests dynamically, subject to a maximum latency. In other words, the server waits a bounded amount of time for requests to arrive, and all requests that arrive within that window are combined and processed as one batch. The Prediction Guard API replicas, which are built for concurrency in the Go programming language, fan out multi-prompt requests into individual requests. A load balancer then distributes these fanned-out requests among several model replicas powered by Intel Gaudi 2 AI accelerators.
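The batching window described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the platform's or NVIDIA Triton Inference Server's implementation: `collect_batch`, `max_batch_size`, and `max_wait_s` are hypothetical names, and the timer here starts when the first request arrives.

```python
import queue
import time


def collect_batch(request_queue, max_batch_size, max_wait_s):
    """Block for the first request, then gather more until the batch is full
    or max_wait_s has elapsed since the first request arrived."""
    batch = [request_queue.get()]  # wait indefinitely for the first request
    deadline = time.monotonic() + max_wait_s
    while len(batch) < max_batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        try:
            batch.append(request_queue.get(timeout=remaining))
        except queue.Empty:
            break  # window closed with no further requests
    return batch


pending = queue.Queue()
for prompt in ["p1", "p2", "p3", "p4", "p5"]:
    pending.put(prompt)

first = collect_batch(pending, max_batch_size=4, max_wait_s=0.05)
second = collect_batch(pending, max_batch_size=4, max_wait_s=0.05)
```

Here `first` fills immediately with four prompts, while `second` holds only the remaining prompt and is released once the 50 ms window expires. The window length trades latency for batch size: a longer wait yields fuller batches but delays the earliest request.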

For best results on an Intel Gaudi 2 AI accelerator, input shapes should not change across batches. Prediction Guard therefore added specialised logic that analyses each batch of prompts and pads them to a consistent length, preserving static shapes even across dynamically batched requests from the NVIDIA Triton Inference Server.

One easy way to pad prompt inputs when using Intel Gaudi 2 AI accelerators for inference is to pad every prompt to a fixed maximum length. The Optimum for Intel Gaudi library examples on GitHub demonstrate this and other helpful text generation features.
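The padding idea above can be sketched in plain Python, operating on lists of token ids. This is an illustrative assumption, not the Optimum for Intel Gaudi API or Prediction Guard's logic: `pad_batch`, the pad id of 0, left-side padding, and the handling of unused batch slots are all choices made for the example.

```python
PAD_ID = 0  # assumed padding token id


def pad_batch(token_ids, max_length, batch_size, pad_id=PAD_ID):
    """Return (input_ids, attention_mask), each exactly batch_size x max_length."""
    if max_length < 1:
        raise ValueError("max_length must be at least 1")
    if len(token_ids) > batch_size:
        raise ValueError("more prompts than the static batch size")
    input_ids, attention_mask = [], []
    for ids in token_ids:
        ids = ids[-max_length:]  # keep the most recent tokens if too long
        n_pad = max_length - len(ids)
        # Left-pad so that generation continues directly after the real tokens.
        input_ids.append([pad_id] * n_pad + ids)
        attention_mask.append([0] * n_pad + [1] * len(ids))
    # Fill unused batch slots with dummy rows so the batch dimension is fixed.
    # Each dummy row attends to its last pad token so no row is fully masked.
    for _ in range(batch_size - len(token_ids)):
        input_ids.append([pad_id] * max_length)
        attention_mask.append([0] * (max_length - 1) + [1])
    return input_ids, attention_mask


ids, mask = pad_batch([[5, 6, 7], [8]], max_length=4, batch_size=3)
```

Whatever the number or length of incoming prompts, the result always has the same shape, which is the property the static-shape requirement asks for. Outputs for dummy rows would simply be discarded by the caller.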

Lastly, using advice from the Intel Gaudi product team, Prediction Guard adjusted the KV cache size, numerical precision, and other hyperparameters. They were able to fully utilise the distinctive architecture of the Intel Gaudi 2 AI accelerators as a result.
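To see why KV cache size and numerical precision are worth tuning together, a back-of-the-envelope estimate helps. The sketch below uses the standard formula for a transformer KV cache (keys plus values, per layer, per head, per token). The model dimensions are illustrative assumptions typical of a 7B-parameter model, not figures from Prediction Guard or Intel.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size,
                   bytes_per_value):
    """Bytes needed to cache keys and values for every layer and token."""
    keys_and_values = 2
    return (keys_and_values * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


GIB = 1024 ** 3

# Assumed dimensions: 32 layers, 32 KV heads, head dimension 128.
cache_16bit = kv_cache_bytes(32, 32, 128, seq_len=2048, batch_size=8,
                             bytes_per_value=2)
cache_8bit = kv_cache_bytes(32, 32, 128, seq_len=2048, batch_size=8,
                            bytes_per_value=1)
print(f"16-bit cache: {cache_16bit / GIB:.1f} GiB, "
      f"8-bit cache: {cache_8bit / GIB:.1f} GiB")
```

Under these assumptions the cache takes 8 GiB at 16-bit precision and 4 GiB at 8-bit precision, so halving the precision of cached values frees memory that can go towards larger batches or longer sequences.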

Hardware Configuration for Prediction Guard

Prediction Guard model servers run on Intel Gaudi 2 AI accelerator instances in Intel Tiber Developer Cloud with the following specifications:

- For training, eight Intel Gaudi 2 AI accelerators.

- Two third-generation Intel Xeon Scalable processors.

- 24 x 100 Gb RoCE ports integrated into each Intel Gaudi 2 AI accelerator, increasing networking capacity.

- 2.4 TB per second scale-out bandwidth and 700 GB per second scale within the server.

- Simple system migration or development with the Intel Gaudi software package.

Outcomes

Thanks to Prediction Guard’s optimisations, running LLM inference on Intel Gaudi 2 AI accelerators produced substantial performance gains. Their model servers achieved up to two times higher throughput when they migrated from NVIDIA A100 Tensor Core GPUs to Intel Gaudi 2 AI accelerator deployments. The models involved included fine-tunes of Mistral AI and Llama 2 models.

Even more notably, Prediction Guard’s streaming endpoints on Intel Gaudi 2 AI accelerators showed industry-leading latency. With the Neural-Chat-7B model, they observed an average time-to-first-token of only 174 milliseconds. This metric, which is crucial for real-time applications like chatbots, matched or outperformed industry-leading cloud providers such as Anyscale, Replicate, and Together AI, whose times ranged from 200 milliseconds to 3.68 seconds according to LLMPerf benchmarks.

Time-to-first-token (for streaming use cases) and output token throughput (for high-throughput use cases like summarisation) are the two main measures used by the LLMPerf benchmark suite to assess LLM inference providers. On both fronts, the Prediction Guard platform running on Intel Gaudi 2 AI accelerators produced outstanding results.
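The two measures can be illustrated with a small sketch that times a streaming response. This is not LLMPerf's code: `measure_stream` is a hypothetical helper, the token stream is any Python iterator, and throughput is computed here as output tokens divided by total elapsed time, which is one common definition.

```python
import time


def measure_stream(token_stream, clock=time.perf_counter):
    """Consume a token iterator; return (ttft_s, tokens_per_s, n_tokens)."""
    start = clock()
    first_token_at = None
    n_tokens = 0
    for _ in token_stream:
        if first_token_at is None:
            first_token_at = clock()  # moment the first token arrived
        n_tokens += 1
    end = clock()
    if first_token_at is None:
        raise ValueError("stream produced no tokens")
    elapsed = end - start
    throughput = n_tokens / elapsed if elapsed > 0 else float("inf")
    return first_token_at - start, throughput, n_tokens


# Usage with a fake clock so the numbers are reproducible: the request starts
# at t=0.0 s, the first token arrives at t=0.174 s, the stream ends at t=1.0 s.
fake_times = iter([0.0, 0.174, 1.0])
ttft, tokens_per_s, count = measure_stream(
    iter(["Hello", ",", " world", "!"]), clock=lambda: next(fake_times)
)
```

With the fake clock, the time-to-first-token is 0.174 seconds and the throughput is 4 tokens per second. In a real measurement the default `time.perf_counter` clock would be used and the iterator would wrap the provider's streaming response.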

In addition to powering its enterprise customer applications, Prediction Guard demonstrated the scalability of its technology by supporting over 4,500 participants across three significant hackathons. These included 1,500 students from Stanford University’s TreeHacks event, 2,000 global developers from Intel’s Advent of GenAI Hackathon, and 1,000 students from Purdue University’s Data 4 Good Case Competition. Prediction Guard deployments on Intel Gaudi 2 AI accelerators handled the load with ease, even during periods of high demand.

In summary

By being the first to deploy LLMs on Intel Gaudi 2 AI accelerators for paying customers and by carefully designing optimisations around batching, load balancing, static shapes, and hyperparameters, Prediction Guard has reached performance and scalability levels that were previously out of reach for its platform.

By combining data privacy safeguards with cutting-edge LLM inference capabilities driven by Intel Gaudi 2 AI accelerators, Prediction Guard has established itself as a front-runner in safe, high-performance language AI solutions suitable for enterprise adoption across a range of industries.