NVIDIA NIM inference microservices

Businesses can unlock the potential of their business data with production-ready NVIDIA NIM inference microservices for retrieval-augmented generation, integrated into the Cohesity, DataStax, NetApp, and Snowflake platforms. The new NVIDIA NeMo Retriever microservices boost LLM accuracy and throughput.

Applications of generative AI are worthless, or even harmful, without accuracy, and data is the foundation of accuracy.

NVIDIA today unveiled four new NVIDIA NeMo Retriever NIM inference microservices, designed to assist developers in quickly retrieving the best proprietary data to produce informed responses for their AI applications.

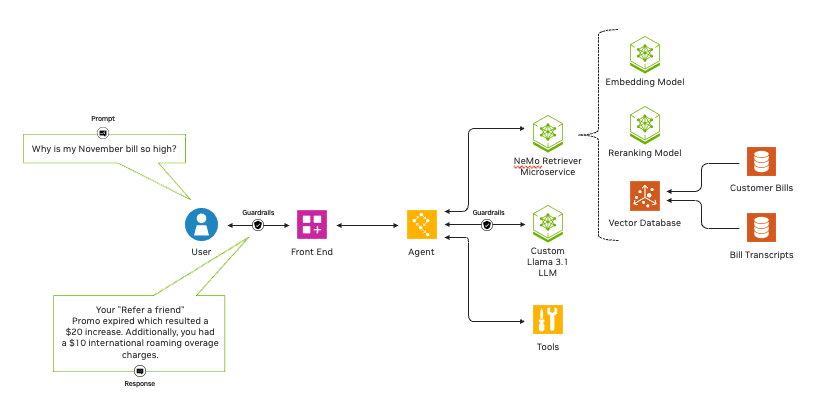

When combined with the NVIDIA NIM inference microservices for the Llama 3.1 model collection, also announced today, NeMo Retriever NIM microservices let enterprises scale to agentic AI workflows, in which AI applications operate accurately with minimal supervision or intervention, while delivering the highest-accuracy retrieval-augmented generation, or RAG.

NeMo Retriever

With NeMo Retriever, businesses can easily connect custom models to a variety of corporate data sources and use RAG to give AI applications highly accurate responses. In short, the production-ready microservices enable highly accurate information retrieval, which is the basis for building highly accurate AI applications.

NeMo Retriever can, for instance, increase model accuracy and throughput for developers who are building AI agents and customer support chatbots, analyzing security vulnerabilities, or extracting insights from complex supply chain data.

NIM inference microservices enable high-performance, easy-to-use, enterprise-grade inferencing. With NeMo Retriever NIM microservices, developers get all of this while making even greater use of their own data.

NVIDIA NeMo Retriever

These newly released NeMo Retriever embedding and reranking NIM microservices are now generally available:

- NV-EmbedQA-E5-v5, a popular community base embedding model optimized for text question-answering retrieval;

- Snowflake-Arctic-Embed-L, an optimized community model;

- NV-RerankQA-Mistral4B-v3, a popular community base model optimized for text reranking for high-accuracy question answering;

- NV-EmbedQA-Mistral7B-v2, a popular multilingual community base model fine-tuned for text embedding for high-accuracy question answering.

They join the collection of NIM microservices that are easily accessible through the NVIDIA API catalog.

Embedding and Reranking Models

NeMo Retriever microservices comprise two model types, embedding and reranking, each with open and commercial offerings that ensure transparency and reliability.

An embedding model converts diverse data, such as text, images, charts, and video, into numerical vectors that are stored in a vector database, while preserving the meaning and nuance of the data. Embedding models are faster and computationally cheaper than conventional large language models, or LLMs.
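As a rough illustration of the idea, the sketch below turns short texts into normalised vectors, keeps them in an in-memory list that stands in for a vector database, and ranks them by cosine similarity to a query. The `ToyVectorStore` class and its bag-of-words `embed` method are hypothetical stand-ins invented for this example. They are not the NeMo Retriever API, and a real embedding model would produce learned dense vectors rather than word counts.

```python
import math
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyVectorStore:
    """In-memory stand-in for a vector database (illustrative only)."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = list(docs)
        # One vector dimension per distinct token seen in the documents.
        vocab = sorted({tok for doc in self.docs for tok in tokenize(doc)})
        self.index = {tok: i for i, tok in enumerate(vocab)}
        self.vectors = [self.embed(doc) for doc in self.docs]

    def embed(self, text: str) -> list[float]:
        """Toy embedding: token counts over the vocabulary, L2-normalised."""
        vec = [0.0] * len(self.index)
        for tok in tokenize(text):
            pos = self.index.get(tok)
            if pos is not None:  # tokens outside the vocabulary are ignored
                vec[pos] += 1.0
        norm = math.sqrt(sum(x * x for x in vec))
        return [x / norm for x in vec] if norm else vec

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        """Return the k documents with the highest cosine similarity."""
        q = self.embed(query)
        # Vectors are unit length, so the dot product is the cosine similarity.
        scored = [
            (sum(a * b for a, b in zip(q, v)), doc)
            for v, doc in zip(self.vectors, self.docs)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


store = ToyVectorStore([
    "reset your password from the account page",
    "shipping takes three to five days",
    "refunds are issued within ten days",
])
for score, doc in store.search("how do I reset my password", k=2):
    print(f"{score:.3f}  {doc}")
```

Documents are embedded once when the store is built, and only the query is embedded at search time, which is part of why this stage stays cheap compared with running an LLM over every document.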

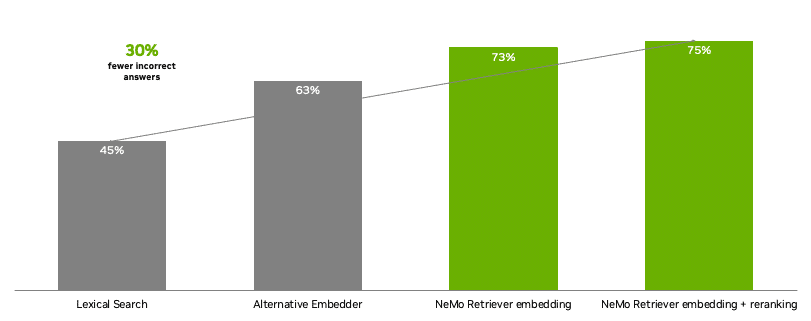

After ingesting data and a query, a reranking model ranks the data based on how relevant it is to the query. These models are slower and more computationally complex than embedding models, but they provide notable improvements in accuracy.

NeMo Retriever microservices offer advantages over the alternatives. Developers can build a pipeline that returns the most accurate and helpful results for their company by using an embedding NIM to cast a wide net over the data to be retrieved, then a reranking NIM to trim the results for relevancy.
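The two-stage pattern described above can be sketched in a few lines. This is a minimal sketch under stated assumptions: `wide_net_score` is a cheap token-overlap score standing in for an embedding model, and `rerank_score` is a word-pair match standing in for a reranking model. Both functions, along with `wide_net` and `retrieve_then_rerank`, are hypothetical names made up for this example and do not reflect how the NIM microservices are called or how they score passages internally.

```python
import re


def tokens(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def wide_net_score(query: str, doc: str) -> int:
    """Stage 1 stand-in: cheap, recall-oriented score (shared unique tokens)."""
    return len(set(tokens(query)) & set(tokens(doc)))


def rerank_score(query: str, doc: str) -> int:
    """Stage 2 stand-in: stricter score that reads query and passage together
    and counts adjacent word pairs they have in common."""
    q, d = tokens(query), tokens(doc)
    return len(set(zip(q, q[1:])) & set(zip(d, d[1:])))


def wide_net(query: str, docs: list[str], wide_k: int) -> list[str]:
    """Keep the wide_k best candidates according to the cheap score."""
    ranked = sorted(docs, key=lambda doc: wide_net_score(query, doc), reverse=True)
    return ranked[:wide_k]


def retrieve_then_rerank(
    query: str, docs: list[str], wide_k: int = 3, final_k: int = 2
) -> list[str]:
    """Cast a wide net first, then reorder only the surviving candidates."""
    candidates = wide_net(query, docs, wide_k)
    reranked = sorted(
        candidates, key=lambda doc: rerank_score(query, doc), reverse=True
    )
    return reranked[:final_k]


corpus = [
    "how to contact support to check order status or request a refund",
    "to check refund status open the orders page",
    "shipping takes three to five days",
    "our offices are closed on public holidays",
]
question = "how to check refund status"
print(wide_net(question, corpus, wide_k=3))
print(retrieve_then_rerank(question, corpus))
```

The design point is that the expensive scorer only ever sees `wide_k` candidates rather than the whole corpus, so its extra cost is bounded while it still gets the chance to promote a passage that the cheap score ranked lower.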

Developers can create the most accurate text Q&A retrieval pipelines by using the state-of-the-art open and commercial models available with NeMo Retriever NIM microservices. Compared with alternative models, NeMo Retriever microservices produced 30% fewer inaccurate answers for enterprise question answering.

Principal Use Cases for NeMo Retriever Microservices

NeMo Retriever microservices drive numerous AI applications, ranging from data-driven analytics to RAG and AI agent solutions.

NeMo Retriever microservices can be used to build intelligent chatbots that give precise, context-aware responses. They can help analyze enormous data sets to find security vulnerabilities and extract insights from complex supply chain data. They can also improve AI-enabled retail shopping advisors that provide natural, personalized shopping experiences, among other tasks.

For many of these use cases, NVIDIA AI workflows offer a simple, supported starting point for building generative AI-powered products.

NeMo Retriever NIM microservices are being used by dozens of NVIDIA data platform partners to increase the accuracy and throughput of their AI models.

NIM Microservices

DataStax has integrated NeMo Retriever embedding NIM microservices into its Astra DB and Hyper-Converged platforms, allowing it to give customers accurate, generative AI-enhanced RAG capabilities with a faster time to market.

Cohesity will integrate NVIDIA NeMo Retriever microservices with Cohesity Gaia, its AI platform, so that customers can put their data to work in insightful, transformative generative AI applications through RAG.

Kinetica will use NVIDIA NeMo Retriever to build LLM agents that can interact with complex networks in natural language, so that teams can respond faster to outages or security breaches and turn insights into immediate action.

NetApp is working with NVIDIA to connect NeMo Retriever microservices to exabytes of data on its intelligent data infrastructure. Every NetApp ONTAP customer will be able to seamlessly “talk to their data” to obtain proprietary business insights without sacrificing data security or privacy.

NVIDIA’s global system integrator partners, which include Accenture, Deloitte, Infosys, LTTS, Tata Consultancy Services, Tech Mahindra, and Wipro, are developing services to help businesses integrate NeMo Retriever NIM microservices into their AI pipelines. So are its service delivery partners: Data Monsters, EXLService (Ireland) Limited, Latentview, Quantiphi, Slalom, SoftServe, and Tredence.

NVIDIA NIM Microservices

Use Alongside Other NIM Microservices

NeMo Retriever microservices can be used with NVIDIA Riva NIM microservices, which boost speech AI applications across industries, improving customer service and enlivening digital humans.

New models that will soon be available as Riva NIM microservices include the record-breaking NVIDIA Parakeet family of automatic speech recognition models, FastPitch and HiFi-GAN for text-to-speech applications, and Megatron for multilingual neural machine translation.

The modular nature of NVIDIA NIM microservices allows developers to create AI applications in a variety of ways. To give developers even more freedom, the microservices can be connected with community models, NVIDIA models, or users’ bespoke models in the cloud, on-premises, or in hybrid settings.

Businesses can deploy AI applications in production with NIM through the NVIDIA AI Enterprise software platform.

NIM microservices can run on customers’ preferred accelerated infrastructure, including cloud instances from Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, as well as NVIDIA-Certified Systems from global server manufacturing partners such as Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro.

Members of the NVIDIA Developer Program will soon have free access to NIM for testing, research, and development on the infrastructure of their choice.