Micron 9400 NVMe SSDs with NVIDIA

Training dataset sizes are approaching billions of parameters. Some models fit entirely in system memory, but larger ones do not. In that case, data loaders must use a variety of techniques to access models stored on flash storage. One such technique is a memory-mapped file stored on an SSD. This makes the file accessible to the data loader as if it were in memory, but the CPU and software stack overhead significantly reduces the training system's performance. This is where Big Accelerator Memory (BaM) and the GPU-Initiated Direct Storage (GIDS) data loader come in.
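To make the memory-mapped file technique concrete, here is a minimal sketch using only the Python standard library. The file layout, `FEATURE_DIM`, and the helper names `write_features` and `read_feature` are illustrative assumptions, not part of DGL, BaM, or GIDS. Every read still goes through the CPU and the operating system's page cache, which is the overhead described above.

```python
import mmap
import os
import struct
import tempfile

FEATURE_DIM = 4                 # hypothetical feature width per node
ROW_BYTES = FEATURE_DIM * 4     # float32 values, 4 bytes each


def write_features(path, num_nodes):
    """Write a toy feature table: row i holds FEATURE_DIM copies of float(i)."""
    with open(path, "wb") as f:
        for node_id in range(num_nodes):
            values = [float(node_id)] * FEATURE_DIM
            f.write(struct.pack(f"<{FEATURE_DIM}f", *values))


def read_feature(mapped, node_id):
    """Read one node's feature row directly from the memory-mapped file."""
    offset = node_id * ROW_BYTES
    return struct.unpack_from(f"<{FEATURE_DIM}f", mapped, offset)


# Create a small feature file, then map it read-only and fetch one row.
path = os.path.join(tempfile.mkdtemp(), "features.bin")
write_features(path, num_nodes=1000)

with open(path, "rb") as f:
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
        row = read_feature(mapped, 42)

print(row)
```

The data loader indexes the mapping like an in-memory buffer, and the operating system pages data in from the SSD on demand.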

What are GIDS and BaM?

BaM is a system architecture that takes advantage of the high throughput, high density, low latency, and durability of SSDs. In contrast to systems that need the CPU to issue storage requests on behalf of the GPU, BaM aims to offer efficient abstractions that let GPU threads make fine-grained accesses to datasets on SSDs and achieve substantially higher performance. BaM acceleration uses a custom storage driver designed specifically to let the GPU's inherent parallelism access storage devices directly. Unlike NVIDIA Magnum IO GPUDirect Storage (GDS), which depends on the CPU to prepare the communication between the GPU and the SSD, BaM lets the GPU prepare it.

Note: NVIDIA Research’s prototype projects, the NVIDIA Big Accelerator Memory (BaM) and the NVIDIA GPU Initiated Direct Storage (GIDS) dataloader, are not intended for public release.

As shown below, Micron has previously worked with NVIDIA GDS:

- The Performance of the Micron 9400 NVMe SSDs Using NVIDIA Magnum IO GPUDirect Storage Platform

- Micron and Magnum IO GPUDirect Storage Partner to Deliver AI & ML With Industry-Disruptive Innovation

Built on the BaM subsystem, the GIDS dataloader hides storage latency and satisfies memory capacity needs for GPU-accelerated Graph Neural Network (GNN) training. Because feature data makes up most of the total graph dataset for large-scale graphs, GIDS accomplishes this by storing the graph's feature data on the SSD. To enable fast GPU graph sampling, the graph structure data, which is usually considerably smaller than the feature data, is pinned in system memory. Finally, to minimize storage accesses, the GIDS dataloader sets aside a software-defined cache in GPU memory for recently accessed nodes.
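The idea of a software-defined cache for recently accessed nodes can be sketched in a few lines of Python. This is only an illustration: the source does not describe the eviction policy GIDS uses, so least-recently-used (LRU) eviction is an assumption here, and `NodeFeatureCache` and `slow_storage_read` are hypothetical names. The real cache lives in GPU memory rather than in a Python dictionary.

```python
from collections import OrderedDict


class NodeFeatureCache:
    """Toy LRU cache keyed by node ID (assumed policy, not the GIDS design)."""

    def __init__(self, capacity, fetch_from_storage):
        self.capacity = capacity
        self.fetch_from_storage = fetch_from_storage  # called only on a miss
        self.entries = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, node_id):
        if node_id in self.entries:
            self.entries.move_to_end(node_id)  # mark as most recently used
            self.hits += 1
            return self.entries[node_id]
        self.misses += 1
        features = self.fetch_from_storage(node_id)
        self.entries[node_id] = features
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict least recently used
        return features


storage_reads = []


def slow_storage_read(node_id):
    """Hypothetical stand-in for an SSD read; records which nodes were fetched."""
    storage_reads.append(node_id)
    return [float(node_id)] * 4


cache = NodeFeatureCache(capacity=2, fetch_from_storage=slow_storage_read)
for node_id in [1, 2, 1, 3, 2]:
    cache.get(node_id)

print(cache.hits, cache.misses, storage_reads)
```

Only cache misses reach storage, so the more often sampled nodes repeat across mini-batches, the fewer SSD accesses training needs.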

Training graph neural networks using GIDS

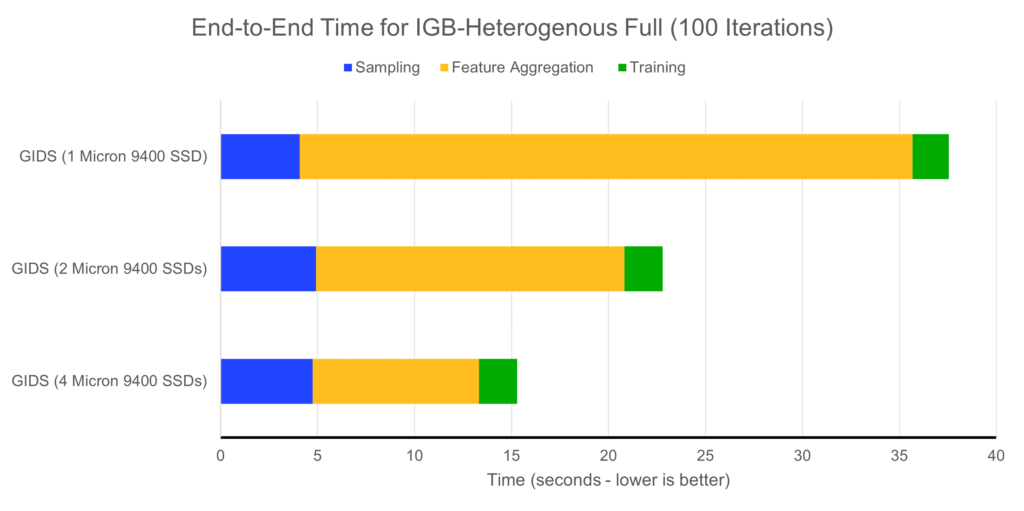

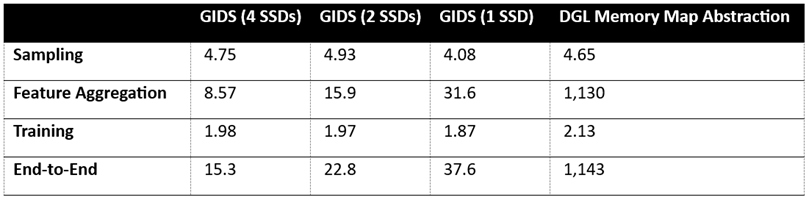

They used the full heterogeneous dataset from the Illinois Graph Benchmark (IGB) for GNN training to demonstrate the advantages of BaM and GIDS. At 2.28 TB, this dataset is too large to fit in the system memory of most systems. As shown in Figure 1 and Table 1, they varied the number of SSDs and timed training for 100 iterations on a single NVIDIA A100 80GB Tensor Core GPU to cover a range of configurations.

Figure 1: GIDS Training Time for IGB-Heterogeneous Full Dataset – 100 Iterations

Table 1: GIDS Training Time for IGB-Heterogeneous Full Dataset – 100 Iterations

In the first phase of training, the GPU performs graph sampling by accessing the graph structure data stored in system memory (shown in blue). The structure kept in system memory does not change across these tests, so this time varies very little between test configurations.

Another component is the actual training time (shown in green on the far right). As expected given its strong dependence on the GPU, this component varies little across the test configurations.

The most important component, and the one with the largest differences, is feature aggregation (highlighted in gold). Because the feature data for this system is stored on the SSDs, scaling from 1 to 4 Micron 9400 NVMe SSDs significantly reduces the feature aggregation processing time. Going from 1 SSD to 4 SSDs improves feature aggregation by 3.68x.

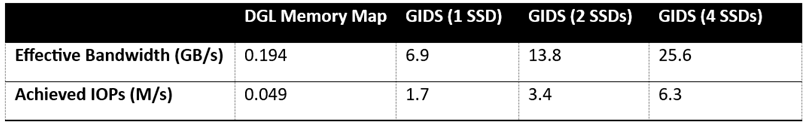

They also included a baseline measurement that accesses the feature data through the Deep Graph Library (DGL) data loader and a memory-map abstraction. Because this method goes through the CPU software stack rather than letting the GPU access storage directly, it shows how ineffective the CPU software stack is at keeping the GPU saturated during training. Relative to this baseline, feature aggregation with GIDS improves by 35.76x with 1 Micron 9400 NVMe SSD and by 131.87x with 4 Micron 9400 NVMe SSDs. Table 2, which shows the effective bandwidth and IOPS during these tests, provides another view of this data.
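As a quick consistency check on the figures quoted above, the short calculation below derives the 1-to-4 SSD scaling implied by the two baseline-relative speedups and compares it with the reported 3.68x. It also expresses that gain as a fraction of ideal linear scaling. The variable names are illustrative, and the inputs are the rounded values from the text, so the results are approximate.

```python
# Figures quoted in the text above.
speedup_1_ssd = 35.76     # GIDS with 1 SSD vs. baseline
speedup_4_ssd = 131.87    # GIDS with 4 SSDs vs. baseline
reported_scaling = 3.68   # feature aggregation gain from 1 to 4 SSDs

# Scaling implied by the two baseline-relative speedups.
implied_scaling = speedup_4_ssd / speedup_1_ssd

# Fraction of ideal (linear) 4x scaling actually achieved.
scaling_efficiency = reported_scaling / 4

print(f"implied 1->4 SSD scaling: {implied_scaling:.2f}x")  # 3.69x
print(f"scaling efficiency: {scaling_efficiency:.0%}")      # 92%
```

The implied ratio of about 3.69x matches the reported 3.68x to within rounding, and it corresponds to roughly 92% of linear scaling across four drives.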

Table 2: Effective Bandwidth and IOPS of GIDS Training vs Baseline

As datasets continue to grow, they recognize that a paradigm shift is required to train these models quickly and to take advantage of the improvements offered by leading GPUs. They think that BaM and GIDS are excellent starting points, and they want to work with more systems of this kind in the future.

Test Framework

| Component | Details |
|---|---|
| Server | Supermicro AS 4124GS-TNR |
| CPU | 2x AMD EPYC 7702 (64 Core) |
| Memory | 1 TB Micron DDR4-3200 |
| GPU | NVIDIA A100 80GB; Memory Clock: 1512 MHz; SM Clock: 1410 MHz |
| SSDs | 4x Micron 9400 MAX 6.4TB |
| OS | Ubuntu 22.04 LTS, Kernel 5.15.0.86 |
| NVIDIA Driver | 535.113.01 |
| Software Stack | CUDA 12.2, DGL 1.1.2, PyTorch 2.1 running in NVIDIA Docker container |