Lightricks

With the help of a brand-new, AI-powered expansion, you can express and visualize your next creative concept through captivating, dynamic visuals that accurately capture your vision.

With three improved user workflows, you can take unparalleled control and realize your vision. Start your story with pre-edited content, or begin with a blank storyboard and use custom AI technology for complete creative control.

The difference is all in the delivery. Using the cast, style, storyline, and other assets you’ve created, export a concept video and complete slide deck. It’s now simpler than ever to put together everything you’ll need to propose your next project.

Reach your preferred aesthetic with ease. With just a few clicks, apply any style to your whole storyboard. Use automatic generations to replicate the look and feel of an image that you upload as a reference.

Enjoy advanced upscaling features that let you export a high-resolution version of your creation, so your images are reproduced with greater clarity and detail than ever before.

Ignite your creative fire

We believe the right tools can change your perspective, regardless of your photography or videography experience. All Lightricks products are designed to make it easier for people of all abilities to express themselves and experience the transformational power of creation.

Rethink the way you create

Lightricks develops user-friendly applications that enable content creators of all skill levels to turn their curiosity into great work. This translates to a gentler learning curve and more intuitive features that remove barriers to production and bring the joy back into the creative process.

Change the way you appear online

Raise the bar on your inventiveness and give your work the consideration it merits. Make a connection with your audience and shape your community to best reflect your goal. Lightricks is available to assist you in navigating the dynamic landscape of the creative economy.

Videoleap

Drawing on advanced computer vision and artificial intelligence technology, Lightricks builds cutting-edge photo and video creation tools that let companies and content creators produce scalable, highly engaging content. With the pgvector extension in Cloud SQL for PostgreSQL, Lightricks improved search capabilities and increased retrieval rates by 40% for its robust video editor, Videoleap.

The goal at Lightricks is to close the gap between imagination and creation. With its video editing tool, Videoleap, both novices and experts can easily cut and combine videos wherever they are.

With AI algorithms, user-generated content (UGC) templates, and a simple editor, Lightricks hopes to make video editing accessible to everyone. Videoleap’s template search feature in particular is essential to users’ ability to browse this extensive and varied library of video templates effectively. Lightricks wanted to keep improving search while moving toward a more dynamic search approach. When Cloud SQL for PostgreSQL announced support for vector search, the team realized it was the best option for Videoleap.

Searching for a superior solution

Lightricks was already using Cloud SQL for PostgreSQL as its managed relational database before looking into vector database solutions. As a result, the team could concentrate on improving its apps and spend less time managing databases. However, to keep up with evolving trends in video editing, Videoleap’s search features needed more support. The goal was to let users quickly find templates that matched their creative vision and to give them more control over their browsing experience.

For a while, the platform’s search feature depended on exact keyword matching against predetermined annotations, so users had to use precise terms to retrieve relevant results. This limited their flexibility. The method frequently failed to capture the wide range of user queries, which may contain intricate expressions or terminology that isn’t explicitly included in the annotations.
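The limitation described above can be illustrated with a minimal sketch of exact keyword matching. The template IDs and annotations here are hypothetical and invented for illustration; the article does not show Videoleap’s actual metadata or search code.

```python
# Hypothetical annotations: the real template metadata is not shown in the article.
TEMPLATE_ANNOTATIONS = {
    "tpl_dog_park": {"dog", "ball", "park"},
    "tpl_city_night": {"city", "night", "timelapse"},
}


def keyword_search(query: str) -> list[str]:
    """Return templates whose annotations contain every query term exactly."""
    terms = set(query.lower().split())
    return sorted(
        template_id
        for template_id, annotations in TEMPLATE_ANNOTATIONS.items()
        if terms <= annotations  # every term must appear verbatim
    )


exact_hit = keyword_search("dog ball")      # matches: both terms are annotated
synonym_miss = keyword_search("puppy ball")  # no match: "puppy" is not an annotation
```

A query that uses a synonym such as "puppy" returns nothing, even though a relevant template exists. This is the kind of gap that exact matching leaves open.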

Since resolving each of these gaps individually would have taken a lot of time, Lightricks chose to investigate vector search, which uses vector embeddings to retrieve relevant data from databases. It didn’t take long for the team to realize that adding this technique to Videoleap would improve search quality and speed while producing more context-aware results.

To meet its objectives for search functionality, Lightricks looked at a few options. The team first tried Pinecone and Vespa, but slow integration and added complexity made them unsatisfactory. Introducing a separate vector database greatly increases development overhead, since it requires changes to deployment procedures, continuous integration pipelines, and local environments.

In addition, a separate vector database has a steeper learning curve for developers than pgvector, the well-known PostgreSQL extension for vector search, which mainly requires them to understand a new data type. Maintaining data consistency between PostgreSQL and an external vector database can be difficult and error-prone, and it requires careful management of continuous data synchronization. It can also be difficult to take advantage of PostgreSQL’s transactional features for atomic updates across relational and vector data. Splitting the systems can also impede efficient joins between vector data and other PostgreSQL tables.
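To make the point about joins concrete, here is a minimal sketch of a single SQL statement that filters on relational columns and orders by vector distance in the same query. The table and column names (`templates`, `template_embeddings`, `is_published`) are assumptions made for illustration, not Lightricks’ schema. The `%(name)s` placeholders follow the style used by the psycopg driver, which is also an assumption about the client library.

```python
def to_vector_literal(values):
    """Format numbers in pgvector's text input form, for example [0.1,0.2,0.3]."""
    return "[" + ",".join(str(float(v)) for v in values) + "]"


# Hypothetical schema:
#   templates(id, title, is_published)
#   template_embeddings(template_id, embedding vector(N))
NEAREST_PUBLISHED_TEMPLATES = """
SELECT t.id, t.title
FROM templates AS t
JOIN template_embeddings AS e ON e.template_id = t.id
WHERE t.is_published
ORDER BY e.embedding <=> %(query_vec)s::vector  -- <=> is pgvector's cosine distance operator
LIMIT %(limit)s;
"""


def build_query_params(query_embedding, limit=10):
    """Build the parameter mapping to pass alongside the SQL text."""
    return {"query_vec": to_vector_literal(query_embedding), "limit": limit}


params = build_query_params([0.1, 0.2, 1], limit=5)
```

Because the embeddings live in the same database as the metadata, the filter, the join, and the similarity ordering run in one statement, and an update to both tables can share one transaction.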

Chroma Vector DB

Lightricks also considered Chroma DB, but it lacked dependable hosting and deployment options. So when Cloud SQL for PostgreSQL introduced vector support via pgvector, the team knew it was the right choice. It not only matched the requirements exactly, but also worked seamlessly with the PostgreSQL architecture Lightricks already had. For many use cases, this streamlined approach is a more dependable and efficient solution because it lowers development overhead and minimizes the chance of data inconsistencies.

Using pgvector in conjunction with Cloud SQL to increase functionality

By using pgvector with Cloud SQL, Lightricks can easily join data, manage transactions, and enable semantic search. The team can also tune the setup to its requirements by choosing among indexing schemes that trade off factors such as speed and accuracy.
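As a rough sketch of what that tuning can look like, pgvector offers two approximate index types, HNSW and IVFFlat, each with its own build parameters. The helper functions and the table and column names below are hypothetical. The article does not say which parameters Lightricks chose, so the defaults shown are simply pgvector’s documented HNSW defaults and a commonly used IVFFlat starting value.

```python
def hnsw_index_sql(table, column, m=16, ef_construction=64):
    """Build DDL for an HNSW index using cosine distance.

    Identifiers are interpolated directly, so pass only trusted names.
    """
    return (
        f"CREATE INDEX ON {table} USING hnsw ({column} vector_cosine_ops) "
        f"WITH (m = {m}, ef_construction = {ef_construction});"
    )


def ivfflat_index_sql(table, column, lists=100):
    """Build DDL for an IVFFlat index using cosine distance."""
    return (
        f"CREATE INDEX ON {table} USING ivfflat ({column} vector_cosine_ops) "
        f"WITH (lists = {lists});"
    )


# Hypothetical table and column names.
hnsw_ddl = hnsw_index_sql("template_embeddings", "embedding")
ivfflat_ddl = ivfflat_index_sql("template_embeddings", "embedding", lists=200)
```

In general, HNSW tends to give a better speed and recall trade-off at query time at the cost of slower builds and more memory, while IVFFlat builds faster. Query-time settings such as `hnsw.ef_search` and `ivfflat.probes` adjust recall against latency further.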

The application Lightricks developed for Videoleap’s search function uses a microservices architecture. It stores UGC template metadata, along with multiple embeddings of the templates, in Cloud SQL to enable search while maintaining high availability and scalability. This approach improves the modularity, flexibility, and scalability of the system while adhering to microservices principles.

Faster results visualization with dynamic search features

Switching to a semantic search paradigm based on vector embeddings transformed the delivery of relevant results across a wide range of queries. The change was especially helpful for capturing the semantic intent of search queries rather than depending only on exact term matching. Because of this flexibility, the search can still return pertinent results even if the query contains variations such as synonyms, misspellings, or related concepts. For instance, a search for “labrador plays with frisbee” may now return a video of a golden retriever playing with a ball, instead of being constrained by the exact words used.
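The labrador example can be sketched with cosine similarity over toy vectors. The three-dimensional embeddings below are invented by hand for illustration. Real embeddings come from a model and have far more dimensions, and the article does not say which model Lightricks uses.

```python
import math

# Toy embeddings invented for illustration: not output from a real model.
EMBEDDINGS = {
    "golden retriever playing with a ball": [0.9, 0.8, 0.1],
    "city skyline at night": [0.1, 0.0, 0.9],
    "cat sleeping on a sofa": [0.6, 0.1, 0.2],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_embedding, top_k=1):
    """Rank stored items by similarity to the query, most similar first."""
    ranked = sorted(
        EMBEDDINGS,
        key=lambda title: cosine_similarity(query_embedding, EMBEDDINGS[title]),
        reverse=True,
    )
    return ranked[:top_k]


# Stand-in embedding for the query "labrador plays with frisbee".
QUERY = [0.85, 0.9, 0.05]
best_match = semantic_search(QUERY)
```

The query shares no words with the top result. It ranks first only because its vector points in nearly the same direction, which is the property that lets semantic search tolerate synonyms and related concepts.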

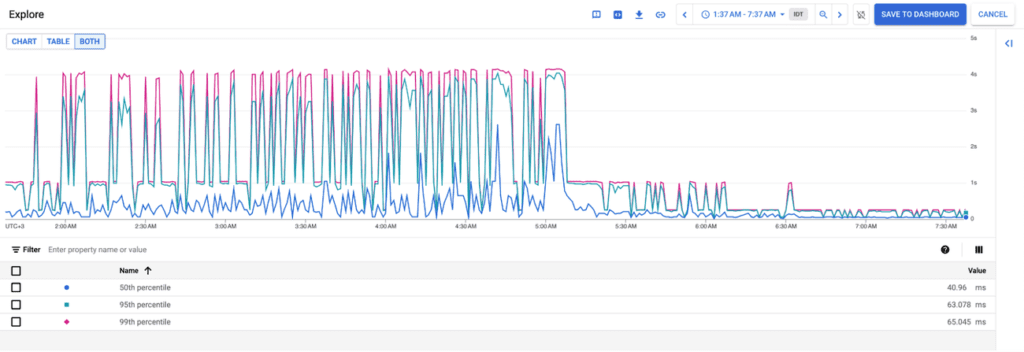

With the new method, Lightricks observed a notable rise in export rates, suggesting that the change to search added real value for creators. With pgvector, both the number of retrievals and the rate at which templates from retrieved results were used rose by 40%. pgvector’s support for the Hierarchical Navigable Small World (HNSW) algorithm made it possible to query millions of embeddings with high accuracy. The response time for 90% of queries (P90) dropped from one to four seconds to under 100 milliseconds. By enabling users to quickly find pertinent results, this frees up the creative process and makes editing as gratifying as the original creation.
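For readers unfamiliar with the P90 figure, the following sketch shows one common way to compute it, the nearest-rank method. The latency samples are invented for illustration and are not Lightricks’ measurements. Monitoring tools may use a slightly different interpolation.

```python
import math


def percentile_nearest_rank(samples, percent):
    """Return the smallest sample such that at least `percent`% of samples are <= it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < percent <= 100:
        raise ValueError("percent must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(percent * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Invented latency samples in milliseconds, including one slow outlier.
latencies_ms = [42, 55, 61, 48, 95, 73, 50, 66, 58, 1200]
p90 = percentile_nearest_rank(latencies_ms, 90)
```

A P90 under 100 milliseconds means nine out of ten queries finish within that time, so a single slow outlier like the last sample does not move the metric.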

Using AI to bring search possibilities into greater focus

A key component of the improved search is the addition of visual content-based search. With pgvector and Cloud SQL, Lightricks can store and query vector embeddings generated by neural networks. As a result, the search can now understand visual content and match it with user intent more accurately, leading to more relevant results.

Staying up to date with developments in video editing tools means recognizing the emerging trend of AI-assisted editing, in which compositions are frequently based on textual prompts. For this reason, Lightricks has a new Videoleap search function that uses AI prompts to help content creators find visual content that fits more precise or subtle themes. One example is distinguishing references to the Barbie movie from images of generic Barbie dolls.