Virtual platforms are compute-intensive, so using several host cores to speed them up is an obvious idea. The Intel Simics Simulator can use multiple host cores to run a simulation in parallel at several levels of granularity. This blog post looks at how a single device model can use threading to accelerate the simulation. It is a high-level explanation of the threading example in “workshop-02” of the Intel Simics Simulator Training Package, which is included in the Intel Simics Simulator Public Release.

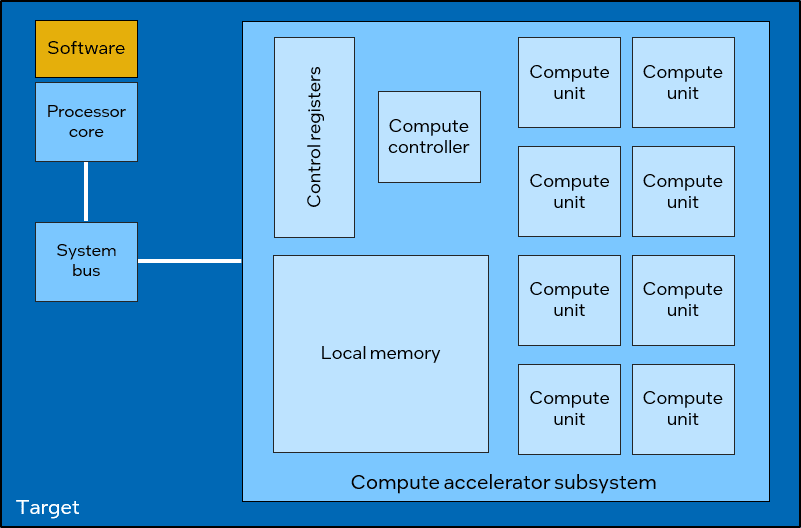

Target System

The goal is to parallelize the simulation of a compute accelerator subsystem that contains multiple parallel compute units. In addition to the compute units, the subsystem has a control unit that starts jobs and waits for them to finish. Software running on a processor sets up work descriptors in memory and interacts with the control registers of the compute units and the control unit.

Job Structure

The compute accelerator runs each job from start to finish before accepting a new one. Each job has a serial setup phase, in which software creates work descriptors and tells the control unit to start the computation, followed by a parallel compute phase and a collection phase. As seen by the software and hardware on the virtual platform, all compute units execute concurrently during the compute phase. The virtual execution time of a piece of work depends on the amount of data, and should not change depending on how the virtual platform is run.
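As a rough illustration of that last point, here is a minimal sketch of a timing model in which virtual duration is a function of data size only. The names `Job`, `virtual_cycles`, and `compute_phase_end`, the `CYCLES_PER_BYTE` constant, and the assumption that the compute phase ends when the slowest unit finishes are all hypothetical and are not taken from the workshop code.

```python
from dataclasses import dataclass

# Hypothetical timing parameter, chosen only for illustration.
CYCLES_PER_BYTE = 2


@dataclass
class Job:
    unit: int        # index of the compute unit that runs the job
    data_bytes: int  # amount of data named in the work descriptor


def virtual_cycles(job: Job) -> int:
    """Virtual duration of a job: depends on the data size and nothing else."""
    return job.data_bytes * CYCLES_PER_BYTE


def compute_phase_end(start_cycle: int, jobs: list[Job]) -> int:
    """All units start together (assumed), so the phase ends with the slowest one."""
    return start_cycle + max(virtual_cycles(job) for job in jobs)


jobs = [Job(0, 4096), Job(1, 1024), Job(2, 2048)]
print(compute_phase_end(1000, jobs))  # prints 9192
```

Nothing in this calculation refers to host time or to the number of host threads, which is what keeps the virtual timing identical between serial and threaded runs.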

The easiest way to simulate this is serially, with a single host thread running the compute units one after another. This is not the most efficient approach for this example, but it is robust and, most importantly, easy to model. How can this be parallelized?

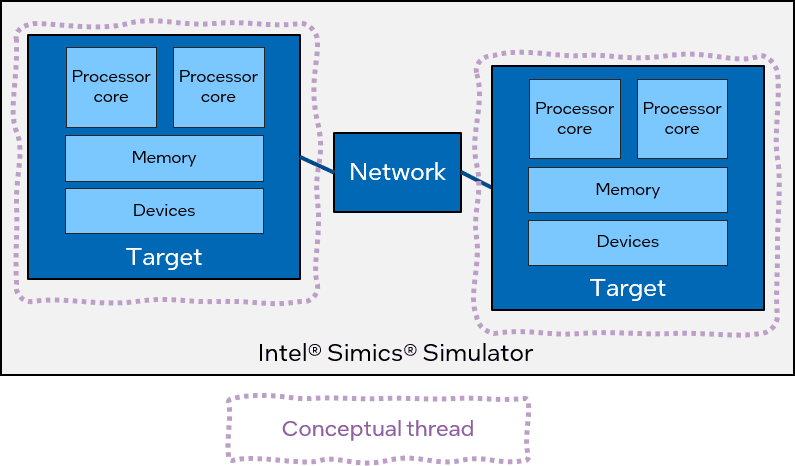

Standard Simulator Parallelism

The standard ways to parallelize a simulation in the Intel Simics Simulator work for practically any virtual platform. The simplest technique from a modeling perspective is to run the simulated machines in a virtual network in parallel. This is similar to running multiple simulator instances inside a single simulator session. Each machine (or target) is simulated serially on the inside, and runs in parallel with the other internally serial machines. All communication between machines goes through special link objects, which typically model networks.

This form of parallelism is appealing because it keeps modeling simple. Models have to eliminate process-global data (global variables), but no two execution threads ever access the data of a simulation object concurrently. This was the first kind of parallelism introduced in the Intel Simics Simulator, more than 15 years ago, and it was a simple step from the purely serial simulator.

This does not help here, since all the compute units are in a single target machine. For more parallelism, the simulator can let the processor cores within a target execute in parallel while the device models stay serialized. This works well for parallel software workloads, as explained in a recent blog post on threading on the simple RISC-V virtual platform.

This model involves real parallelism and true concurrency, which makes the implementation of the processor cores and the memory simulation more complicated. Device models are left unchanged and behave just as they do in a serial simulation.

This does not help with device models, unless you turn a device into a “processor” by implementing the simulator processor API. That is probably excessive and overly complex.

Actual Details

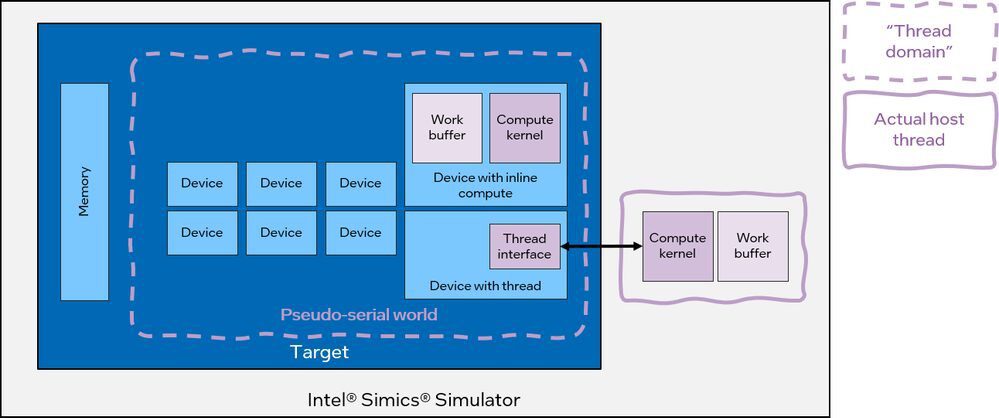

To parallelize device models, you need to know a bit more about the Intel Simics Simulator threading model. The simulator uses “thread domains” to describe parallelism within a single target system. On each target, simulation objects that assume serial execution are placed in a pseudo-serial world. Code in this serial world is executed by only one thread at a time.

Only “thread-aware” objects are allowed in thread domains outside the pseudo-serial world. Typical thread-aware objects are processor cores, interrupt controller models, per-core timers, and memory-management units. Simple device models such as the compute unit are usually not made thread-aware, since that takes more work.

Thread Parallelization

Most of the interaction between software and a compute unit does not need to be parallelized. Software writes and reads configuration and status registers, and these operations are cheap even without parallelization. A small fix, local to the device model, is to move just the main compute operation onto a thread.

The serial and threaded cases run the same compute kernel code on a work buffer. In serial mode, the compute kernel runs on the main simulation thread, on a buffer inside the device model. In threaded offload, the compute work is handed to a thread “on the side”, with a work buffer that lives outside the device model. Always using a work buffer avoids the overhead of reading and writing memory word by word.

For most accelerator models, it is straightforward to read all the data from memory into a buffer, compute, and write the results back. A virtual platform can read or write an arbitrarily large amount of memory in a single atomic simulation step. This is not precisely how a hardware direct memory access (DMA) operation behaves, but it seldom matters.
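The read, compute, write-back pattern can be sketched as follows. This is an illustration only: `FakeMemory` is a hypothetical stand-in for simulated target memory and is not a simulator API, and the `kernel` function is a placeholder rather than the workshop's compute kernel.

```python
class FakeMemory:
    """Hypothetical stand-in for simulated target memory (not a simulator API)."""

    def __init__(self, size: int) -> None:
        self.data = bytearray(size)

    def read_block(self, addr: int, length: int) -> bytearray:
        # Return a copy, so the kernel never touches target memory directly.
        return bytearray(self.data[addr:addr + length])

    def write_block(self, addr: int, block: bytes) -> None:
        self.data[addr:addr + len(block)] = block


def kernel(buf: bytearray) -> None:
    """Placeholder compute kernel: operates in place on the work buffer only."""
    for i, value in enumerate(buf):
        buf[i] = (value * 2) & 0xFF


def run_job(mem: FakeMemory, src: int, dst: int, length: int) -> None:
    buf = mem.read_block(src, length)  # one bulk read into the work buffer
    kernel(buf)                        # no memory accesses happen in here
    mem.write_block(dst, buf)          # one bulk write of the results


mem = FakeMemory(64)
mem.write_block(0, bytes([1, 2, 3, 200]))
run_job(mem, src=0, dst=16, length=4)
print(list(mem.data[16:20]))  # prints [2, 4, 6, 144]
```

Because `kernel` only sees the buffer, the same function can run on the main simulation thread or on a side thread without modification.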

Note that the compute threads are host operating-system threads created to run work concurrently. The simulation work in the thread domains is executed by simulation execution threads, which are managed by the simulator core scheduler. Host threads are not dedicated to particular thread domains.

Threading and Virtual Platform Semantics

The simulation semantics must be considered when implementing a threaded compute kernel. The control device must tell software whether the compute operation is idle and ready for a new job, busy processing, or done. It must also raise a completion interrupt.

Serial execution makes this simple. The time at which software sees the status update depends on the size of the accelerator job: the model calculates a delay and posts a timed event. The actual work can be done either when the job starts or when the event fires. In both cases it runs synchronously on the main execution thread, together with the code that handles completion.
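A minimal sketch of the serial case could look like this. The `EventQueue` class is a toy virtual-time queue written for this example and is not the simulator's event API, and `SerialUnit` and `CYCLES_PER_ITEM` are likewise hypothetical. Here the work is done at the start, and only the status change is delayed.

```python
import heapq


class EventQueue:
    """Toy virtual-time event queue (hypothetical, not the simulator API)."""

    def __init__(self):
        self.now = 0
        self._events = []
        self._seq = 0  # tie-breaker so callbacks are never compared

    def post(self, delay, callback):
        heapq.heappush(self._events, (self.now + delay, self._seq, callback))
        self._seq += 1

    def run(self):
        while self._events:
            self.now, _, callback = heapq.heappop(self._events)
            callback()


class SerialUnit:
    CYCLES_PER_ITEM = 10  # hypothetical timing parameter

    def __init__(self, queue):
        self.queue = queue
        self.status = "idle"
        self.result = None
        self.done_at = None

    def start(self, items):
        self.status = "busy"
        self.result = [x * x for x in items]  # the real work, done up front
        delay = len(items) * self.CYCLES_PER_ITEM
        self.queue.post(delay, self._complete)

    def _complete(self):
        # Software-visible state changes only at the calculated virtual time.
        self.status = "done"
        self.done_at = self.queue.now


queue = EventQueue()
unit = SerialUnit(queue)
unit.start([1, 2, 3])
print(unit.status)  # prints busy
queue.run()
print(unit.status, unit.done_at, unit.result)  # prints done 30 [1, 4, 9]
```

A real model would also raise the completion interrupt in the event handler; that step is left out here to keep the sketch short.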

In the threaded model, the computation is asynchronous to the main simulation thread, and the host time at which the compute work completes is not known in advance. One solution is to update the visible state of the model whenever the computation happens to finish. However, this makes the simulation non-deterministic and makes it behave differently from the serial case.

A better solution is to start the compute work immediately and post a completion event at the same virtual time as in the serial case. If the compute thread has not finished by the time the event fires, the completion event handler must wait for it, blocking simulation progress in the meantime.

A “done flag” is needed to indicate the status of the computation. Thread-safe access to this flag is the only part of the setup that involves threaded programming. Everything else is plain serial code.
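The scheme described above can be sketched in a few lines. This is an illustration under stated assumptions, not the workshop implementation: `ThreadedUnit`, `CYCLES_PER_ITEM`, and the method names are hypothetical, and a standard-library `threading.Event` plays the role of the done flag. The call to `complete()` stands in for the completion event handler firing at the precomputed virtual time.

```python
import threading

CYCLES_PER_ITEM = 10  # hypothetical timing parameter


class ThreadedUnit:
    def __init__(self):
        self.status = "idle"
        self.result = None
        self._out = None
        self._worker = None
        self._done = threading.Event()  # the thread-safe "done flag"

    def start(self, items, now):
        """Runs on the simulation thread; returns the virtual completion time."""
        self.status = "busy"
        self._done.clear()
        work = list(items)  # private work buffer handed to the side thread
        self._worker = threading.Thread(target=self._compute, args=(work,))
        self._worker.start()
        # Same delay calculation as in the serial case.
        return now + len(work) * CYCLES_PER_ITEM

    def _compute(self, work):
        # Runs on the side thread and touches only its own buffer.
        self._out = [x * x for x in work]
        self._done.set()

    def complete(self):
        """Completion event handler; runs on the simulation thread."""
        self._done.wait()  # blocks the simulation if the work is not finished
        self._worker.join()
        self.result = self._out
        self.status = "done"


unit = ThreadedUnit()
done_at = unit.start([1, 2, 3], now=100)
# The simulator would advance virtual time to done_at before this call.
unit.complete()
print(done_at, unit.status, unit.result)  # prints 130 done [1, 4, 9]
```

The software-visible state changes only inside `complete()`, so the outcome is the same however long the side thread takes on the host. The cost is that a slow computation stalls the simulation at the completion event.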

Practical Mandelbrot Computation

The “workshop-02” example in the training package of the Intel Simics Simulator Public Release uses this threading approach. It includes complete source code, along with instructions for running the compute accelerator and evaluating optimizations to it, including threading.