Explore the world of in-network computing for telecom workloads and uncover its benefits for your network infrastructure.

Azure Operator Nexus is a next-generation hybrid cloud platform for communications service providers. It deploys network functions (NFs) across cloud and edge networks. NFs perform a wide range of tasks, from Layer 4 load balancers, firewalls, network address translators (NATs), and 5G user plane functions (UPFs) to deep packet inspection, radio access networking, and analytics. Because NFs handle huge volumes of traffic and large numbers of concurrent flows, their performance and scalability are critical to network operations.

Until recently, network operators had two options for deploying these critical NFs: standalone hardware middlebox appliances, or network function virtualization (NFV) on a cluster of commodity CPU servers.

The performance, memory capacity, cost, and energy efficiency of each option must be weighed against the workloads and operating conditions, such as the traffic rate and the number of concurrent flows that NF instances must handle.

Our analysis shows that running NFs on commodity CPU servers is more cost-effective, scalable, and flexible than using proprietary hardware middleboxes. This approach works well for modest traffic rates, below a few hundred Gbps. As traffic volume grows, however, it breaks down, because more and more CPU cores must be dedicated to network functions.

In-network computing: A new paradigm

Microsoft’s novel approach of deploying NFs on programmable switches and network interface cards (NICs) has attracted attention in both industry and academia. This shift has been enabled by the development of high-performance programmable network devices and of data plane programming languages such as P4 and NPL.
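Data plane languages such as P4 express packet processing largely as match-action tables: the device extracts header fields, looks them up in a table, and runs the action bound to the matching entry. The Python model below is only a sketch of that abstraction under simplifying assumptions (exact match on an IPv4 5-tuple, two hypothetical actions `forward` and `drop`); it is not P4 code and not how any NF described here is actually written.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Flow key: (src_ip, dst_ip, protocol, src_port, dst_port)
FlowKey = Tuple[str, str, int, int, int]


@dataclass
class Packet:
    key: FlowKey
    egress_port: int = -1
    dropped: bool = False


Action = Callable[[Packet], None]


def forward(port: int) -> Action:
    """Hypothetical action: send the packet out of a given port."""
    def act(pkt: Packet) -> None:
        pkt.egress_port = port
    return act


def drop(pkt: Packet) -> None:
    """Hypothetical action: mark the packet as dropped."""
    pkt.dropped = True


class ExactMatchTable:
    """Exact-match table mapping a flow key to an action, with a default on miss."""

    def __init__(self, default_action: Action):
        self.entries: Dict[FlowKey, Action] = {}
        self.default_action = default_action

    def add_entry(self, key: FlowKey, action: Action) -> None:
        # On a real device, the control plane installs entries like this.
        self.entries[key] = action

    def apply(self, pkt: Packet) -> None:
        # Match on the key, then execute the bound action (or the default).
        self.entries.get(pkt.key, self.default_action)(pkt)


table = ExactMatchTable(default_action=drop)
table.add_entry(("10.0.0.1", "10.0.0.2", 6, 1234, 80), forward(3))

hit = Packet(("10.0.0.1", "10.0.0.2", 6, 1234, 80))
miss = Packet(("10.0.0.9", "10.0.0.2", 6, 5555, 80))
table.apply(hit)
table.apply(miss)
print(hit.egress_port, miss.dropped)  # 3 True
```

The table itself holds no per-packet logic; behavior changes by installing different entries, which is what makes the data plane programmable at runtime.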

Programmable switching application-specific integrated circuits (ASICs) can process tens of Tbps, or a few billion packets per second, while keeping the data plane programmable. Programmable NICs, or "smart NICs," built with network processing units (NPUs) or field-programmable gate arrays (FPGAs), offer a comparable option. Together, these advances turn the data planes of these devices into programmable platforms.
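How a bit rate translates into a packet rate depends on packet size. On Ethernet, every frame also occupies 20 bytes of preamble, start-of-frame delimiter, and inter-frame gap on the wire. The sketch below does that conversion; the 12.8 Tbps line rate is an illustrative assumption, not a figure from this article.

```python
# Per-frame wire overhead: 7 B preamble + 1 B start-of-frame delimiter + 12 B inter-frame gap.
ETHERNET_OVERHEAD_BYTES = 20


def packets_per_second(line_rate_bps: float, frame_bytes: int) -> float:
    """Maximum packet rate for back-to-back Ethernet frames of a given size."""
    bits_on_wire = (frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return line_rate_bps / bits_on_wire


if __name__ == "__main__":
    rate = 12.8e12  # illustrative switch capacity in bits per second (assumption)
    for size in (64, 500, 1500):
        print(f"{size:>5} B frames: {packets_per_second(rate, size) / 1e9:.2f} Gpps")
```

At the assumed 12.8 Tbps this prints about 19.05 Gpps for 64-byte frames, 3.08 Gpps for 500-byte frames, and 1.05 Gpps for 1500-byte frames, so "a few billion packets per second" corresponds to average packets of a few hundred bytes.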

These advances gave rise to in-network computing. Many functions that traditionally ran on CPU servers or proprietary hardware can now run directly on network data plane devices, including NFs and components of other distributed systems. In-network computing lets network engineers implement various NFs on programmable switches or NICs and handle large volumes of traffic (> 10 Tbps) cost-effectively (one switch versus tens of servers) without dedicating CPU cores to network functions.
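The "one switch versus tens of servers" comparison, and the earlier point that CPU-based NFs need ever more cores as traffic grows, come down to capacity arithmetic. The sketch below makes it explicit. Every per-device figure (throughput per CPU core, cores per server, switch capacity) is a caller-supplied assumption for illustration, not a measurement from our work.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    cpu_cores: int
    servers: int
    switches: int


def size_deployment(traffic_gbps: float,
                    gbps_per_core: float,
                    cores_per_server: int,
                    switch_capacity_gbps: float) -> Deployment:
    """Rough device counts needed to carry traffic_gbps of NF traffic.

    All per-device parameters are assumptions supplied by the caller.
    """
    cores = math.ceil(traffic_gbps / gbps_per_core)         # grows linearly with traffic
    servers = math.ceil(cores / cores_per_server)
    switches = math.ceil(traffic_gbps / switch_capacity_gbps)
    return Deployment(cores, servers, switches)


# Hypothetical figures: 10 Gbps per core, 32 cores per server, 12.8 Tbps per switch.
for traffic in (200, 10_000):
    print(traffic, "Gbps ->", size_deployment(traffic, 10, 32, 12_800))
```

Under these hypothetical figures, 200 Gbps fits on a single server (20 cores), while 10 Tbps needs 1,000 cores spread over 32 servers but still only one switch.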

Current in-network computing constraints

In-network computing holds great promise, but its full adoption in the cloud and at the edge remains elusive. The biggest obstacle has been supporting the demands of stateful applications on a programmable data plane device. The current approach works for running a single application with a fixed, limited workload, but it constrains the broader potential of in-network computing.

Because device resources are inelastic, a gap has opened between the evolving demands of network operators and application developers and the current, fairly narrow view of what in-network computing can do. As the number of in-network applications and the volume of traffic they process grow, this paradigm comes under increasing strain.

Today, an in-network program is confined to a single device with limited resources, such as the tens of MB of SRAM on a programmable switch. Raising these limits requires major hardware upgrades, so when an application's workload exceeds the device's resource capacity, the application fails. This limits the adoption and optimization of in-network computing.
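A quick estimate shows how a stateful application outgrows a switch. The sketch below assumes an IPv4 5-tuple key (13 bytes), a hypothetical 32-byte per-flow value, 80% hash-table occupancy, and a hypothetical 20 MB of SRAM available to the table. It ignores how switch memory is actually divided across pipeline stages, which makes real limits tighter still.

```python
import math


def table_bytes(num_entries: int, key_bytes: int, value_bytes: int,
                load_factor: float = 1.0) -> int:
    """Approximate memory for a hash-based per-flow table.

    load_factor is the fraction of slots actually occupied; values below 1
    account for the slack a hash table needs.
    """
    if not 0 < load_factor <= 1:
        raise ValueError("load_factor must be in (0, 1]")
    return math.ceil(num_entries * (key_bytes + value_bytes) / load_factor)


IPV4_5TUPLE_BYTES = 4 + 4 + 2 + 2 + 1  # src/dst IP, src/dst port, protocol
VALUE_BYTES = 32                       # hypothetical per-flow state
SRAM_BUDGET = 20 * 10**6               # hypothetical bytes available to this table

for flows in (100_000, 1_000_000):
    need = table_bytes(flows, IPV4_5TUPLE_BYTES, VALUE_BYTES, load_factor=0.8)
    verdict = "fits" if need <= SRAM_BUDGET else "exceeds budget"
    print(f"{flows:>9,} flows -> {need / 1e6:6.1f} MB ({verdict})")
```

With these assumptions, 100,000 flows need under 6 MB and fit, while a million flows need roughly 56 MB and do not. Nothing short of new hardware changes that outcome on a single switch.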

Resource elasticity for in-network computing

To address the resource limits of in-network computing, we have enabled resource elasticity. In-switch applications, those running on programmable switches, face the tightest resource and capability constraints of any of today's programmable data plane devices. Compared with hardware-intensive approaches such as enhancing switch ASICs, or with hyper-optimizing applications, an on-rack resource augmentation architecture is a more practical alternative.

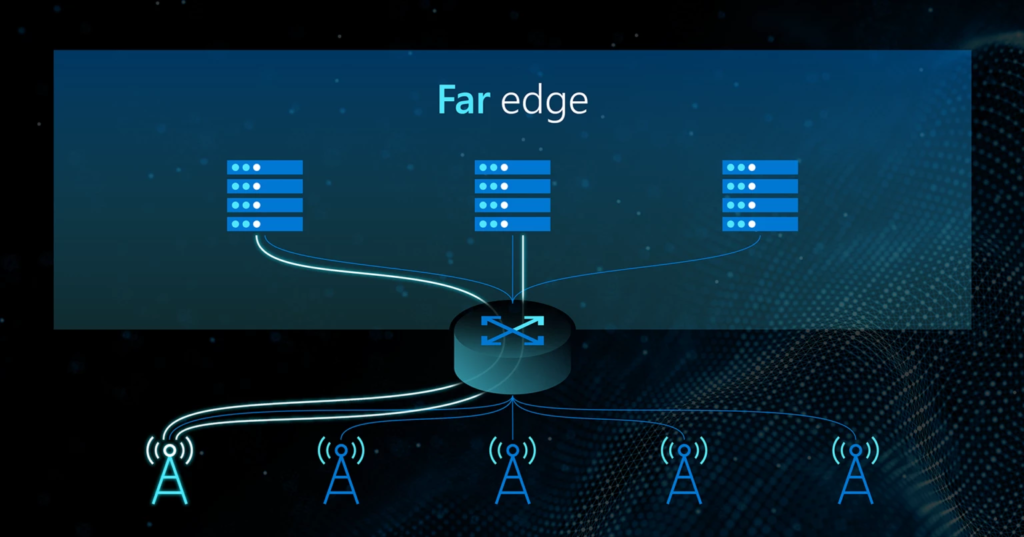

This architecture envisions a rack-mounted deployment of a programmable switch together with smart NICs and software switches running on CPU servers. These external devices provide an economical, incremental way to increase a programmable network's effective capacity to meet future workload needs. The approach offers an innovative and practical answer to the constraints of in-network computing.

At the ACM SIGCOMM 2020 conference, we introduced the Table Extension Architecture (TEA). TEA provides elastic memory through a novel, high-performance virtual memory abstraction, which allows top-of-rack (ToR) programmable switches to support NFs with one million per-flow table entries.

Such NFs may need hundreds of megabytes of memory, far more than a switch provides. TEA lets switches use idle DRAM on CPU servers in the same rack in a cost-efficient and scalable way. Building on Remote Direct Memory Access (RDMA), it hides this complexity and exposes only high-level APIs to application developers.
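The core pattern is that the switch translates a lookup key into an address in server DRAM and fetches the entry with a one-sided read, without involving the server's CPU. The Python model below is only a conceptual sketch of that address-translation-plus-remote-read pattern. The RDMA reads and writes are simulated with byte slices, the entry layout is hypothetical, and each bucket holds a single entry (colliding keys overwrite each other). It is not TEA's actual data structure or API.

```python
import hashlib
import struct
from typing import Optional

# Hypothetical entry layout: valid flag (1 B), 64-bit key hash (8 B), 32-bit value (4 B).
ENTRY_FMT = "!BQI"
ENTRY_SIZE = struct.calcsize(ENTRY_FMT)  # 13 bytes; "!" means no padding


class RemoteMemory:
    """Stand-in for a DRAM region on a rack server.

    rdma_read/rdma_write only simulate one-sided RDMA operations with byte
    slices; no real RDMA library is used.
    """

    def __init__(self, size_bytes: int):
        self.buf = bytearray(size_bytes)

    def rdma_read(self, offset: int, length: int) -> bytes:
        return bytes(self.buf[offset:offset + length])

    def rdma_write(self, offset: int, data: bytes) -> None:
        self.buf[offset:offset + len(data)] = data


def key_hash(key: bytes) -> int:
    """64-bit hash of a flow key."""
    return int.from_bytes(hashlib.blake2b(key, digest_size=8).digest(), "big")


class ExtendedTable:
    """Switch-side view of a table that lives in remote DRAM."""

    def __init__(self, mem: RemoteMemory, num_buckets: int):
        self.mem = mem
        self.num_buckets = num_buckets

    def _offset(self, h: int) -> int:
        # Address translation: key hash -> bucket index -> byte offset.
        return (h % self.num_buckets) * ENTRY_SIZE

    def insert(self, key: bytes, value: int) -> None:
        # Control-plane path: write the entry into the remote region.
        h = key_hash(key)
        self.mem.rdma_write(self._offset(h), struct.pack(ENTRY_FMT, 1, h, value))

    def lookup(self, key: bytes) -> Optional[int]:
        # Data-plane path: one remote read, then verify the stored hash tag.
        h = key_hash(key)
        raw = self.mem.rdma_read(self._offset(h), ENTRY_SIZE)
        valid, tag, value = struct.unpack(ENTRY_FMT, raw)
        return value if valid and tag == h else None


num_buckets = 1024
table = ExtendedTable(RemoteMemory(num_buckets * ENTRY_SIZE), num_buckets)
table.insert(b"10.0.0.1:1234->10.0.0.2:80/tcp", 42)
print(table.lookup(b"10.0.0.1:1234->10.0.0.2:80/tcp"))  # 42
```

Because each lookup is a single fixed-size read at a computed address, its cost does not depend on how many entries the table holds, which is the property that makes remote tables attractive for line-rate lookups.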

Our evaluation with a range of NFs shows that TEA delivers low, predictable latency and scalable performance for table lookups without using the servers' CPUs. The design has attracted interest from academia and industry and has been applied to network telemetry and 5G user plane functions.

We presented ExoPlane at the USENIX Symposium on Networked Systems Design and Implementation (NSDI) in April.[2] ExoPlane is an operating system for on-rack switch resource augmentation that can serve multiple applications.

ExoPlane uses a practical runtime operating model and state abstraction to manage application state across multiple devices with low performance and resource overheads. The operating system consists of two components: a planner and a runtime environment. The planner accepts multiple programs written for the switch, with minimal or no modifications, and optimizes resource allocation based on inputs from network operators and developers.
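A planner of this kind has to decide how much of each program's state stays on the switch and how much is placed on external devices. The greedy allocator below is a hypothetical stand-in that illustrates the shape of that decision; it is not ExoPlane's planning algorithm. Operator and developer input is reduced to a single `priority` per application, and capacities are abstract megabytes.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class App:
    name: str
    state_mb: float  # memory needed for the app's tables
    priority: int    # hypothetical operator input: higher stays on the switch first


@dataclass
class Plan:
    on_switch: Dict[str, float] = field(default_factory=dict)
    offloaded: Dict[str, float] = field(default_factory=dict)


def plan(apps: List[App], switch_mb: float, external_mb: float) -> Plan:
    """Greedily keep high-priority state on the switch and spill the rest."""
    result = Plan()
    free_switch, free_ext = switch_mb, external_mb
    for app in sorted(apps, key=lambda a: a.priority, reverse=True):
        local = min(app.state_mb, free_switch)
        remote = app.state_mb - local
        if remote > free_ext:
            raise ValueError(f"not enough external capacity for {app.name}")
        free_switch -= local
        free_ext -= remote
        result.on_switch[app.name] = local
        result.offloaded[app.name] = remote
    return result


p = plan([App("nat", 30, priority=1), App("lb", 15, priority=2)],
         switch_mb=20, external_mb=1000)
print(p.on_switch, p.offloaded)
```

Here the higher-priority load balancer keeps all 15 MB of its state on the switch, and the NAT gets the remaining 5 MB locally with 25 MB placed on external devices. A real planner would also weigh throughput, latency, and per-stage switch constraints, which this sketch ignores.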

The ExoPlane runtime environment manages state, balances load, and handles device failures across the switch and external devices. Our evaluation shows that ExoPlane achieves low latency, scalable throughput, and fast failover with a small resource footprint and minimal changes to applications.
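One common technique for spreading flows across external devices, so that a failure moves only the failed device's flows, is rendezvous (highest-random-weight) hashing. The sketch below uses it purely to illustrate load balancing with fast failover; ExoPlane's runtime may use a different mechanism, the device names are hypothetical, and migrating or recovering per-flow state is not modeled.

```python
import hashlib
from typing import Iterable, Set


def _score(flow: str, device: str) -> int:
    """Deterministic pseudo-random weight for a (flow, device) pair."""
    digest = hashlib.sha256(f"{flow}|{device}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


class FlowAssigner:
    """Maps each flow to the healthy device with the highest score."""

    def __init__(self, devices: Iterable[str]):
        self.alive: Set[str] = set(devices)

    def device_for(self, flow: str) -> str:
        if not self.alive:
            raise RuntimeError("no healthy external devices")
        return max(self.alive, key=lambda d: _score(flow, d))

    def mark_failed(self, device: str) -> None:
        # Only flows whose top-scoring device was this one will move.
        self.alive.discard(device)


assigner = FlowAssigner(["nic0", "nic1", "server0"])  # hypothetical devices
flows = [f"flow{i}" for i in range(1000)]
before = {f: assigner.device_for(f) for f in flows}
assigner.mark_failed("nic1")
after = {f: assigner.device_for(f) for f in flows}
moved = sum(before[f] != after[f] for f in flows)
print(f"{moved} of {len(flows)} flows moved after nic1 failed")
```

The design choice is that failover needs no global reshuffle: surviving devices keep every flow they already owned, so only the failed device's share of state has to be rebuilt elsewhere.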

The future of in-network computing

As we continue to explore in-network computing, we see many possibilities, promising research directions, and new operational deployments. Our work with TEA and ExoPlane has shown us what on-rack resource augmentation and elastic in-network computing can achieve. We believe they can make in-network computing practical for future applications, telecommunication workloads, and data plane hardware.

The evolving landscape of networked systems will keep presenting new challenges and opportunities. Microsoft is actively researching, building, and bringing such technology advances into production through infrastructure upgrades. By freeing CPU cores, in-network computing can reduce cost, improve scalability, and enhance functionality for operators using platforms such as Azure Operator Nexus.
