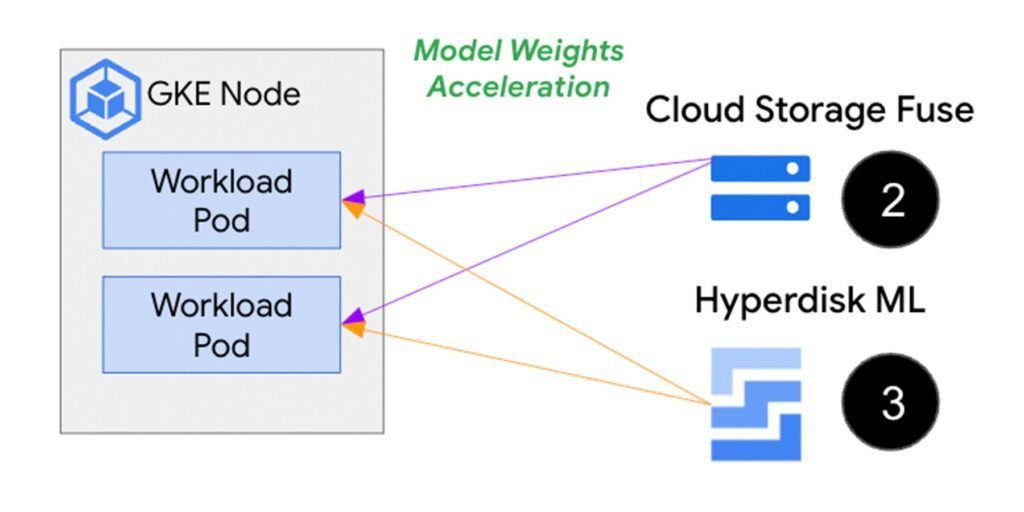

Cloud Storage Fuse or Hyperdisk ML accelerates model + weight load times from Google Cloud Storage.

More sophisticated AI models need ever more model data. Loading the models, weights, and frameworks needed to serve them for inference can add seconds or even minutes of scaling delay, which affects both costs and the end-user experience.

Inference servers such as Triton, Text Generation Inference (TGI), or vLLM are packaged as containers that are often larger than 10 GB. They can be slow to download, which extends pod startup time in Kubernetes. Once the inference pod has started, it must also load model weights, which can be hundreds of gigabytes in size, compounding the data loading problem.

This article examines techniques for accelerating data loading for both inference serving containers and model + weight downloads, so you can reduce the total time it takes to load your AI/ML inference workload on Google Kubernetes Engine (GKE).

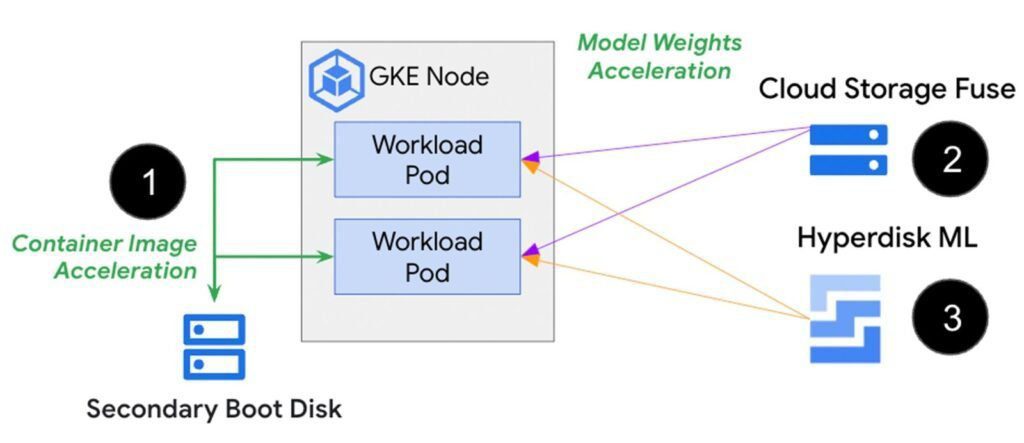

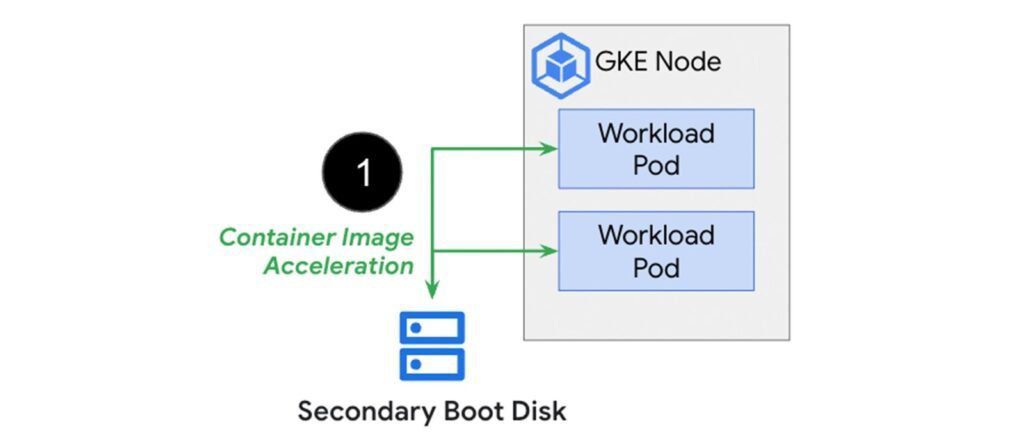

- Accelerating container load times by using secondary boot disks to cache container images, with your inference engine and relevant libraries, directly on the GKE node.

- Accelerating model + weight load times from Google Cloud Storage by using Cloud Storage Fuse or Hyperdisk ML.

The graphic above depicts a secondary boot disk (1) that stores the container image ahead of time, so the image download step is skipped during pod/container startup. For AI/ML inference applications with demanding performance and scalability requirements, Cloud Storage Fuse (2) and Hyperdisk ML (3) are options for connecting the pod to model + weight data stored in Cloud Storage or on a network-attached disk. We examine each of these approaches in more detail below.

Accelerating container load times with secondary boot disks

GKE lets you pre-cache your container image on a secondary boot disk that is attached to your node at creation time. Loading your containers this way lets you skip the image download step and start launching them immediately, which significantly reduces startup time. The graphic below shows that download times for container images grow linearly with image size. Those timings are then compared with using a cached copy of the container image that is preloaded on the node.

When a 16 GB container image is cached ahead of time on a secondary boot disk, load times can be up to 29 times faster than downloading the image from a container registry. This approach also delivers the speedup regardless of container size, so large container images load predictably fast.

To use secondary boot disks, first create a disk containing all of your images, then create a disk image from that disk, and specify the disk image when you create your GKE node pools with secondary boot disks. See the documentation for more details.
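As a rough sketch of the final step, the snippet below assembles a `gcloud` command that creates a node pool with a secondary boot disk. The cluster, node pool, location, and disk image names are hypothetical placeholders. The `--secondary-boot-disk` and `--enable-image-streaming` flags reflect our reading of the GKE documentation and are assumptions here, so verify them against the current docs before use.

```python
import shlex


def node_pool_create_command(cluster, node_pool, disk_image, location):
    """Build a gcloud argument list for a node pool with a cached image disk.

    All names passed in are placeholders. The flag spellings are assumptions
    that should be checked against the GKE documentation.
    """
    secondary_disk = (
        f"disk-image=global/images/{disk_image},"
        "mode=CONTAINER_IMAGE_CACHE"
    )
    return [
        "gcloud", "container", "node-pools", "create", node_pool,
        f"--cluster={cluster}",
        f"--location={location}",
        "--enable-image-streaming",
        f"--secondary-boot-disk={secondary_disk}",
    ]


if __name__ == "__main__":
    args = node_pool_create_command(
        cluster="inference-cluster",        # hypothetical cluster name
        node_pool="cached-image-pool",      # hypothetical node pool name
        disk_image="inference-image-disk",  # hypothetical disk image name
        location="us-central1",             # hypothetical location
    )
    # Print a shell-safe command line for review; nothing is executed here.
    print(shlex.join(args))
```

Building the argument list rather than running the command keeps the sketch side-effect free; the printed line can be reviewed and run by hand.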

Accelerating model weights load times

Many machine learning frameworks write their checkpoints (snapshots of model weights) to object storage such as Google Cloud Storage, a popular choice for long-term storage. With Cloud Storage as the source of truth, the two primary options for retrieving your data at the GKE-pod level are Cloud Storage Fuse and Hyperdisk ML (HdML).

There are two primary factors to take into account while choosing a product:

- Performance: the speed at which the GKE node can load the data

- Operational simplicity: how easy it is to update the data

For model weights stored in object storage buckets, Cloud Storage Fuse offers a direct connection to Cloud Storage. To avoid repeated downloads from the source bucket, which increase latency, there is also a caching option for files that must be read more than once.

Cloud Storage Fuse is an attractive option because a pod needs no pre-hydration step before it can read new files added to a configured bucket. Note that if you change which buckets the pod is connected to, you will need to restart the pod with an updated Cloud Storage Fuse configuration. You can improve performance further by enabling parallel downloads, which have multiple workers download a model at once and significantly improve model pull performance.
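To make the Cloud Storage Fuse setup more concrete, here is a minimal sketch that generates a pod manifest mounting a bucket through the GKE Cloud Storage Fuse CSI driver. The pod name, image, bucket, and cache size are hypothetical. The annotation, driver name, `fileCacheCapacity` attribute, and parallel download mount option are written as we understand them from the GKE documentation and should be treated as assumptions to verify.

```python
import json


def gcsfuse_pod_manifest(pod_name, image, bucket, mount_path="/models",
                         file_cache="10Gi", parallel_downloads=True):
    """Return a pod manifest (as a dict) that mounts a bucket read-only."""
    mount_options = ["implicit-dirs"]
    if parallel_downloads:
        # Assumed option name; parallel downloads rely on the file cache.
        mount_options.append("file-cache:enable-parallel-downloads:true")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": pod_name,
            # Assumed annotation that asks GKE to inject the Fuse sidecar.
            "annotations": {"gke-gcsfuse/volumes": "true"},
        },
        "spec": {
            "containers": [{
                "name": "inference-server",
                "image": image,
                "volumeMounts": [{
                    "name": "model-weights",
                    "mountPath": mount_path,
                    "readOnly": True,
                }],
            }],
            "volumes": [{
                "name": "model-weights",
                "csi": {
                    "driver": "gcsfuse.csi.storage.gke.io",
                    "readOnly": True,
                    "volumeAttributes": {
                        "bucketName": bucket,
                        "mountOptions": ",".join(mount_options),
                        "fileCacheCapacity": file_cache,
                    },
                },
            }],
        },
    }


if __name__ == "__main__":
    manifest = gcsfuse_pod_manifest(
        pod_name="llm-server",                      # hypothetical
        image="example.com/inference-server:tag",   # hypothetical
        bucket="my-model-weights",                  # hypothetical
    )
    # JSON is accepted by kubectl, so this output can be applied after review.
    print(json.dumps(manifest, indent=2))
```

The pod's service account still needs read access to the bucket, which this sketch does not configure.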

Hyperdisk ML offers better performance and scalability than downloading files directly to the pod from Cloud Storage or another online source. A single Hyperdisk ML instance can also be attached to up to 2500 nodes, with an aggregate bandwidth of up to 1.2 TiB/sec. That makes it a strong choice for inference workloads that span many nodes and repeatedly read the same data in read-only fashion. To use Hyperdisk ML, load your data onto the disk before first use and again after each update. Keep in mind that this adds operational overhead if your data changes often.

Your use case will determine which model + weight loading product you choose. The table below compares the two in more detail:
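To put the Hyperdisk ML limits in perspective, the short calculation below divides the quoted aggregate bandwidth evenly across the quoted maximum node count. This is only a back-of-the-envelope sketch: the even split is an assumption, the 100 GiB weight size is a hypothetical example, and real per-node throughput also depends on factors such as machine type and provisioned throughput.

```python
GIB_PER_TIB = 1024


def per_node_bandwidth_gib_s(aggregate_tib_s=1.2, nodes=2500):
    """Even share of the aggregate bandwidth per node, in GiB/s."""
    return aggregate_tib_s * GIB_PER_TIB / nodes


def load_time_seconds(weights_gib, bandwidth_gib_s):
    """Time to read the weights once at a steady bandwidth."""
    return weights_gib / bandwidth_gib_s


if __name__ == "__main__":
    share = per_node_bandwidth_gib_s()
    # With all 2500 nodes reading at once, each gets roughly 0.49 GiB/s.
    print(f"Per-node share: {share:.2f} GiB/s")
    # Hypothetical 100 GiB of model weights read at that per-node share.
    print(f"Load time: {load_time_seconds(100, share):.0f} s")
```

With fewer nodes attached, each node's share of the aggregate ceiling rises accordingly, up to whatever the node itself can sustain.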

| Storage Option | Ideal Use Case | Performance | Availability | Model update process |
| --- | --- | --- | --- | --- |
| Cloud Storage Fuse with GKE CSI | Frequent data updates | Fast | Regional. Pods can freely be deployed across zones and access data. | Redeploy pods and update persistent volume claims to point the Cloud Storage CSI to the bucket or file with the new data. |
| Hyperdisk ML with GKE CSI | Minimal data updates | Fastest | Zonal. Data can be made regional with an automated GKE clone feature to make data available across zones. | Create new persistent volume, load new data, and redeploy pods that have a PVC to reference the new volume. |

As you can see, there are factors beyond throughput to consider when designing a successful model loading strategy.
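Both update processes in the table end with redeploying pods so that they reference a new persistent volume claim. As an illustrative sketch, the helper below rewrites the claim name in a Deployment manifest held as a Python dict. The deployment, volume, and claim names are hypothetical, and creating and hydrating the new volume is outside the scope of this example.

```python
import copy


def repoint_volume(deployment, volume_name, new_claim):
    """Return a copy of the Deployment with one volume using a new PVC."""
    updated = copy.deepcopy(deployment)
    volumes = updated["spec"]["template"]["spec"].get("volumes", [])
    for volume in volumes:
        if volume.get("name") == volume_name and "persistentVolumeClaim" in volume:
            volume["persistentVolumeClaim"]["claimName"] = new_claim
            return updated
    raise KeyError(f"no PVC-backed volume named {volume_name!r}")


if __name__ == "__main__":
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "llm-server"},  # hypothetical
        "spec": {"template": {"spec": {"volumes": [{
            "name": "model-weights",
            "persistentVolumeClaim": {
                "claimName": "weights-v1",   # hypothetical old claim
                "readOnly": True,
            },
        }]}}},
    }
    new = repoint_volume(deployment, "model-weights", "weights-v2")
    claim = new["spec"]["template"]["spec"]["volumes"][0]["persistentVolumeClaim"]
    print(claim["claimName"])
```

Changing the pod template this way causes Kubernetes to roll out new pods, which is the redeploy step the table describes. The original manifest is left unmodified so the previous version remains available for rollback.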

In conclusion

Loading large AI models, weights, and container images into GKE-based AI workloads can delay workload startup times. By combining the three techniques described above (a secondary boot disk for container images, and Hyperdisk ML or Cloud Storage Fuse for models + weights), you can speed up data load times for your AI/ML inference applications.