Gemini Model Planes

Generative AI is a hot topic among developers and business stakeholders, so it is worth investigating how serverless execution engines such as Google Cloud’s Workflows can automate and coordinate large language model (LLM) use cases. An earlier post discussed using Workflows to orchestrate Vertex AI’s PaLM and Gemini APIs. This blog post demonstrates a specific use case with broad applicability that Workflows can accomplish: long-document summarization.

Gemini Large Language Model

To execute complex tasks like document summarising, open-source LLM orchestration frameworks such as LangChain (for Python and TypeScript developers) or LangChain4j (for Java developers) integrate different components, including LLMs, document loaders, and vector databases. Workflows can handle such tasks as well, without requiring a large time investment in an LLM orchestration framework.

Techniques for Summarising

Summarising a short document is as simple as passing its entire content as a prompt into the context window of an LLM. However, LLM prompts are typically limited by token count, so longer documents require a different approach. There are two common methods:

Map/reduce

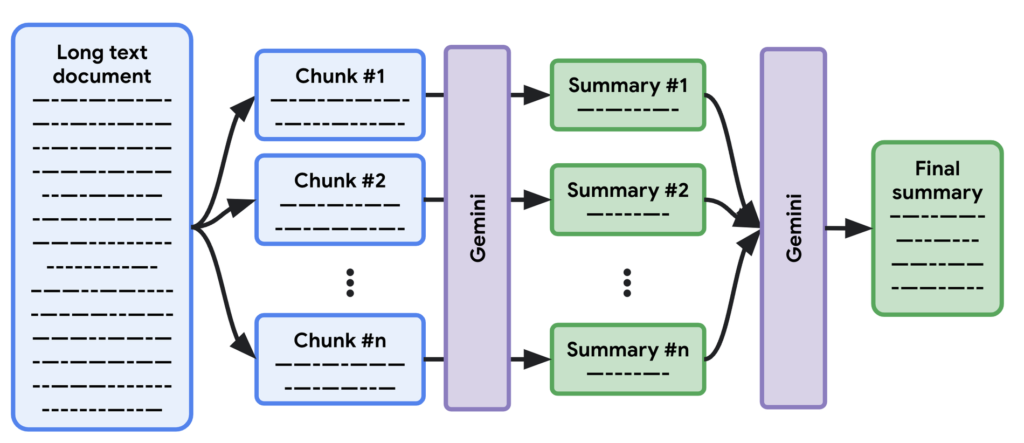

To make a lengthy document fit the context window, it is divided into smaller portions. A summary is written for every segment, and as a last step, a summary of all the summaries is written.
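The map/reduce idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Workflows definition this post describes: `split_into_chunks`, `map_reduce_summary`, and the `summarise` callback are hypothetical names, and `first_sentence` is a stand-in for an LLM call so that the example runs without a model.

```python
from typing import Callable, List


def split_into_chunks(text: str, chunk_size: int) -> List[str]:
    """Cut text into consecutive pieces of at most chunk_size characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def map_reduce_summary(text: str, chunk_size: int,
                       summarise: Callable[[str], str]) -> str:
    """Summarise each chunk ("map"), then summarise the joined summaries ("reduce")."""
    chunk_summaries = [summarise(chunk)
                       for chunk in split_into_chunks(text, chunk_size)]
    return summarise("\n".join(chunk_summaries))


def first_sentence(text: str) -> str:
    """Stand-in for an LLM call (assumption): keep only the first sentence."""
    return text.split(".")[0].strip() + "."
```

In practice, `summarise` would wrap a call to the model, and the chunk size would be chosen so that each chunk fits in the context window.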

Iterative improvement

As with the map/reduce method, the document is processed section by section. The first section is summarised, and the LLM then iteratively refines that summary with information from the next section, and so on, all the way to the end of the document.
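A minimal Python sketch of iterative refinement, assuming two hypothetical callbacks: `summarise` produces the initial summary and `refine` merges one more section into an existing summary. Both would be LLM calls in a real system; neither name comes from the original workflow.

```python
from typing import Callable, List


def refine_summary(sections: List[str],
                   summarise: Callable[[str], str],
                   refine: Callable[[str, str], str]) -> str:
    """Summarise the first section, then fold each later section into the summary."""
    if not sections:
        return ""
    summary = summarise(sections[0])
    for section in sections[1:]:
        # Each step needs the previous summary, so the loop is sequential.
        summary = refine(summary, section)
    return summary
```

Because every call depends on the result of the previous one, the calls cannot be issued concurrently.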

Both approaches produce excellent results. However, the map/reduce strategy has one advantage over the refinement technique. Refinement is a sequential process: summarising each section of the document requires the previously refined summary.

With map/reduce, you can create a summary for each segment in parallel (the “map” operation) and a final summary in the last phase (the “reduce” operation), as the diagram illustrates. This is faster than the sequential approach.
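To illustrate why the “map” phase parallelises well, here is a hedged Python sketch that uses a thread pool. It is an analogy for Workflows’ parallel steps rather than the workflow itself; the function names and the `max_workers` default are assumptions made for this example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def summarise_chunks_in_parallel(chunks: List[str],
                                 summarise: Callable[[str], str],
                                 max_workers: int = 8) -> List[str]:
    """Run the "map" phase concurrently; results keep the order of the chunks."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(summarise, chunks))


def reduce_summaries(summaries: List[str],
                     summarise: Callable[[str], str]) -> str:
    """Run the "reduce" phase: one final call over the concatenated summaries."""
    return summarise("\n".join(summaries))
```

Threads are a reasonable fit here because each summarisation call would mostly wait on a network response from the model.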

Google Gemini Models

An earlier post demonstrated how to use Workflows to invoke PaLM and Gemini models and emphasised one of its primary features: concurrent step execution. This feature makes it possible to produce summaries of the long document’s sections concurrently. The workflow operates as follows:

- When a new text document is added to a Cloud Storage bucket, the workflow is started.

- The text file is divided into “chunks” that are summarised in parallel.

- In the last step, the smaller summaries are gathered and combined into a single summary.

- All calls to the Gemini 1.0 Pro model are made through a subworkflow.

Obtaining the text file and concurrently summarising the relevant portions (“map” component)

A few constants and data structures are prepared in the assign file vars step. Here, the chunk size is set to 64,000 characters so that the text fits in the LLM’s context window and stays within Workflows’ memory limits. There are also variables for the list of summaries and for the final summary.
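The arithmetic behind fixed-size chunking can be sketched as follows. The 64,000 figure comes from the text; everything else is an assumption for illustration, in particular the idea of fetching each chunk with an HTTP `Range` header and the helper names `chunk_ranges` and `range_header`. Note that byte offsets and character offsets only coincide for single-byte encodings.

```python
import math
from typing import List, Tuple

CHUNK_SIZE = 64_000  # size of one chunk, the value quoted in the text


def chunk_ranges(file_size: int,
                 chunk_size: int = CHUNK_SIZE) -> List[Tuple[int, int]]:
    """Return inclusive (start, end) offsets that cover file_size units."""
    if file_size < 0 or chunk_size <= 0:
        raise ValueError("file_size must be >= 0 and chunk_size > 0")
    count = math.ceil(file_size / chunk_size)
    return [(i * chunk_size, min((i + 1) * chunk_size, file_size) - 1)
            for i in range(count)]


def range_header(start: int, end: int) -> str:
    """Format an HTTP Range header value for one chunk (assumes byte offsets)."""
    return f"bytes={start}-{end}"
```

For a 150,000-byte file, this yields three ranges, the last of which is shorter than the others.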

The loop over chunks step extracts each text chunk in parallel: its dump file content sub-step loads that section of the document from Cloud Storage. The generate chunk summary sub-step then invokes the subworkflow that uses the Gemini model to summarise that portion of the document. Finally, the resulting summary is saved in the summaries array.

Summary of summaries (the “reduce” portion)

With all of the chunk summaries now in hand, the smaller summaries can be combined into a final summary of summaries, or aggregate summary if you prefer. The concat summaries step concatenates the chunk summaries. The reduce summary step then makes one final call to the Gemini model summarization subworkflow to obtain the final summary. Finally, the return result step returns the results, including the final summary and the chunk summaries.

Asking for summaries from the Gemini model

Both the “map” and “reduce” steps invoke a subworkflow that encapsulates the call to the Gemini models. Let’s examine this last piece of the workflow in more detail. In init, a few variables are set up for the desired LLM configuration (in this case, Gemini Pro).

The call gemini step performs an HTTP POST request to the model’s REST API. Note that simply specifying the OAuth2 authentication scheme is enough to authenticate to this API declaratively. The request body carries the summarisation prompt, certain model parameters (such as temperature), and the maximum length of the summary to be generated.
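As a rough illustration of what such a request looks like, the Python sketch below builds the URL and JSON body for Vertex AI’s `generateContent` REST method without sending anything. The project ID, region, prompt wording, temperature, and token limit are placeholder assumptions rather than values from the original workflow, and a real call would also need an OAuth2 bearer token.

```python
import json
from typing import Any, Dict, Tuple


def build_gemini_request(prompt: str, project: str,
                         location: str = "us-central1",
                         model: str = "gemini-1.0-pro",
                         temperature: float = 0.2,
                         max_output_tokens: int = 2000
                         ) -> Tuple[str, Dict[str, Any]]:
    """Return the URL and JSON body for a Vertex AI generateContent call."""
    url = (f"https://{location}-aiplatform.googleapis.com/v1/projects/{project}"
           f"/locations/{location}/publishers/google/models/{model}"
           ":generateContent")
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "temperature": temperature,            # placeholder value
            "maxOutputTokens": max_output_tokens,  # caps the summary length
        },
    }
    return url, body


# "my-project" is a placeholder project ID.
url, body = build_gemini_request("Summarise the following text: ...",
                                 project="my-project")
print(url)
print(json.dumps(body, indent=2))
```

Keeping the request construction in one function mirrors the role of the subworkflow: both the “map” and “reduce” phases can reuse it with different prompts.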

The resulting summaries

Saving the text of Jane Austen’s “Pride and Prejudice” to a Cloud Storage bucket triggers the workflow, which runs to completion and produces both the intermediate chunk summaries and the final summary.

Gemini Models

Although the workflow was kept straightforward for the sake of this article, there are a number of ways it could be improved. For instance, the number of characters in each section of the document to be summarised is hard-coded, but it could be a workflow parameter or even be calculated from the model’s context-window limit.
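One way such a calculation might look is sketched below in Python. The helper name `chunk_size_for_model`, the four-characters-per-token ratio, and the number of reserved tokens are all rough assumptions for illustration; actual token counts depend on the model’s tokenizer and the language of the text.

```python
from typing import Optional


def chunk_size_for_model(context_window_tokens: int,
                         reserved_tokens: int = 2_000,
                         chars_per_token: float = 4.0,
                         max_chars: Optional[int] = None) -> int:
    """Estimate how many characters of document text fit in one prompt."""
    # Keep some tokens aside for the instructions and the generated summary.
    usable_tokens = context_window_tokens - reserved_tokens
    if usable_tokens <= 0:
        raise ValueError("context window is too small for the reserved tokens")
    size = int(usable_tokens * chars_per_token)
    # An optional cap models other constraints, such as a memory limit.
    return min(size, max_chars) if max_chars is not None else size
```

For example, `chunk_size_for_model(32_000, max_chars=64_000)` would be capped at 64,000 characters even though the token estimate alone allows more.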

Because workflows themselves have a memory limit for the variables and data they hold, the workflow could also handle the case where an exceptionally long list of section summaries would not fit in memory. Also worth noting is Google’s more recent large language model, Gemini 1.5, which accepts up to one million tokens as input and can summarise a lengthy document in a single pass. Of course, you can also use an LLM orchestration framework, but as this example shows, Workflows itself can handle some interesting LLM orchestration use cases.

In brief

This post examined a new use case for orchestrating LLMs with Workflows and implemented long-document summarization without using a dedicated LLM framework. Using Workflows’ parallel step capability to create section summaries concurrently reduced the time required to produce the full summary.