Local LLM

Large language models (LLMs) have remarkable capabilities, but they also demand a lot of processing power, which is rarely available on a home computer. As a result, the only option has been to deploy them on powerful custom AI servers hosted either on-premises or in the cloud.

The Reasons for Preferring Local LLM Inference

What if you could run cutting-edge open-source LLMs on a standard personal computer? Wouldn't you benefit from things like:

- Enhanced privacy: No external API would receive data for analysis.

- Reduced latency: Network round-trip delays would be avoided.

- Offline work: Work could continue without network connectivity, a dream for frequent travellers.

- Reduced cost: No money would be spent on model hosting or API calls.

- Customisability: Users are able to select the models that most closely match the tasks they perform on a regular basis. They can also refine these models or apply local retrieval-augmented generation (RAG) to make them more relevant.

All of this sounds quite exciting. So why isn't everyone doing it already? Going back to the opening statement, a reasonably priced laptop is typically not powerful enough to run LLMs with satisfactory performance: it has no multi-thousand-core GPU and no very fast, high-bandwidth memory.

Why It’s Now Possible to Do Local LLM Inference

Human ingenuity has a way of making things smaller, faster, more elegant, and more affordable. The AI community has been working hard to shrink models without compromising their predictive quality. Three areas are particularly exciting:

Hardware acceleration

Modern CPU architectures include hardware dedicated to accelerating the most common deep learning operations, such as matrix multiplication and convolution. This makes it possible to build new generative AI applications on AI PCs and greatly improves their speed and efficiency.

Small language models (SLMs)

Thanks to innovative architectures and training techniques, these models can match or even outperform larger models. Their smaller number of parameters means that inference takes less time and memory, which makes them great choices for resource-constrained environments.

Quantisation

Quantisation reduces memory and compute requirements by lowering the bit width of model weights and activations, for instance from 16-bit floating point (fp16) to 8-bit integers (int8). With fewer bits per value, the model uses less memory during inference, which reduces latency for memory-bound stages such as the decoding phase of text generation. In addition, when both weights and activations are quantised, operations such as matrix multiplication can run faster using integer arithmetic.
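To make the last point concrete, here is a minimal pure-Python sketch of symmetric per-tensor int8 quantisation, assuming one scale factor per vector (a simplification chosen for clarity; real toolkits use more refined schemes). Both the weights and the activations are quantised, the dot product is accumulated entirely in integers, and a single floating-point rescale at the end recovers an approximation of the original result. The helper names are hypothetical.

```python
# Hypothetical helpers: symmetric per-tensor int8 quantisation (one scale per vector).

def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single scale factor."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # guard against an all-zero input
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def int8_dot(w, x):
    """Approximate dot(w, x) with integer multiply-accumulate plus one rescale."""
    qw, sw = quantize_int8(w)  # quantised weights
    qx, sx = quantize_int8(x)  # quantised activations
    acc = sum(a * b for a, b in zip(qw, qx))  # integer-only accumulation
    return acc * sw * sx  # convert back to the floating-point scale


w = [0.5, -1.25, 0.75, 2.0]
x = [1.0, 0.5, -0.25, 0.125]
exact = sum(a * b for a, b in zip(w, x))
approx = int8_dot(w, x)
print(f"exact={exact:.4f} approx={approx:.4f}")
```

The expensive part (the multiply-accumulate loop) touches only small integers, which is what integer hardware can execute quickly; the rounding error is the price paid for that speed.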

This article puts all three to work. Starting from the Microsoft Phi-2 model, 4-bit quantisation is applied to the model weights using the OpenVINO integration in Hugging Face's Optimum Intel library. Inference then runs on a mid-range laptop powered by an Intel Core Ultra processor.
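A back-of-the-envelope estimate shows why low-bit weights matter on a laptop. Phi-2 has about 2.7 billion parameters; the sketch below counts weight storage only, so it is an approximation that ignores quantisation scale factors, any layers kept at higher precision, activations, and the KV cache.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


PHI2_PARAMS = 2.7e9  # approximate parameter count of Phi-2

for label, bits in [("fp16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label}: ~{weight_memory_gb(PHI2_PARAMS, bits):.2f} GB")
```

This prints about 5.40 GB for fp16, 2.70 GB for int8 and 1.35 GB for int4, so 4-bit weights cut the footprint to roughly a quarter of the fp16 size.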

Core Ultra Processors from Intel

The Intel Core Ultra processor, which debuted in December 2023, is a new architecture designed specifically for high-end laptops.

The Intel Core Ultra CPU, the company’s first client processor to employ a chiplet design, has the following features:

- A power-efficient CPU with up to 16 cores

- An integrated GPU (iGPU) with up to eight Xe-cores, each with sixteen Xe Vector Engines (XVEs). As the name suggests, an XVE performs vector operations on 256-bit vectors. It also implements the DP4a instruction, which computes the dot product of two vectors of four 8-bit integers (each vector packed into a 32-bit word), adds the result to a third 32-bit integer, and stores the sum as a 32-bit integer.

- A neural processing unit (NPU), a first for Intel architectures. The NPU is a dedicated AI engine built for efficient client AI. It is designed to handle demanding AI computations efficiently, freeing up the main CPU and graphics for other tasks, and it is intended to be more power-efficient for AI workloads than the CPU or iGPU.
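The DP4a operation mentioned above can be emulated in a few lines to show what it computes. This sketch assumes the signed-by-signed 8-bit variant, packs lane 0 into the lowest byte, and wraps the 32-bit result on overflow; real hardware also offers variants (unsigned operands, saturation) that behave differently. The helper names are hypothetical.

```python
def to_int8(byte):
    """Interpret an unsigned byte (0..255) as a signed 8-bit value."""
    return byte - 256 if byte >= 128 else byte


def pack_int8x4(values):
    """Pack four signed 8-bit values into one 32-bit word, lane 0 in the low byte."""
    word = 0
    for i, v in enumerate(values):
        word |= (v & 0xFF) << (8 * i)
    return word


def unpack_int8x4(word):
    """Split a 32-bit word into its four signed 8-bit lanes."""
    return [to_int8((word >> (8 * i)) & 0xFF) for i in range(4)]


def dp4a(a_word, b_word, acc):
    """Return acc + dot(a, b) over four int8 lanes, wrapped to a signed 32-bit integer."""
    dot = sum(x * y for x, y in zip(unpack_int8x4(a_word), unpack_int8x4(b_word)))
    total = (acc + dot) & 0xFFFFFFFF
    return total - (1 << 32) if total >= (1 << 31) else total


a = pack_int8x4([1, -2, 3, 4])
b = pack_int8x4([5, 6, -7, 8])
print(dp4a(a, b, 10))  # 10 + (5 - 12 - 21 + 32) = 14
```

Four multiply-adds on int8 data happen in one instruction, which is why int8-quantised matrix multiplications map well onto hardware that supports DP4a.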

A mid-range laptop with an Intel Core Ultra 7 155H processor was used to run the demo below. Now let's pick a nice, small language model to run on it.

Microsoft Phi-2

Phi-2, a 2.7-billion-parameter model trained for text generation, was released in December 2023.

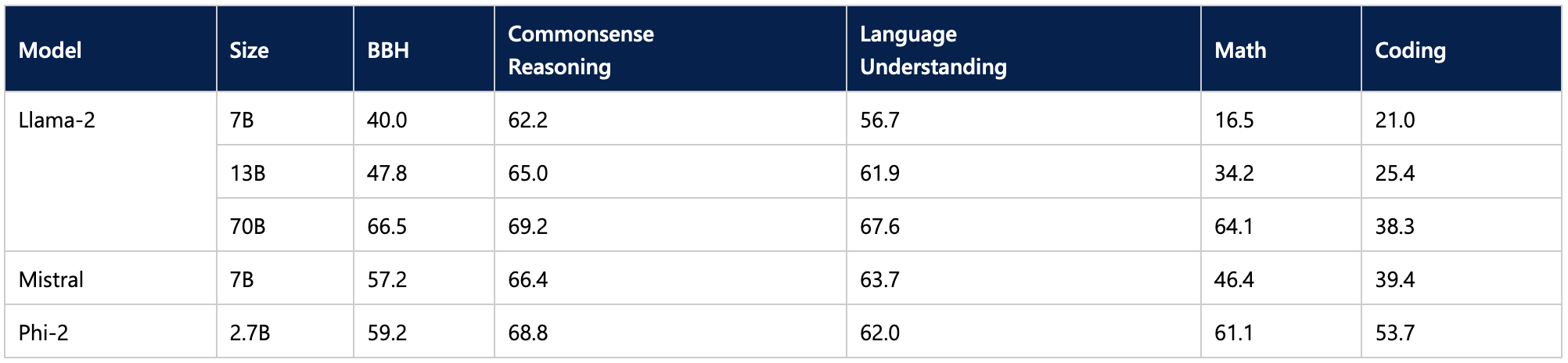

Despite its smaller size, Phi-2 outperforms some of the best 7-billion- and 13-billion-parameter LLMs on published benchmarks and even keeps pace with the much larger Llama 2 70B model.

OpenVINO Quantization Integrated with Optimum

The OpenVINO toolkit is an open-source toolkit for optimising AI inference on a range of Intel hardware platforms, with advanced model optimisation features, notably quantisation (GitHub, docs).

Hugging Face is collaborating with Intel to integrate OpenVINO into Optimum Intel, an open-source library that accelerates Hugging Face models on Intel platforms (GitHub, documentation).

First, confirm that you have installed all required libraries and the most recent version of optimum-intel:

```shell
pip install --upgrade --upgrade-strategy eager "optimum[openvino,nncf]"
```

With this integration, quantising Phi-2 to 4 bits is straightforward: define a quantisation configuration, load the model from the Hugging Face Hub with that configuration and the desired optimisation parameters, then save the optimised, quantised model locally.
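A minimal sketch of these steps with Optimum Intel follows. `OVModelForCausalLM` and `OVWeightQuantizationConfig` are Optimum Intel classes, but parameter names can change between releases, so check them against the version you install. `ratio=0.8` matches the 80% split described in this article; `group_size=128`, the function name and the output directory `phi-2-openvino` are assumptions. The imports sit inside the function so the file can be loaded without optimum-intel installed; calling the function downloads the Phi-2 checkpoint from the Hub.

```python
def quantize_phi2(save_directory="phi-2-openvino"):
    """Export microsoft/phi-2 to OpenVINO with 4-bit weight quantisation and save it.

    Hypothetical helper; requires optimum-intel (with OpenVINO and NNCF) and transformers.
    """
    from optimum.intel import OVModelForCausalLM, OVWeightQuantizationConfig
    from transformers import AutoTokenizer

    model_id = "microsoft/phi-2"

    # 80% of the weights in 4 bits, the rest in 8 bits; group_size=128 is an assumed value.
    q_config = OVWeightQuantizationConfig(bits=4, ratio=0.8, group_size=128)

    model = OVModelForCausalLM.from_pretrained(
        model_id,
        export=True,                  # convert the PyTorch checkpoint to OpenVINO format
        quantization_config=q_config,
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id)

    # Save model and tokenizer side by side so they can be reloaded together.
    model.save_pretrained(save_directory)
    tokenizer.save_pretrained(save_directory)
    return save_directory
```

Running `quantize_phi2()` once produces a local directory that can later be reloaded without re-exporting or re-quantising.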

The ratio parameter sets the fraction of weights quantised to 4 bits (here, 80%), with the remaining weights quantised to 8 bits. The group_size parameter sets the size of the weight quantisation groups, each of which has its own scaling factor. Decreasing these two values typically improves accuracy, at the cost of a larger model and higher inference latency.
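To illustrate what ratio and group_size control, here is a toy pure-Python version of mixed 4-/8-bit, group-wise symmetric quantisation. It is a simplification, not NNCF's algorithm: here the first `ratio` fraction of weight rows simply goes to 4 bits, whereas the real tool chooses which weights stay in 8 bits by its own criteria. All function names are hypothetical.

```python
def quantize_group(values, bits):
    """Symmetric quantisation of one group, sharing a single scale factor."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 127 for 8-bit
    scale = max(abs(v) for v in values) / qmax or 1.0  # avoid a zero scale
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def quantize_weights(rows, ratio=0.8, group_size=4):
    """Quantise a fraction `ratio` of rows to 4 bits and the rest to 8 bits."""
    n_int4 = round(len(rows) * ratio)
    quantised = []
    for i, row in enumerate(rows):
        bits = 4 if i < n_int4 else 8
        # Split the row into groups of `group_size` weights, one scale per group.
        groups = [quantize_group(row[s:s + group_size], bits)
                  for s in range(0, len(row), group_size)]
        quantised.append((bits, groups))
    return quantised


def dequantize_row(groups):
    """Rebuild approximate float weights from (codes, scale) pairs."""
    return [c * scale for codes, scale in groups for c in codes]


rows = [[0.1 * (i + 1) * (-1) ** j for j in range(8)] for i in range(5)]
q = quantize_weights(rows, ratio=0.8, group_size=4)
print([bits for bits, _ in q])  # [4, 4, 4, 4, 8]
```

A smaller group_size means each scale covers fewer weights, so it can fit them more tightly, but more scales must be stored; a lower ratio keeps more weights in 8 bits. Both trade model size for accuracy, as described above.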

So how fast does the quantised model run on the laptop? Watch the videos below and judge for yourself, and remember to select 1080p for the sharpest picture.

In the first video, the model is asked a high school physics question: “Lily drops a rubber ball from the top of a wall. The wall is 2 meters high. How long will it take the ball to fall to the ground?”
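For reference when judging the model's answer, and assuming the ball starts from rest and air resistance is negligible, the fall time follows from h = ½·g·t² with h = 2 m and g ≈ 9.81 m/s²:

$$
t = \sqrt{\frac{2h}{g}} = \sqrt{\frac{2 \times 2\,\mathrm{m}}{9.81\,\mathrm{m/s^2}}} \approx 0.64\,\mathrm{s}
$$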

In the second video, the model is given a coding challenge: “Use NumPy to create a class that implements a fully connected layer with forward and backward functions. Use markdown markers for code.”

As you can see, the response is of very good quality in both cases. Quantisation has not noticeably compromised Phi-2's output quality, and the generation speed is good.
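To try prompts like these yourself, the sketch below reloads a quantised model from a local directory and generates text with a Transformers pipeline. The directory name `phi-2-openvino`, the function name and the default values are assumptions. `device="GPU"` should target the integrated GPU when the OpenVINO GPU plugin and drivers are available, while `"CPU"` works everywhere; verify the `device` argument against your Optimum Intel version.

```python
def generate(prompt, model_dir="phi-2-openvino", device="CPU", max_new_tokens=256):
    """Generate a completion with a locally saved OpenVINO model (hypothetical helper)."""
    # Imported lazily so the file loads even without optimum-intel installed.
    from optimum.intel import OVModelForCausalLM
    from transformers import AutoTokenizer, pipeline

    model = OVModelForCausalLM.from_pretrained(model_dir, device=device)
    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

    # The pipeline returns a list with one dict per generated sequence.
    result = pipe(prompt, max_new_tokens=max_new_tokens)
    return result[0]["generated_text"]
```

For example, `generate("Lily drops a rubber ball from the top of a wall. The wall is 2 meters high. How long will it take the ball to fall to the ground?", device="GPU")` reproduces the first demo on the iGPU, if available.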

What is OpenVINO?

OpenVINO is an open-source software toolkit for optimising and deploying deep learning models. It lets developers build scalable, efficient AI solutions with relatively few lines of code, and it supports a range of popular model types and formats, including generative AI, computer vision, and large language models.
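As a small example of the toolkit's Python API, the sketch below lists the devices OpenVINO can see. On a Core Ultra laptop this may include CPU, GPU and NPU, depending on the installed drivers and plugins. It assumes OpenVINO 2023.1 or later, where `import openvino as ov` exposes `Core`; the function name is hypothetical.

```python
def list_openvino_devices():
    """Return {device_id: full device name} for every device visible to OpenVINO."""
    import openvino as ov  # lazy import: requires the openvino package

    core = ov.Core()
    # available_devices lists IDs such as "CPU" or "GPU"; FULL_DEVICE_NAME is human-readable.
    return {d: core.get_property(d, "FULL_DEVICE_NAME") for d in core.available_devices}
```

Calling `list_openvino_devices()` is a quick way to confirm which of the processor's engines can be targeted before choosing a `device` for inference.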

In summary

Hugging Face and Intel have made it possible to run LLMs on your laptop and enjoy the benefits of local inference, such as privacy, low latency, and low cost. Expect to see more high-quality models optimised for Intel Core Ultra and its successor, Lunar Lake. Why not try quantising models for Intel platforms with the OpenVINO integration in Optimum Intel and share your models on the Hugging Face Hub? The more, the better.