NVIDIA Develops the Automotive Ecosystem to Introduce Physical AI in Public Places

To deliver AI from the cloud to the car, top global automakers, mobility innovators, suppliers, and software providers use NVIDIA accelerated computing.

With over 20 years of automotive computing, software, and safety experience, NVIDIA is leading the way in the autonomous vehicle (AV) revolution, enabling innovation from the cloud to the car.

At NVIDIA GTC, a global AI conference taking place this week in San Jose, California, numerous transportation leaders are showcasing their latest developments built on NVIDIA technologies, spanning passenger cars, trucks, commercial vehicles, and more.

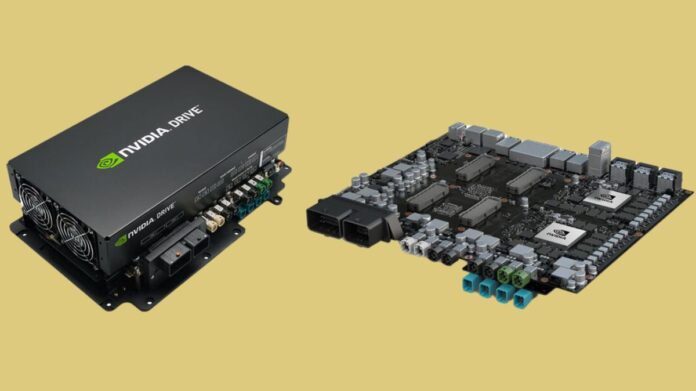

Mobility leaders are increasingly turning to NVIDIA’s three core accelerated compute platforms: NVIDIA DGX systems, which train the AI-based stack in the data center; NVIDIA Omniverse and Cosmos, which run on NVIDIA OVX systems for simulation and synthetic data generation; and the NVIDIA DRIVE AGX in-vehicle computer, which processes real-time sensor data for safe, highly automated, and autonomous driving capabilities.

This opens up new possibilities for the multitrillion-dollar vehicle industry’s developers and manufacturers to create, produce, and implement intelligent mobility solutions that are functionally safe and give customers safer, smarter, and more pleasurable experiences.

Transforming Passenger Vehicles

General Motors (GM), the biggest automaker in the United States, is working with NVIDIA to build its next-generation vehicles, factories, and robots using NVIDIA’s accelerated compute platforms. GM has been investing in NVIDIA GPU platforms to train AI models.

The companies’ partnership has now grown to include optimizing factory planning with Omniverse with Cosmos and deploying next-generation vehicles at scale, accelerated by NVIDIA DRIVE AGX. Ultimately, this will help GM develop physical AI systems suited to its corporate vision, expertise, and craft, enabling mobility that is safer, smarter, and more accessible.

Volvo Cars, which uses the NVIDIA DRIVE AGX in-vehicle computer in its next-generation electric vehicles, and its subsidiary Zenseact use the NVIDIA DGX platform to analyze and contextualize sensor data, gain new insights, and train future safety models that will improve overall vehicle performance and safety.

Lenovo and the robotics company Nuro have partnered to develop a robust end-to-end system for level 4 autonomous vehicles that puts safety, reliability, and convenience first. The system is built on NVIDIA DRIVE AGX in-vehicle compute.

Advancements in Trucking

NVIDIA’s AI-powered technologies are also transforming trucking, helping to address pressing problems such as driver shortages, growing e-commerce demand, and high operating costs. NVIDIA DRIVE AGX provides the processing power required for safe, reliable, and efficient autonomous operations, improving road safety and logistics.

Gatik is integrating DRIVE AGX for onboard AI processing in its freight-only class 6 and 7 trucks, which are manufactured by Isuzu Motors and provide driverless middle-mile delivery of a wide range of goods to Fortune 500 customers such as Tyson Foods, Kroger, and Loblaw.

Uber Freight is also adopting DRIVE AGX as the AI computing foundation of its current and future carrier fleets, with the aim of sustainably increasing efficiency and lowering costs for shippers.

Torc is developing a scalable physical AI compute system for autonomous trucks. The solution uses NVIDIA DRIVE AGX in-vehicle compute and the NVIDIA DriveOS operating system together with Flex’s Jupiter platform and manufacturing capabilities to support Torc’s productization and scaled market entry in 2027.

Increasing Interest in DRIVE AGX

The NVIDIA DRIVE AGX Orin platform is the AI brain behind today’s intelligent fleets, and the next wave of mobility is already arriving as production cars built on the NVIDIA DRIVE AGX Thor centralized car computer begin to hit the road.

Magna, a major international automotive supplier, is helping to meet the growing demand for the DRIVE Thor platform, which is built on the NVIDIA Blackwell architecture and designed for the most demanding processing workloads, such as those involving generative AI, vision language models, and large language models (LLMs). Magna will develop driving systems built on DRIVE AGX Thor for integration into automakers’ vehicle roadmaps, delivering active safety and comfort features as well as AI experiences for the interior cabin.

Simulation and Data: The Backbone of AV Development

Earlier this year, NVIDIA unveiled the Omniverse Blueprint for AV simulation, a reference workflow for building realistic 3D environments for autonomous vehicle training, testing, and validation. The blueprint is now being extended with NVIDIA Cosmos world foundation models (WFMs) to increase the diversity of photoreal data.

Cosmos, which was first introduced at the CES trade show in January, is already being adopted in the automotive industry. For example, Plus is integrating Cosmos physical AI models into its SuperDrive technology to accelerate the development of level 4 self-driving trucks.

Foretellix is expanding its integration of the blueprint, using the Cosmos Transfer WFM to add weather and lighting conditions to its sensor simulation scenarios and so increase scenario diversity. Mcity is incorporating the blueprint into the digital twin of its AV testing facility to enable physics-based modeling of camera, lidar, radar, and ultrasonic sensor data.

The blueprint has been integrated into CARLA, an open-source AV simulator, to provide high-fidelity sensor simulation. Capgemini, a global systems integrator, will be the first to include CARLA’s Omniverse integration in its AV development platform to improve sensor simulation.

NVIDIA is using Nexar’s extensive, high-quality edge-case data to train and fine-tune NVIDIA Cosmos’ simulation capabilities. Nexar, in turn, is using Cosmos, neural infrastructure models, and the NVIDIA DGX Cloud platform to accelerate its AI development, improving AV training, high-definition mapping, and predictive modeling.

Improving In-Vehicle Experiences With NVIDIA AI Enterprise

Mobility leaders are integrating the NVIDIA AI Enterprise software platform, running on DRIVE AGX, to improve in-vehicle experiences with generative and agentic AI.

Cerence AI is showcasing Cerence xUI, its new LLM-based AI assistant platform, at GTC; the platform is intended to advance the next generation of agentic in-vehicle user experiences. Cerence xUI is a hybrid platform that runs both in the vehicle and in the cloud, and it is being optimized first on NVIDIA DRIVE AGX Orin.

The CaLLM family of language models serves as the foundation for Cerence xUI. CaLLM is built on open-source foundation models and fine-tuned on Cerence AI’s automotive dataset. Using NVIDIA AI Enterprise, along with the NVIDIA TensorRT-LLM library and NVIDIA NeMo, Cerence AI has improved inference performance and optimized CaLLM to serve as the central agentic orchestrator, enabling enhanced driver experiences at the edge and in the cloud.

Additionally, SoundHound will be showcasing its next-generation in-car voice assistant, which leverages NVIDIA DRIVE AGX and generative AI at the edge to improve the in-car experience by delivering cloud-based LLM intelligence straight to cars.

Autonomy’s Complexity and NVIDIA’s Safety-First Approach

When it comes to putting large numbers of automated and self-driving cars on the road, safety is paramount. However, building AVs is one of the most difficult computing problems of our time. It requires enormous processing power, precision, and an unwavering commitment to safety.

AVs and highly automated vehicles promise to extend mobility to those who need it most while reducing accidents and saving lives. To help fulfill this promise, NVIDIA has created NVIDIA Halos, a comprehensive full-stack safety system that integrates chips, software, hardware, AI models, and vehicle design to enable the safe development of AVs from the cloud to the vehicle.

Today at GTC, NVIDIA is holding its first AV Safety Day, which will include in-depth talks on automotive safety frameworks and implementation.

Additionally, on Thursday, March 20, NVIDIA will hold Automotive Developer Day, which will include presentations on the most recent developments in end-to-end AV development and other topics.

New Resources for AV Programmers

To speed up the development and deployment of end-to-end stacks from cloud to car, NVIDIA has announced new NVIDIA NIM microservices for the automotive industry. The new NIM microservices for in-vehicle applications, which use Motional’s nuScenes dataset, include:

- BEVFormer is a cutting-edge transformer-based model that creates a single bird’s-eye view representation for 3D perception by combining multi-frame camera data.

- SparseDrive is an end-to-end autonomous driving model that performs motion prediction and planning together to generate a safe planning trajectory.

NVIDIA provides a range of models for automotive enterprise applications, such as Cosmos Nemotron, a vision language model that queries and summarizes images and videos for multimodal understanding and AI-powered perception; NV-CLIP, a multimodal transformer model that creates embeddings from images and text; and many more.