Vision language models are models that learn from images and text at the same time to handle a variety of tasks, from visual question answering to image captioning. This post covers the main building blocks of vision language models: an overview of what they are, how they work, how to find the right model, how to use them for inference, and how to quickly fine-tune them with the newly released version of TRL!

What is a Vision Language Model?

Vision language models are broadly defined as multimodal models that can learn from images and text. They are a type of generative model that takes image and text inputs and produces text outputs. Large vision language models have strong zero-shot capabilities, generalize well, and can work with many kinds of images, including documents and web pages.

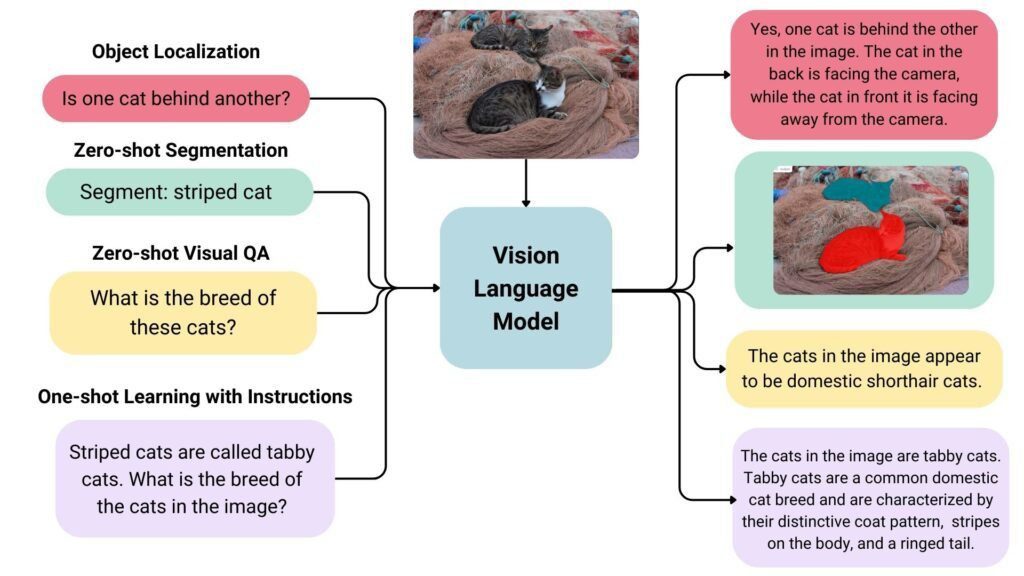

Use cases include image captioning, document understanding, visual question answering, image recognition via instructions, and chatting about images. Some vision language models can also capture spatial properties of an image. When prompted to detect or segment a particular subject, these models can output bounding boxes or segmentation masks. They can also localize different entities or answer questions about their relative or absolute positions. The current set of large vision language models varies widely in training data, in how images are encoded, and, consequently, in capabilities.

An Overview of Open-Source Vision Language Models

There are many open vision language models on the Hugging Face Hub. Some of the most prominent ones are listed in the table below.

- Some are base models, while others are fine-tuned for chat and can be used in conversational mode.

- Several of these models support "grounding", a feature that reduces model hallucinations.

- Unless otherwise indicated, all models are trained on English.

Table: Vision Language Models

Finding the Right Vision Language Model

There are several ways to choose the best model for your use case.

Vision Arena is a continuously updated leaderboard based entirely on anonymous votes on model outputs. In this arena, users enter an image and a prompt, outputs from two different models are sampled anonymously, and the user picks the output they prefer. This way, the leaderboard is built solely on human preferences.
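The exact ranking method behind Vision Arena is not described here. A common way to turn pairwise votes into a leaderboard is an Elo-style rating, which this minimal sketch assumes. The function names, the K-factor of 32, the starting rating of 1000 and the model names are illustrative choices, not Vision Arena's actual settings.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def elo_ratings(
    votes: Iterable[Tuple[str, str]], k: float = 32.0, initial: float = 1000.0
) -> Dict[str, float]:
    """Turn a stream of (winner, loser) votes into Elo ratings, in vote order."""
    ratings: Dict[str, float] = defaultdict(lambda: initial)
    for winner, loser in votes:
        # Probability that the winner was expected to win under current ratings.
        expected = 1.0 / (1.0 + 10 ** ((ratings[loser] - ratings[winner]) / 400.0))
        # Both ratings move by the same amount, so the total is conserved.
        delta = k * (1.0 - expected)
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


def leaderboard(ratings: Dict[str, float]) -> List[str]:
    """Model names sorted from highest to lowest rating."""
    return sorted(ratings, key=ratings.get, reverse=True)


# Placeholder model names; each tuple is one user vote (preferred, other).
votes = [("model-a", "model-b"), ("model-a", "model-c"), ("model-c", "model-b")]
print(leaderboard(elo_ratings(votes)))  # ['model-a', 'model-c', 'model-b']
```

Because each vote updates the ratings in sequence, the result depends on vote order; arena-style leaderboards may instead fit ratings over all votes at once, for example with a Bradley-Terry model.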

The Open VLM Leaderboard is another leaderboard, where vision language models are ranked by their scores on a set of benchmarks and by their average score. You can also filter models by size, by license (open-source or proprietary), and by their rankings on individual criteria.
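To make the filtering and ranking concrete, the sketch below filters entries by size and license and sorts them by either the average score or one benchmark's score. The `LeaderboardEntry` fields, the model names and all scores are made up for illustration; the real leaderboard may aggregate scores differently.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class LeaderboardEntry:
    name: str
    size_b: float             # parameter count, in billions
    open_source: bool         # True for open-source, False for proprietary
    scores: Dict[str, float]  # benchmark name -> score

    @property
    def average(self) -> float:
        return mean(self.scores.values())


def rank(
    entries: List[LeaderboardEntry],
    max_size_b: Optional[float] = None,
    open_source_only: bool = False,
    benchmark: Optional[str] = None,
) -> List[str]:
    """Filter entries, then return their names from best to worst score."""
    selected = [
        e for e in entries
        if (max_size_b is None or e.size_b <= max_size_b)
        and (e.open_source or not open_source_only)
    ]

    def score(e: LeaderboardEntry) -> float:
        # Rank by a single benchmark if requested, otherwise by the average.
        return e.average if benchmark is None else e.scores[benchmark]

    return [e.name for e in sorted(selected, key=score, reverse=True)]


# Illustrative numbers only, not real results.
entries = [
    LeaderboardEntry("vlm-small", 2.0, True, {"MMMU": 30.0, "MMBench": 60.0}),
    LeaderboardEntry("vlm-large", 34.0, True, {"MMMU": 45.0, "MMBench": 75.0}),
    LeaderboardEntry("vlm-closed", 100.0, False, {"MMMU": 55.0, "MMBench": 70.0}),
]
print(rank(entries))                  # ['vlm-closed', 'vlm-large', 'vlm-small']
print(rank(entries, max_size_b=10))   # ['vlm-small']
print(rank(entries, open_source_only=True, benchmark="MMBench"))  # ['vlm-large', 'vlm-small']
```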

The Open VLM Leaderboard is powered by the VLMEvalKit toolkit, which runs benchmarks on vision language models. Another evaluation suite is LMMS-Eval, which provides a standard command-line interface for evaluating Hugging Face models of your choice on datasets hosted on the Hugging Face Hub, as in the example below:

```shell
accelerate launch --num_processes=8 -m lmms_eval \
  --model llava \
  --model_args pretrained="liuhaotian/llava-v1.5-7b" \
  --tasks mme,mmbench_en \
  --batch_size 1 \
  --log_samples \
  --log_samples_suffix llava_v1.5_mme_mmbenchen \
  --output_path ./logs/
```

Both the Open VLM Leaderboard and the Vision Arena are limited to the models submitted to them, and new models have to be added through updates. If you want to find more models, you can browse the Hub for models under the task image-text-to-text.
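If you prefer to search programmatically, the sketch below uses the `huggingface_hub` client, assuming a recent version in which `HfApi.list_models` accepts a `pipeline_tag` filter. The helper name `find_image_text_to_text_models` is our own, and the optional `api` argument exists only so the function can be exercised without network access.

```python
from typing import Any, List, Optional


def find_image_text_to_text_models(limit: int = 10, api: Optional[Any] = None) -> List[str]:
    """Return IDs of the most-downloaded Hub models tagged image-text-to-text."""
    if api is None:
        # Imported lazily so a stub client can be passed in without the package.
        from huggingface_hub import HfApi
        api = HfApi()
    models = api.list_models(
        pipeline_tag="image-text-to-text",  # the Hub task mentioned above
        sort="downloads",                   # most downloaded first
        direction=-1,
        limit=limit,
    )
    return [model.id for model in models]
```

Calling `find_image_text_to_text_models(limit=5)` without an `api` argument queries the Hub, so it needs network access and the `huggingface_hub` package installed.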

The leaderboards report results on a variety of benchmarks for evaluating vision language models. Let's look at a few of them.

MMMU

A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI (MMMU) is the most comprehensive benchmark for evaluating vision language models. It contains 11.5K multimodal challenges that require college-level subject knowledge and reasoning across disciplines such as arts and engineering.

MMBench

MMBench is an evaluation benchmark of 3,000 single-choice questions covering 20 different skills, including OCR and object localization. The paper also introduces CircularEval, an evaluation strategy in which the answer options of a question are shuffled into different arrangements and the model is expected to give the correct answer every time. There are also more specialized benchmarks for other domains, such as MathVista (visual mathematical reasoning), AI2D (diagram understanding), ScienceQA (science question answering), and OCRBench (document understanding).
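The CircularEval idea can be sketched in a few lines. Going by the name, this sketch assumes the options are rotated circularly (ABCD, BCDA, CDAB, DABC) and a question counts as solved only if the model is right under every rotation. The `Model` interface, a callable that returns the index of the chosen option, is hypothetical.

```python
from typing import Callable, List, Sequence

# Hypothetical model interface: (question, options) -> index of the chosen option.
Model = Callable[[str, List[str]], int]


def circular_shifts(options: Sequence[str]) -> List[List[str]]:
    """All circular rotations of the options, starting with the original order."""
    opts = list(options)
    return [opts[i:] + opts[:i] for i in range(len(opts))]


def circular_eval(question: str, options: Sequence[str], answer: str, model: Model) -> bool:
    """Pass only if the model picks the correct option under every rotation."""
    for shifted in circular_shifts(options):
        if shifted[model(question, shifted)] != answer:
            return False
    return True


opts = ["Paris", "Rome", "Berlin", "Madrid"]
# A model that always picks the first option is right only in the first rotation.
print(circular_eval("Capital of France?", opts, "Paris", lambda q, o: 0))  # False
```

This is what makes the strategy stricter than a single pass: a model that exploits answer position, rather than understanding the question, fails.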

Technical Details

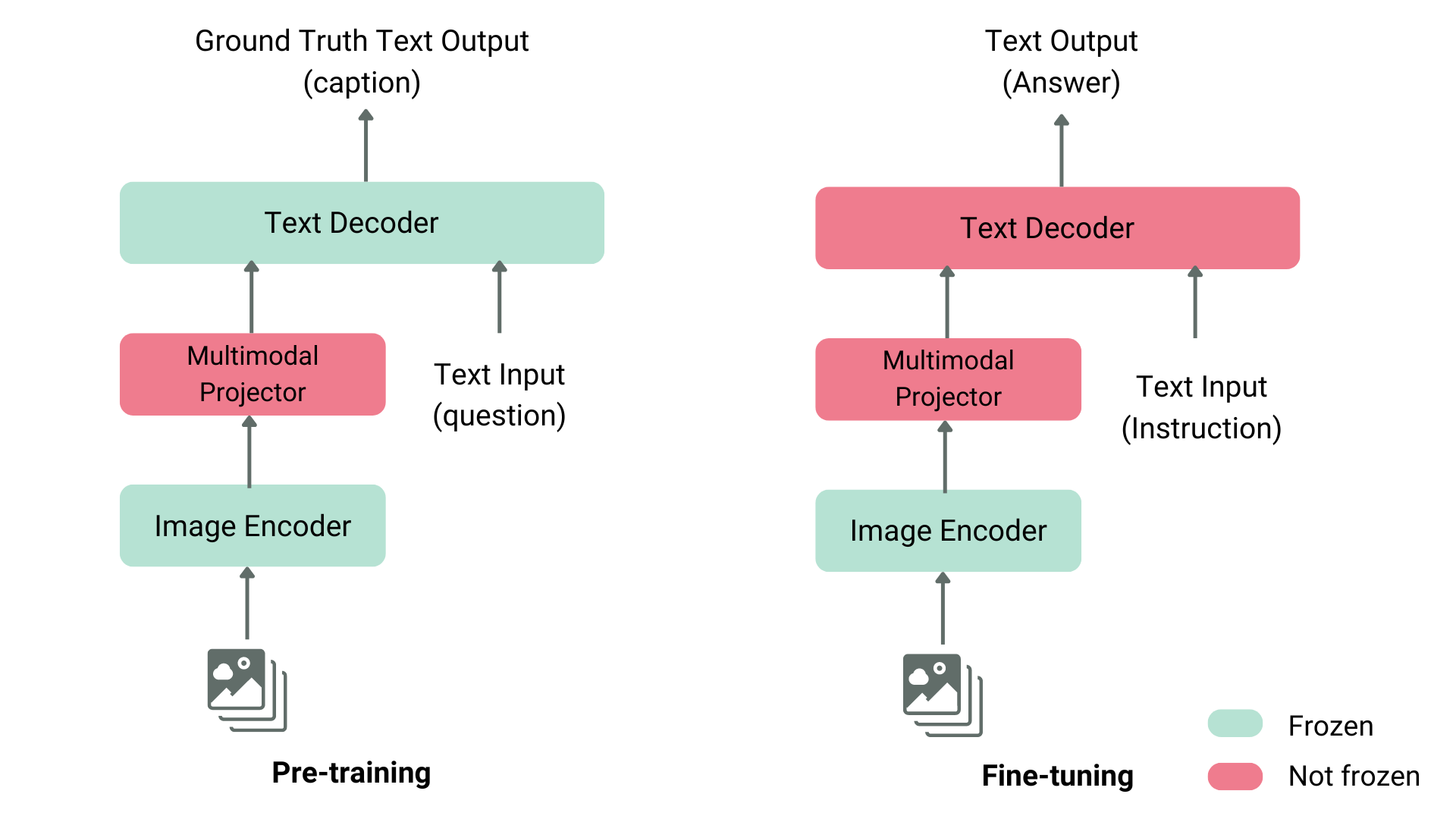

There are various ways to pretrain a vision language model. The main trick is to unify the image and text representations and feed them to a text decoder for generation. The most common and prominent models often consist of an image encoder, an embedding projector (often a dense neural network) that aligns image and text representations, and a text decoder, stacked in that order. As for the training phases, different models have followed different approaches.
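The encoder, projector and decoder stack can be illustrated with a toy NumPy sketch. Everything here is an assumption for illustration: random weights stand in for pretrained ones, the "image encoder" is a single linear map over image patches rather than a real vision transformer, the projector is a two-layer MLP with ReLU, and the text decoder itself is omitted. The code only builds the combined sequence of image and text embeddings that a decoder would consume.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only to keep the example small.
PATCH, VISION_DIM, TEXT_DIM, VOCAB = 16, 32, 64, 100

# Random stand-ins for weights that would come from pretrained checkpoints.
W_vision = rng.normal(size=(PATCH * PATCH * 3, VISION_DIM)) * 0.02
W_proj1 = rng.normal(size=(VISION_DIM, TEXT_DIM)) * 0.02
W_proj2 = rng.normal(size=(TEXT_DIM, TEXT_DIM)) * 0.02
token_embeddings = rng.normal(size=(VOCAB, TEXT_DIM)) * 0.02


def image_encoder(image: np.ndarray) -> np.ndarray:
    """Toy encoder: split an (H, W, 3) image into patches, map each to a feature."""
    h, w, c = image.shape
    patches = image.reshape(h // PATCH, PATCH, w // PATCH, PATCH, c)
    patches = patches.transpose(0, 2, 1, 3, 4).reshape(-1, PATCH * PATCH * c)
    return patches @ W_vision  # (num_patches, VISION_DIM)


def projector(features: np.ndarray) -> np.ndarray:
    """Two-layer MLP mapping image features into the text embedding space."""
    hidden = np.maximum(features @ W_proj1, 0.0)  # ReLU
    return hidden @ W_proj2  # (num_patches, TEXT_DIM)


def build_decoder_input(image: np.ndarray, token_ids: list) -> np.ndarray:
    """Prepend projected image tokens to the text token embeddings."""
    image_tokens = projector(image_encoder(image))
    text_tokens = token_embeddings[token_ids]
    return np.concatenate([image_tokens, text_tokens], axis=0)


sequence = build_decoder_input(rng.random((64, 64, 3)), [5, 17, 42])
print(sequence.shape)  # (19, 64): 16 image tokens followed by 3 text tokens
```

Once projected, image patches are just more embeddings in the decoder's input sequence, which is why the projector is the piece that "aligns" the two modalities.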

For example, LLaVA consists of a CLIP image encoder, a multimodal projector, and a Vicuna text decoder. The authors fed a dataset of images and captions to GPT-4 and generated questions related to both the caption and the image. They froze the image encoder and text decoder and trained only the multimodal projector to align image and text features, feeding the model images and generated questions and comparing its output to the ground-truth captions. After this projector pretraining, they kept the image encoder frozen, unfroze the text decoder, and trained the projector together with the decoder. This recipe of pre-training followed by fine-tuning is the most common way to train vision language models.
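The two LLaVA stages differ only in which components are updated. The sketch below records that schedule as plain data; the stage labels and the `Component` class are hypothetical. In PyTorch, freezing is usually done by calling `module.requires_grad_(False)` on the frozen parts.

```python
from dataclasses import dataclass
from typing import Dict, Set

# Components that receive gradient updates in each stage, following the
# recipe described above. The stage labels are our own.
TRAINABLE_BY_STAGE: Dict[str, Set[str]] = {
    "pretrain_projector": {"projector"},           # stage 1: align features
    "finetune": {"projector", "text_decoder"},     # stage 2: encoder stays frozen
}


@dataclass
class Component:
    name: str
    trainable: bool = True


def configure_stage(components: Dict[str, Component], stage: str) -> None:
    """Freeze or unfreeze each component for the given training stage."""
    trainable = TRAINABLE_BY_STAGE[stage]
    for name, component in components.items():
        component.trainable = name in trainable


model = {n: Component(n) for n in ("image_encoder", "projector", "text_decoder")}
configure_stage(model, "pretrain_projector")
print({n: c.trainable for n, c in model.items()})
# {'image_encoder': False, 'projector': True, 'text_decoder': False}
```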

Unlike LLaVA-style pre-training, the authors of KOSMOS-2 chose to train the model fully end-to-end, which is computationally expensive. They then performed language-only instruction fine-tuning to align the model. Fuyu-8B, as another example, does not even have an image encoder. Instead, image patches are fed directly to a projection layer, and the resulting sequence then passes through an auto-regressive decoder. In most cases, you don't need to pre-train a vision language model yourself, since you can either use an existing model or fine-tune one for your particular use case.
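Fuyu-8B's encoder-free input path can be sketched as splitting the image into patches and applying a single linear projection. The patch size, embedding width and random weights below are placeholders, not Fuyu-8B's actual configuration, and the decoder is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

PATCH = 8      # hypothetical patch size in pixels
D_MODEL = 48   # hypothetical decoder embedding size

# The only image-specific weights: one linear projection from raw pixels.
W_patch = rng.normal(size=(PATCH * PATCH * 3, D_MODEL)) * 0.02


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches, row by row."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    return grid.transpose(0, 2, 1, 3, 4).reshape(-1, patch * patch * c)


def image_to_decoder_tokens(image: np.ndarray) -> np.ndarray:
    """Project raw pixel patches straight into the decoder's embedding space."""
    return patchify(image, PATCH) @ W_patch


tokens = image_to_decoder_tokens(rng.random((32, 48, 3)))
print(tokens.shape)  # (24, 48): a 4 x 6 grid of patches, each a D_MODEL vector
```

Compared with the encoder-projector-decoder stack, this removes the separate vision network entirely and leaves all visual understanding to the decoder.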

What Are Vision Language Models?

A vision language model is a fusion of vision and natural language models. It ingests images and their accompanying text descriptions and learns to associate the information from the two modalities. The vision part of the model captures spatial features from the images, while the language part encodes information from the text.

The model maps information between the two modalities, including detected objects, the spatial layout of the image, and text embeddings. For example, it learns to associate the word "bird" in a text description with the bird in the picture.

In this way, the model learns to understand images and express them in natural language, and vice versa.

VLM Training

Building VLMs involves pre-training foundation models and zero-shot learning. Transfer learning techniques, such as knowledge distillation, can then be used to fine-tune the models for more complex downstream tasks.
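As one concrete example of knowledge distillation, the classic soft-target formulation trains a smaller student model to match a teacher's temperature-softened output distribution. This is a minimal NumPy sketch of that loss with an assumed temperature of 2.0; how a particular VLM applies distillation may differ.

```python
import numpy as np


def log_softmax(logits: np.ndarray, temperature: float) -> np.ndarray:
    """Numerically stable log-softmax of logits / temperature over the last axis."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits: np.ndarray, teacher_logits: np.ndarray,
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, averaged over the batch.

    The temperature**2 factor keeps gradient magnitudes comparable across temperatures.
    """
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    p_teacher = np.exp(log_p_teacher)
    kl = (p_teacher * (log_p_teacher - log_p_student)).sum(axis=-1)
    return float(kl.mean() * temperature ** 2)


# Toy logits for a batch of two examples over three classes.
teacher = np.array([[4.0, 1.0, 0.5], [0.2, 3.0, 0.1]])
student = np.array([[2.0, 1.5, 1.0], [0.5, 1.0, 0.8]])
print(distillation_loss(student, teacher))
```

In practice this term is usually combined with the ordinary task loss on the ground-truth labels.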

These are simpler techniques that need smaller datasets and less training time while still yielding decent results.

Modern frameworks, on the other hand, combine several techniques to get better results, such as:

- Contrastive learning.

- Masked language-image modeling.

- Encoder-decoder modules with transformers.

These architectures can learn complex relationships between the modalities and deliver state-of-the-art results. Let's take a closer look at them.
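As a first taste of contrastive learning, here is a CLIP-style symmetric contrastive (InfoNCE) loss in NumPy. It is a minimal sketch rather than any specific framework's implementation: matching image and text embeddings share a row index, every other pair in the batch acts as a negative, and the temperature of 0.07 is an assumed value.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def contrastive_loss(image_emb: np.ndarray, text_emb: np.ndarray,
                     temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss for a batch of N matching (image, text) pairs.

    Row i of image_emb and row i of text_emb describe the same example.
    """
    # L2-normalize so that dot products are cosine similarities.
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = img @ txt.T / temperature  # (N, N) similarity matrix
    idx = np.arange(len(logits))
    # Image-to-text: each row should peak at its diagonal entry.
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    # Text-to-image: each column should peak at its diagonal entry.
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((loss_i2t + loss_t2i) / 2)


rng = np.random.default_rng(0)
emb = rng.normal(size=(4, 16))
print(contrastive_loss(emb, emb))                     # matched pairs: low loss
print(contrastive_loss(emb, np.roll(emb, 1, axis=0))) # mismatched pairs: high loss
```

Minimizing this loss pulls each image toward its own description and pushes it away from the other descriptions in the batch, which is how the model learns associations such as the word "bird" and the bird in the picture.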