Sub-10 billion parameter models democratise on-device generative AI

Welcome to AI on the Edge, a new weekly content series featuring the latest on-device artificial intelligence insights from our most active subject matter experts in this dynamic, ever-expanding field.

Previous articles have shown the exorbitant costs and privacy problems of cloud-only generative AI models. On-device generative AI is essential to accelerating AI adoption and innovation.

On-device generative AI will grow along two converging vectors

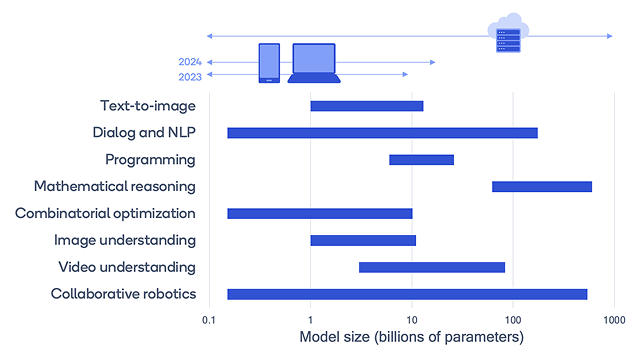

The first vector is hardware: chipsets for edge devices such as smartphones, VR headsets, and PCs keep improving in processing and memory performance. Stable Diffusion, with over 1 billion parameters, already runs on phones and PCs, and many other generative AI models with 10 billion or more parameters will soon run on devices.

The other vector is the shrinking number of parameters that generative AI models need to produce reliable outputs. Smaller models with 1 to 10 billion parameters are already accurate and continue to improve.
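To put the memory side of these two vectors in numbers: a model's weights need roughly (parameter count × bits per parameter ÷ 8) bytes. The helper below is a back-of-the-envelope sketch under stated assumptions: it counts weights only, ignoring activations, the KV cache for LLMs, and runtime overhead, and the precisions shown are illustrative rather than what any particular device or demo uses.

```python
# Back-of-the-envelope, weights-only memory estimate.
# Assumption: ignores activations, KV cache, and runtime overhead.


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, params in [("1B", 1e9), ("7B", 7e9), ("10B", 10e9)]:
        row = ", ".join(
            f"{bits}-bit: {weight_memory_gb(params, bits):.1f} GB" for bits in (16, 8, 4)
        )
        print(f"{name} -> {row}")
```

For a 7 billion-parameter model this works out to about 14 GB of weights at 16-bit precision but about 3.5 GB at 4-bit, which helps explain why both smaller models and lower-precision weights matter on memory-constrained devices.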

How useful are sub-10 billion parameter models?

How relevant are sub-10 billion parameter models when generative AI models are growing from tens of billions to hundreds of billions, and even trillions, of parameters?

Sub-10 billion parameter large language models (LLMs), automatic speech recognition (ASR) models, programming models, and large vision models (LVMs) are emerging that produce meaningful outputs for a wide range of use cases. These smaller AI models are not proprietary or exclusive to a few leading AI businesses. They are also fuelled by open-source foundation models, created and optimised by a global open-source community that is accelerating model development and modification.

Publicly available foundation LLMs such as Meta’s Llama 2 come in 7B, 13B, and 70B parameter versions. The larger, more accurate models can be used for knowledge distillation, a machine learning technique that transfers knowledge from a large model to a smaller one, or to generate high-quality training examples.
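Knowledge distillation can be sketched with the common soft-target formulation: the small "student" model is trained to match the large "teacher" model's temperature-softened output probabilities, usually blended with ordinary cross-entropy on the true label. This is a minimal pure-Python illustration on toy logits, assuming a single classification-style output; the function names, the temperature of 2.0, and the 0.5 blend weight are illustrative choices, not a description of how any model named in this article was trained.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between temperature-softened distributions.

    Scaled by T^2, a common convention that keeps this term's gradients
    roughly comparable in size across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return temperature ** 2 * kl


def combined_loss(student_logits: List[float], teacher_logits: List[float],
                  true_label: int, alpha: float = 0.5, temperature: float = 2.0) -> float:
    """Blend the soft-target (teacher) loss with cross-entropy on the true label."""
    hard = -math.log(softmax(student_logits)[true_label])
    soft = distillation_loss(student_logits, teacher_logits, temperature)
    return alpha * soft + (1 - alpha) * hard


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # toy logits from a hypothetical large "teacher" model
    student = [1.5, 1.2, 0.3]  # toy logits from a hypothetical small "student" model
    print(combined_loss(student, teacher, true_label=0))
```

The soft targets carry more information than a single correct label: they also tell the student how the teacher ranks the wrong answers, which is part of what lets a smaller model inherit a larger model's behaviour.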

Improving imitation learning and knowledge distillation, so that models need fewer parameters while preserving accuracy and reasoning ability, is a priority. Microsoft has introduced Orca, a 13 billion-parameter model that “imitates the logic and reasoning of larger models.”[1] Learning from GPT-4’s step-by-step explanations helps it outperform conventional state-of-the-art instruction-tuned models.

Open-source foundation models are often fine-tuned on specialised datasets for a particular use case, topic, or subject matter, producing domain-specific models that can outperform the original model in that domain. Low-rank adaptation (LoRA) is reducing the cost and time needed to fine-tune models.
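The core idea of low-rank adaptation can be shown in a few lines: instead of updating a full pretrained weight matrix W (d × k), LoRA keeps W frozen and trains two small matrices, B (d × r) and A (r × k), whose scaled product is added to W. The pure-Python sketch below shows only that update rule and the resulting parameter savings; the layer size of 4096 × 4096 and rank of 8 are hypothetical, and there is no training loop here.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (m x n) and an (n x p) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merged_weight(w: Matrix, a: Matrix, b: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * B @ A.

    W (d x k) is the frozen pretrained weight; only B (d x r) and A (r x k)
    would be trained during fine-tuning.
    """
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def lora_param_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """Trainable parameters: full fine-tuning of a d x k matrix vs. LoRA at rank r."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    full, lora = lora_param_counts(4096, 4096, 8)  # hypothetical layer size and rank
    print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For that hypothetical layer, LoRA trains 65,536 parameters instead of 16,777,216 (about 0.39%), which is where the savings in fine-tuning cost and time come from. Because B is commonly initialised to zeros, the adapted model starts out identical to the original.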

Which devices can run useful models under 10 billion parameters?

Edge devices such as smartphones, tablets, and PCs have an installed base of billions of units and are regularly upgraded with better-performing hardware. While many of these devices already use AI, devices capable of running sub-10 billion parameter generative AI models only reached the market in the past year. Even in that short time, millions of devices that can run these models are already in use.

As noted, devices powered by the Snapdragon 8 Gen 2 processor can run a 1 billion-parameter version of Stable Diffusion, and Snapdragon-powered Samsung Galaxy S23 smartphones can run LLMs with 7 billion parameters. The number of such devices will grow as natural replacement cycles refresh the installed base.

With the growing availability of open-source foundation models, rapid innovation in the AI research and open-source communities, and falling costs to fine-tune generative AI models, we are seeing a wave of small yet accurate models that cover a wide range of use cases and are customised for specific domains. The possibilities for on-device generative AI are vast.

Fine-tuned models can be smaller than foundation models yet still produce valuable, actionable results because of their narrower focus.

Other techniques can also customise and shrink models while preserving accuracy. Future AI on the Edge posts will cover these techniques, along with knowledge distillation, LoRA, and fine-tuning.

What can these sub-10 billion parameter models do?

Below are several sub-10 billion parameter models and their use cases:

1. Meta’s Llama 2 family of publicly available foundation LLMs includes a 7 billion-parameter model that underpins several LLMs for general knowledge questions, programming, and content summarisation. Llama-2-Chat has been fine-tuned for chat use cases based on “publicly available instruction datasets and over 1 million human annotations,” and evaluated on reasoning, coding, proficiency, and knowledge benchmarks.

2. Code Llama, built on Llama 2, is a state-of-the-art LLM that generates code and natural language about code.

3. Gecko, a version of Google’s proprietary PaLM 2 foundation LLM, has fewer than 2 billion parameters. It can summarise text and help users write emails and messages. PaLM 2 was trained on multilingual text in over 100 languages; on scientific papers and mathematical expressions, to improve reasoning; and on source code datasets, to improve coding.

4. Stability AI’s open-source Stable Diffusion (version 1.5), a 1 billion-parameter LVM, is optimised for generating images from short text prompts.

   Qualcomm Technologies demonstrated Stable Diffusion at Mobile World Congress 2023 running on a Snapdragon 8 Gen 2 smartphone in aeroplane mode, using quantization to reduce its memory footprint. ControlNet, a 1.5 billion-parameter open-source image-to-image generative AI model, builds on Stable Diffusion and enables more precise image generation by conditioning on both an input image and a text description. We demonstrated ControlNet on a smartphone at CVPR 2023.

5. OpenAI’s open-source ASR model Whisper, whose largest version has about 1.6 billion parameters, can transcribe speech in multiple languages and translate it into English.

6. DoctorGPT is an open-source 7 billion-parameter LLM fine-tuned from Llama 2 on a medical dialogue dataset to act as a medical assistant and pass the US Medical Licensing Exam.

   Fine-tuning foundation models for specific domains is powerful and disruptive; this is just one example of numerous chatbots built on foundation models.

7. Baidu’s Ernie Bot Turbo LLM comes in 3 billion, 7 billion, and 10 billion-parameter versions. It was trained on massive Chinese-language datasets to give Chinese-speaking customers “stronger dialogue question and answer, content creation and generation capabilities, and faster response speed”.

   Chinese device manufacturers use it as an alternative to ChatGPT and other LLMs trained primarily on English datasets.

LLMs, LVMs, and other generative AI models are also used for ASR, real-time translation, and text-to-speech.


Many more sub-10B parameter generative AI models exist, including BLOOM (1.5B), ChatGLM (6B), and GPT-J (6B). The list is growing and changing so quickly that it is hard to keep up with the pace of generative AI progress.
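Quantization, which the Stable Diffusion demo at Mobile World Congress 2023 used to reduce its memory footprint, stores weights at lower precision. The article does not say which scheme that demo used, so the sketch below assumes a generic symmetric, per-tensor int8 scheme purely for illustration: every weight is divided by one shared scale and rounded to an integer in [-127, 127]. Production toolchains often use finer-grained (for example per-channel) scales and calibration data; the function names here are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization.

    Maps the range [-max|w|, +max|w|] linearly onto the integers [-127, 127]
    using a single floating-point scale for the whole tensor.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27, 0.033]  # toy weights
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, scale, worst)
```

Each int8 code takes 1 byte instead of 2 bytes for a 16-bit float or 4 bytes for a 32-bit float, and the rounding error per weight is at most half the scale, which is the accuracy-for-memory trade that quantization makes.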

